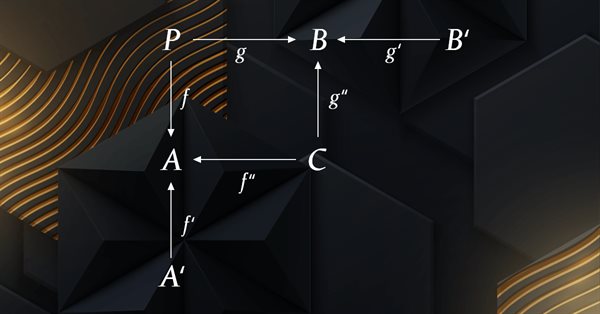

Category Theory in MQL5 (Part 4): Spans, Experiments, and Compositions

Category Theory is a diverse and expanding branch of Mathematics that is as yet relatively unexplored in the MQL5 community. This series of articles looks to introduce and examine some of its concepts, with the overall goal of establishing an open library that provides insight while hopefully furthering the use of this remarkable field in traders' strategy development.

Build Self Optimizing Expert Advisors in MQL5 (Part 7): Trading With Multiple Periods At Once

In this series of articles, we have considered several ways of identifying the best period to use with our technical indicators. Today, we demonstrate the opposite approach: instead of picking the single best period, we show how to employ all available periods effectively. This approach reduces the amount of data discarded and offers alternative use cases for machine learning algorithms beyond ordinary price prediction.

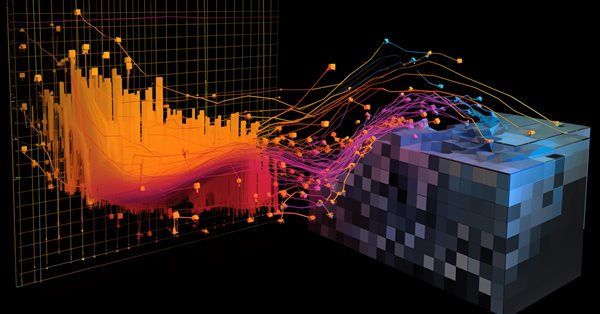

Data Science and ML (Part 46): Stock Markets Forecasting Using N-BEATS in Python

N-BEATS is a revolutionary deep learning model designed for time series forecasting. It was released to surpass classical models for time series forecasting such as ARIMA, PROPHET, VAR, etc. In this article, we are going to discuss this model and use it in predicting the stock market.

Data label for time series mining (Part 4): Interpretability Decomposition Using Label Data

This series of articles introduces several time series labeling methods that can create data suited to most artificial intelligence models. Labeling data according to specific needs can bring the trained model more in line with the expected design, improve its accuracy, and even help it make a qualitative leap!

Neural networks made easy (Part 40): Using Go-Explore on large amounts of data

This article discusses the use of the Go-Explore algorithm over a long training period, since the random action selection strategy may not lead to a profitable pass as training time increases.

Population optimization algorithms: Mind Evolutionary Computation (MEC) algorithm

The article considers the algorithm of the MEC family called the simple mind evolutionary computation algorithm (Simple MEC, SMEC). The algorithm is distinguished by the beauty of its idea and ease of implementation.

Reimagining Classic Strategies (Part X): Can AI Power The MACD?

Join us as we empirically analyze the MACD indicator to test whether applying AI to a strategy that includes the indicator yields any improvement in our accuracy when forecasting the EURUSD. We also assess whether the indicator itself is easier to predict than price, and whether the indicator's value is predictive of future price levels. We will furnish you with the information you need to decide whether you should consider investing your time in integrating the MACD into your AI trading strategies.

Neural networks made easy (Part 57): Stochastic Marginal Actor-Critic (SMAC)

Here I will consider the fairly new Stochastic Marginal Actor-Critic (SMAC) algorithm, which allows building latent variable policies within the framework of entropy maximization.

Build a Remote Forex Risk Management System in Python

We are building a professional remote risk manager for Forex in Python and deploying it on a server step by step. Over the course of the article, we will see how to manage Forex risks programmatically and how to stop wasting a Forex deposit.

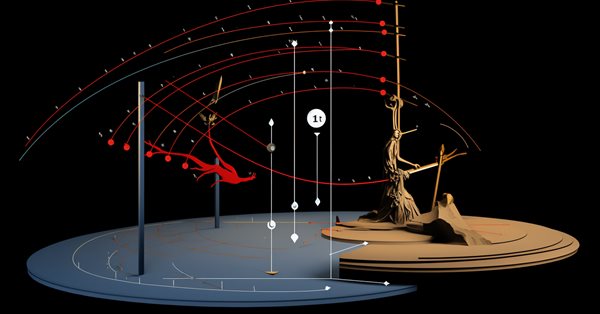

Neural Networks in Trading: A Complex Trajectory Prediction Method (Traj-LLM)

In this article, I would like to introduce you to an interesting trajectory prediction method developed to solve problems in the field of autonomous vehicle movements. The authors of the method combined the best elements of various architectural solutions.

Integrate Your Own LLM into EA (Part 5): Develop and Test Trading Strategy with LLMs (II)-LoRA-Tuning

With the rapid development of artificial intelligence today, large language models (LLMs) have become an important part of the field, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles takes a step-by-step approach to achieving this goal.

Example of CNA (Causality Network Analysis), SMOC (Stochastic Model Optimal Control) and Nash Game Theory with Deep Learning

We will add Deep Learning to the three examples published in previous articles and compare the results with the earlier ones. The aim is to learn how to add DL to other EAs.

Gain An Edge Over Any Market (Part IV): CBOE Euro And Gold Volatility Indexes

We will analyze alternative data curated by the Chicago Board Options Exchange (CBOE) to improve the accuracy of our deep neural networks when forecasting the XAUEUR symbol.

MQL5 Wizard Techniques you should know (Part 28): GANs Revisited with a Primer on Learning Rates

The Learning Rate is a step size towards a training target in many machine learning algorithms’ training processes. We examine the impact its many schedules and formats can have on the performance of a Generative Adversarial Network, a type of neural network that we examined in an earlier article.

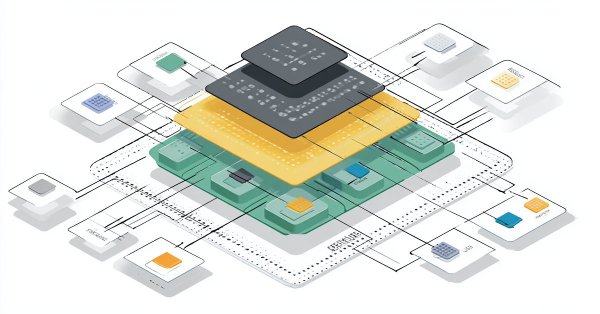

MetaTrader 5 Machine Learning Blueprint (Part 6): Engineering a Production-Grade Caching System

Tired of watching progress bars instead of testing trading strategies? Traditional caching fails financial ML, leaving you with lost computations and frustrating restarts. We've engineered a sophisticated caching architecture that understands the unique challenges of financial data—temporal dependencies, complex data structures, and the constant threat of look-ahead bias. Our three-layer system delivers dramatic speed improvements while automatically invalidating stale results and preventing costly data leaks. Stop waiting for computations and start iterating at the pace the markets demand.

Propensity score in causal inference

The article examines the topic of matching in causal inference. Matching is used to compare similar observations in a data set. This is necessary to correctly determine causal effects and get rid of bias. The author explains how this helps in building trading systems based on machine learning, which become more stable on new data they were not trained on. The propensity score plays a central role and is widely used in causal inference.

Neural Networks in Trading: Controlled Segmentation

In this article, we will discuss a method of complex multimodal interaction analysis and feature understanding.

Neural Networks in Trading: Directional Diffusion Models (DDM)

In this article, we discuss Directional Diffusion Models that exploit data-dependent anisotropic and directed noise in a forward diffusion process to capture meaningful graph representations.

Evolutionary trading algorithm with reinforcement learning and extinction of feeble individuals (ETARE)

In this article, I introduce an innovative trading algorithm that combines evolutionary algorithms with deep reinforcement learning for Forex trading. The algorithm uses the mechanism of extinction of inefficient individuals to optimize the trading strategy.

Category Theory in MQL5 (Part 23): A different look at the Double Exponential Moving Average

In this article, we continue the theme of our last article: viewing everyday trading indicators in a ‘new’ light. This piece covers the horizontal composition of natural transformations, and the indicator best suited to this, building on what we just covered, is the double exponential moving average (DEMA).

Neural networks made easy (Part 72): Trajectory prediction in noisy environments

The quality of future state predictions plays an important role in the Goal-Conditioned Predictive Coding method, which we discussed in the previous article. In this article I want to introduce you to an algorithm that can significantly improve the prediction quality in stochastic environments, such as financial markets.

Data Science and ML (Part 43): Hidden Patterns Detection in Indicators Data Using Latent Gaussian Mixture Models (LGMM)

Have you ever looked at the chart and felt that strange sensation… that there’s a pattern hidden just beneath the surface? A secret code that might reveal where prices are headed if only you could crack it? Meet LGMM, the Market’s Hidden Pattern Detector: a machine learning model that helps identify those hidden patterns in the market.

Neural Network in Practice: Pseudoinverse (I)

Today we will begin to consider how to implement the calculation of the pseudo-inverse in pure MQL5. The code we are going to look at will be much more complex for beginners than I expected, and I'm still figuring out how to explain it in a simple way. So for now, consider this an opportunity to study some unusual code, calmly and attentively. Although it is not aimed at efficient or quick application, its goal is to be as didactic as possible.

Implementing Practical Modules from Other Languages in MQL5 (Part 03): Schedule Module from Python, the OnTimer Event on Steroids

The schedule module in Python offers a simple way to schedule repeated tasks. While MQL5 lacks a built-in equivalent, in this article we’ll implement a similar library to make it easier to set up timed events in MetaTrader 5.

Self Optimizing Expert Advisors in MQL5 (Part 8): Multiple Strategy Analysis (2)

Join us for our follow-up discussion, where we will merge our first two trading strategies into an ensemble trading strategy. We shall demonstrate the different schemes possible for combining multiple strategies and also how to exercise control over the parameter space, to ensure that effective optimization remains possible even as our parameter size grows.

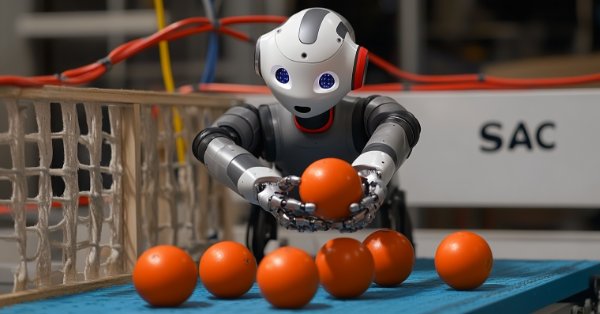

MQL5 Wizard Techniques you should know (Part 51): Reinforcement Learning with SAC

Soft Actor Critic is a Reinforcement Learning algorithm that utilizes 3 neural networks: an actor network and 2 critic networks. These machine learning models are paired in a master-slave partnership in which the critics are modelled to improve the forecast accuracy of the actor network. While also introducing ONNX in this series, we explore how these ideas could be put to the test as a custom signal of a wizard-assembled Expert Advisor.

Neural networks made easy (Part 62): Using Decision Transformer in hierarchical models

In recent articles, we have seen several options for using the Decision Transformer method. The method allows analyzing not only the current state, but also the trajectory of previous states and actions performed in them. In this article, we will focus on using this method in hierarchical models.

Artificial Bee Hive Algorithm (ABHA): Tests and results

In this article, we will continue exploring the Artificial Bee Hive Algorithm (ABHA) by diving into the code and considering the remaining methods. As you might remember, each bee in the model is represented as an individual agent whose behavior depends on internal and external information, as well as motivational state. We will test the algorithm on various functions and summarize the results by presenting them in the rating table.

Neural Network in Practice: Straight Line Function

In this article, we will take a quick look at some methods to get a function that can represent our data in the database. I will not go into detail about how to use statistics and probability studies to interpret the results. Let's leave that for those who really want to delve into the mathematical side of the matter. Exploring these questions will be critical to understanding what is involved in studying neural networks. Here we will consider this issue quite calmly.

MQL5 Wizard Techniques you should know (Part 72): Using Patterns of MACD and the OBV with Supervised Learning

We follow up on our last article, where we introduced the indicator pair of the MACD and the OBV, by looking at how this pairing could be enhanced with Machine Learning. MACD and OBV are a complementary trend and volume pairing. Our machine learning approach uses a convolutional neural network that engages the Exponential kernel in sizing its kernels and channels when fine-tuning the forecasts of this indicator pairing. As always, this is done in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Neural networks made easy (Part 65): Distance Weighted Supervised Learning (DWSL)

In this article, we will get acquainted with an interesting algorithm that is built at the intersection of supervised and reinforcement learning methods.

Neural networks made easy (Part 64): ConserWeightive Behavioral Cloning (CWBC) method

As a result of tests performed in previous articles, we came to the conclusion that the optimality of the trained strategy largely depends on the training set used. In this article, we will get acquainted with a fairly simple yet effective method for selecting trajectories to train models.

Neural Networks in Trading: Spatio-Temporal Neural Network (STNN)

In this article we will talk about using space-time transformations to effectively predict upcoming price movement. To improve the numerical prediction accuracy in STNN, a continuous attention mechanism is proposed that allows the model to better consider important aspects of the data.

Overcoming The Limitation of Machine Learning (Part 6): Effective Memory Cross Validation

In this discussion, we contrast the classical approach to time series cross-validation with modern alternatives that challenge its core assumptions. We expose key blind spots in the traditional method—especially its failure to account for evolving market conditions. To address these gaps, we introduce Effective Memory Cross-Validation (EMCV), a domain-aware approach that questions the long-held belief that more historical data always improves performance.

Population optimization algorithms: Simulated Annealing (SA) algorithm. Part I

The Simulated Annealing algorithm is a metaheuristic inspired by the metal annealing process. In the article, we will conduct a thorough analysis of the algorithm and debunk a number of common beliefs and myths surrounding this widely known optimization method. The second part of the article will consider the custom Simulated Isotropic Annealing (SIA) algorithm.

Neural networks made easy (Part 52): Research with optimism and distribution correction

As the model is trained on the experience replay buffer, the current Actor policy moves further and further away from the stored examples, which reduces the efficiency of training the model as a whole. In this article, we will look at an algorithm for improving the efficiency of sample use in reinforcement learning.

Population optimization algorithms: Changing shape, shifting probability distributions and testing on Smart Cephalopod (SC)

The article examines the impact of changing the shape of probability distributions on the performance of optimization algorithms. We will conduct experiments using the Smart Cephalopod (SC) test algorithm to evaluate the efficiency of various probability distributions in the context of optimization problems.

MQL5 Wizard Techniques you should know (Part 11): Number Walls

Number Walls are a variant of Linear Feedback Shift Registers that prescreen sequences for predictability by checking for convergence. We look at how these ideas could be of use in MQL5.

MQL5 Wizard Techniques you should know (Part 22): Conditional GANs

Generative Adversarial Networks are a pairing of Neural Networks that train against each other for more accurate results. We adopt the conditional type of these networks as we look at possible applications in forecasting Financial time series within an Expert Signal Class.

Codex Pipelines, from Python to MQL5, for Indicator Selection: A Multi-Quarter Analysis of the XLF ETF with Machine Learning

We continue our look at how the selection of indicators can be pipelined when facing a ‘non-typical’ MetaTrader asset. MetaTrader 5 is primarily used to trade forex, and that is good given the liquidity on offer; however, the case for trading outside of this ‘comfort-zone’ is growing stronger, driven not just by the overnight rise of platforms like Robinhood but also by most traders’ relentless pursuit of an edge. We consider the XLF ETF for this article and also cap our revamped pipeline with a simple MLP.