ARIMA Forecasting Indicator in MQL5

In this article, we implement an ARIMA forecasting indicator in MQL5. We examine how the ARIMA model generates forecasts and how applicable it is to the Forex market and the stock market in general. We also explain what autoregression (AR) is, how autoregressive models are used for forecasting, and how the autoregression mechanism works.
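To make the autoregression idea concrete, here is a minimal sketch of the simplest case, an AR(1) model, in which each value is a constant plus a fraction of the previous value. This is not the article's MQL5 indicator: the function names `fit_ar1` and `forecast_ar1` and the noise-free toy series are assumptions made for illustration only.

```python
# Minimal AR(1) sketch: x[t] = c + phi * x[t-1] + noise.
# fit_ar1 and forecast_ar1 are hypothetical helper names, not the article's code.

def fit_ar1(series):
    """Estimate intercept c and coefficient phi by ordinary least squares."""
    x = series[:-1]          # lagged values x[t-1]
    y = series[1:]           # current values x[t]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    phi = cov / var
    c = mean_y - phi * mean_x
    return c, phi


def forecast_ar1(last_value, c, phi, steps):
    """Iterate the fitted recursion forward, feeding each forecast back in."""
    forecasts = []
    value = last_value
    for _ in range(steps):
        value = c + phi * value
        forecasts.append(value)
    return forecasts


# Noise-free toy series generated with c = 0.5 and phi = 0.8 (assumed values).
series = [1.0]
for _ in range(20):
    series.append(0.5 + 0.8 * series[-1])

c, phi = fit_ar1(series)
forecasts = forecast_ar1(series[-1], c, phi, steps=3)
```

A full ARIMA model adds differencing (the "I") and moving-average terms (the "MA") on top of this recursion; the sketch covers only the AR part.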

Quantitative Analysis of Trends: Collecting Statistics in Python

What is quantitative trend analysis in the Forex market? We collect statistics on trends, their magnitude and distribution across the EURUSD currency pair, and look at how quantitative trend analysis can help you create a profitable trading expert advisor.

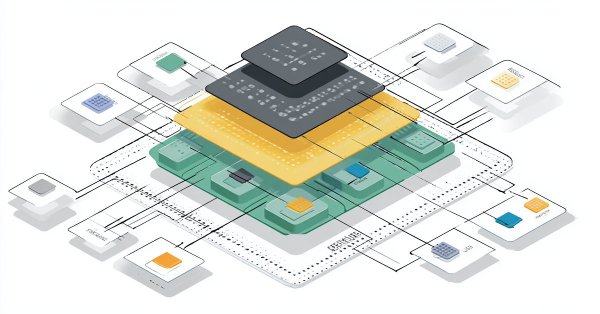

Integrating MQL5 with Data Processing Packages (Part 7): Building Multi-Agent Environments for Cross-Symbol Collaboration

The article presents a complete Python–MQL5 integration for multi‑agent trading: MT5 data ingestion, indicator computation, per‑agent decisions, and a weighted consensus that outputs a single action. Signals are stored to JSON, served by Flask, and consumed by an MQL5 Expert Advisor for execution with position sizing and ATR‑derived SL/TP. Flask routes provide safe lifecycle control and status monitoring.
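As a rough illustration of how a weighted consensus can reduce several per-agent decisions to a single action, consider the sketch below. It is not the article's implementation: the function name `weighted_consensus`, the vote encoding (+1 buy, 0 hold, -1 sell), the example weights, and the 0.2 threshold are all assumptions.

```python
# Hypothetical weighted-consensus sketch; names, weights and threshold are assumed.

def weighted_consensus(votes, weights, threshold=0.2):
    """Combine per-agent votes (+1 buy, 0 hold, -1 sell) into one action.

    votes:   dict mapping agent name -> vote
    weights: dict mapping agent name -> non-negative weight
    """
    total_weight = sum(weights[agent] for agent in votes)
    if total_weight == 0:
        return "HOLD"
    # Weighted average of the votes, always within [-1, 1].
    score = sum(weights[agent] * votes[agent] for agent in votes) / total_weight
    if score > threshold:
        return "BUY"
    if score < -threshold:
        return "SELL"
    return "HOLD"


votes = {"EURUSD": 1, "GBPUSD": 1, "USDJPY": -1}
weights = {"EURUSD": 0.5, "GBPUSD": 0.3, "USDJPY": 0.2}
action = weighted_consensus(votes, weights)
```

The dead zone around zero is one possible design choice: it keeps evenly split agents from triggering a trade.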

Algorithmic Trading Strategies: AI and Its Road to Golden Pinnacles

This article demonstrates an approach to creating trading strategies for gold using machine learning. Considering the proposed approach to the analysis and forecasting of time series from different angles, it is possible to determine its advantages and disadvantages in comparison with other ways of creating trading systems which are based solely on the analysis and forecasting of financial time series.

Angular Analysis of Price Movements: A Hybrid Model for Predicting Financial Markets

What is angular analysis of financial markets? How can price action angles and machine learning be used to make forecasts with 67% accuracy? How can a regression and classification model be combined with angular features to obtain a working algorithm? What does Gann have to do with it? Why are price movement angles a good indicator for machine learning?

Analyzing Overbought and Oversold Trends Via Chaos Theory Approaches

We determine the overbought and oversold condition of the market according to chaos theory: integrating the principles of chaos theory, fractal geometry and neural networks to forecast financial markets. The study demonstrates the use of the Lyapunov exponent as a measure of market randomness and the dynamic adaptation of trading signals. The methodology includes an algorithm for generating fractal noise, hyperbolic tangent activation, and moment optimization.

Integrating Computer Vision into Trading in MQL5 (Part 1): Creating Basic Functions

This article presents a system for forecasting EURUSD using computer vision and deep learning. Learn how convolutional neural networks can recognize complex price patterns in the foreign exchange market and predict exchange rate movements with up to 54% accuracy. The article shares the methodology for creating an algorithm that uses artificial intelligence technologies for visual analysis of charts instead of traditional technical indicators. The author demonstrates the process of transforming price data into "images", their processing by a neural network, and a unique opportunity to peer into the "consciousness" of AI through activation maps and attention heatmaps. Practical Python code using the MetaTrader 5 library allows readers to reproduce the system and apply it in their own trading.

Exploring Machine Learning in Unidirectional Trend Trading Using Gold as a Case Study

This article discusses an approach to trading only in a chosen direction (buy or sell). For this purpose, causal inference techniques and machine learning are used.

Employing Game Theory Approaches in Trading Algorithms

We create an adaptive self-learning trading expert advisor based on DQN machine learning, with multidimensional causal inference. The EA will trade on 7 currency pairs simultaneously, and the agents of different pairs will exchange information with each other.

Swap Arbitrage in Forex: Building a Synthetic Portfolio and Generating a Consistent Swap Flow

Do you want to know how to benefit from the difference in interest rates? This article considers how to use swap arbitrage in Forex to earn stable profit every night, creating a portfolio that is resistant to market fluctuations.

Neuroboids Optimization Algorithm 2 (NOA2)

The new proprietary optimization algorithm NOA2 (Neuroboids Optimization Algorithm 2) combines the principles of swarm intelligence with neural control. It pairs the mechanics of a neuroboid swarm with an adaptive neural system that allows agents to self-correct their behavior while searching for the optimum. The algorithm is under active development and demonstrates potential for solving complex optimization problems.

Neural Networks in Trading: Hybrid Graph Sequence Models (Final Part)

We continue exploring hybrid graph sequence models (GSM++), which integrate the advantages of different architectures, providing high analysis accuracy and efficient distribution of computing resources. These models effectively identify hidden patterns, reducing the impact of market noise and improving forecasting quality.

Data Science and ML (Part 48): Are Transformers a Big Deal for Trading?

From ChatGPT to Gemini and the many other AI tools for text, image, and video generation, transformers have rocked the AI world. But are they applicable in the financial (trading) space? Let's find out.

Developing Trend Trading Strategies Using Machine Learning

This study introduces a novel methodology for the development of trend-following trading strategies. This section describes the process of annotating training data and using it to train classifiers. This process yields fully operational trading systems designed to run on MetaTrader 5.

Neural Networks in Trading: Hybrid Graph Sequence Models (GSM++)

Hybrid graph sequence models (GSM++) combine the advantages of different architectures to provide high-fidelity data analysis and optimized computational costs. These models adapt effectively to dynamic market data, improving the presentation and processing of financial information.

Central Force Optimization (CFO) algorithm

The article presents the Central Force Optimization (CFO) algorithm inspired by the laws of gravity. It explores how principles of physical attraction can solve optimization problems where "heavier" solutions attract less successful counterparts.

Build a Remote Forex Risk Management System in Python

We build a professional remote risk manager for Forex in Python and deploy it on a server step by step. Along the way, we will learn how to manage Forex risks programmatically and how to stop wasting a Forex deposit.

Neural Networks in Trading: Two-Dimensional Connection Space Models (Final Part)

We continue to explore the innovative Chimera framework – a two-dimensional state-space model that uses neural network technologies to analyze multidimensional time series. This method provides high forecasting accuracy with low computational cost.

Forex arbitrage trading: Analyzing synthetic currencies movements and their mean reversion

In this article, we will examine the movements of synthetic currencies using Python and MQL5 and explore how feasible Forex arbitrage is today. We will also consider ready-made Python code for analyzing synthetic currencies and share more details on what synthetic currencies are in Forex.

Fibonacci in Forex (Part I): Examining the Price-Time Relationship

How does the market observe Fibonacci-based relationships? This sequence, where each subsequent number is equal to the sum of the two previous ones (1, 1, 2, 3, 5, 8, 13, 21...), describes more than the growth of a rabbit population. We will consider the Pythagorean hypothesis that everything in the world is subject to certain relationships of numbers...
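The rule "each number is the sum of the two previous ones" can be sketched in a few lines. The helper name `fibonacci` is an assumption for illustration. The ratios of consecutive terms approach the golden ratio (about 1.618), a standard mathematical property of the sequence rather than a claim taken from the article.

```python
# Sketch of the Fibonacci rule; `fibonacci` is a hypothetical helper name.

def fibonacci(n):
    """Return the first n Fibonacci numbers, starting 1, 1."""
    sequence = []
    a, b = 1, 1
    for _ in range(n):
        sequence.append(a)
        a, b = b, a + b   # next term is the sum of the two previous ones
    return sequence


fib = fibonacci(8)
# Ratios of consecutive terms: 1.0, 2.0, 1.5, 1.666..., 1.6, 1.625, ...
ratios = [fib[i + 1] / fib[i] for i in range(len(fib) - 1)]
golden_ratio = (1 + 5 ** 0.5) / 2
```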

Neural Networks in Trading: Two-Dimensional Connection Space Models (Chimera)

In this article, we will explore the innovative Chimera framework: a two-dimensional state-space model that uses neural networks to analyze multivariate time series. This method offers high accuracy with low computational cost, outperforming traditional approaches and Transformer architectures.

Neuroboids Optimization Algorithm (NOA)

A new bioinspired optimization metaheuristic, NOA (Neuroboids Optimization Algorithm), combines the principles of collective intelligence and neural networks. Unlike conventional methods, the algorithm uses a population of self-learning "neuroboids", each with its own neural network that adapts its search strategy in real time. The article reveals the architecture of the algorithm, the mechanisms of self-learning of agents, and the prospects for applying this hybrid approach to complex optimization problems.

Building Volatility models in MQL5 (Part I): The Initial Implementation

In this article, we present an MQL5 library for modeling volatility, designed to function similarly to Python's arch package. The library currently supports the specification of common conditional mean (HAR, AR, Constant Mean, Zero Mean) and conditional volatility (Constant Variance, ARCH, GARCH) models.
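For readers unfamiliar with conditional volatility models, the sketch below shows the standard GARCH(1,1) variance recursion, where each period's variance depends on the previous squared residual and the previous variance. It reflects neither the MQL5 library's interface nor the API of Python's arch package: the function name `garch11_variance`, the parameter values, and the choice to start from the unconditional variance are assumptions.

```python
# GARCH(1,1) recursion sketch:
#   sigma2[t] = omega + alpha * eps[t-1]**2 + beta * sigma2[t-1]
# garch11_variance is a hypothetical name; the parameters below are assumed.

def garch11_variance(residuals, omega, alpha, beta):
    """Return one conditional variance per residual."""
    if alpha + beta >= 1.0:
        raise ValueError("alpha + beta must be below 1 for a finite long-run variance")
    # Assumed initialization: the unconditional (long-run) variance.
    sigma2 = [omega / (1.0 - alpha - beta)]
    for eps in residuals[:-1]:
        sigma2.append(omega + alpha * eps ** 2 + beta * sigma2[-1])
    return sigma2


# A single large shock (3.0) raises the next period's variance.
residuals = [0.0, 3.0, 0.0]
variances = garch11_variance(residuals, omega=0.1, alpha=0.1, beta=0.8)
```

Setting `beta` to zero reduces the recursion to ARCH(1), and setting both `alpha` and `beta` to zero gives a constant variance, which mirrors the model families listed above.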

Successful Restaurateur Algorithm (SRA)

Successful Restaurateur Algorithm (SRA) is an innovative optimization method inspired by restaurant business management principles. Unlike traditional approaches, SRA does not discard weak solutions, but improves them by combining with elements of successful ones. The algorithm shows competitive results and offers a fresh perspective on balancing exploration and exploitation in optimization problems.

Neural Networks in Trading: Multi-Task Learning Based on the ResNeXt Model (Final Part)

We continue exploring a multi-task learning framework based on ResNeXt, which is characterized by modularity, high computational efficiency, and the ability to identify stable patterns in data. Using a single encoder and specialized "heads" reduces the risk of model overfitting and improves the quality of forecasts.

Data Science and ML (Part 47): Forecasting the Market Using the DeepAR model in Python

In this article, we will attempt to predict the market with DeepAR, a capable time series forecasting model. DeepAR combines deep neural networks with the autoregressive properties found in models like ARIMA and Vector Autoregression (VAR).

Creating a mean-reversion strategy based on machine learning

This article proposes another original approach to creating trading systems based on machine learning, using clustering and trade labeling for mean reversion strategies.

Billiards Optimization Algorithm (BOA)

The BOA method is inspired by the classic game of billiards and simulates the search for optimal solutions as a game with balls trying to fall into pockets representing the best results. In this article, we will consider the basics of BOA, its mathematical model, and its efficiency in solving various optimization problems.

Reimagining Classic Strategies (Part 20): Modern Stochastic Oscillators

This article demonstrates how the stochastic oscillator, a classical technical indicator, can be repurposed beyond its conventional use as a mean-reversion tool. By viewing the indicator through a different analytical lens, we show how familiar strategies can yield new value and support alternative trading rules, including trend-following interpretations. Ultimately, the article highlights how every technical indicator in the MetaTrader 5 terminal holds untapped potential, and how thoughtful trial and error can uncover meaningful interpretations hidden from view.

Pure implementation of RSA encryption in MQL5

MQL5 lacks built-in asymmetric cryptography, making secure data exchange over insecure channels like HTTP difficult. This article presents a pure MQL5 implementation of RSA using PKCS#1 v1.5 padding, enabling safe transmission of AES session keys and small data blocks without external libraries. This approach provides HTTPS-like security over standard HTTP and fills an important gap in secure communication for MQL5 applications.

Codex Pipelines, from Python to MQL5, for Indicator Selection: A Multi-Quarter Analysis of the XLF ETF with Machine Learning

We continue our look at how the selection of indicators can be pipelined when facing a ‘non-typical’ MetaTrader asset. MetaTrader 5 is primarily used to trade forex, which is sensible given the liquidity on offer. However, the case for trading outside this ‘comfort zone’ is growing stronger, driven not just by the rapid rise of platforms like Robinhood but also by most traders' relentless pursuit of an edge. We consider the XLF ETF for this article and cap our revamped pipeline with a simple MLP.

Overcoming The Limitation of Machine Learning (Part 9): Correlation-Based Feature Learning in Self-Supervised Finance

Self-supervised learning is a powerful paradigm of statistical learning that searches for supervisory signals generated from the observations themselves. This approach reframes challenging unsupervised learning problems into more familiar supervised ones. The technology has applications that our community of algorithmic traders has largely overlooked. Our discussion, therefore, aims to give the reader an approachable bridge into the open research area of self-supervised learning and offers practical applications that provide robust and reliable statistical models of financial markets without overfitting to small datasets.

Chaos Game Optimization (CGO)

The article presents a new metaheuristic algorithm, Chaos Game Optimization (CGO), which demonstrates a unique ability to maintain high efficiency when dealing with high-dimensional problems. Unlike most optimization algorithms, CGO not only keeps its performance as a problem scales up but sometimes even improves it, which is its key feature.

Neural Networks in Trading: Multi-Task Learning Based on the ResNeXt Model

A multi-task learning framework based on ResNeXt optimizes the analysis of financial data, taking into account its high dimensionality, nonlinearity, and time dependencies. The use of group convolution and specialized heads allows the model to effectively extract key features from the input data.

MetaTrader 5 Machine Learning Blueprint (Part 6): Engineering a Production-Grade Caching System

Tired of watching progress bars instead of testing trading strategies? Traditional caching fails financial ML, leaving you with lost computations and frustrating restarts. We've engineered a sophisticated caching architecture that understands the unique challenges of financial data—temporal dependencies, complex data structures, and the constant threat of look-ahead bias. Our three-layer system delivers dramatic speed improvements while automatically invalidating stale results and preventing costly data leaks. Stop waiting for computations and start iterating at the pace the markets demand.

Neural Networks in Trading: Hierarchical Dual-Tower Transformer (Final Part)

We continue to build the Hidformer hierarchical dual-tower transformer model designed for analyzing and forecasting complex multivariate time series. In this article, we will bring the work we started earlier to its logical conclusion — we will test the model on real historical data.

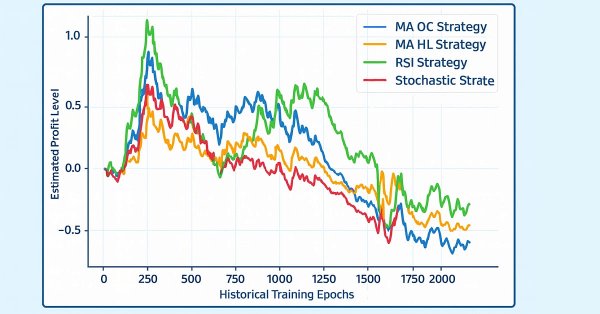

Overcoming The Limitation of Machine Learning (Part 8): Nonparametric Strategy Selection

This article shows how to configure a black-box model to automatically uncover strong trading strategies using a data-driven approach. By using Mutual Information to prioritize the most learnable signals, we can build smarter and more adaptive models that outperform conventional methods. Readers will also learn to avoid common pitfalls like overreliance on surface-level metrics, and instead develop strategies rooted in meaningful statistical insight.

Overcoming The Limitation of Machine Learning (Part 7): Automatic Strategy Selection

This article demonstrates how to automatically identify potentially profitable trading strategies using MetaTrader 5. White-box solutions, powered by unsupervised matrix factorization, are faster to configure, more interpretable, and provide clear guidance on which strategies to retain. Black-box solutions, while more time-consuming, are better suited for complex market conditions that white-box approaches may not capture. Join us as we discuss how these solutions can help us carefully identify profitable strategies under any circumstance.

Neural Networks in Trading: Hierarchical Dual-Tower Transformer (Hidformer)

We invite you to get acquainted with the Hierarchical Dual-Tower Transformer (Hidformer) framework, which was developed for time series forecasting and data analysis. The framework's authors proposed several improvements to the Transformer architecture, which resulted in increased forecast accuracy and reduced computational resource consumption.

Market Simulation (Part 06): Transferring Information from MetaTrader 5 to Excel

Many people, especially non-programmers, find it very difficult to transfer information between MetaTrader 5 and other programs. One such program is Excel. Many use Excel as a way to manage and maintain their risk control. It is an excellent program and easy to learn, even for those who are not VBA programmers. Here we will look at a very simple method for establishing a connection between MetaTrader 5 and Excel.