Population optimization algorithms: Intelligent Water Drops (IWD) algorithm

Contents

1. Introduction

2. Algorithm

3. Modified version of SDSm

4. Test results

1. Introduction

The riverbed is one of nature's most graceful creations, with every drop of water playing its part in the unique dance of life. With every kilometer of the way, the river forms its new boundaries, majestically radiating its energy and wisdom. Like this natural phenomenon, the Intelligent Water Drops optimization algorithm strives to achieve harmony and perfection in its functioning using the principles of self-organization and interaction between particles. This algorithm reflects the grandeur of nature and its ability to create and maintain balance making it an important tool in optimizing and solving complex problems.

Any river has its own valley, formed by erosion processes under the influence of surface and groundwater. The lowest part of this valley, cut into the ground by a river flow, is called a bed. The main part of the river flow and bottom sediments move along it.

The relief of the riverbed is constantly changing. Stones and sand captured by water form ridges and rifts that cross the bed at an acute angle. At bends, rivers can wash out a new path and form oxbow lakes - sections of the old channel that gradually turn into a lake with its own ecosystem and eventually dry up or turn into a swamp.

When we observe rivers in nature, we notice many twists and turns along their path. The question arises, why did these twists appear and is there any logic or reason behind them? If yes, can we exploit the mechanisms that occur in rivers and, as a consequence, can we design and develop intelligent algorithms based on them? The IWD algorithm is an attempt to model some of the processes that occur in natural rivers and then implement them as an algorithm.

IWD is one of the relatively new algorithms in the field of swarm intelligence. It simulates the dynamics of river systems. IWD is a population-based algorithm in which each water drop represents a solution, and sharing water drops during the search results in better solutions.

In 2007, Iranian scientist Hamed Shah-Hosseini developed an algorithm for the behavior of intelligent drops. The IWD algorithm features several artificial drops of water, which, as a result of interaction, are able to change their environment in such a way that they find the optimal way along the path of least resistance. The IWD algorithm is a constructive population-oriented optimization algorithm.

2. Algorithm

IWD is a model, in which water drops find the optimal path to their destination by changing the course of a river. This is facilitated by three important parameters. Due to their own speed, drops are able to capture soil from the bottom of the river. The higher the speed, the greater the amount of soil each drop can carry with it, respectively, the freer the path becomes for subsequent agents. The flow rate increases where there is no soil to clear. The optimal path is the one containing the least amount of soil where the highest speed can be reached. With the help of IWD, it is possible to implement an optimization strategy where random agents intelligently interact with each other in such a way that they jointly change the course of the river and create an optimal path, in which no soil is encountered at all and the flow rate of the agents becomes the highest possible.

Basic principles:

- a water drop prefers a path with less soil

- a water drop prefers a shorter path when forced to choose between several routes on the way from source to destination.

- the difficulty of a path is determined by the amount of soil on it. A path with more soil is considered difficult, while a path with less soil is considered easy.

In nature, many drops of water are observed in rivers, where they form huge masses (swarms of water drops). The paths, along which natural rivers flow, were created by swarms of water drops. Swarms of water drops (rivers) are part of the environment that has been significantly modified by the swarm and is also subject to change in the future. Moreover, the environment itself has a significant influence on the paths of water drops. They face the resistance of river banks. For instance, a swarm of water drops is resisted more by those parts of the environment that make up hard soil than by parts that make up soft soil. The natural course of a river is the result of competition between water drops and the environment, which resists their movement.

One of the characteristics of a water drop flowing into a river is its speed. Another characteristic is soil, a certain amount of which each river can carry. Thus, a drop of water is capable of transporting some amount of soil from one place to another. This soil is transferred from fast particles to slow ones. This means the soil carried by the river along with the fast current will settle in a place with a slow current.

Three obvious changes will occur during this transition period:

- a water drop speed increases

- soil saturation of water drops increases

- between these two points, the amount of soil in the bed decreases (graph points)

It was noted above that each drop of water has its own speed. This speed plays a direct role in the collection of soil in the riverbed. A water drop with a high speed picks up more soil than a drop with a slower speed. Thus, water droplets at a faster rate remove more soil from the river bottom than water droplets at a slower rate. Soil removal is thus related to the speed of the water drop.

The speed of a water drop increases on a path with a low soil level more than on a path with a high soil level. Thus, a path with a small amount of soil allows the flowing water droplet to collect more soil and gain greater speed, while a path with large levels of soil results in a decrease in speed.

So, in the IWD algorithm, drops are characterized by two main properties:

- speed

- soil

Both of these properties can change during the algorithm operation. Drops in IWD move from a source to a destination and begin their journey with an initial velocity and zero ground. During their journey, water drops move through an environment, from which some soil is removed, and can gain some speed. IWD is assumed to be performed iteratively. The droplet speed increases from the current location to the next by an amount nonlinearly proportional to the inverse of the soil between the two locations:

vel = vel (t-1) + Av / [Bv + Cv * soil^2(i,j)]

where: Av, Bv, Cv are speed ratios (inputs), while soil (i,j) is the amount of soil between the graph vertices.

Therefore, a path with less soil allows the IWD droplet to move faster than a path with more soil.

The IWD drop collects soil as it travels through the environment. This soil is removed from the path connecting the two locations. The amount of soil added to an IWD droplet is nonlinearly proportional to the time required to move the IWD from its current location to the next. This time interval is calculated using simple laws of physics for linear motion:

time(i,j,vel) = R / vel

where R is a distance between two points.

Amount of soil added to the drop:

dSoil(i,j) = As / [Bs + Cs * time(i,j,vel)]

where: As, Bs and Cs are soil inwash ratios.

The new amount of soil on the path between points will look like this:

soil (i+1,j+1) = Po * soil(i,j) + Pn * dSoil(i,j)

where Po and Pn are the ratios of changing the amount of soil.

Thus, the time spent moving is inversely proportional to the speed of movement and directly proportional to the distance between two points. In other words, soil is a quantitative indicator of information about the environment. These equations determine the preference of water drops to choose low-priming paths over high-priming ones. This path selection is accomplished by applying a uniform random distribution to the available paths. The probability of choosing the next path is inversely proportional to the soil level of the available paths. Therefore, paths with lower-priming levels have a higher chance of being selected by IWD drops.

The original IWD algorithm was developed by the author to solve optimal path problems, such as graph search problems and traveling salesman problems. However, this algorithm is not suitable for solving the problems that we consider in our tests. Therefore, it is necessary to adapt the IWD algorithm to solve our optimization problems. In contrast, the algorithms described in the Population optimization algorithms articles are capable of solving problems of any type, including graph search problems. The previous articles have already presented a similar highly specialized algorithm that required revision, namely "Ant Colony (ACO)".

To adapt IWD to the ability to solve any optimization problem, and for IWD to compete in population algorithm competitions, it is necessary to first determine how to apply the soil deposition and movement process. We cannot follow the approach used in the ACO algorithm, where pheromones are equivalent to the fitness function value. In the case of IWD, soil is a dynamic parameter and does not correspond proportionally to fitness.

The idea arose to divide the coordinates into sectors, similar to dividing a river valley into sections with the same area. The idea is that if the position of the drop (agent) improves, then the amount of soil in the corresponding sectors along all coordinates decreases. The quantitative change in the soil will be determined by the difference in the change in the fitness function over the last two iterations normalized across all water drops by the difference between the maximum and minimum change in fitness.

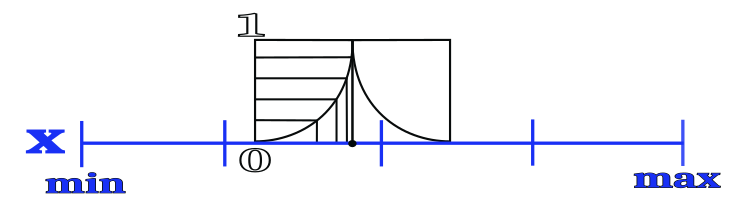

The water drop movement behavior will be based on a random selection of sectors that have the least amount of soil, i.e., where the probability will be proportional to the amount of soil in the corresponding sector. We will store the best coordinates in all areas in the global "bed" storage. After a water drop selects a sector, an attempt will be made to improve the coordinate stored in the storage in such a way that the probability of the new coordinate will obey a quadratic law, according to which the new coordinate will be closer to the previous coordinate with a higher probability than further away. The width of the area within which the new coordinate will lie is determined by an external parameter and is expressed in parts of the sector size. The breakdown of a coordinate into sectors and the probability distribution of the new coordinate are displayed in Figure 1.

Figure 1. Dividing the coordinate into sectors and the probability distribution of choosing a new coordinate in the vicinity of the best known one

Based on the above provisions and concepts of the IWD algorithm, we can compose pseudo-code broken down into the following steps:

- Random water drop generation (first iteration)

- Calculate FF

- Update the best global result

- Random water drop generation (second iteration)

- Calculate FF

- Update the best global result

- Calculate the change in height of each water drop (current and previous heights)

- Update heights by sector

- New drops (choose a sector probabilistically, a drop in the vicinity of a known hole)

- Calculate FF

- Update the best global result

- Repeat from p. 7

Let's move on to code analysis.

Represent the "water drop" search agent as a S_Drop structure containing the following fields:

- Init (int coords): the function initializes a structure object taking the number of "coords" coordinates as an argument. Inside the function, the sizes of the "c" and "rSection" arrays are changed up to the specified number of coordinates. The "f", "fPrev" and "altChange" variables are initialized as well

- c: array to store coordinates

- rSection: array for storing river sections

- f: fitness indicator for a given drop

- fPrev: previous fitness value

- altChange: height change

struct S_Drop { void Init (int coords) { ArrayResize (c, coords); ArrayResize (rSection, coords); f = -DBL_MAX; fPrev = -DBL_MAX; altChange = 0.0; } double c []; //coordinates int rSection []; //river section (number of cells: number of coordinates, cell value: sector index on the coordinate) double f; //fitness double fPrev; //previous fitness double altChange; //change in altitude };

We will need a storage where there will be a description of the river (the best coordinates of the depths and, in fact, the depths themselves), so we will call the structure S_Riverbed:

- riverbedDepth: the array for storing river bed depths, the number of cells in the array corresponds to the number of sectors at the coordinate, and the value of each cell is the bed depth at the corresponding sector

- coordOnSector: the array for storing a specific coordinate of the deepest place on a sector, the number of cells in the array corresponds to the number of sectors on the coordinate, and the value of each cell is the coordinate on the corresponding sector.

//—————————————————————————————————————————————————————————————————————————————— struct S_Riverbed //riverbed { double riverbedDepth []; //riverbed depth double coordOnSector []; //coordinate on the sector }; //——————————————————————————————————————————————————————————————————————————————

Let's declare the C_AO_IWDm class (m - modified), which implements an artificial optimization algorithm based on water drops. Here is a description of each class element:

Class properties:

- cB: array to store the best coordinates

- fB: stores the value of the target function for the best coordinates

- p: array for particles (water drops)

- "rangeMax", "rangeMin" and "rangeStep" arrays are used to define the search range

Class methods:

- Init: initialize the C_AO_IWDm class object with the given parameters: "coordsP" - number of coordinates, "popSizeP" - population size, "sectorsNumberP" - number of sectors and "waterViscosityP" - water viscosity.

- Moving: move water drops

- Revision: perform a revision of the state of water drops

- coords: number of coordinates

- popSize: number of drops

- sectorsNumbe: number of sectors

- waterViscosity: water viscosity ratio in parts of the sector size

- sectorSpace: sector size

- rb: array of coordinates to describe the river bed

- iterationCounter

- revision: flag indicating the need for revision

- SeInDiSp: calculates a new coordinate value in a given range with a given step

- RNDfromCI: generates a random number in a given interval

- Scale: scaling the number to the specified range

//—————————————————————————————————————————————————————————————————————————————— class C_AO_IWDm { //---------------------------------------------------------------------------- public: double cB []; //best coordinates public: double fB; //FF of the best coordinates public: S_Drop p []; //particles (drops) public: double rangeMax []; //maximum search range public: double rangeMin []; //manimum search range public: double rangeStep []; //step search public: void Init (const int coordsP, //coordinates number const int popSizeP, //population size const int sectorsNumberP, //sectors number const double waterViscosityP); //water viscosity (>= 1) public: void Moving (); public: void Revision (); //---------------------------------------------------------------------------- private: int coords; //coordinates number private: int popSize; //population size private: int sectorsNumber; //sectors number private: double waterViscosity; //water viscosity private: double sectorSpace []; //sector space private: S_Riverbed rb []; //riverbed private: int iterationCounter; private: double SeInDiSp (double In, double InMin, double InMax, double Step); private: double RNDfromCI (double min, double max); private: double Scale (double In, double InMIN, double InMAX, double OutMIN, double OutMAX, bool revers); }; //——————————————————————————————————————————————————————————————————————————————

The Init method initializes the C_AO_IWDm class object with the specified parameters: number of coordinates, number of drops, number of sectors, water viscosity.

This method does the following:

1. Resets the random number generator.

2. Initializes the value of the "fB" target function using the "-DBL_MAX" value

3. Sets the iteration counter to zero

4. Sets the number of "coords" coordinates and the "popSize" population size to the given values

5. Sets "sectorsNumber" and "waterViscosity" to the specified values

6. Changes the "sectorSpace" array size to "coord"

7. Change the "p" array size to "popSize" and initializes each water drop in the "p" array

8. Changes the "rangeMax", "rangeMin", "rangeStep" and "cB" array sizes to "coords"

9. Changes the "rb" array size to "coords" and initializes each element of the "rb" array, including the "riverbedDepth" and "coordOnSector" arrays setting them to their default values

//—————————————————————————————————————————————————————————————————————————————— void C_AO_IWDm::Init (const int coordsP, //coordinates number const int popSizeP, //population size const int sectorsNumberP, //sectors number const double waterViscosityP) //water viscosity (>= 1) { MathSrand ((int)GetMicrosecondCount ()); // reset of the generator fB = -DBL_MAX; iterationCounter = 0; coords = coordsP; popSize = popSizeP; sectorsNumber = sectorsNumberP; waterViscosity = waterViscosityP; ArrayResize (sectorSpace, coords); ArrayResize (p, popSize); for (int i = 0; i < popSize; i++) p [i].Init (coords); ArrayResize (rangeMax, coords); ArrayResize (rangeMin, coords); ArrayResize (rangeStep, coords); ArrayResize (cB, coords); ArrayResize (rb, coords); for (int i = 0; i < coords; i++) { ArrayResize (rb [i].riverbedDepth, sectorsNumber); ArrayResize (rb [i].coordOnSector, sectorsNumber); ArrayInitialize (rb [i].riverbedDepth, 0.0); ArrayInitialize (rb [i].coordOnSector, -DBL_MAX); } } //——————————————————————————————————————————————————————————————————————————————

Let's look at the Moving() method in parts.

This code block is executed only in the first and second iterations. It calculates and sets the size of each "sectorSpace" sector for each coordinate. The sector size is determined by dividing the range of "rangeMax - rangeMin" values by the number of "sectorsNumber" sectors.

Next, the drops are initialized with initial values based on random values in specified ranges with a uniform distribution.

//---------------------------------------------------------------------------- if (iterationCounter == 0) { for (int i = 0; i < coords; i++) sectorSpace [i] = (rangeMax [i] - rangeMin [i]) / sectorsNumber; } //1,4------------------------------------------------------------------------- if (iterationCounter == 0 || iterationCounter == 1) { double min = 0.0; double max = 0.0; int s = 0; for (int i = 0; i < popSize; i++) { p [i].fPrev = p [i].f; for (int c = 0; c < coords; c++) { s = (int)(RNDfromCI (0, sectorsNumber)); if (s >= sectorsNumber) s = sectorsNumber - 1; p [i].rSection [c] = s; min = rangeMin [c] + sectorSpace [c] * s; max = min + sectorSpace [c]; p [i].c [c] = SeInDiSp (RNDfromCI (min, max), rangeMin [c], rangeMax [c], rangeStep [c]); } } for (int i = 0; i < popSize; i++) p [i].fPrev = p [i].f; return; }

The code snippet then calculates and normalizes the altChange for each water drop in the population. The only subtlety in this code is to check the equality of the maximum and minimum change in height to avoid division by "0". In this case, we assume that there were no changes in the water drop heights.

The "altChange" value for each water drop is calculated as a normalized value that is normalized to range from 0 to 1. Normalization is done by subtracting "minAltChange" from "altChange" and dividing the result by the difference between "maxAltChange" and "minAltChange".

//7--------------------------------------------------------------------------- double maxAltChange = -DBL_MAX; double minAltChange = DBL_MAX; double altChange = 0.0; double randSC = 0.0; //random selection component double maxRC = 0.0; double nowRC = 0.0; int indSector = 0; for (int i = 0; i < popSize; i++) { altChange = fabs (p [i].f - p [i].fPrev); p [i].altChange = altChange; if (altChange < minAltChange) minAltChange = altChange; if (altChange > maxAltChange) maxAltChange = altChange; } if (minAltChange == maxAltChange) { for (int i = 0; i < popSize; i++) { p [i].altChange = 0.0; } } else { for (int i = 0; i < popSize; i++) { altChange = p [i].altChange; p [i].altChange = (altChange - minAltChange) / (maxAltChange - minAltChange); } }

The following code fragment updates the depth of the river bed for each coordinate if the current value of the fitness function is greater than the previous value for the corresponding drop. This allows us to take into account changes in height and influence the shape of the river bed. Thus, every time a drop improves its position, we believe that the river bed has changed.

//8--------------------------------------------------------------------------- for (int i = 0; i < popSize; i++) { if (p [i].f > p [i].fPrev) { for (int c = 0; c < coords; c++) { rb [c].riverbedDepth [p [i].rSection [c]] += p [i].altChange; } } }

The following code snippet performs two basic actions to change the coordinates of the agents that provide the search strategy:

- a water drop selects another water drop randomly from the population and borrows the sector number from it, the combinatorial properties of the algorithm are ensured

- a water drop randomly selects a sector of the river in proportion to the known depth in each sector

As soon as the sector is selected, we generate a new coordinate for the water drop with a uniform distribution if the sector was taken from another drop, and with a quadratic function distribution if the sector was selected from information stored in the river bed. The resulting value for each coordinate is brought into the acceptable range.

//9--------------------------------------------------------------------------- for (int i = 0; i < popSize; i++) { for (int c = 0; c < coords; c++) { n = (int)(RNDfromCI (0, popSize)); if (n >= popSize) n = popSize - 1; if (p [n].f > p [i].f) { p [i].rSection [c] = p [n].rSection [c]; min = rangeMin [c] + sectorSpace [c] * p [i].rSection [c]; max = min + sectorSpace [c]; p [i].c [c] = SeInDiSp (RNDfromCI (min, max), rangeMin [c], rangeMax [c], rangeStep [c]); } else { randSC = RNDfromCI (0.0, 1.0); nowRC = rb [c].riverbedDepth [0] * randSC; maxRC = nowRC; indSector = 0; for (int r = 1; r < sectorsNumber; r++) { nowRC = rb [c].riverbedDepth [r] * randSC; if (nowRC > maxRC) { maxRC = nowRC; indSector = r; } } if (rb [c].coordOnSector [indSector] == -DBL_MAX) { min = rangeMin [c] + sectorSpace [c] * indSector; max = min + sectorSpace [c]; p [i].c [c] = RNDfromCI (min, max); } else { double x = RNDfromCI (-1.0, 1.0); double y = x * x; double pit = 0.0; double dif = Scale (y, 0.0, 1.0, 0.0, sectorSpace [c] * waterViscosity, false); pit = rb [c].coordOnSector [indSector]; pit += x > 0.0 ? dif : -dif; p [i].c [c] = pit; } min = rangeMin [c] + sectorSpace [c] * indSector; max = min + sectorSpace [c]; p [i].c [c] = SeInDiSp (p [i].c [c], rangeMin [c], rangeMax [c], rangeStep [c]); p [i].rSection [c] = indSector; } } }

Next, we update the previous fitness value "fPrev" for each water drop if the current value of the objective function 'f' is greater than the previous value. This allows us to track changes in the objective function and use this information in the algorithm to make decisions about choosing the next step.

for (int i = 0; i < popSize; i++) { if (p [i].f > p [i].fPrev) p [i].fPrev = p [i].f; }

Finally, let's consider the Revision method. It is designed to update the best solution "cB" and the corresponding fitness function value. If a better solution is found at the current iteration, then we save the coordinate of the corresponding sector. Thus, the river bed remembers the deepest places in each sector at each coordinate. In this method, we increase the iteration counter "iterationCounter" by one so that in the Moving method we can navigate and perform actions corresponding to the iterations.

//—————————————————————————————————————————————————————————————————————————————— void C_AO_IWDm::Revision () { //3,6,11---------------------------------------------------------------------- for (int i = 0; i < popSize; i++) { if (p [i].f > fB) { fB = p [i].f; ArrayCopy (cB, p [i].c, 0, 0, WHOLE_ARRAY); for (int c = 0; c < coords; c++) { rb [c].coordOnSector [p [i].rSection [c]] = p [i].c [c]; } } else { for (int c = 0; c < coords; c++) { if (rb [c].coordOnSector [p [i].rSection [c]] == -DBL_MAX) { rb [c].coordOnSector [p [i].rSection [c]] = p [i].c [c]; } } } } iterationCounter++; } //——————————————————————————————————————————————————————————————————————————————

3. Modified version of SDSm

We will consider the results of the IWDm algorithm later, but they prompted the idea of trying to improve another algorithm, the best in the rating - SDS, since both IWDm and SDS use similar entities (sectors and restaurants, respectively). The method used in IWD, namely the use of position refinement using a quaternary probability distribution, helped make SDS even better: the best position refinement method had a large impact on the final test results. The only difference is that in SDSm the distribution is truncated if it goes to a neighboring restaurant.

Changes were made to the Revision method of the SDS algorithm, and due to significant changes in the distribution of new coordinates within the restaurant (previously it was uniform) and testing results, the algorithm was assigned the "m" index.

In the code, we see that the Research function is now responsible for the distribution of the random variable, which takes as arguments the distribution width ratio, the address of the restaurant, the size of the restaurant, the minimum possible value along the coordinate, the step along the coordinate, the fitness at the previous step and the coordinate that we are trying to improve.

In the SDSm algorithm, we try to improve three kinds of coordinates (dishes in restaurants):

- interlocutor candidate coordinate

- the best coordinate in a randomly selected restaurant (just like in IWDm for a river bed, in SDSm we store the best coordinates for all restaurants - this is like storing a list of the best dishes in all restaurants in the city)

- our own coordinate for the corresponding restaurant

The ratios of the probability distribution width could be included in external parameters, but I did not do this setting the best values, in my opinion.

//—————————————————————————————————————————————————————————————————————————————— void C_AO_SDSm::Revision () { /* here is the old code, no changes */ for (int i = 0; i < populationSize; i++) { for (int c = 0; c < coords; c++) { n = (int)(RNDfromCI (0, populationSize)); if (n >= populationSize) n = populationSize - 1; if (cands [n].fPrev > cands [i].fPrev) { cands [i].raddr [c] = cands [n].raddrPrev [c]; Research (0.25, cands [i].raddr [c], restSpace [c], rangeMin [c], rangeStep [c], cands [n].cPrev [c], cands [i].c [c]); } else { rnd = RNDfromCI (0.0, 1.0); if (rnd < probabRest) { n = (int)(RNDfromCI (0, restNumb)); if (n >= restNumb) n = restNumb - 1; cands [i].raddr [c] = n; Research (1.0, cands [i].raddr [c], restSpace [c], rangeMin [c], rangeStep [c], rb [c].coordOnSector [n], cands [i].c [c]); } else { cands [i].raddr [c] = cands [i].raddrPrev [c]; Research (0.25, cands [i].raddr [c], restSpace [c], rangeMin [c], rangeStep [c], cands [i].cPrev [c], cands [i].c [c]); } } } } } //——————————————————————————————————————————————————————————————————————————————

Here is the "magic" Research function that made it possible to improve the previous testing leader by more than 12%.

The function does the following:

- a random number is generated in the range [-1.0;1.0],

- the resulting value is scaled in increments corresponding to the size of the restaurant with the distribution scale factor

- added to the current upgraded coordinate

- restaurant boundaries are determined

- the number is converted to acceptable values in accordance with the step of the optimized parameter with trimming along the restaurant boundaries

//—————————————————————————————————————————————————————————————————————————————— void C_AO_SDSm::Research (const double ko, const int raddr, const double restSpaceI, const double rangeMinI, const double rangeStepI, const double pitOld, double &pitNew) { double x = RNDfromCI(-1.0, 1.0); double y = x * x; double pit = pitOld; double min = 0.0; double max = 0.0; double dif = Scale(y, 0.0, 1.0, 0.0, restSpaceI * ko, false); pit += x > 0.0 ? dif : -dif; min = rangeMinI + restSpaceI * raddr; max = min + restSpaceI; pitNew = SeInDiSp (pit, min, max, rangeStepI); } //——————————————————————————————————————————————————————————————————————————————

4. Test results

IWD test stand results:

C_AO_IWDm:50;10;3.0

=============================

5 Rastrigin's; Func runs 10000 result: 63.304838882364926

Score: 0.78438

25 Rastrigin's; Func runs 10000 result: 49.20424466627239

Score: 0.60967

500 Rastrigin's; Func runs 10000 result: 39.68464591598694

Score: 0.49172

=============================

5 Forest's; Func runs 10000 result: 0.6975685023058024

Score: 0.39458

25 Forest's; Func runs 10000 result: 0.19878497533879688

Score: 0.11244

500 Forest's; Func runs 10000 result: 0.0569274044494088

Score: 0.03220

=============================

5 Megacity's; Func runs 10000 result: 3.44

Score: 0.28667

25 Megacity's; Func runs 10000 result: 1.088

Score: 0.09067

500 Megacity's; Func runs 10000 result: 0.28480000000000005

Score: 0.02373

=============================

All score: 2.82606

To put it mildly, the results are below average in the comparison table.

SDSm test stand results:

C_AO_SDSm:100;100;0.05

=============================

5 Rastrigin's; Func runs 10000 result: 80.65976429448276

Score: 0.99942

25 Rastrigin's; Func runs 10000 result: 79.95659847225565

Score: 0.99071

500 Rastrigin's; Func runs 10000 result: 57.23107170601535

Score: 0.70913

=============================

5 Forest's; Func runs 10000 result: 1.7241883676504082

Score: 0.97529

25 Forest's; Func runs 10000 result: 1.497160007591401

Score: 0.84687

500 Forest's; Func runs 10000 result: 0.29481739063639945

Score: 0.16676

=============================

5 Megacity's; Func runs 10000 result: 10.559999999999999

Score: 0.88000

25 Megacity's; Func runs 10000 result: 6.824000000000001

Score: 0.56867

500 Megacity's; Func runs 10000 result: 1.2384

Score: 0.10320

=============================

All score: 6.24004

SDSm results are really impressive.

The animated visualization of the IWDm algorithm shows some degree of ability to cluster potentially good search regions, especially noticeable in the Rastrigin function. But the long flat sections of the convergence graph demonstrate where the algorithm has noticeably stuck.

IWDm on the Rastrigin test function.

IWDm on the Forest test function.

IWDm on the Megacity test function.

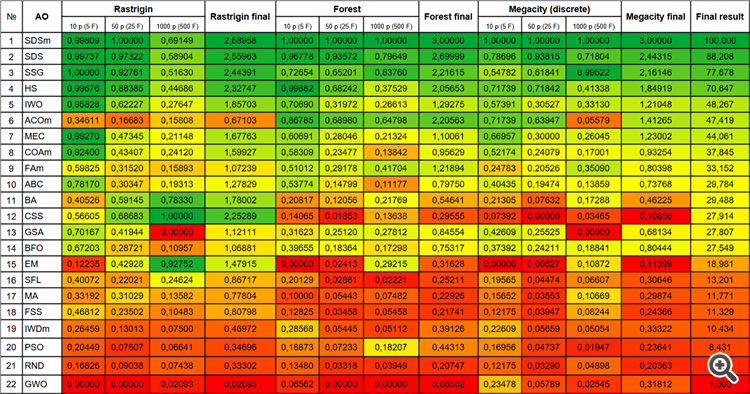

This article examined the IWDm algorithm, which took 19th place among 22 algorithms, or 4th place from the bottom, overtaking the well-known and popular PSO algorithm. IWDm shows the best results on smooth functions. Consistent improvement of the convergence graph on all three test functions gives hope that with an increase in the number of iterations the algorithm will continue to converge, but then the practical meaning and expediency of its use disappear. The test condition is strict, 10,000 iterations, no more and no less.

In addition, it is worth noting the modified SDSm algorithm, which took 7 out of 9 possible first places. This algorithm is a real "all-rounder" among optimization algorithms. The improvement in SDSm results compared to conventional SDS on the "sharp" Forest and complex discrete Megacity functions is especially noticeable (on the latter function, the improvement is almost 30% with 1000 parameters). Thus, in addition to the main subject of the article - IWD, we see a new and magnificent guest of the table - SDSm. You can see the animation of SDS operation here. To see how SDSm works, run the test script from the archive located in the folder with SDS.

| # | AO | Description | Rastrigin | Rastrigin final | Forest | Forest final | Megacity (discrete) | Megacity final | Final result | ||||||

| 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | |||||||

| 1 | SDSm | stochastic diffusion search M | 0.99809 | 1.00000 | 0.69149 | 2.68958 | 1.00000 | 1.00000 | 1.00000 | 3.00000 | 1.00000 | 1.00000 | 1.00000 | 3.00000 | 100.000 |

| 2 | SDS | stochastic Diffusion Search | 0.99737 | 0.97322 | 0.58904 | 2.55963 | 0.96778 | 0.93572 | 0.79649 | 2.69999 | 0.78696 | 0.93815 | 0.71804 | 2.44315 | 88.208 |

| 3 | SSG | saplings sowing and growing | 1.00000 | 0.92761 | 0.51630 | 2.44391 | 0.72654 | 0.65201 | 0.83760 | 2.21615 | 0.54782 | 0.61841 | 0.99522 | 2.16146 | 77.678 |

| 4 | HS | harmony search | 0.99676 | 0.88385 | 0.44686 | 2.32747 | 0.99882 | 0.68242 | 0.37529 | 2.05653 | 0.71739 | 0.71842 | 0.41338 | 1.84919 | 70.647 |

| 5 | IWO | invasive weed optimization | 0.95828 | 0.62227 | 0.27647 | 1.85703 | 0.70690 | 0.31972 | 0.26613 | 1.29275 | 0.57391 | 0.30527 | 0.33130 | 1.21048 | 48.267 |

| 6 | ACOm | ant colony optimization M | 0.34611 | 0.16683 | 0.15808 | 0.67103 | 0.86785 | 0.68980 | 0.64798 | 2.20563 | 0.71739 | 0.63947 | 0.05579 | 1.41265 | 47.419 |

| 7 | MEC | mind evolutionary computation | 0.99270 | 0.47345 | 0.21148 | 1.67763 | 0.60691 | 0.28046 | 0.21324 | 1.10061 | 0.66957 | 0.30000 | 0.26045 | 1.23002 | 44.061 |

| 8 | COAm | cuckoo optimization algorithm M | 0.92400 | 0.43407 | 0.24120 | 1.59927 | 0.58309 | 0.23477 | 0.13842 | 0.95629 | 0.52174 | 0.24079 | 0.17001 | 0.93254 | 37.845 |

| 9 | FAm | firefly algorithm M | 0.59825 | 0.31520 | 0.15893 | 1.07239 | 0.51012 | 0.29178 | 0.41704 | 1.21894 | 0.24783 | 0.20526 | 0.35090 | 0.80398 | 33.152 |

| 10 | ABC | artificial bee colony | 0.78170 | 0.30347 | 0.19313 | 1.27829 | 0.53774 | 0.14799 | 0.11177 | 0.79750 | 0.40435 | 0.19474 | 0.13859 | 0.73768 | 29.784 |

| 11 | BA | bat algorithm | 0.40526 | 0.59145 | 0.78330 | 1.78002 | 0.20817 | 0.12056 | 0.21769 | 0.54641 | 0.21305 | 0.07632 | 0.17288 | 0.46225 | 29.488 |

| 12 | CSS | charged system search | 0.56605 | 0.68683 | 1.00000 | 2.25289 | 0.14065 | 0.01853 | 0.13638 | 0.29555 | 0.07392 | 0.00000 | 0.03465 | 0.10856 | 27.914 |

| 13 | GSA | gravitational search algorithm | 0.70167 | 0.41944 | 0.00000 | 1.12111 | 0.31623 | 0.25120 | 0.27812 | 0.84554 | 0.42609 | 0.25525 | 0.00000 | 0.68134 | 27.807 |

| 14 | BFO | bacterial foraging optimization | 0.67203 | 0.28721 | 0.10957 | 1.06881 | 0.39655 | 0.18364 | 0.17298 | 0.75317 | 0.37392 | 0.24211 | 0.18841 | 0.80444 | 27.549 |

| 15 | EM | electroMagnetism-like algorithm | 0.12235 | 0.42928 | 0.92752 | 1.47915 | 0.00000 | 0.02413 | 0.29215 | 0.31628 | 0.00000 | 0.00527 | 0.10872 | 0.11399 | 18.981 |

| 16 | SFL | shuffled frog-leaping | 0.40072 | 0.22021 | 0.24624 | 0.86717 | 0.20129 | 0.02861 | 0.02221 | 0.25211 | 0.19565 | 0.04474 | 0.06607 | 0.30646 | 13.201 |

| 17 | MA | monkey algorithm | 0.33192 | 0.31029 | 0.13582 | 0.77804 | 0.10000 | 0.05443 | 0.07482 | 0.22926 | 0.15652 | 0.03553 | 0.10669 | 0.29874 | 11.771 |

| 18 | FSS | fish school search | 0.46812 | 0.23502 | 0.10483 | 0.80798 | 0.12825 | 0.03458 | 0.05458 | 0.21741 | 0.12175 | 0.03947 | 0.08244 | 0.24366 | 11.329 |

| 19 | IWDm | intelligent water drops M | 0.26459 | 0.13013 | 0.07500 | 0.46972 | 0.28568 | 0.05445 | 0.05112 | 0.39126 | 0.22609 | 0.05659 | 0.05054 | 0.33322 | 10.434 |

| 20 | PSO | particle swarm optimisation | 0.20449 | 0.07607 | 0.06641 | 0.34696 | 0.18873 | 0.07233 | 0.18207 | 0.44313 | 0.16956 | 0.04737 | 0.01947 | 0.23641 | 8.431 |

| 21 | RND | random | 0.16826 | 0.09038 | 0.07438 | 0.33302 | 0.13480 | 0.03318 | 0.03949 | 0.20747 | 0.12175 | 0.03290 | 0.04898 | 0.20363 | 5.056 |

| 22 | GWO | grey wolf optimizer | 0.00000 | 0.00000 | 0.02093 | 0.02093 | 0.06562 | 0.00000 | 0.00000 | 0.06562 | 0.23478 | 0.05789 | 0.02545 | 0.31812 | 1.000 |

Summary

The original IWD algorithm was developed by the author to solve problems of finding shortest paths, such as transport logistics, network routing and finding optimal paths in geographic information systems. This article did not examine its capabilities and performance in these specific tasks, and the new modified IWDm algorithm, presented as a universal one, was unable to show impressive results. Increasing the number of sectors, unfortunately, did not lead to an increase in the efficiency of the algorithm.

However, the operation of the IWDm algorithm left me with the feeling that the full potential of this elegant algorithm had not yet been fully realized. The idea of dividing the search space into sectors and taking into account their rating, based on modeling the movement of soil in the river bed, seems very interesting. The results showed that such an approach, such as storing the best dishes in each restaurant, and perhaps using a bias in the probability of a new point in the vicinity of the previous one, significantly improved the performance of the SDS algorithm.

If we increase the number of restaurants in the new SDSm (the default parameter is 100) and divide the coordinates into smaller areas (the SDS parameter is 1000), the results become worse. This means that with this approach, the choice of an individual restaurant becomes more important, and a specific point in its vicinity ceases to be significant (the algorithm turns into a regular SDS). This means that the quadratic probability distribution has maximum influence for a certain size of sectors on the coordinates. This is a kind of balance between the methods present in the algorithm that differ in their goals.

The most important takeaway from this article is that it is impossible to say definitively which method used in individual search strategies is the best or the worst. The use of different methods can both worsen some algorithms and significantly improve others. It is the combination of methods used in optimization algorithms that is important, not the methods themselves.

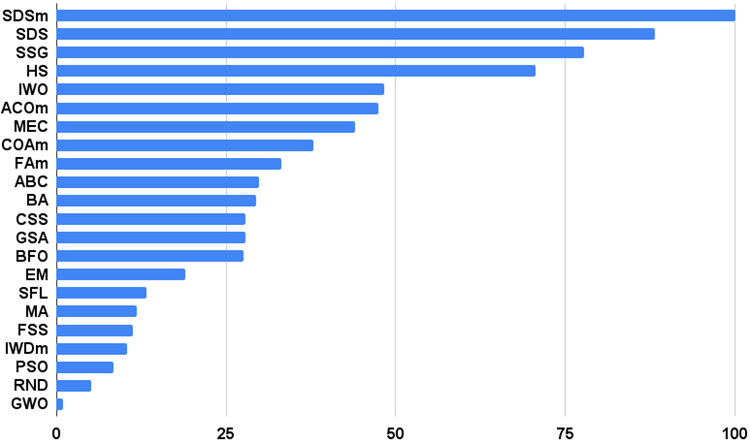

Figure 2. Color gradation of algorithms according to relevant tests

The histogram of the algorithm test results is provided below (on a scale from 0 to 100, the more the better, in the archive there is a script for calculating the rating table using the method described in this article):

Figure 3. Histogram of the final results of testing algorithms

Pros and cons of the modified Intelligent Water Drops (IWDm) algorithm:

Pros:

1. A small number of external parameters.

Cons:

1. Poor results on smooth and discrete functions.

2. Low convergence.

3. Tendency to get stuck in local extremes.

The article is accompanied by an archive with updated current versions of the algorithm codes described in previous articles. The author of the article is not responsible for the absolute accuracy in the description of canonical algorithms. Changes have been made to many of them to improve search capabilities. The conclusions and judgments presented in the articles are based on the results of the experiments.

Translated from Russian by MetaQuotes Ltd.

Original article: https://www.mql5.com/ru/articles/13730

Warning: All rights to these materials are reserved by MetaQuotes Ltd. Copying or reprinting of these materials in whole or in part is prohibited.

This article was written by a user of the site and reflects their personal views. MetaQuotes Ltd is not responsible for the accuracy of the information presented, nor for any consequences resulting from the use of the solutions, strategies or recommendations described.

Master MQL5 from beginner to pro (Part I): Getting started with programming

Master MQL5 from beginner to pro (Part I): Getting started with programming

Neural networks made easy (Part 64): ConserWeightive Behavioral Cloning (CWBC) method

Neural networks made easy (Part 64): ConserWeightive Behavioral Cloning (CWBC) method

Seasonality Filtering and time period for Deep Learning ONNX models with python for EA

Seasonality Filtering and time period for Deep Learning ONNX models with python for EA

Cross-validation and basics of causal inference in CatBoost models, export to ONNX format

Cross-validation and basics of causal inference in CatBoost models, export to ONNX format

- Free trading apps

- Over 8,000 signals for copying

- Economic news for exploring financial markets

You agree to website policy and terms of use

All eyes on SDSm, thank you!

Thank you for your interest in the work.

The intrigue is just beginning.)

Published article Population-based Optimisation Algorithms: Intelligent Water Drops (IWD) Algorithm:

Author: Andrey Dik

It has nothing to do with trading.

Well, then the optimiser in MT5 has nothing to do with trading either.))))))

Does anyone really make money with this complex algorithm?