Artificial Ecosystem-based Optimization (AEO) algorithm

The article considers a metaheuristic Artificial Ecosystem-based Optimization (AEO) algorithm, which simulates interactions between ecosystem components by creating an initial population of solutions and applying adaptive update strategies, and describes in detail the stages of AEO operation, including the consumption and decomposition phases, as well as different agent behavior strategies. The article introduces the features and advantages of this algorithm.

Artificial Cooperative Search (ACS) algorithm

Artificial Cooperative Search (ACS) is an innovative method using a binary matrix and multiple dynamic populations based on mutualistic relationships and cooperation to find optimal solutions quickly and accurately. ACS unique approach to predators and prey enables it to achieve excellent results in numerical optimization problems.

Reimagining Classic Strategies: Crude Oil

In this article, we revisit a classic crude oil trading strategy with the aim of enhancing it by leveraging supervised machine learning algorithms. We will construct a least-squares model to predict future Brent crude oil prices based on the spread between Brent and WTI crude oil prices. Our goal is to identify a leading indicator of future changes in Brent prices.

MQL5 Wizard Techniques you should know (Part 07): Dendrograms

Data classification for purposes of analysis and forecasting is a very diverse arena within machine learning and it features a large number of approaches and methods. This piece looks at one such approach, namely Agglomerative Hierarchical Classification.

Population optimization algorithms: Evolution Strategies, (μ,λ)-ES and (μ+λ)-ES

The article considers a group of optimization algorithms known as Evolution Strategies (ES). They are among the very first population algorithms to use evolutionary principles for finding optimal solutions. We will implement changes to the conventional ES variants and revise the test function and test stand methodology for the algorithms.

Neural Network in Practice: Secant Line

As already explained in the theoretical part, when working with neural networks we need to use linear regressions and derivatives. Why? The reason is that linear regression is one of the simplest formulas in existence. Essentially, linear regression is just an affine function. However, when we talk about neural networks, we are not interested in the effects of direct linear regression. We are interested in the equation that generates this line. We are not that interested in the line created. Do you know the main equation that we need to understand? If not, I recommend reading this article to understanding it.

MQL5 Wizard Techniques you should know (Part 36): Q-Learning with Markov Chains

Reinforcement Learning is one of the three main tenets in machine learning, alongside supervised learning and unsupervised learning. It is therefore concerned with optimal control, or learning the best long-term policy that will best suit the objective function. It is with this back-drop, that we explore its possible role in informing the learning-process to an MLP of a wizard assembled Expert Advisor.

Data Science and ML (Part 34): Time series decomposition, Breaking the stock market down to the core

In a world overflowing with noisy and unpredictable data, identifying meaningful patterns can be challenging. In this article, we'll explore seasonal decomposition, a powerful analytical technique that helps separate data into its key components: trend, seasonal patterns, and noise. By breaking data down this way, we can uncover hidden insights and work with cleaner, more interpretable information.

Population optimization algorithms: Simulated Isotropic Annealing (SIA) algorithm. Part II

The first part was devoted to the well-known and popular algorithm - simulated annealing. We have thoroughly considered its pros and cons. The second part of the article is devoted to the radical transformation of the algorithm, which turns it into a new optimization algorithm - Simulated Isotropic Annealing (SIA).

Population optimization algorithms: Resistance to getting stuck in local extrema (Part II)

We continue our experiment that aims to examine the behavior of population optimization algorithms in the context of their ability to efficiently escape local minima when population diversity is low and reach global maxima. Research results are provided.

MQL5 Wizard Techniques you should know (Part 41): Deep-Q-Networks

The Deep-Q-Network is a reinforcement learning algorithm that engages neural networks in projecting the next Q-value and ideal action during the training process of a machine learning module. We have already considered an alternative reinforcement learning algorithm, Q-Learning. This article therefore presents another example of how an MLP trained with reinforcement learning, can be used within a custom signal class.

Neural Networks in Trading: Mask-Attention-Free Approach to Price Movement Forecasting

In this article, we will discuss the Mask-Attention-Free Transformer (MAFT) method and its application in the field of trading. Unlike traditional Transformers that require data masking when processing sequences, MAFT optimizes the attention process by eliminating the need for masking, significantly improving computational efficiency.

MQL5 Wizard Techniques you should know (Part 66): Using Patterns of FrAMA and the Force Index with the Dot Product Kernel

The FrAMA Indicator and the Force Index Oscillator are trend and volume tools that could be paired when developing an Expert Advisor. We continue from our last article that introduced this pair by considering machine learning applicability to the pair. We are using a convolution neural network that uses the dot-product kernel in making forecasts with these indicators’ inputs. This is done in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Matrix Factorization: The Basics

Since the goal here is didactic, we will proceed as simply as possible. That is, we will implement only what we need: matrix multiplication. You will see today that this is enough to simulate matrix-scalar multiplication. The most significant difficulty that many people encounter when implementing code using matrix factorization is this: unlike scalar factorization, where in almost all cases the order of the factors does not change the result, this is not the case when using matrices.

Population optimization algorithms: Bird Swarm Algorithm (BSA)

The article explores the bird swarm-based algorithm (BSA) inspired by the collective flocking interactions of birds in nature. The different search strategies of individuals in BSA, including switching between flight, vigilance and foraging behavior, make this algorithm multifaceted. It uses the principles of bird flocking, communication, adaptability, leading and following to efficiently find optimal solutions.

Reimagining Classic Strategies (Part 13): Taking Our Crossover Strategy to New Dimensions (Part 2)

Join us in our discussion as we look for additional improvements to make to our moving-average cross over strategy to reduce the lag in our trading strategy to more reliable levels by leveraging our skills in data science. It is a well-studied fact that projecting your data to higher dimensions can at times improve the performance of your machine learning models. We will demonstrate what this practically means for you as a trader, and illustrate how you can weaponize this powerful principle using your MetaTrader 5 Terminal.

The case for using a Composite Data Set this Q4 in weighing SPDR XLY's next performance

We consider XLY, SPDR’s consumer discretionary spending ETF and see if with tools in MetaTrader’s IDE we can sift through an array of data sets in selecting what could work with a forecasting model with a forward outlook of not more than a year.

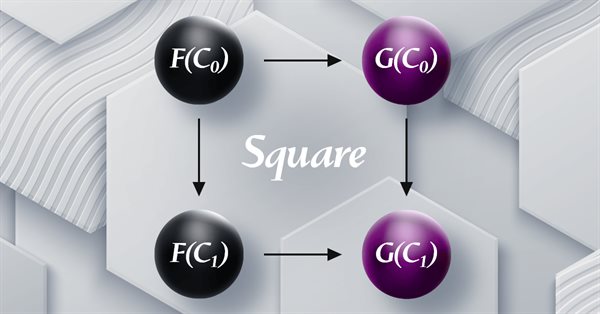

Category Theory in MQL5 (Part 19): Naturality Square Induction

We continue our look at natural transformations by considering naturality square induction. Slight restraints on multicurrency implementation for experts assembled with the MQL5 wizard mean we are showcasing our data classification abilities with a script. Principle applications considered are price change classification and thus its forecasting.

MQL5 Wizard Techniques you should know (Part 62): Using Patterns of ADX and CCI with Reinforcement-Learning TRPO

The ADX Oscillator and CCI oscillator are trend following and momentum indicators that can be paired when developing an Expert Advisor. We continue where we left off in the last article by examining how in-use training, and updating of our developed model, can be made thanks to reinforcement-learning. We are using an algorithm we are yet to cover in these series, known as Trusted Region Policy Optimization. And, as always, Expert Advisor assembly by the MQL5 Wizard allows us to set up our model(s) for testing much quicker and also in a way where it can be distributed and tested with different signal types.

Adaptive Social Behavior Optimization (ASBO): Schwefel, Box-Muller Method

This article provides a fascinating insight into the world of social behavior in living organisms and its influence on the creation of a new mathematical model - ASBO (Adaptive Social Behavior Optimization). We will examine how the principles of leadership, neighborhood, and cooperation observed in living societies inspire the development of innovative optimization algorithms.

Data label for time series mining (Part 5):Apply and Test in EA Using Socket

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

Data Science and ML (Part 47): Forecasting the Market Using the DeepAR model in Python

In this article, we will attempt to predict the market with a decent model for time series forecasting named DeepAR. A model that is a combination of deep neural networks and autoregressive properties found in models like ARIMA and Vector Autoregressive (VAR).

Neural networks made easy (Part 61): Optimism issue in offline reinforcement learning

During the offline learning, we optimize the Agent's policy based on the training sample data. The resulting strategy gives the Agent confidence in its actions. However, such optimism is not always justified and can cause increased risks during the model operation. Today we will look at one of the methods to reduce these risks.

MQL5 Wizard Techniques you should know (Part 34): Price-Embedding with an Unconventional RBM

Restricted Boltzmann Machines are a form of neural network that was developed in the mid 1980s at a time when compute resources were prohibitively expensive. At its onset, it relied on Gibbs Sampling and Contrastive Divergence in order to reduce dimensionality or capture the hidden probabilities/properties over input training data sets. We examine how Backpropagation can perform similarly when the RBM ‘embeds’ prices for a forecasting Multi-Layer-Perceptron.

Dynamic mode decomposition applied to univariate time series in MQL5

Dynamic mode decomposition (DMD) is a technique usually applied to high-dimensional datasets. In this article, we demonstrate the application of DMD on univariate time series, showing its ability to characterize a series as well as make forecasts. In doing so, we will investigate MQL5's built-in implementation of dynamic mode decomposition, paying particular attention to the new matrix method, DynamicModeDecomposition().

Category Theory in MQL5 (Part 21): Natural Transformations with LDA

This article, the 21st in our series, continues with a look at Natural Transformations and how they can be implemented using linear discriminant analysis. We present applications of this in a signal class format, like in the previous article.

MQL5 Wizard Techniques you should know (Part 74): Using Patterns of Ichimoku and the ADX-Wilder with Supervised Learning

We follow up on our last article, where we introduced the indicator pair of the Ichimoku and the ADX, by looking at how this duo could be improved with Supervised Learning. Ichimoku and ADX are a support/resistance plus trend complimentary pairing. Our supervised learning approach uses a neural network that engages the Deep Spectral Mixture Kernel to fine tune the forecasts of this indicator pairing. As per usual, this is done in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

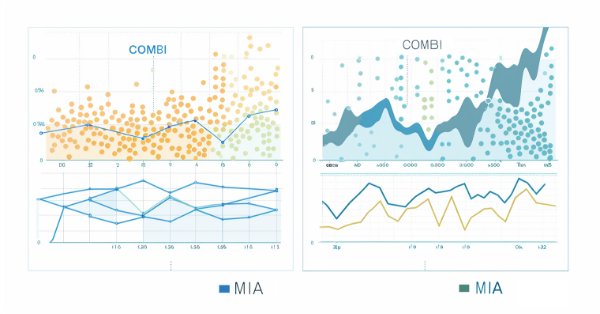

The Group Method of Data Handling: Implementing the Combinatorial Algorithm in MQL5

In this article we continue our exploration of the Group Method of Data Handling family of algorithms, with the implementation of the Combinatorial Algorithm along with its refined incarnation, the Combinatorial Selective Algorithm in MQL5.

Integrating Computer Vision into Trading in MQL5 (Part 1): Creating Basic Functions

The EURUSD forecasting system with the use of computer vision and deep learning. Learn how convolutional neural networks can recognize complex price patterns in the foreign exchange market and predict exchange rate movements with up to 54% accuracy. The article shares the methodology for creating an algorithm that uses artificial intelligence technologies for visual analysis of charts instead of traditional technical indicators. The author demonstrates the process of transforming price data into "images", their processing by a neural network, and a unique opportunity to peer into the "consciousness" of AI through activation maps and attention heatmaps. Practical Python code using the MetaTrader 5 library allows readers to reproduce the system and apply it in their own trading.

Integrating MQL5 with data processing packages (Part 1): Advanced Data analysis and Statistical Processing

Integration enables seamless workflow where raw financial data from MQL5 can be imported into data processing packages like Jupyter Lab for advanced analysis including statistical testing.

Integrating MQL5 with data processing packages (Part 4): Big Data Handling

Exploring advanced techniques to integrate MQL5 with powerful data processing tools, this part focuses on efficient handling of big data to enhance trading analysis and decision-making.

Artificial Bee Hive Algorithm (ABHA): Theory and methods

In this article, we will consider the Artificial Bee Hive Algorithm (ABHA) developed in 2009. The algorithm is aimed at solving continuous optimization problems. We will look at how ABHA draws inspiration from the behavior of a bee colony, where each bee has a unique role that helps them find resources more efficiently.

Resampling techniques for prediction and classification assessment in MQL5

In this article, we will explore and implement, methods for assessing model quality that utilize a single dataset as both training and validation sets.

Neural Networks in Trading: Exploring the Local Structure of Data

Effective identification and preservation of the local structure of market data in noisy conditions is a critical task in trading. The use of the Self-Attention mechanism has shown promising results in processing such data; however, the classical approach does not account for the local characteristics of the underlying structure. In this article, I introduce an algorithm capable of incorporating these structural dependencies.

MQL5 Wizard Techniques you should know (Part 32): Regularization

Regularization is a form of penalizing the loss function in proportion to the discrete weighting applied throughout the various layers of a neural network. We look at the significance, for some of the various regularization forms, this can have in test runs with a wizard assembled Expert Advisor.

Developing an MQL5 RL agent with RestAPI integration (Part 3): Creating automatic moves and test scripts in MQL5

This article discusses the implementation of automatic moves in the tic-tac-toe game in Python, integrated with MQL5 functions and unit tests. The goal is to improve the interactivity of the game and ensure the reliability of the system through testing in MQL5. The presentation covers game logic development, integration, and hands-on testing, and concludes with the creation of a dynamic game environment and a robust integrated system.

Reimagining Classic Strategies (Part IV): SP500 and US Treasury Notes

In this series of articles, we analyze classical trading strategies using modern algorithms to determine whether we can improve the strategy using AI. In today's article, we revisit a classical approach for trading the SP500 using the relationship it has with US Treasury Notes.

Neural Networks in Trading: Injection of Global Information into Independent Channels (InjectTST)

Most modern multimodal time series forecasting methods use the independent channels approach. This ignores the natural dependence of different channels of the same time series. Smart use of two approaches (independent and mixed channels) is the key to improving the performance of the models.

ALGLIB library optimization methods (Part I)

In this article, we will get acquainted with the ALGLIB library optimization methods for MQL5. The article includes simple and clear examples of using ALGLIB to solve optimization problems, which will make mastering the methods as accessible as possible. We will take a detailed look at the connection of such algorithms as BLEIC, L-BFGS and NS, and use them to solve a simple test problem.

Self Optimizing Expert Advisors in MQL5 (Part 12): Building Linear Classifiers Using Matrix Factorization

This article explores the powerful role of matrix factorization in algorithmic trading, specifically within MQL5 applications. From regression models to multi-target classifiers, we walk through practical examples that demonstrate how easily these techniques can be integrated using built-in MQL5 functions. Whether you're predicting price direction or modeling indicator behavior, this guide lays a strong foundation for building intelligent trading systems using matrix methods.