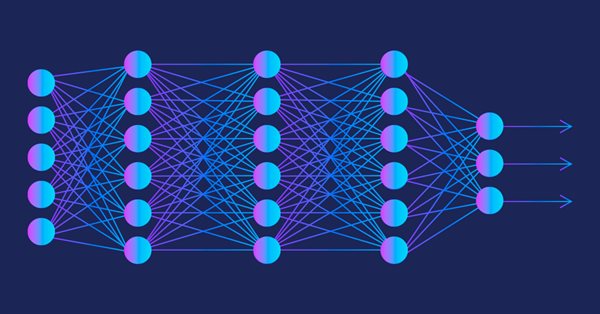

Multilayer perceptron and backpropagation algorithm

As the popularity of these two methods grows, many libraries have been developed in Matlab, R, Python, C++ and other languages that take a training set as input and automatically build an appropriate network for the problem. Let us try to understand how the basic neural network types work (the single-neuron perceptron and the multilayer perceptron). We will consider the algorithms responsible for network training: gradient descent and backpropagation. More complex existing models are often based on such simple network models.

Neural networks made easy (Part 3): Convolutional networks

As a continuation of the neural network topic, I propose considering convolutional neural networks. This type of neural network is usually applied to the analysis of visual imagery. In this article, we will consider the application of these networks in the financial markets.

Connecting NeuroSolutions Neuronets

In addition to creating neuronets, the NeuroSolutions software suite allows exporting them as DLLs. This article describes the process of creating a neuronet, generating a DLL and connecting it to an Expert Advisor for trading in MetaTrader 5.

Experiments with neural networks (Part 1): Revisiting geometry

In this article, I will use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders.

Neural networks made easy (Part 9): Documenting the work

We have already come a long way, and the code in our library keeps growing. This makes it difficult to keep track of all connections and dependencies. Therefore, I suggest creating documentation for the existing code and keeping it updated with each new step. Properly prepared documentation will help us see our work as a whole.

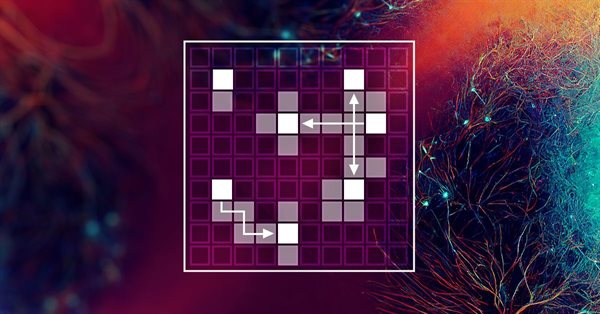

Neural networks made easy (Part 26): Reinforcement Learning

We continue to study machine learning methods. With this article, we begin another big topic, Reinforcement Learning. This approach allows the models to set up certain strategies for solving the problems. We can expect that this property of reinforcement learning will open up new horizons for building trading strategies.

Neural networks made easy (Part 29): Advantage Actor-Critic algorithm

In the previous articles of this series, we have seen two reinforcement learning algorithms. Each of them has its own advantages and disadvantages. As often happens in such cases, the next idea is to combine both methods into one algorithm that uses the best of the two and compensates for the shortcomings of each. One such method is discussed in this article.

Deep Neural Networks (Part III). Sample selection and dimensionality reduction

This article is a continuation of the series of articles about deep neural networks. Here we will consider selecting samples (removing noise), reducing the dimensionality of input data and dividing the data set into the train/val/test sets during data preparation for training the neural network.

Neural networks made easy (Part 5): Multithreaded calculations in OpenCL

We have previously discussed some types of neural network implementations. In the networks considered, the same operations are repeated for each neuron. A logical next step is to use the multithreaded computing capabilities of modern hardware to speed up the neural network learning process. One possible implementation is described in this article.

Mastering ONNX: The Game-Changer for MQL5 Traders

Dive into the world of ONNX, the powerful open-standard format for exchanging machine learning models. Discover how leveraging ONNX can revolutionize algorithmic trading in MQL5, allowing traders to seamlessly integrate cutting-edge AI models and elevate their strategies to new heights. Uncover the secrets to cross-platform compatibility and learn how to unlock the full potential of ONNX in your MQL5 trading endeavors. Elevate your trading game with this comprehensive guide to Mastering ONNX.

Neural Networks Made Easy (Part 96): Multi-Scale Feature Extraction (MSFformer)

Efficient extraction and integration of long-term dependencies and short-term features remain an important task in time series analysis. Their proper understanding and integration are necessary to create accurate and reliable predictive models.

Neural networks made easy (Part 27): Deep Q-Learning (DQN)

We continue to study reinforcement learning. In this article, we will get acquainted with the Deep Q-Learning method. The use of this method has enabled the DeepMind team to create a model that can outperform a human when playing Atari computer games. I think it will be useful to evaluate the possibilities of the technology for solving trading problems.

Reimagining Classic Strategies (Part 20): Modern Stochastic Oscillators

This article demonstrates how the stochastic oscillator, a classical technical indicator, can be repurposed beyond its conventional use as a mean-reversion tool. By viewing the indicator through a different analytical lens, we show how familiar strategies can yield new value and support alternative trading rules, including trend-following interpretations. Ultimately, the article highlights how every technical indicator in the MetaTrader 5 terminal holds untapped potential, and how thoughtful trial and error can uncover meaningful interpretations hidden from view.

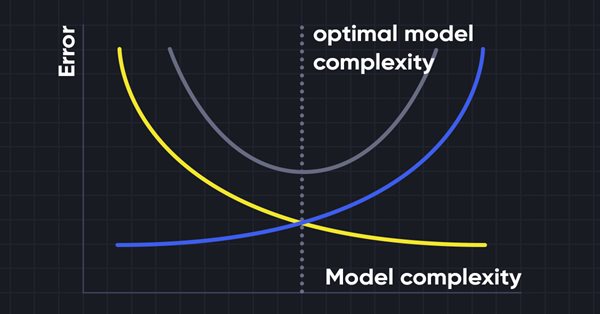

Neural networks made easy (Part 6): Experimenting with the neural network learning rate

We have previously considered various types of neural networks along with their implementations. In all cases, the neural networks were trained using the gradient descent method, for which we need to choose a learning rate. In this article, I want to use examples to show the importance of a correctly selected rate and its impact on neural network training.

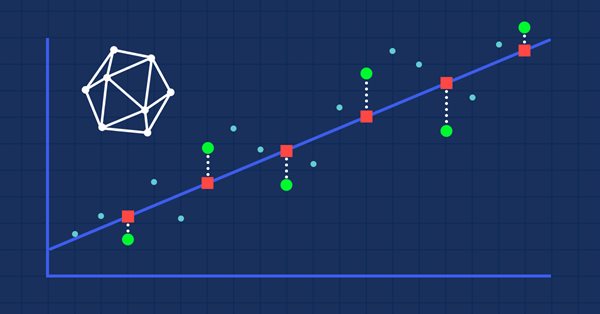

Evaluating ONNX models using regression metrics

Regression is a task of predicting a real value from an unlabeled example. The so-called regression metrics are used to assess the accuracy of regression model predictions.

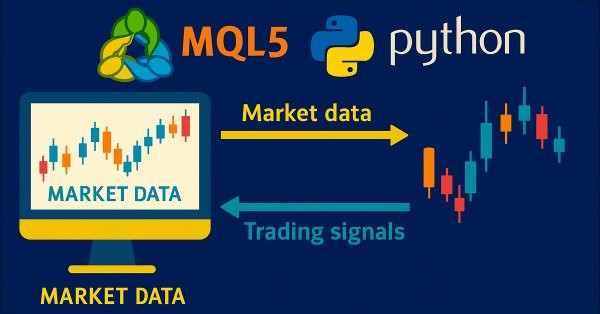

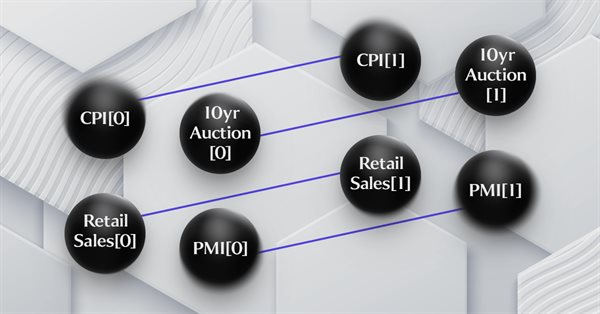

Multi-module trading robot in Python and MQL5 (Part I): Creating basic architecture and first modules

We are going to develop a modular trading system that combines Python for data analysis with MQL5 for trade execution. Four independent modules monitor different market aspects in parallel: volumes, arbitrage, economics and risks, and use RandomForest with 400 trees for analysis. Particular emphasis is placed on risk management, since even the most advanced trading algorithms are useless without proper risk management.

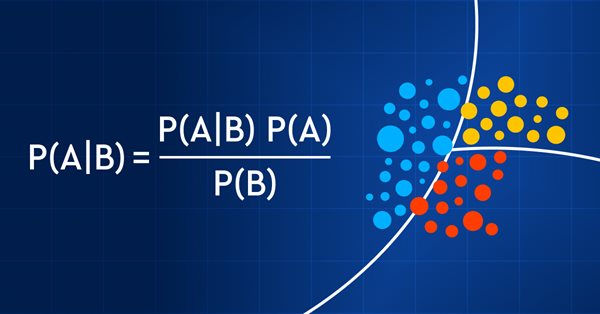

Data Science and Machine Learning (Part 11): Naïve Bayes, Probability theory in Trading

Trading with probability is like walking on a tightrope - it requires precision, balance, and a keen understanding of risk. In the world of trading, probability is everything. It's the difference between success and failure, profit and loss. By leveraging the power of probability, traders can make informed decisions, manage risk effectively, and achieve their financial goals. So, whether you're a seasoned investor or a novice trader, understanding probability is the key to unlocking your trading potential. In this article, we'll explore the exciting world of trading with probability and show you how to take your trading game to the next level.

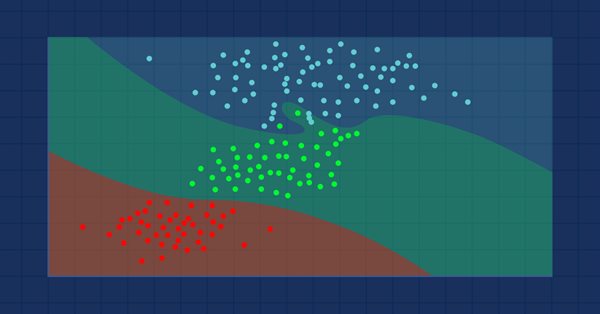

Data Science and Machine Learning (Part 09): The K-Nearest Neighbors Algorithm (KNN)

This is a lazy algorithm: it doesn't learn from the training dataset. Instead, it stores the dataset and acts immediately when it's given a new sample. As simple as it is, it is used in a variety of real-world applications.
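
To make the "store now, compute later" idea concrete, here is a minimal pure-Python sketch of a k-nearest-neighbors classifier. It is not taken from the article itself; the function name `knn_predict`, the toy data and the "buy"/"sell" labels are hypothetical and serve only as an illustration.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # There is no training step: the stored samples are only consulted
    # when a new sample arrives.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical toy data: two features per sample.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (3.0, 3.2), (3.1, 2.9), (2.8, 3.0)]
labels = ["buy", "buy", "buy", "sell", "sell", "sell"]

prediction = knn_predict(points, labels, query=(1.1, 1.0), k=3)
```

Because every prediction scans the whole stored dataset, this naive form becomes slow on large datasets.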

Algorithmic trading based on 3D reversal patterns

Discovering a new world of automated trading on 3D bars. What does a trading robot look like on multidimensional price bars? Are "yellow" clusters of 3D bars able to predict trend reversals? What does multidimensional trading look like?

Neural networks made easy (Part 13): Batch Normalization

In the previous article, we started considering methods aimed at improving neural network training quality. In this article, we will continue this topic and will consider another approach — batch data normalization.

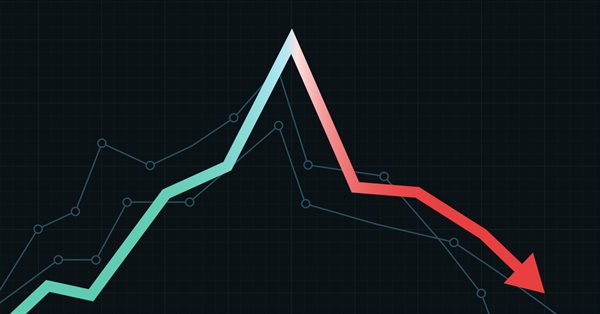

Data Science and Machine Learning (Part 04): Predicting Current Stock Market Crash

In this article, I will attempt to use our logistic model to predict a stock market crash based on the fundamentals of the US economy. We will focus on the NETFLIX and APPLE stocks. Using the previous market crashes of 2019 and 2020, let's see how our model performs in the current doom and gloom.

Neural networks made easy (Part 30): Genetic algorithms

Today I want to introduce you to a slightly different learning method. We can say that it is borrowed from Darwin's theory of evolution. It is probably less controllable than the previously discussed methods but it allows training non-differentiable models.

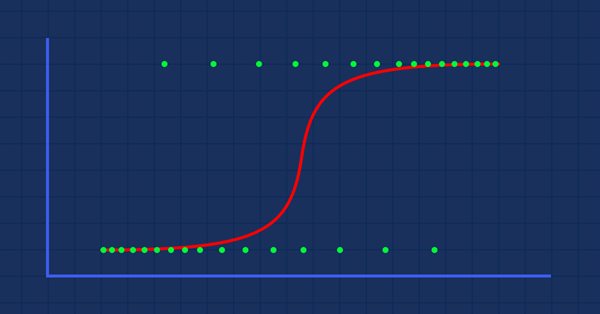

Data Science and Machine Learning (Part 02): Logistic Regression

Data classification is crucial for an algo trader and a programmer. In this article, we are going to focus on one of the classification algorithms, logistic regression, which can probably help us identify the Yeses and Nos, the Ups and Downs, the Buys and Sells.

Experiments with neural networks (Part 6): Perceptron as a self-sufficient tool for price forecast

The article provides an example of using a perceptron as a self-sufficient price prediction tool by showcasing general concepts and the simplest ready-made Expert Advisor followed by the results of its optimization.

Population optimization algorithms: Particle swarm (PSO)

In this article, I will consider the popular Particle Swarm Optimization (PSO) algorithm. Previously, we discussed such important characteristics of optimization algorithms as convergence, convergence rate, stability, scalability, as well as developed a test stand and considered the simplest RNG algorithm.

Regression models of the Scikit-learn Library and their export to ONNX

In this article, we will explore the application of regression models from the Scikit-learn package, attempt to convert them into ONNX format, and use the resultant models within MQL5 programs. Additionally, we will compare the accuracy of the original models with their ONNX versions for both float and double precision. Furthermore, we will examine the ONNX representation of regression models, aiming to provide a better understanding of their internal structure and operational principles.

Developing a trading robot in Python (Part 3): Implementing a model-based trading algorithm

We continue the series of articles on developing a trading robot in Python and MQL5. In this article, we will create a trading algorithm in Python.

Gradient boosting in transductive and active machine learning

In this article, we will consider active machine learning methods utilizing real data, as well as discuss their pros and cons. Perhaps you will find these methods useful and will include them in your arsenal of machine learning models. Transduction was introduced by Vladimir Vapnik, who is the co-inventor of the Support-Vector Machine (SVM).

Neural networks made easy (Part 14): Data clustering

It has been more than a year since I published my last article. This is quite a lot of time to revise ideas and develop new approaches. In this new article, I would like to depart from the supervised learning method used previously. This time we will dip into unsupervised learning algorithms. In particular, we will consider one of the clustering algorithms: k-means.

Neural networks made easy (Part 36): Relational Reinforcement Learning

In the reinforcement learning models we discussed in previous articles, we used different variants of convolutional networks that are able to identify various objects in the original data. The main advantage of convolutional networks is the ability to identify objects regardless of their location. At the same time, convolutional networks do not always perform well when objects are deformed or the data is noisy. These are the issues that the relational model can solve.

Price Action Analysis Toolkit Development (Part 36): Unlocking Direct Python Access to MetaTrader 5 Market Streams

Harness the full potential of your MetaTrader 5 terminal by leveraging Python’s data-science ecosystem and the official MetaTrader 5 client library. This article demonstrates how to authenticate and stream live tick and minute-bar data directly into Parquet storage, apply sophisticated feature engineering with Ta and Prophet, and train a time-aware Gradient Boosting model. We then deploy a lightweight Flask service to serve trade signals in real time. Whether you’re building a hybrid quant framework or enhancing your EA with machine learning, you’ll walk away with a robust, end-to-end pipeline for data-driven algorithmic trading.

MetaTrader 5 Machine Learning Blueprint (Part 2): Labeling Financial Data for Machine Learning

In this second installment of the MetaTrader 5 Machine Learning Blueprint series, you’ll discover why simple labels can lead your models astray—and how to apply advanced techniques like the Triple-Barrier and Trend-Scanning methods to define robust, risk-aware targets. Packed with practical Python examples that optimize these computationally intensive techniques, this hands-on guide shows you how to transform noisy market data into reliable labels that mirror real-world trading conditions.

Working with ONNX models in float16 and float8 formats

Data formats used to represent machine learning models play a crucial role in their effectiveness. In recent years, several new types of data have emerged, specifically designed for working with deep learning models. In this article, we will focus on two new data formats that have become widely adopted in modern models.

Creating volatility forecast indicator using Python

In this article, we will forecast future extreme volatility using binary classification. We will also develop an extreme volatility forecast indicator using machine learning.

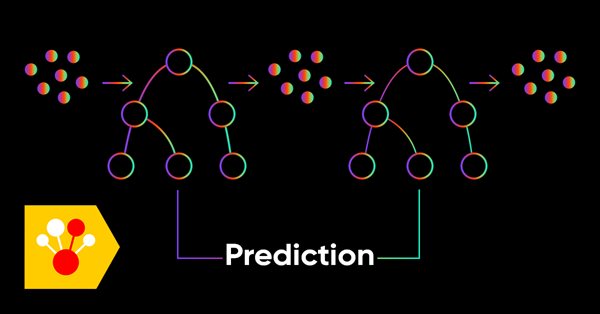

Data Science and Machine Learning (Part 05): Decision Trees

Decision trees imitate the way humans think to classify data. Let's see how to build trees and use them to classify and predict some data. The main goal of the decision tree algorithm is to split impure data into pure, or close to pure, nodes.

Data Science and Machine Learning (Part 10): Ridge Regression

Ridge regression is a simple technique to reduce model complexity and prevent over-fitting, which may result from simple linear regression.
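
As a rough illustration of how the penalty reduces model complexity, the sketch below fits a single slope through the origin, with and without an L2 penalty. It is a simplified one-feature case, not the article's implementation; the function name `fit_slope`, the parameter `ridge_lambda` and the data are assumptions made for this example. Minimizing `sum((y - w*x)**2) + ridge_lambda * w**2` gives `w = sum(x*y) / (sum(x*x) + ridge_lambda)`.

```python
def fit_slope(xs, ys, ridge_lambda=0.0):
    """Least-squares slope through the origin with an L2 penalty on the slope.

    With ridge_lambda=0.0 this is ordinary least squares; a positive value
    shrinks the slope toward zero.
    """
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + ridge_lambda)


# Hypothetical data, roughly following y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

ols_slope = fit_slope(xs, ys)                      # no penalty
ridge_slope = fit_slope(xs, ys, ridge_lambda=5.0)  # penalized, smaller slope
```

The penalized slope is always smaller in magnitude than the unpenalized one, and this shrinkage is what limits over-fitting.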

Population optimization algorithms: Ant Colony Optimization (ACO)

This time I will analyze the Ant Colony optimization algorithm. The algorithm is very interesting and complex. In the article, I make an attempt to create a new type of ACO.

Category Theory in MQL5 (Part 16): Functors with Multi-Layer Perceptrons

This article, the 16th in our series, continues with a look at Functors and how they can be implemented using artificial neural networks. We depart from the approach used so far in the series, which involved forecasting volatility, and try to implement a custom signal class for setting position entry and exit signals.

Metamodels in machine learning and trading: Original timing of trading orders

Metamodels in machine learning: automatic creation of trading systems with little or no human intervention. The model decides when and how to trade on its own.

Integrating AI model into already existing MQL5 trading strategy

This article focuses on incorporating a trained AI model (such as a recurrent neural network like LSTM, a reinforcement learning model, or another machine learning-based predictive model) into an existing MQL5 trading strategy.