Neural networks made easy (Part 34): Fully Parameterized Quantile Function

We continue studying distributed Q-learning algorithms. In previous articles, we have considered distributed and quantile Q-learning algorithms. In the first algorithm, we trained the probabilities of given ranges of values. In the second algorithm, we trained ranges with a given probability. In both of them, we used a priori knowledge of one distribution and trained another one. In this article, we will consider an algorithm which allows the model to train for both distributions.

Neural networks made easy (Part 80): Graph Transformer Generative Adversarial Model (GTGAN)

In this article, I will get acquainted with the GTGAN algorithm, which was introduced in January 2024 to solve complex problems of generation architectural layouts with graph constraints.

Using association rules in Forex data analysis

How to apply predictive rules of supermarket retail analytics to the real Forex market? How are purchases of cookies, milk and bread related to stock exchange transactions? The article discusses an innovative approach to algorithmic trading based on the use of association rules.

Pure implementation of RSA encryption in MQL5

MQL5 lacks built-in asymmetric cryptography, making secure data exchange over insecure channels like HTTP difficult. This article presents a pure MQL5 implementation of RSA using PKCS#1 v1.5 padding, enabling safe transmission of AES session keys and small data blocks without external libraries. This approach provides HTTPS-like security over standard HTTP and even more, it fills an important gap in secure communication for MQL5 applications.

Reimagining Classic Strategies (Part III): Forecasting Higher Highs And Lower Lows

In this series article, we will empirically analyze classic trading strategies to see if we can improve them using AI. In today's discussion, we tried to predict higher highs and lower lows using the Linear Discriminant Analysis model.

Data Science and ML (Part 27): Convolutional Neural Networks (CNNs) in MetaTrader 5 Trading Bots — Are They Worth It?

Convolutional Neural Networks (CNNs) are renowned for their prowess in detecting patterns in images and videos, with applications spanning diverse fields. In this article, we explore the potential of CNNs to identify valuable patterns in financial markets and generate effective trading signals for MetaTrader 5 trading bots. Let us discover how this deep machine learning technique can be leveraged for smarter trading decisions.

Brain Storm Optimization algorithm (Part II): Multimodality

In the second part of the article, we will move on to the practical implementation of the BSO algorithm, conduct tests on test functions and compare the efficiency of BSO with other optimization methods.

Utilizing CatBoost Machine Learning model as a Filter for Trend-Following Strategies

CatBoost is a powerful tree-based machine learning model that specializes in decision-making based on stationary features. Other tree-based models like XGBoost and Random Forest share similar traits in terms of their robustness, ability to handle complex patterns, and interpretability. These models have a wide range of uses, from feature analysis to risk management. In this article, we're going to walk through the procedure of utilizing a trained CatBoost model as a filter for a classic moving average cross trend-following strategy.

Population optimization algorithms: Bat algorithm (BA)

In this article, I will consider the Bat Algorithm (BA), which shows good convergence on smooth functions.

Data Science and ML (Part 31): Using CatBoost AI Models for Trading

CatBoost AI models have gained massive popularity recently among machine learning communities due to their predictive accuracy, efficiency, and robustness to scattered and difficult datasets. In this article, we are going to discuss in detail how to implement these types of models in an attempt to beat the forex market.

Atomic Orbital Search (AOS) algorithm: Modification

In the second part of the article, we will continue developing a modified version of the AOS (Atomic Orbital Search) algorithm focusing on specific operators to improve its efficiency and adaptability. After analyzing the fundamentals and mechanics of the algorithm, we will discuss ideas for improving its performance and the ability to analyze complex solution spaces, proposing new approaches to extend its functionality as an optimization tool.

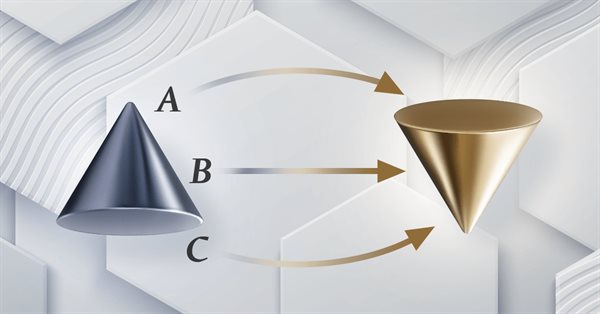

Category Theory in MQL5 (Part 18): Naturality Square

This article continues our series into category theory by introducing natural transformations, a key pillar within the subject. We look at the seemingly complex definition, then delve into examples and applications with this series’ ‘bread and butter’; volatility forecasting.

Reimagining Classic Strategies in Python: MA Crossovers

In this article, we revisit the classic moving average crossover strategy to assess its current effectiveness. Given the amount of time since its inception, we explore the potential enhancements that AI can bring to this traditional trading strategy. By incorporating AI techniques, we aim to leverage advanced predictive capabilities to potentially optimize trade entry and exit points, adapt to varying market conditions, and enhance overall performance compared to conventional approaches.

Data Science and ML (Part 29): Essential Tips for Selecting the Best Forex Data for AI Training Purposes

In this article, we dive deep into the crucial aspects of choosing the most relevant and high-quality Forex data to enhance the performance of AI models.

Integrate Your Own LLM into EA (Part 4): Training Your Own LLM with GPU

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

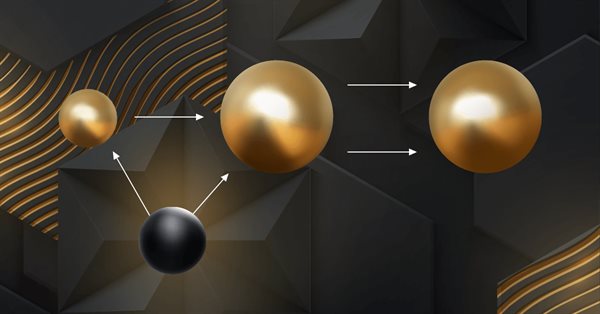

Billiards Optimization Algorithm (BOA)

The BOA method is inspired by the classic game of billiards and simulates the search for optimal solutions as a game with balls trying to fall into pockets representing the best results. In this article, we will consider the basics of BOA, its mathematical model, and its efficiency in solving various optimization problems.

Neural Networks in Trading: Practical Results of the TEMPO Method

We continue our acquaintance with the TEMPO method. In this article we will evaluate the actual effectiveness of the proposed approaches on real historical data.

Neural networks made easy (Part 68): Offline Preference-guided Policy Optimization

Since the first articles devoted to reinforcement learning, we have in one way or another touched upon 2 problems: exploring the environment and determining the reward function. Recent articles have been devoted to the problem of exploration in offline learning. In this article, I would like to introduce you to an algorithm whose authors completely eliminated the reward function.

Neural networks made easy (Part 60): Online Decision Transformer (ODT)

The last two articles were devoted to the Decision Transformer method, which models action sequences in the context of an autoregressive model of desired rewards. In this article, we will look at another optimization algorithm for this method.

Neural Networks in Trading: State Space Models

A large number of the models we have reviewed so far are based on the Transformer architecture. However, they may be inefficient when dealing with long sequences. And in this article, we will get acquainted with an alternative direction of time series forecasting based on state space models.

Neural Networks Made Easy (Part 84): Reversible Normalization (RevIN)

We already know that pre-processing of the input data plays a major role in the stability of model training. To process "raw" input data online, we often use a batch normalization layer. But sometimes we need a reverse procedure. In this article, we discuss one of the possible approaches to solving this problem.

MQL5 Wizard Techniques you should know (Part 64): Using Patterns of DeMarker and Envelope Channels with the White-Noise Kernel

The DeMarker Oscillator and the Envelopes' indicator are momentum and support/ resistance tools that can be paired when developing an Expert Advisor. We continue from our last article that introduced these pair of indicators by adding machine learning to the mix. We are using a recurrent neural network that uses the white-noise kernel to process vectorized signals from these two indicators. This is done in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Neural networks made easy (Part 39): Go-Explore, a different approach to exploration

We continue studying the environment in reinforcement learning models. And in this article we will look at another algorithm – Go-Explore, which allows you to effectively explore the environment at the model training stage.

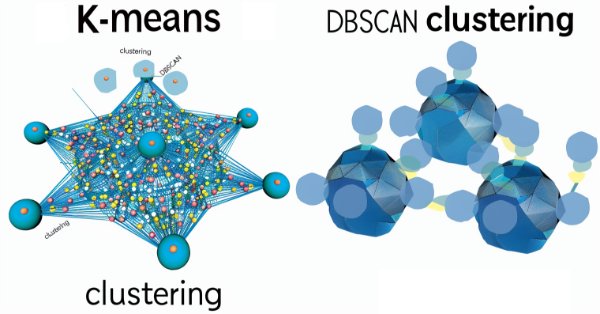

MQL5 Wizard Techniques you should know (Part 13): DBSCAN for Expert Signal Class

Density Based Spatial Clustering for Applications with Noise is an unsupervised form of grouping data that hardly requires any input parameters, save for just 2, which when compared to other approaches like k-means, is a boon. We delve into how this could be constructive for testing and eventually trading with Wizard assembled Expert Advisers

Reimagining Classic Strategies (Part VI): Multiple Time-Frame Analysis

In this series of articles, we revisit classic strategies to see if we can improve them using AI. In today's article, we will examine the popular strategy of multiple time-frame analysis to judge if the strategy would be enhanced with AI.

Neural Networks in Trading: Multi-Task Learning Based on the ResNeXt Model

A multi-task learning framework based on ResNeXt optimizes the analysis of financial data, taking into account its high dimensionality, nonlinearity, and time dependencies. The use of group convolution and specialized heads allows the model to effectively extract key features from the input data.

Neural Networks in Trading: Dual-Attention-Based Trend Prediction Model

We continue the discussion about the use of piecewise linear representation of time series, which was started in the previous article. Today we will see how to combine this method with other approaches to time series analysis to improve the price trend prediction quality.

Neural Networks in Trading: Unified Trajectory Generation Model (UniTraj)

Understanding agent behavior is important in many different areas, but most methods focus on just one of the tasks (understanding, noise removal, or prediction), which reduces their effectiveness in real-world scenarios. In this article, we will get acquainted with a model that can adapt to solving various problems.

Self Optimizing Expert Advisor With MQL5 And Python (Part IV): Stacking Models

Today, we will demonstrate how you can build AI-powered trading applications capable of learning from their own mistakes. We will demonstrate a technique known as stacking, whereby we use 2 models to make 1 prediction. The first model is typically a weaker learner, and the second model is typically a more powerful model that learns the residuals of our weaker learner. Our goal is to create an ensemble of models, to hopefully attain higher accuracy.

Data Science and Machine Learning (Part 20): Algorithmic Trading Insights, A Faceoff Between LDA and PCA in MQL5

Uncover the secrets behind these powerful dimensionality reduction techniques as we dissect their applications within the MQL5 trading environment. Delve into the nuances of Linear Discriminant Analysis (LDA) and Principal Component Analysis (PCA), gaining a profound understanding of their impact on strategy development and market analysis.

Turtle Shell Evolution Algorithm (TSEA)

This is a unique optimization algorithm inspired by the evolution of the turtle shell. The TSEA algorithm emulates the gradual formation of keratinized skin areas, which represent optimal solutions to a problem. The best solutions become "harder" and are located closer to the outer surface, while the less successful solutions remain "softer" and are located inside. The algorithm uses clustering of solutions by quality and distance, allowing to preserve less successful options and providing flexibility and adaptability.

Category Theory in MQL5 (Part 13): Calendar Events with Database Schemas

This article, that follows Category Theory implementation of Orders in MQL5, considers how database schemas can be incorporated for classification in MQL5. We take an introductory look at how database schema concepts could be married with category theory when identifying trade relevant text(string) information. Calendar events are the focus.

Functions for activating neurons during training: The key to fast convergence?

This article presents a study of the interaction of different activation functions with optimization algorithms in the context of neural network training. Particular attention is paid to the comparison of the classical ADAM and its population version when working with a wide range of activation functions, including the oscillating ACON and Snake functions. Using a minimalistic MLP (1-1-1) architecture and a single training example, the influence of activation functions on the optimization is isolated from other factors. The article proposes an approach to manage network weights through the boundaries of activation functions and a weight reflection mechanism, which allows avoiding problems with saturation and stagnation in training.

Neural networks made easy (Part 46): Goal-conditioned reinforcement learning (GCRL)

In this article, we will have a look at yet another reinforcement learning approach. It is called goal-conditioned reinforcement learning (GCRL). In this approach, an agent is trained to achieve different goals in specific scenarios.

Integrate Your Own LLM into EA (Part 3): Training Your Own LLM with CPU

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Neural networks made easy (Part 78): Decoder-free Object Detector with Transformer (DFFT)

In this article, I propose to look at the issue of building a trading strategy from a different angle. We will not predict future price movements, but will try to build a trading system based on the analysis of historical data.

Archery Algorithm (AA)

The article takes a detailed look at the archery-inspired optimization algorithm, with an emphasis on using the roulette method as a mechanism for selecting promising areas for "arrows". The method allows evaluating the quality of solutions and selecting the most promising positions for further study.

Category Theory in MQL5 (Part 5): Equalizers

Category Theory is a diverse and expanding branch of Mathematics which is only recently getting some coverage in the MQL5 community. These series of articles look to explore and examine some of its concepts & axioms with the overall goal of establishing an open library that provides insight while also hopefully furthering the use of this remarkable field in Traders' strategy development.

Population optimization algorithms: Binary Genetic Algorithm (BGA). Part II

In this article, we will look at the binary genetic algorithm (BGA), which models the natural processes that occur in the genetic material of living things in nature.

Category Theory in MQL5 (Part 22): A different look at Moving Averages

In this article we attempt to simplify our illustration of concepts covered in these series by dwelling on just one indicator, the most common and probably the easiest to understand. The moving average. In doing so we consider significance and possible applications of vertical natural transformations.