Neural Networks: From Theory to Practice

By now, every trader has probably heard of neural networks and how cool it is to use them. Many believe that those who can work with neural networks are some kind of superhuman. In this article, I will try to explain neural network architecture, describe its applications and show examples of practical use.

Neural Networks Made Easy

Artificial intelligence is often associated with something fantastically complex and incomprehensible. At the same time, artificial intelligence is increasingly mentioned in everyday life. News about achievements related to neural networks often appears in the media. The purpose of this article is to show that anyone can easily create a neural network and apply AI achievements in trading.

Third Generation Neural Networks: Deep Networks

This article is dedicated to a new and promising direction in machine learning: deep learning or, to be precise, deep neural networks. It begins with a brief review of second generation neural networks, the architecture of their connections and main types, their learning methods and rules, and their main disadvantages, followed by the history of third generation neural network development, their main types, peculiarities and training methods. Practical experiments are conducted on building and training a deep neural network initialized with the weights of a stacked autoencoder, using real data. All the stages from selecting input data to metric derivation are discussed in detail. The last part of the article contains a software implementation of a deep neural network in an Expert Advisor with a built-in indicator based on MQL4/R.

Neural network: Self-optimizing Expert Advisor

Is it possible to develop an Expert Advisor that optimizes its position opening and closing conditions at regular intervals according to the code commands? What happens if we implement a neural network (multilayer perceptron) as a module that analyzes history and provides a strategy? We can make the EA optimize a neural network monthly (weekly, daily or hourly) and continue its work afterwards. Thus, we can develop a self-optimizing EA.

Programming a Deep Neural Network from Scratch using MQL Language

This article aims to teach the reader how to make a Deep Neural Network from scratch using the MQL4/5 language.

Deep Neural Networks (Part V). Bayesian optimization of DNN hyperparameters

The article considers the possibility of applying Bayesian optimization to the hyperparameters of deep neural networks obtained by various training variants. The classification quality of a DNN with the optimal hyperparameters in different training variants is compared. The depth of effectiveness of the optimal DNN hyperparameters is checked in forward tests. Possible directions for improving the classification quality are determined.

Machine Learning: How Support Vector Machines can be used in Trading

Support Vector Machines have long been used in fields such as bioinformatics and applied mathematics to assess complex data sets and extract useful patterns that can be used to classify data. This article looks at what a support vector machine is, how it works and why it can be so useful in extracting complex patterns. We then investigate how support vector machines can be applied to the market and potentially used to advise on trades. Using the Support Vector Machine Learning Tool, the article provides worked examples that allow readers to experiment with their own trading.

Evaluation and selection of variables for machine learning models

This article focuses on the specifics of selecting, preprocessing and evaluating the input variables (predictors) used in machine learning models. New approaches to in-depth predictor analysis, and their influence on possible model overfitting, will be considered. The overall result of using models largely depends on the result of this stage. We will analyze two packages offering new and original approaches to the selection of predictors.

Deep Neural Networks (Part I). Preparing Data

This series of articles continues exploring deep neural networks (DNN), which are used in many application areas including trading. Here, new aspects of the topic are explored, and new methods and ideas are tested in practical experiments. The first article of the series is dedicated to preparing data for DNN.

Deep Neural Networks (Part VIII). Increasing the classification quality of bagging ensembles

The article considers three methods which can be used to increase the classification quality of bagging ensembles, and their efficiency is estimated. The effects of optimization of the ELM neural network hyperparameters and postprocessing parameters are evaluated.

Deep Neural Networks (Part IV). Creating, training and testing a model of neural network

This article considers new capabilities of the darch package (v.0.12.0). It describes training deep neural networks with different data types, different structures and different training sequences. Training results are included.

Deep Neural Networks (Part VII). Ensemble of neural networks: stacking

We continue to build ensembles. This time, the bagging ensemble created earlier will be supplemented with a trainable combiner — a deep neural network. One neural network combines the 7 best ensemble outputs after pruning. The second one takes all 500 outputs of the ensemble as input, prunes and combines them. The neural networks will be built using the keras/TensorFlow package for Python. The features of the package will be briefly considered. Testing will be performed and the classification quality of bagging and stacking ensembles will be compared.

Machine learning in Grid and Martingale trading systems. Would you bet on it?

This article describes a machine learning technique applied to grid and martingale trading. Surprisingly, this approach has little to no coverage on the internet. After reading the article, you will be able to create your own trading bots.

How to master Machine Learning

Check out this selection of useful materials which can assist traders in improving their algorithmic trading knowledge. The era of simple algorithms is passing, and it is becoming harder to succeed without the use of Machine Learning techniques and Neural Networks.

Random Forests Predict Trends

This article considers using the Rattle package to automatically search for patterns that predict long and short positions of currency pairs on Forex. This article can be useful for both novice and experienced traders.

Deep Neural Networks (Part VI). Ensemble of neural network classifiers: bagging

The article discusses methods for building and training ensembles of neural networks with a bagging structure. It also identifies the specifics of hyperparameter optimization for the individual neural network classifiers that make up the ensemble. The quality of the optimized neural network obtained in the previous article of the series is compared with the quality of the created ensemble of neural networks. Possibilities for further improving the ensemble's classification quality are considered.

Practical application of neural networks in trading. It's time to practice

The article provides a description and instructions for the practical use of neural network modules on the MATLAB platform. It also covers the main aspects of creating a trading system using the neural network module. To present the whole system within one article, I had to modify it so that several neural network module functions are combined in one program.

How to use ONNX models in MQL5

ONNX (Open Neural Network Exchange) is an open format built to represent machine learning models. In this article, we will consider how to create a CNN-LSTM model to forecast financial time series. We will also show how to use the created ONNX model in an MQL5 Expert Advisor.

Introduction to MQL5 (Part 7): Beginner's Guide to Building Expert Advisors and Utilizing AI-Generated Code in MQL5

Discover the ultimate beginner's guide to building Expert Advisors (EAs) with MQL5 in our comprehensive article. Learn step-by-step how to construct EAs using pseudocode and harness the power of AI-generated code. Whether you're new to algorithmic trading or seeking to enhance your skills, this guide provides a clear path to creating effective EAs.

Neural networks made easy (Part 7): Adaptive optimization methods

In previous articles, we used stochastic gradient descent to train a neural network with the same learning rate for all neurons in the network. In this article, I propose to look at adaptive learning methods, which allow the learning rate to change for each neuron. We will also consider the pros and cons of this approach.
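To make the idea of a per-parameter learning rate concrete, here is a minimal sketch of one well-known adaptive method, AdaGrad. It is an illustration only: the blurb does not say which methods the article covers, and the function name `adagrad_step` and its parameters (`base_lr`, `eps`) are hypothetical.

```python
import math


def adagrad_step(weights, grads, accum, base_lr=0.1, eps=1e-8):
    """Apply one AdaGrad update in place (illustrative sketch).

    weights, grads and accum are equal-length lists of floats.
    accum holds the running sum of squared gradients per weight,
    so each weight ends up with its own effective learning rate.
    """
    for i, g in enumerate(grads):
        accum[i] += g * g
        # A large gradient history shrinks the step for that weight only.
        effective_lr = base_lr / (math.sqrt(accum[i]) + eps)
        weights[i] -= effective_lr * g
    return weights, accum


# Two weights with very different gradient magnitudes.
weights = [1.0, 1.0]
accum = [0.0, 0.0]
adagrad_step(weights, grads=[10.0, 0.1], accum=accum)
```

With plain stochastic gradient descent and a single learning rate, the first weight would move 100 times further than the second. Here both weights move by roughly the same amount on the first step, because each gradient is scaled by its own history.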

Neural networks made easy (Part 2): Network training and testing

In this second article, we will continue to study neural networks and will consider an example of using our created CNet class in Expert Advisors. We will work with two neural network models, which show similar results both in terms of training time and prediction accuracy.

Neural networks made easy (Part 4): Recurrent networks

We continue studying the world of neural networks. In this article, we will consider another type of neural network: recurrent networks. This type is proposed for use with time series, which are represented in the MetaTrader 5 trading platform by price charts.

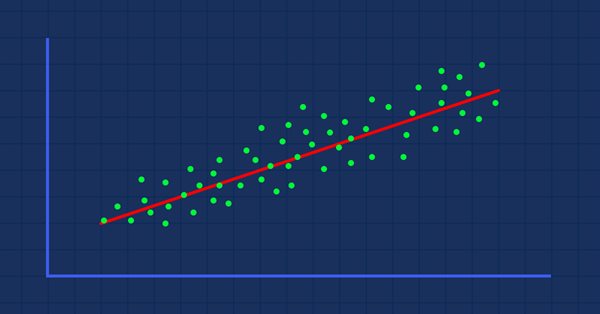

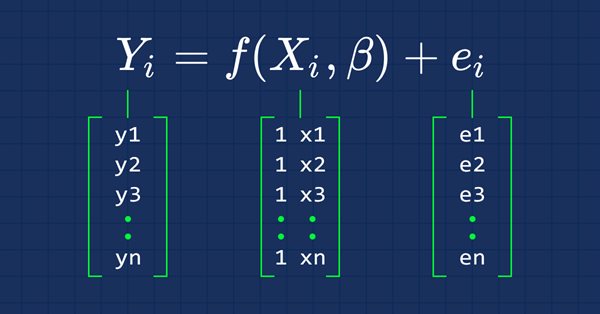

Data Science and Machine Learning (Part 01): Linear Regression

It's time for us as traders to train our systems and ourselves to make decisions based on what the numbers say, not on what our eyes see or what our guts make us believe. This is where the world is heading, so let us move perpendicular to the direction of the wave.

Practical application of neural networks in trading (Part 2). Computer vision

Computer vision allows neural networks to be trained on the visual representation of the price chart and indicators. This method makes it possible to work with the whole set of technical indicators, since there is no need to feed them into the neural network in numerical form.

Deep Learning Forecast and ordering with Python and MetaTrader5 python package and ONNX model file

The project involves using Python for deep learning-based forecasting in financial markets. We will explore the intricacies of testing the model's performance using key metrics such as Mean Absolute Error (MAE), Mean Squared Error (MSE), and R-squared (R2), and we will learn how to wrap everything into an executable. We will also make an ONNX model file with its EA.
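For readers unfamiliar with the three metrics named above, the following sketch computes them from their standard definitions in plain Python. It is not taken from the article; the function name `regression_metrics` is hypothetical.

```python
def regression_metrics(y_true, y_pred):
    """Return (MAE, MSE, R2) for two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n   # mean absolute error
    mse = sum(e * e for e in errors) / n    # mean squared error

    # R2 compares the model's squared error with that of always
    # predicting the mean of the observed values.
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return mae, mse, r2


mae, mse, r2 = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0])
```

For this toy input, only the last prediction is off by one, which gives an MAE of 0.25, an MSE of 0.25 and an R2 of about 0.8. Lower MAE and MSE are better, while R2 closer to 1 means the model explains more of the variance in the observed values.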

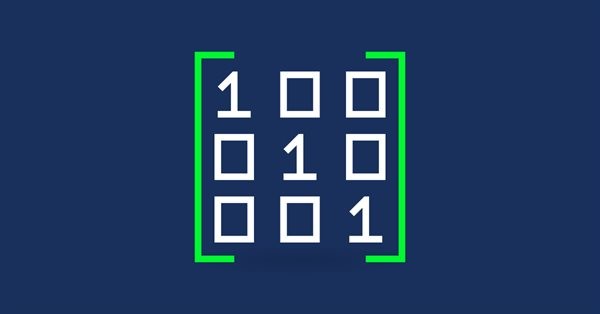

Matrices and vectors in MQL5

By using the special data types 'matrix' and 'vector', it is possible to create code which is very close to mathematical notation. With these types, you can avoid the need to create nested loops or to keep track of correct array indexing in calculations. Therefore, the use of matrix and vector methods increases reliability and speed when developing complex programs.

Multilayer perceptron and backpropagation algorithm (Part II): Implementation in Python and integration with MQL5

There is a Python package available for developing integrations with MQL, which opens up a plethora of opportunities such as data exploration and the creation and use of machine learning models. The built-in Python integration in MQL5 enables the creation of various solutions, from simple linear regression to deep learning models. Let's take a look at how to set up and prepare a development environment and how to use some of the machine learning libraries.

Neural Networks Cheap and Cheerful - Link NeuroPro with MetaTrader 5

If specific neural network programs for trading seem expensive and complex or, on the contrary, too simple, try NeuroPro. It is free and contains the optimal set of functionalities for amateurs. This article will tell you how to use it in conjunction with MetaTrader 5.

Neural networks made easy (Part 10): Multi-Head Attention

We have previously considered the mechanism of self-attention in neural networks. In practice, modern neural network architectures use several parallel self-attention threads to find various dependencies between the elements of a sequence. Let us consider the implementation of such an approach and evaluate its impact on the overall network performance.

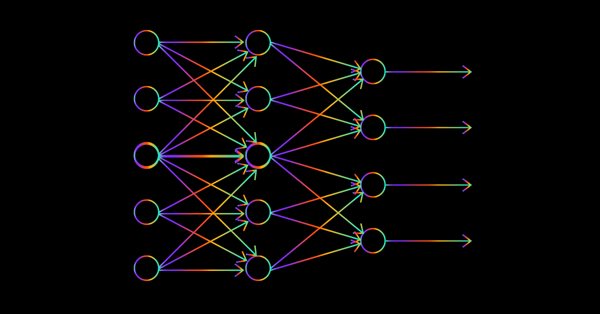

Data Science and Machine Learning — Neural Network (Part 01): Feed Forward Neural Network demystified

Many people love them, but few understand the full workings behind neural networks. In this article, I will try to explain in plain English everything that goes on behind the closed doors of a feed-forward multi-layer perceptron.

Deep Neural Networks (Part II). Working out and selecting predictors

The second article of the series about deep neural networks will consider the transformation and choice of predictors during the process of preparing data for training a model.

CatBoost machine learning algorithm from Yandex with no Python or R knowledge required

The article provides the code and the description of the main stages of the machine learning process using a specific example. To obtain the model, you do not need Python or R knowledge. Furthermore, basic MQL5 knowledge is enough — this is exactly my level. Therefore, I hope that the article will serve as a good tutorial for a broad audience, assisting those interested in evaluating machine learning capabilities and in implementing them in their programs.

Data Science and Machine Learning — Neural Network (Part 02): Feed forward NN Architectures Design

There are a few minor things to cover on the feed-forward neural network before we are through, the design being one of them. Let's see how we can design and build a neural network that is flexible in its inputs, the number of hidden layers, and the number of nodes in each layer.

Neural networks made easy (Part 11): A take on GPT

Perhaps one of the most advanced models among currently existing language neural networks is GPT-3, the largest variant of which contains 175 billion parameters. Of course, we are not going to create such a monster on our home PCs. However, we can look at which architectural solutions can be used in our work and how we can benefit from them.

Neural Networks in Trading: A Multi-Agent Self-Adaptive Model (MASA)

I invite you to get acquainted with the Multi-Agent Self-Adaptive (MASA) framework, which combines reinforcement learning and adaptive strategies, providing a harmonious balance between profitability and risk management in turbulent market conditions.

Neural networks made easy (Part 12): Dropout

As the next step in studying neural networks, I suggest considering methods of improving convergence during neural network training. There are several such methods. In this article, we will consider one of them, called Dropout.
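As a rough illustration of what Dropout does, the sketch below applies the commonly used "inverted dropout" variant to a list of activations. This is an assumption about the general technique, not the article's implementation; the function name `dropout` and its parameters are hypothetical.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=None):
    """Randomly zero a share of activations (inverted dropout sketch).

    rate is the probability of dropping each activation. Surviving
    values are scaled by 1 / (1 - rate) so the expected sum stays the
    same, which lets inference use the activations unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# During training, roughly half of the values are zeroed and the rest doubled.
train_out = dropout([1.0] * 10, rate=0.5, rng=random.Random(0))
# During inference, the activations pass through untouched.
infer_out = dropout([1.0] * 10, rate=0.5, training=False)
```

Passing a seeded `random.Random` instance makes the mask reproducible, which is convenient when comparing training runs.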

Introduction to MQL5 (Part 1): A Beginner's Guide into Algorithmic Trading

Dive into the fascinating realm of algorithmic trading with our beginner-friendly guide to MQL5 programming. Discover the essentials of MQL5, the language powering MetaTrader 5, as we demystify the world of automated trading. From understanding the basics to taking your first steps in coding, this article is your key to unlocking the potential of algorithmic trading even without a programming background. Join us on a journey where simplicity meets sophistication in the exciting universe of MQL5.

Neural networks made easy (Part 8): Attention mechanisms

In previous articles, we have already tested various options for organizing neural networks. We also considered convolutional networks borrowed from image processing algorithms. In this article, I suggest considering Attention Mechanisms, the appearance of which gave impetus to the development of language models.

Gradient Boosting (CatBoost) in the development of trading systems. A naive approach

The article covers training the CatBoost classifier in Python and exporting the model to MQL5, as well as parsing the model parameters and a custom strategy tester. The Python language and the MetaTrader 5 library are used for preparing the data and for training the model.

Data Science and Machine Learning (Part 03): Matrix Regressions

This time our models are built with matrices, which gives us flexibility and lets us make powerful models that can handle not just five independent variables but as many as we like, as long as we stay within the computational limits of a computer. This article is going to be an interesting read, that's for sure.