Neural Networks in Trading: Parameter-Efficient Transformer with Segmented Attention (Final Part)

In the previous work, we discussed the theoretical aspects of the PSformer framework, which includes two major innovations in the classical Transformer architecture: the Parameter Shared (PS) mechanism and attention to spatio-temporal segments (SegAtt). In this article, we continue the work we started on implementing the proposed approaches using MQL5.

Creating Time Series Predictions using LSTM Neural Networks: Normalizing Price and Tokenizing Time

This article outlines a simple strategy for normalizing the market data using the daily range and training a neural network to enhance market predictions. The developed models may be used in conjunction with an existing technical analysis frameworks or on a standalone basis to assist in predicting the overall market direction. The framework outlined in this article may be further refined by any technical analyst to develop models suitable for both manual and automated trading strategies.

Category Theory (Part 9): Monoid-Actions

This article continues the series on category theory implementation in MQL5. Here we continue monoid-actions as a means of transforming monoids, covered in the previous article, leading to increased applications.

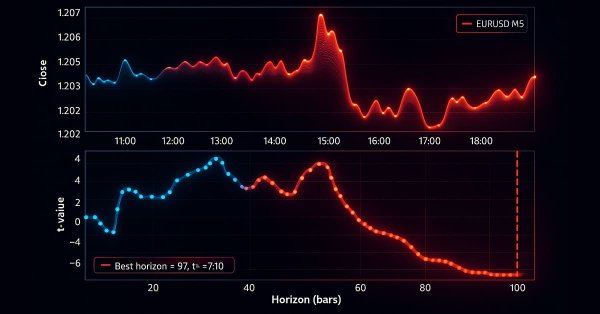

MetaTrader 5 Machine Learning Blueprint (Part 3): Trend-Scanning Labeling Method

We have built a robust feature engineering pipeline using proper tick-based bars to eliminate data leakage and solved the critical problem of labeling with meta-labeled triple-barrier signals. This installment covers the advanced labeling technique, trend-scanning, for adaptive horizons. After covering the theory, an example shows how trend-scanning labels can be used with meta-labeling to improve on the classic moving average crossover strategy.

High frequency arbitrage trading system in Python using MetaTrader 5

In this article, we will create an arbitration system that remains legal in the eyes of brokers, creates thousands of synthetic prices on the Forex market, analyzes them, and successfully trades for profit.

Build Self Optimizing Expert Advisors in MQL5 (Part 6): Stop Out Prevention

Join us in our discussion today as we look for an algorithmic procedure to minimize the total number of times we get stopped out of winning trades. The problem we faced is significantly challenging, and most solutions given in community discussions lack set and fixed rules. Our algorithmic approach to solving the problem increased the profitability of our trades and reduced our average loss per trade. However, there are further advancements to be made to completely filter out all trades that will be stopped out, our solution is a good first step for anyone to try.

Neural Networks in Trading: An Agent with Layered Memory

Layered memory approaches that mimic human cognitive processes enable the processing of complex financial data and adaptation to new signals, thereby improving the effectiveness of investment decisions in dynamic markets.

Neural networks made easy (Part 31): Evolutionary algorithms

In the previous article, we started exploring non-gradient optimization methods. We got acquainted with the genetic algorithm. Today, we will continue this topic and will consider another class of evolutionary algorithms.

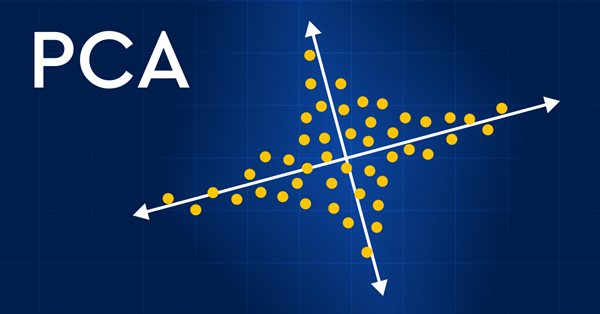

Data Science and Machine Learning (Part 13): Improve your financial market analysis with Principal Component Analysis (PCA)

Revolutionize your financial market analysis with Principal Component Analysis (PCA)! Discover how this powerful technique can unlock hidden patterns in your data, uncover latent market trends, and optimize your investment strategies. In this article, we explore how PCA can provide a new lens for analyzing complex financial data, revealing insights that would be missed by traditional approaches. Find out how applying PCA to financial market data can give you a competitive edge and help you stay ahead of the curve

Introduction to MQL5 (Part 6): A Beginner's Guide to Array Functions in MQL5 (II)

Embark on the next phase of our MQL5 journey. In this insightful and beginner-friendly article, we'll look into the remaining array functions, demystifying complex concepts to empower you to craft efficient trading strategies. We’ll be discussing ArrayPrint, ArrayInsert, ArraySize, ArrayRange, ArrarRemove, ArraySwap, ArrayReverse, and ArraySort. Elevate your algorithmic trading expertise with these essential array functions. Join us on the path to MQL5 mastery!

Building Your First Glass-box Model Using Python And MQL5

Machine learning models are difficult to interpret and understanding why our models deviate from our expectations is critical if we want to gain any value from using such advanced techniques. Without comprehensive insight into the inner workings of our model, we might fail to spot bugs that are corrupting our model's performance, we may waste time over engineering features that aren't predictive and in the long run we risk underutilizing the power of these models. Fortunately, there is a sophisticated and well maintained all in one solution that allows us to see exactly what our model is doing underneath the hood.

Data Science and Machine Learning (Part 21): Unlocking Neural Networks, Optimization algorithms demystified

Dive into the heart of neural networks as we demystify the optimization algorithms used inside the neural network. In this article, discover the key techniques that unlock the full potential of neural networks, propelling your models to new heights of accuracy and efficiency.

Neural networks made easy (Part 49): Soft Actor-Critic

We continue our discussion of reinforcement learning algorithms for solving continuous action space problems. In this article, I will present the Soft Actor-Critic (SAC) algorithm. The main advantage of SAC is the ability to find optimal policies that not only maximize the expected reward, but also have maximum entropy (diversity) of actions.

Population optimization algorithms: Gravitational Search Algorithm (GSA)

GSA is a population optimization algorithm inspired by inanimate nature. Thanks to Newton's law of gravity implemented in the algorithm, the high reliability of modeling the interaction of physical bodies allows us to observe the enchanting dance of planetary systems and galactic clusters. In this article, I will consider one of the most interesting and original optimization algorithms. The simulator of the space objects movement is provided as well.

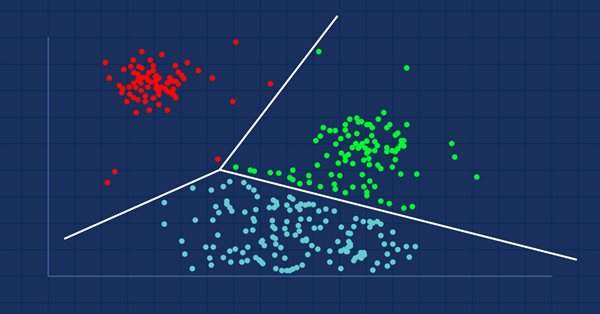

MQL5 Wizard Techniques you should know (Part 09): Pairing K-Means Clustering with Fractal Waves

K-Means clustering takes the approach to grouping data points as a process that’s initially focused on the macro view of a data set that uses random generated cluster centroids before zooming in and adjusting these centroids to accurately represent the data set. We will look at this and exploit a few of its use cases.

Python, ONNX and MetaTrader 5: Creating a RandomForest model with RobustScaler and PolynomialFeatures data preprocessing

In this article, we will create a random forest model in Python, train the model, and save it as an ONNX pipeline with data preprocessing. After that we will use the model in the MetaTrader 5 terminal.

Data Science and Machine Learning (Part 23): Why LightGBM and XGBoost outperform a lot of AI models?

These advanced gradient-boosted decision tree techniques offer superior performance and flexibility, making them ideal for financial modeling and algorithmic trading. Learn how to leverage these tools to optimize your trading strategies, improve predictive accuracy, and gain a competitive edge in the financial markets.

Population optimization algorithms: Fish School Search (FSS)

Fish School Search (FSS) is a new optimization algorithm inspired by the behavior of fish in a school, most of which (up to 80%) swim in an organized community of relatives. It has been proven that fish aggregations play an important role in the efficiency of foraging and protection from predators.

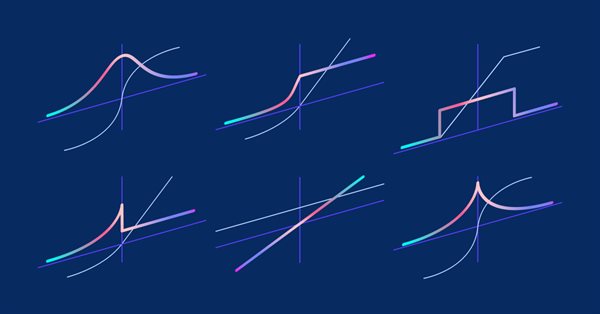

Matrices and vectors in MQL5: Activation functions

Here we will describe only one of the aspects of machine learning - activation functions. In artificial neural networks, a neuron activation function calculates an output signal value based on the values of an input signal or a set of input signals. We will delve into the inner workings of the process.

Reimagining Classic Strategies (Part XI): Moving Average Cross Over (II)

The moving averages and the stochastic oscillator could be used to generate trend following trading signals. However, these signals will only be observed after the price action has occurred. We can effectively overcome this inherent lag in technical indicators using AI. This article will teach you how to create a fully autonomous AI-powered Expert Advisor in a manner that can improve any of your existing trading strategies. Even the oldest trading strategy possible can be improved.

Neural Networks in Trading: A Multi-Agent System with Conceptual Reinforcement (Final Part)

We continue to implement the approaches proposed by the authors of the FinCon framework. FinCon is a multi-agent system based on Large Language Models (LLMs). Today, we will implement the necessary modules and conduct comprehensive testing of the model on real historical data.

Data Science and ML (Part 37): Using Candlestick patterns and AI to beat the market

Candlestick patterns help traders understand market psychology and identify trends in financial markets, they enable more informed trading decisions that can lead to better outcomes. In this article, we will explore how to use candlestick patterns with AI models to achieve optimal trading performance.

Introduction to MQL5 (Part 3): Mastering the Core Elements of MQL5

Explore the fundamentals of MQL5 programming in this beginner-friendly article, where we demystify arrays, custom functions, preprocessors, and event handling, all explained with clarity making every line of code accessible. Join us in unlocking the power of MQL5 with a unique approach that ensures understanding at every step. This article sets the foundation for mastering MQL5, emphasizing the explanation of each line of code, and providing a distinct and enriching learning experience.

Developing Trend Trading Strategies Using Machine Learning

This study introduces a novel methodology for the development of trend-following trading strategies. This section describes the process of annotating training data and using it to train classifiers. This process yields fully operational trading systems designed to run on MetaTrader 5.

Data Science and Machine Learning (Part 15): SVM, A Must-Have Tool in Every Trader's Toolbox

Discover the indispensable role of Support Vector Machines (SVM) in shaping the future of trading. This comprehensive guide explores how SVM can elevate your trading strategies, enhance decision-making, and unlock new opportunities in the financial markets. Dive into the world of SVM with real-world applications, step-by-step tutorials, and expert insights. Equip yourself with the essential tool that can help you navigate the complexities of modern trading. Elevate your trading game with SVM—a must-have for every trader's toolbox.

Сode Lock Algorithm (CLA)

In this article, we will rethink code locks, transforming them from security mechanisms into tools for solving complex optimization problems. Discover the world of code locks viewed not as simple security devices, but as inspiration for a new approach to optimization. We will create a whole population of "locks", where each lock represents a unique solution to the problem. We will then develop an algorithm that will "pick" these locks and find optimal solutions in a variety of areas, from machine learning to trading systems development.

Trend Prediction with LSTM for Trend-Following Strategies

Long Short-Term Memory (LSTM) is a type of recurrent neural network (RNN) designed to model sequential data by effectively capturing long-term dependencies and addressing the vanishing gradient problem. In this article, we will explore how to utilize LSTM to predict future trends, enhancing the performance of trend-following strategies. The article will cover the introduction of key concepts and the motivation behind development, fetching data from MetaTrader 5, using that data to train the model in Python, integrating the machine learning model into MQL5, and reflecting on the results and future aspirations based on statistical backtesting.

Neural networks made easy (Part 33): Quantile regression in distributed Q-learning

We continue studying distributed Q-learning. Today we will look at this approach from the other side. We will consider the possibility of using quantile regression to solve price prediction tasks.

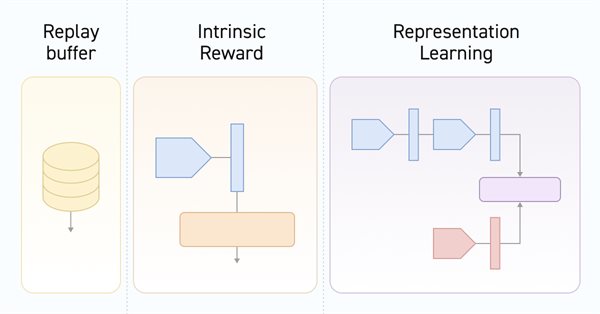

Neural networks made easy (Part 55): Contrastive intrinsic control (CIC)

Contrastive training is an unsupervised method of training representation. Its goal is to train a model to highlight similarities and differences in data sets. In this article, we will talk about using contrastive training approaches to explore different Actor skills.

Gain An Edge Over Any Market (Part V): FRED EURUSD Alternative Data

In today’s discussion, we used alternative Daily data from the St. Louis Federal Reserve on the Broad US-Dollar Index and a collection of other macroeconomic indicators to predict the EURUSD future exchange rate. Unfortunately, while the data appears to have almost perfect correlation, we failed to realize any material gains in our model accuracy, possibly suggesting to us that investors may be better off using ordinary market quotes instead.

Experiments with neural networks (Part 3): Practical application

In this article series, I use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders. MetaTrader 5 is approached as a self-sufficient tool for using neural networks in trading.

MetaTrader 5 Machine Learning Blueprint (Part 5): Sequential Bootstrapping—Debiasing Labels, Improving Returns

Sequential bootstrapping reshapes bootstrap sampling for financial machine learning by actively avoiding temporally overlapping labels, producing more independent training samples, sharper uncertainty estimates, and more robust trading models. This practical guide explains the intuition, shows the algorithm step‑by‑step, provides optimized code patterns for large datasets, and demonstrates measurable performance gains through simulations and real backtests.

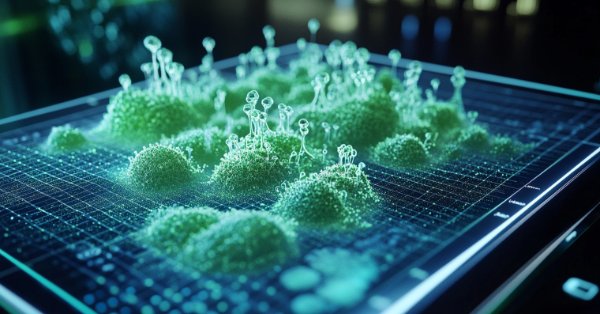

Artificial Algae Algorithm (AAA)

The article considers the Artificial Algae Algorithm (AAA) based on biological processes characteristic of microalgae. The algorithm includes spiral motion, evolutionary process and adaptation, which allows it to solve optimization problems. The article provides an in-depth analysis of the working principles of AAA and its potential in mathematical modeling, highlighting the connection between nature and algorithmic solutions.

Example of new Indicator and Conditional LSTM

This article explores the development of an Expert Advisor (EA) for automated trading that combines technical analysis with deep learning predictions.

Data Science and Machine Learning (Part 08): K-Means Clustering in plain MQL5

Data mining is crucial to a data scientist and a trader because very often, the data isn't as straightforward as we think it is. The human eye can not understand the minor underlying pattern and relationships in the dataset, maybe the K-means algorithm can help us with that. Let's find out...

Filtering and feature extraction in the frequency domain

In this article we explore the application of digital filters on time series represented in the frequency domain so as to extract unique features that may be useful to prediction models.

Data Science and ML (Part 28): Predicting Multiple Futures for EURUSD, Using AI

It is a common practice for many Artificial Intelligence models to predict a single future value. However, in this article, we will delve into the powerful technique of using machine learning models to predict multiple future values. This approach, known as multistep forecasting, allows us to predict not only tomorrow's closing price but also the day after tomorrow's and beyond. By mastering multistep forecasting, traders and data scientists can gain deeper insights and make more informed decisions, significantly enhancing their predictive capabilities and strategic planning.

Training a multilayer perceptron using the Levenberg-Marquardt algorithm

The article presents an implementation of the Levenberg-Marquardt algorithm for training feedforward neural networks. A comparative analysis of performance with algorithms from the scikit-learn Python library has been conducted. Simpler learning methods, such as gradient descent, gradient descent with momentum, and stochastic gradient descent are preliminarily discussed.

Volumetric neural network analysis as a key to future trends

The article explores the possibility of improving price forecasting based on trading volume analysis by integrating technical analysis principles with LSTM neural network architecture. Particular attention is paid to the detection and interpretation of anomalous volumes, the use of clustering and the creation of features based on volumes and their definition in the context of machine learning.

Neural networks made easy (Part 37): Sparse Attention

In the previous article, we discussed relational models which use attention mechanisms in their architecture. One of the specific features of these models is the intensive utilization of computing resources. In this article, we will consider one of the mechanisms for reducing the number of computational operations inside the Self-Attention block. This will increase the general performance of the model.