Pipelines in MQL5

In this piece, we look at a key data preparation step for machine learning that is gaining rapid significance. Data Preprocessing Pipelines. These in essence are a streamlined sequence of data transformation steps that prepare raw data before it is fed to a model. As uninteresting as this may initially seem to the uninducted, this ‘data standardization’ not only saves on training time and execution costs, but it goes a long way in ensuring better generalization. In this article we are focusing on some SCIKIT-LEARN preprocessing functions, and while we are not exploiting the MQL5 Wizard, we will return to it in coming articles.

Quantum computing and trading: A fresh approach to price forecasts

The article describes an innovative approach to forecasting price movements in financial markets using quantum computing. The main focus is on the application of the Quantum Phase Estimation (QPE) algorithm to find prototypes of price patterns allowing traders to significantly speed up the market data analysis.

Neural Networks in Trading: A Hybrid Trading Framework with Predictive Coding (StockFormer)

In this article, we will discuss the hybrid trading system StockFormer, which combines predictive coding and reinforcement learning (RL) algorithms. The framework uses 3 Transformer branches with an integrated Diversified Multi-Head Attention (DMH-Attn) mechanism that improves on the vanilla attention module with a multi-headed Feed-Forward block, allowing it to capture diverse time series patterns across different subspaces.

Neural Networks in Trading: An Ensemble of Agents with Attention Mechanisms (Final Part)

In the previous article, we introduced the multi-agent adaptive framework MASAAT, which uses an ensemble of agents to perform cross-analysis of multimodal time series at different data scales. Today we will continue implementing the approaches of this framework in MQL5 and bring this work to a logical conclusion.

Dynamic mode decomposition applied to univariate time series in MQL5

Dynamic mode decomposition (DMD) is a technique usually applied to high-dimensional datasets. In this article, we demonstrate the application of DMD on univariate time series, showing its ability to characterize a series as well as make forecasts. In doing so, we will investigate MQL5's built-in implementation of dynamic mode decomposition, paying particular attention to the new matrix method, DynamicModeDecomposition().

Big Bang - Big Crunch (BBBC) algorithm

The article presents the Big Bang - Big Crunch method, which has two key phases: cyclic generation of random points and their compression to the optimal solution. This approach combines exploration and refinement, allowing us to gradually find better solutions and open up new optimization opportunities.

Neural Networks in Trading: An Ensemble of Agents with Attention Mechanisms (MASAAT)

We introduce the Multi-Agent Self-Adaptive Portfolio Optimization Framework (MASAAT), which combines attention mechanisms and time series analysis. MASAAT generates a set of agents that analyze price series and directional changes, enabling the identification of significant fluctuations in asset prices at different levels of detail.

Overcoming The Limitation of Machine Learning (Part 3): A Fresh Perspective on Irreducible Error

This article takes a fresh perspective on a hidden, geometric source of error that quietly shapes every prediction your models make. By rethinking how we measure and apply machine learning forecasts in trading, we reveal how this overlooked perspective can unlock sharper decisions, stronger returns, and a more intelligent way to work with models we thought we already understood.

Trend strength and direction indicator on 3D bars

We will consider a new approach to market trend analysis based on three-dimensional visualization and tensor analysis of the market microstructure.

Black Hole Algorithm (BHA)

The Black Hole Algorithm (BHA) uses the principles of black hole gravity to optimize solutions. In this article, we will look at how BHA attracts the best solutions while avoiding local extremes, and why this algorithm has become a powerful tool for solving complex problems. Learn how simple ideas can lead to impressive results in the world of optimization.

Neural Networks in Trading: A Multi-Agent Self-Adaptive Model (Final Part)

In the previous article, we introduced the multi-agent self-adaptive framework MASA, which combines reinforcement learning approaches and self-adaptive strategies, providing a harmonious balance between profitability and risk in turbulent market conditions. We have built the functionality of individual agents within this framework. In this article, we will continue the work we started, bringing it to its logical conclusion.

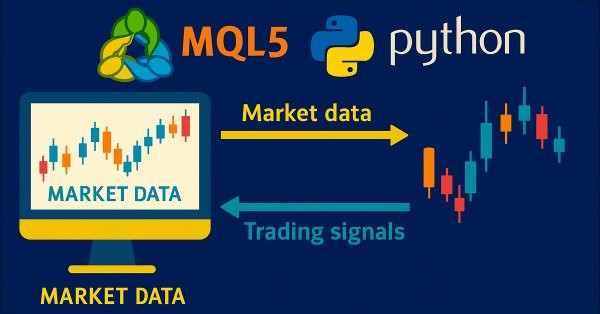

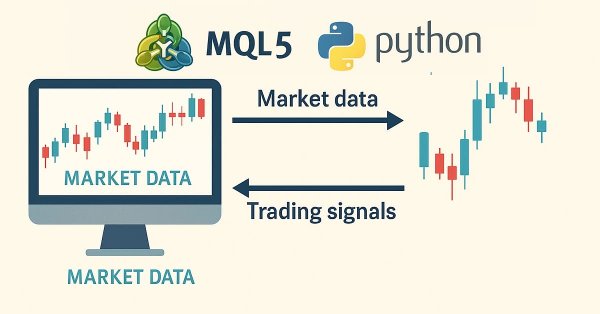

Multi-module trading robot in Python and MQL5 (Part I): Creating basic architecture and first modules

We are going to develop a modular trading system that combines Python for data analysis with MQL5 for trade execution. Four independent modules monitor different market aspects in parallel: volumes, arbitrage, economics and risks, and use RandomForest with 400 trees for analysis. Particular emphasis is placed on risk management, since even the most advanced trading algorithms are useless without proper risk management.

Analyzing binary code of prices on the exchange (Part II): Converting to BIP39 and writing GPT model

Continuing tries to decipher price movements... What about linguistic analysis of the "market dictionary" that we get by converting the binary price code to BIP39? In this article, we will delve into an innovative approach to exchange data analysis and consider how modern natural language processing techniques can be applied to the market language.

Neural Networks in Trading: A Multi-Agent Self-Adaptive Model (MASA)

I invite you to get acquainted with the Multi-Agent Self-Adaptive (MASA) framework, which combines reinforcement learning and adaptive strategies, providing a harmonious balance between profitability and risk management in turbulent market conditions.

Artificial Tribe Algorithm (ATA)

The article provides a detailed discussion of the key components and innovations of the ATA optimization algorithm, which is an evolutionary method with a unique dual behavior system that adapts depending on the situation. ATA combines individual and social learning while using crossover for explorations and migration to find solutions when stuck in local optima.

Analyzing binary code of prices on the exchange (Part I): A new look at technical analysis

This article presents an innovative approach to technical analysis based on converting price movements into binary code. The author demonstrates how various aspects of market behavior — from simple price movements to complex patterns — can be encoded in a sequence of zeros and ones.

MetaTrader 5 Machine Learning Blueprint (Part 2): Labeling Financial Data for Machine Learning

In this second installment of the MetaTrader 5 Machine Learning Blueprint series, you’ll discover why simple labels can lead your models astray—and how to apply advanced techniques like the Triple-Barrier and Trend-Scanning methods to define robust, risk-aware targets. Packed with practical Python examples that optimize these computationally intensive techniques, this hands-on guide shows you how to transform noisy market data into reliable labels that mirror real-world trading conditions.

MQL5 Wizard Techniques you should know (Part 79): Using Gator Oscillator and Accumulation/Distribution Oscillator with Supervised Learning

In the last piece, we concluded our look at the pairing of the gator oscillator and the accumulation/distribution oscillator when used in their typical setting of the raw signals they generate. These two indicators are complimentary as trend and volume indicators, respectively. We now follow up that piece, by examining the effect that supervised learning can have on enhancing some of the feature patterns we had reviewed. Our supervised learning approach is a CNN that engages with kernel regression and dot product similarity to size its kernels and channels. As always, we do this in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Self Optimizing Expert Advisors in MQL5 (Part 12): Building Linear Classifiers Using Matrix Factorization

This article explores the powerful role of matrix factorization in algorithmic trading, specifically within MQL5 applications. From regression models to multi-target classifiers, we walk through practical examples that demonstrate how easily these techniques can be integrated using built-in MQL5 functions. Whether you're predicting price direction or modeling indicator behavior, this guide lays a strong foundation for building intelligent trading systems using matrix methods.

Neural Networks in Trading: Parameter-Efficient Transformer with Segmented Attention (Final Part)

In the previous work, we discussed the theoretical aspects of the PSformer framework, which includes two major innovations in the classical Transformer architecture: the Parameter Shared (PS) mechanism and attention to spatio-temporal segments (SegAtt). In this article, we continue the work we started on implementing the proposed approaches using MQL5.

Price Action Analysis Toolkit Development (Part 36): Unlocking Direct Python Access to MetaTrader 5 Market Streams

Harness the full potential of your MetaTrader 5 terminal by leveraging Python’s data-science ecosystem and the official MetaTrader 5 client library. This article demonstrates how to authenticate and stream live tick and minute-bar data directly into Parquet storage, apply sophisticated feature engineering with Ta and Prophet, and train a time-aware Gradient Boosting model. We then deploy a lightweight Flask service to serve trade signals in real time. Whether you’re building a hybrid quant framework or enhancing your EA with machine learning, you’ll walk away with a robust, end-to-end pipeline for data-driven algorithmic trading.

Neural Networks in Trading: A Parameter-Efficient Transformer with Segmented Attention (PSformer)

This article introduces the new PSformer framework, which adapts the architecture of the vanilla Transformer to solving problems related to multivariate time series forecasting. The framework is based on two key innovations: the Parameter Sharing (PS) mechanism and the Segment Attention (SegAtt).

Integrating MQL5 with data processing packages (Part 5): Adaptive Learning and Flexibility

This part focuses on building a flexible, adaptive trading model trained on historical XAUUSD data, preparing it for ONNX export and potential integration into live trading systems.

Neural Networks in Trading: Enhancing Transformer Efficiency by Reducing Sharpness (Final Part)

SAMformer offers a solution to the key drawbacks of Transformer models in long-term time series forecasting, such as training complexity and poor generalization on small datasets. Its shallow architecture and sharpness-aware optimization help avoid suboptimal local minima. In this article, we will continue to implement approaches using MQL5 and evaluate their practical value.

Price Action Analysis Toolkit Development (Part 35): Training and Deploying Predictive Models

Historical data is far from “trash”—it’s the foundation of any robust market analysis. In this article, we’ll take you step‑by‑step from collecting that history to using it to train a predictive model, and finally deploying that model for live price forecasts. Read on to learn how!

Expert Advisor based on the universal MLP approximator

The article presents a simple and accessible way to use a neural network in a trading EA that does not require deep knowledge of machine learning. The method eliminates the target function normalization, as well as overcomes "weight explosion" and "network stall" issues offering intuitive training and visual control of the results.

Algorithmic trading based on 3D reversal patterns

Discovering a new world of automated trading on 3D bars. What does a trading robot look like on multidimensional price bars? Are "yellow" clusters of 3D bars able to predict trend reversals? What does multidimensional trading look like?

Price Action Analysis Toolkit Development (Part 34): Turning Raw Market Data into Predictive Models Using an Advanced Ingestion Pipeline

Have you ever missed a sudden market spike or been caught off‑guard when one occurred? The best way to anticipate live events is to learn from historical patterns. Intending to train an ML model, this article begins by showing you how to create a script in MetaTrader 5 that ingests historical data and sends it to Python for storage—laying the foundation for your spike‑detection system. Read on to see each step in action.

Implementing Practical Modules from Other Languages in MQL5 (Part 03): Schedule Module from Python, the OnTimer Event on Steroids

The schedule module in Python offers a simple way to schedule repeated tasks. While MQL5 lacks a built-in equivalent, in this article we’ll implement a similar library to make it easier to set up timed events in MetaTrader 5.

Population ADAM (Adaptive Moment Estimation)

The article presents the transformation of the well-known and popular ADAM gradient optimization method into a population algorithm and its modification with the introduction of hybrid individuals. The new approach allows creating agents that combine elements of successful decisions using probability distribution. The key innovation is the formation of hybrid population individuals that adaptively accumulate information from the most promising solutions, increasing the efficiency of search in complex multidimensional spaces.

MQL5 Wizard Techniques you should know (Part 76): Using Patterns of Awesome Oscillator and the Envelope Channels with Supervised Learning

We follow up on our last article, where we introduced the indicator couple of the Awesome-Oscillator and the Envelope Channel, by looking at how this pairing could be enhanced with Supervised Learning. The Awesome-Oscillator and Envelope-Channel are a trend-spotting and support/resistance complimentary mix. Our supervised learning approach is a CNN that engages the Dot Product Kernel with Cross-Time-Attention to size its kernels and channels. As per usual, this is done in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Neural Networks in Trading: Enhancing Transformer Efficiency by Reducing Sharpness (SAMformer)

Training Transformer models requires large amounts of data and is often difficult since the models are not good at generalizing to small datasets. The SAMformer framework helps solve this problem by avoiding poor local minima. This improves the efficiency of models even on limited training datasets.

Data Science and ML (Part 46): Stock Markets Forecasting Using N-BEATS in Python

N-BEATS is a revolutionary deep learning model designed for time series forecasting. It was released to surpass classical models for time series forecasting such as ARIMA, PROPHET, VAR, etc. In this article, we are going to discuss this model and use it in predicting the stock market.

Neural Networks in Trading: Optimizing the Transformer for Time Series Forecasting (LSEAttention)

The LSEAttention framework offers improvements to the Transformer architecture. It was designed specifically for long-term multivariate time series forecasting. The approaches proposed by the authors of the method can be applied to solve problems of entropy collapse and learning instability, which are often encountered with vanilla Transformer.

Non-linear regression models on the stock exchange

Non-linear regression models on the stock exchange: Is it possible to predict financial markets? Let's consider creating a model for forecasting prices for EURUSD, and make two robots based on it - in Python and MQL5.

MQL5 Wizard Techniques you should know (Part 74): Using Patterns of Ichimoku and the ADX-Wilder with Supervised Learning

We follow up on our last article, where we introduced the indicator pair of the Ichimoku and the ADX, by looking at how this duo could be improved with Supervised Learning. Ichimoku and ADX are a support/resistance plus trend complimentary pairing. Our supervised learning approach uses a neural network that engages the Deep Spectral Mixture Kernel to fine tune the forecasts of this indicator pairing. As per usual, this is done in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Neural Networks in Trading: Hyperbolic Latent Diffusion Model (Final Part)

The use of anisotropic diffusion processes for encoding the initial data in a hyperbolic latent space, as proposed in the HypDIff framework, assists in preserving the topological features of the current market situation and improves the quality of its analysis. In the previous article, we started implementing the proposed approaches using MQL5. Today we will continue the work we started and will bring it to its logical conclusion.

Arithmetic Optimization Algorithm (AOA): From AOA to SOA (Simple Optimization Algorithm)

In this article, we present the Arithmetic Optimization Algorithm (AOA) based on simple arithmetic operations: addition, subtraction, multiplication and division. These basic mathematical operations serve as the foundation for finding optimal solutions to various problems.

Neural Networks in Trading: Hyperbolic Latent Diffusion Model (HypDiff)

The article considers methods of encoding initial data in hyperbolic latent space through anisotropic diffusion processes. This helps to more accurately preserve the topological characteristics of the current market situation and improves the quality of its analysis.

Using association rules in Forex data analysis

How to apply predictive rules of supermarket retail analytics to the real Forex market? How are purchases of cookies, milk and bread related to stock exchange transactions? The article discusses an innovative approach to algorithmic trading based on the use of association rules.