Neural Network in Practice: Sketching a Neuron

In this article we will build a basic neuron. Although it looks simple, and many may consider this code completely trivial and meaningless, I want you to have fun studying this simple sketch of a neuron. Don't be afraid to modify the code; understanding it fully is the goal.

Anarchic Society Optimization (ASO) algorithm

In this article, we will get acquainted with the Anarchic Society Optimization (ASO) algorithm and discuss how an algorithm based on the irrational and adventurous behavior of participants in an anarchic society (an anomalous system of social interaction free from centralized power and various kinds of hierarchies) is able to explore the solution space and avoid getting trapped in local optima. The article presents a unified ASO structure applicable to both continuous and discrete problems.

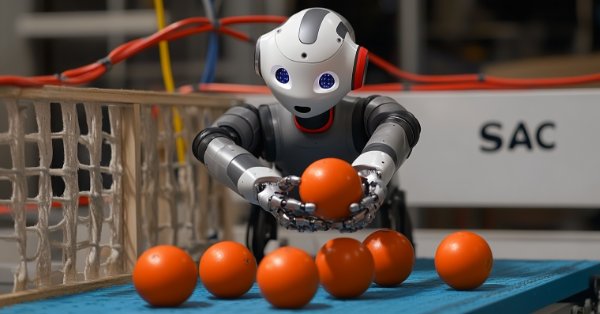

MQL5 Wizard Techniques you should know (Part 55): SAC with Prioritized Experience Replay

Replay buffers in Reinforcement Learning are particularly important with off-policy algorithms like DQN or SAC, which puts the spotlight on how this memory buffer is sampled. While the default option with SAC, for instance, is random selection from the buffer, Prioritized Experience Replay fine-tunes this by sampling from the buffer based on a TD-score. We review the importance of Reinforcement Learning and, as always, examine just this hypothesis (not the cross-validation) in a wizard-assembled Expert Advisor.
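
As a rough illustration of sampling by TD-score, here is a minimal Python sketch of proportional prioritization. It is not the article's implementation: the function names, the exponent `alpha`, and the small constant `eps` are assumptions chosen for this example, and importance-sampling weights are left out.

```python
import random

def sampling_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Probability of drawing each transition, proportional to (|TD error| + eps) ** alpha.

    alpha and eps are illustrative assumptions; alpha=0 gives uniform sampling.
    """
    priorities = [(abs(e) + eps) ** alpha for e in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]

def sample_prioritized(td_errors, batch_size, alpha=0.6, eps=1e-6, rng=None):
    """Draw buffer indices (with replacement) using the priorities as weights."""
    rng = rng or random.Random()
    weights = sampling_probabilities(td_errors, alpha, eps)
    return rng.choices(range(len(td_errors)), weights=weights, k=batch_size)
```

Transitions with a larger absolute TD error are drawn more often, while `eps` keeps transitions with zero error from never being replayed.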

Neural Networks in Trading: Injection of Global Information into Independent Channels (InjectTST)

Most modern multimodal time series forecasting methods use the independent channels approach. This ignores the natural dependence of different channels of the same time series. Smart use of two approaches (independent and mixed channels) is the key to improving the performance of the models.

Build Self Optimizing Expert Advisors in MQL5 (Part 6): Stop Out Prevention

Join us in our discussion today as we look for an algorithmic procedure to minimize the total number of times we get stopped out of winning trades. The problem is considerably challenging, and most solutions given in community discussions lack set and fixed rules. Our algorithmic approach to solving the problem increased the profitability of our trades and reduced our average loss per trade. However, further advancements are needed to completely filter out all trades that will be stopped out; our solution is a good first step for anyone to try.

Neural Networks in Trading: Practical Results of the TEMPO Method

We continue our acquaintance with the TEMPO method. In this article we will evaluate the actual effectiveness of the proposed approaches on real historical data.

Animal Migration Optimization (AMO) algorithm

The article is devoted to the AMO algorithm, which models the seasonal migration of animals in search of optimal conditions for life and reproduction. The main features of AMO include the use of topological neighborhood and a probabilistic update mechanism, which makes it easy to implement and flexible for various optimization tasks.

MQL5 Wizard Techniques you should know (Part 54): Reinforcement Learning with hybrid SAC and Tensors

Soft Actor Critic is a Reinforcement Learning algorithm that we looked at in a previous article, where we also introduced Python and ONNX to this series as efficient approaches to training networks. We revisit the algorithm with the aim of exploiting tensors and computational graphs, which are often used in Python.

Neural Networks in Trading: Using Language Models for Time Series Forecasting

We continue to study time series forecasting models. In this article, we get acquainted with a complex algorithm built on the use of a pre-trained language model.

Neural Networks in Trading: Lightweight Models for Time Series Forecasting

Lightweight time series forecasting models achieve high performance using a minimum number of parameters. This, in turn, reduces the consumption of computing resources and speeds up decision-making. Despite being lightweight, such models achieve forecast quality comparable to more complex ones.

Artificial Bee Hive Algorithm (ABHA): Tests and results

In this article, we will continue exploring the Artificial Bee Hive Algorithm (ABHA) by diving into the code and considering the remaining methods. As you might remember, each bee in the model is represented as an individual agent whose behavior depends on internal and external information, as well as motivational state. We will test the algorithm on various functions and summarize the results by presenting them in the rating table.

Artificial Bee Hive Algorithm (ABHA): Theory and methods

In this article, we will consider the Artificial Bee Hive Algorithm (ABHA) developed in 2009. The algorithm is aimed at solving continuous optimization problems. We will look at how ABHA draws inspiration from the behavior of a bee colony, where each bee has a unique role that helps the colony find resources more efficiently.

Trend Prediction with LSTM for Trend-Following Strategies

Long Short-Term Memory (LSTM) is a type of recurrent neural network (RNN) designed to model sequential data by effectively capturing long-term dependencies and addressing the vanishing gradient problem. In this article, we will explore how to utilize LSTM to predict future trends, enhancing the performance of trend-following strategies. The article will cover the introduction of key concepts and the motivation behind development, fetching data from MetaTrader 5, using that data to train the model in Python, integrating the machine learning model into MQL5, and reflecting on the results and future aspirations based on statistical backtesting.

Neural Networks in Trading: Reducing Memory Consumption with Adam-mini Optimization

One of the directions for increasing the efficiency of the model training and convergence process is the improvement of optimization methods. Adam-mini is an adaptive optimization method designed to improve on the basic Adam algorithm.

Generative Adversarial Networks (GANs) for Synthetic Data in Financial Modeling (Part 2): Creating Synthetic Symbol for Testing

In this article, we create a synthetic symbol using a Generative Adversarial Network (GAN). This involves generating realistic financial data that mimics the behavior of actual market instruments, such as EURUSD. The GAN model learns patterns and volatility from historical market data and creates synthetic price data with similar characteristics.

Data Science and ML (Part 33): Pandas Dataframe in MQL5, Data Collection for ML Usage made easier

When working with machine learning models, it’s essential to ensure consistency in the data used for training, validation, and testing. In this article, we will create our own version of the Pandas library in MQL5 to provide a unified approach to handling machine learning data, so that the same data is applied inside MQL5 and outside it, where most of the training occurs.

Integrate Your Own LLM into EA (Part 5): Develop and Test Trading Strategy with LLMs (IV) – Test Trading Strategy

With the rapid development of artificial intelligence today, large language models (LLMs) have become an important part of the field, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Gating mechanisms in ensemble learning

In this article, we continue our exploration of ensemble models by discussing the concept of gates, specifically how they may be useful in combining model outputs to enhance either prediction accuracy or model generalization.

Adaptive Social Behavior Optimization (ASBO): Two-phase evolution

We continue exploring the social behavior of living organisms and its impact on the development of a new mathematical model - ASBO (Adaptive Social Behavior Optimization). We will dive into the two-phase evolution, test the algorithm and draw conclusions. Just as a group of living organisms in nature joins efforts to survive, ASBO uses principles of collective behavior to solve complex optimization problems.

Neural Networks in Trading: Spatio-Temporal Neural Network (STNN)

In this article we will talk about using space-time transformations to effectively predict upcoming price movement. To improve the numerical prediction accuracy in STNN, a continuous attention mechanism is proposed that allows the model to better consider important aspects of the data.

Neural Network in Practice: Pseudoinverse (II)

Since these articles are educational in nature and are not intended to show the implementation of specific functionality, we will do things a little differently in this article. Instead of showing how to apply factorization to obtain the inverse of a matrix, we will focus on factorization of the pseudoinverse. The reason is that there is no point in showing how to get the general coefficient if we can do it in a special way. Even better, the reader can gain a deeper understanding of why things happen the way they do. So, let's now figure out why hardware is replacing software over time.

Neural Networks in Trading: Dual-Attention-Based Trend Prediction Model

We continue the discussion about the use of piecewise linear representation of time series, which was started in the previous article. Today we will see how to combine this method with other approaches to time series analysis to improve the price trend prediction quality.

Hidden Markov Models for Trend-Following Volatility Prediction

Hidden Markov Models (HMMs) are powerful statistical tools that identify underlying market states by analyzing observable price movements. In trading, HMMs enhance volatility prediction and inform trend-following strategies by modeling and anticipating shifts in market regimes. In this article, we will present the complete procedure for developing a trend-following strategy that utilizes HMMs to predict volatility as a filter.

Neural Networks in Trading: Piecewise Linear Representation of Time Series

This article is somewhat different from my earlier publications. In this article, we will talk about an alternative representation of time series. Piecewise linear representation of time series is a method of approximating a time series using linear functions over small intervals.
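
To make the idea concrete, here is a minimal Python sketch of one very simple variant: the series is cut into fixed-length segments and each segment is replaced by the straight line joining its endpoints. The function name and the fixed `segment_len` are assumptions for this example; the article's own segmentation rule may differ.

```python
def piecewise_linear(series, segment_len):
    """Approximate a series by straight lines between the endpoints of fixed-length segments.

    Fixed-length segmentation is an illustrative assumption, not the article's method.
    """
    if segment_len < 1:
        raise ValueError("segment_len must be at least 1")
    n = len(series)
    approx = list(series)
    start = 0
    while start < n - 1:
        end = min(start + segment_len, n - 1)
        span = end - start
        # Linear interpolation between the two segment endpoints.
        for i in range(start, end + 1):
            t = (i - start) / span
            approx[i] = series[start] + t * (series[end] - series[start])
        start = end
    return approx
```

Shorter segments follow the original series more closely, while longer ones give a coarser, smoother description of the trend.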

Adaptive Social Behavior Optimization (ASBO): Schwefel, Box-Muller Method

This article provides a fascinating insight into the world of social behavior in living organisms and its influence on the creation of a new mathematical model - ASBO (Adaptive Social Behavior Optimization). We will examine how the principles of leadership, neighborhood, and cooperation observed in living societies inspire the development of innovative optimization algorithms.

Neural Networks Made Easy (Part 97): Training Models With MSFformer

When exploring various model architecture designs, we often devote insufficient attention to the process of model training. In this article, I aim to address this gap.

Ensemble methods to enhance classification tasks in MQL5

In this article, we present the implementation of several ensemble classifiers in MQL5 and discuss their efficacy in varying situations.

Artificial Electric Field Algorithm (AEFA)

The article presents an artificial electric field algorithm (AEFA) inspired by Coulomb's law of electrostatic force. The algorithm simulates electrical phenomena to solve complex optimization problems using charged particles and their interactions. AEFA exhibits unique properties in the context of other algorithms related to laws of nature.

Neural Networks Made Easy (Part 96): Multi-Scale Feature Extraction (MSFformer)

Efficient extraction and integration of long-term dependencies and short-term features remain an important task in time series analysis. Their proper understanding and integration are necessary to create accurate and reliable predictive models.

MQL5 Wizard Techniques you should know (Part 51): Reinforcement Learning with SAC

Soft Actor Critic is a Reinforcement Learning algorithm that utilizes 3 neural networks: an actor network and 2 critic networks. These machine learning models are paired in a master-slave partnership where the critics are modelled to improve the forecast accuracy of the actor network. While also introducing ONNX in this series, we explore how these ideas could be put to the test as a custom signal of a wizard-assembled Expert Advisor.

Integrate Your Own LLM into EA (Part 5): Develop and Test Trading Strategy with LLMs (III) – Adapter-Tuning

With the rapid development of artificial intelligence today, large language models (LLMs) have become an important part of the field, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Integrating MQL5 with data processing packages (Part 4): Big Data Handling

Exploring advanced techniques to integrate MQL5 with powerful data processing tools, this part focuses on efficient handling of big data to enhance trading analysis and decision-making.

Across Neighbourhood Search (ANS)

The article reveals the potential of the ANS algorithm as an important step in the development of flexible and intelligent optimization methods that can take into account the specifics of the problem and the dynamics of the environment in the search space.

Ensemble methods to enhance numerical predictions in MQL5

In this article, we present the implementation of several ensemble learning methods in MQL5 and examine their effectiveness across different scenarios.

Neural Network in Practice: Pseudoinverse (I)

Today we will begin to consider how to implement the calculation of the pseudoinverse in pure MQL5. The code we are going to look at will be much more complex for beginners than I expected, and I'm still figuring out how to explain it in a simple way. So for now, consider this an opportunity to learn some unusual code, calmly and attentively. It is not aimed at efficient or quick application; its goal is to be as didactic as possible.

Developing a trading robot in Python (Part 3): Implementing a model-based trading algorithm

We continue the series of articles on developing a trading robot in Python and MQL5. In this article, we will create a trading algorithm in Python.

Neural Networks Made Easy (Part 95): Reducing Memory Consumption in Transformer Models

Transformer architecture-based models demonstrate high efficiency, but their use is complicated by high resource costs both at the training stage and during operation. In this article, I propose to get acquainted with algorithms that reduce the memory usage of such models.

Utilizing CatBoost Machine Learning model as a Filter for Trend-Following Strategies

CatBoost is a powerful tree-based machine learning model that specializes in decision-making based on stationary features. Other tree-based models like XGBoost and Random Forest share similar traits in terms of their robustness, ability to handle complex patterns, and interpretability. These models have a wide range of uses, from feature analysis to risk management. In this article, we're going to walk through the procedure of utilizing a trained CatBoost model as a filter for a classic moving average cross trend-following strategy.

Trading Insights Through Volume: Trend Confirmation

The Enhanced Trend Confirmation Technique combines price action, volume analysis, and machine learning to identify genuine market movements. It requires both price breakouts and volume surges (50% above average) for trade validation, while using an LSTM neural network for additional confirmation. The system employs ATR-based position sizing and dynamic risk management, making it adaptable to various market conditions while filtering out false signals.
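
The price-and-volume part of this rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a breakout is taken to mean a close above the highest high of the previous `lookback` bars, the average volume is computed over the same bars, and the function name and defaults are hypothetical. The LSTM confirmation and ATR-based sizing are not covered here.

```python
def confirmed_breakout(highs, closes, volumes, lookback=20, surge=1.5):
    """Return True when the latest bar shows both a price breakout and a volume surge.

    Assumptions for this sketch: breakout = close above the highest high of the
    previous `lookback` bars; surge = volume above `surge` times their mean volume
    (1.5 corresponds to 50% above average).
    """
    if not (len(highs) == len(closes) == len(volumes)):
        raise ValueError("input series must have the same length")
    if len(closes) <= lookback:
        return False  # not enough history to judge
    prior_high = max(highs[-lookback - 1:-1])
    avg_volume = sum(volumes[-lookback - 1:-1]) / lookback
    price_breakout = closes[-1] > prior_high
    volume_surge = volumes[-1] > surge * avg_volume
    return price_breakout and volume_surge
```

Requiring both conditions means a breakout on thin volume, or a volume spike without a new high, is rejected.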

Chemical reaction optimization (CRO) algorithm (Part II): Assembling and results

In the second part, we will collect chemical operators into a single algorithm and present a detailed analysis of its results. Let's find out how the Chemical reaction optimization (CRO) method copes with solving complex problems on test functions.