Codex Pipelines, from Python to MQL5, for Indicator Selection: A Multi-Quarter Analysis of the XLF ETF with Machine Learning

We continue our look at how indicator selection can be pipelined when facing a non-typical MetaTrader asset. MetaTrader 5 is primarily used to trade forex, which makes sense given the liquidity on offer. However, the case for trading outside this ‘comfort zone’ is growing stronger, driven not just by the rapid rise of platforms like Robinhood but also by most traders' relentless pursuit of an edge. We consider the XLF ETF for this article and cap our revamped pipeline with a simple MLP.

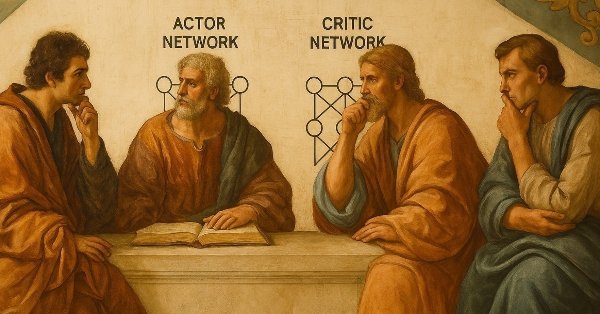

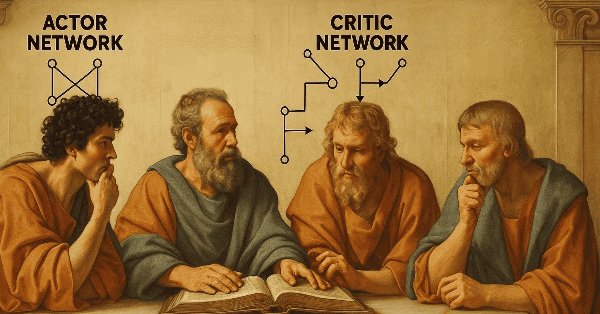

MQL5 Wizard Techniques you should know (Part 49): Reinforcement Learning with Proximal Policy Optimization

Proximal Policy Optimization is another reinforcement learning algorithm that updates the policy, often in network form, in very small incremental steps to keep the model stable. We examine how this could be of use, as we have in previous articles, in a wizard-assembled Expert Advisor.
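To illustrate what "very small incremental steps" means in practice, here is a minimal sketch of the clipped surrogate term commonly associated with PPO. It is not taken from the article; the function name `clipped_surrogate` and the default `epsilon=0.2` are assumptions chosen for illustration.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """PPO-style clipped surrogate term for a single sample.

    ratio     : pi_new(a|s) / pi_old(a|s), the probability ratio
    advantage : estimated advantage of the action taken
    epsilon   : clip range (0.2 is an assumed, illustrative default)
    """
    # Keep the ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to move the policy
    # far from the old one in a single update.
    return min(ratio * advantage, clipped_ratio * advantage)


# A large ratio with a positive advantage is capped by the clip.
capped = clipped_surrogate(1.5, 2.0)
# A ratio already inside the clip range passes through unchanged.
unchanged = clipped_surrogate(1.1, 2.0)
```

The training objective would average this term over a batch and maximize it; the sketch shows only the per-sample clipping that limits the step size.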

Neural networks made easy (Part 41): Hierarchical models

The article describes hierarchical training models that offer an effective approach to solving complex machine learning problems. Hierarchical models consist of several levels, each of which is responsible for different aspects of the task.

Neural Networks in Trading: Superpoint Transformer (SPFormer)

In this article, we introduce a method for segmenting 3D objects based on Superpoint Transformer (SPFormer), which eliminates the need for intermediate data aggregation. This speeds up the segmentation process and improves the performance of the model.

Neural Networks Made Easy (Part 97): Training Models With MSFformer

When exploring various model architecture designs, we often devote insufficient attention to the process of model training. In this article, I aim to address this gap.

MQL5 Wizard Techniques you should know (Part 43): Reinforcement Learning with SARSA

SARSA, an abbreviation for State-Action-Reward-State-Action, is another algorithm that can be used when implementing reinforcement learning. As we saw with Q-Learning and DQN, we look into how it could be explored and implemented as an independent model, rather than just a training mechanism, in wizard-assembled Expert Advisors.
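The name State-Action-Reward-State-Action spells out the five quantities used in each update. The following minimal sketch shows the standard tabular SARSA update; it is not the article's implementation, and the state labels, action labels, `alpha` and `gamma` values are assumptions made for illustration.

```python
from collections import defaultdict


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """Apply one tabular SARSA update and return the new Q-value.

    Uses the action actually chosen in the next state (on-policy),
    which is what distinguishes SARSA from Q-Learning.
    """
    td_target = reward + gamma * q[(next_state, next_action)]
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical Q-table keyed by (state, action); unseen pairs default to 0.
q = defaultdict(float)
q[("s1", "buy")] = 1.0

new_value = sarsa_update(q, "s0", "buy", reward=0.5,
                         next_state="s1", next_action="buy")
```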

Blood inheritance optimization (BIO)

I present to you my new population optimization algorithm - Blood Inheritance Optimization (BIO), inspired by the human blood group inheritance system. In this algorithm, each solution has its own "blood type" that determines the way it evolves. Just as in nature where a child's blood type is inherited according to specific rules, in BIO new solutions acquire their characteristics through a system of inheritance and mutations.

Comet Tail Algorithm (CTA)

In this article, we will look at the Comet Tail Optimization Algorithm (CTA), which draws inspiration from unique space objects - comets and their impressive tails that form when approaching the Sun. The algorithm is based on the concept of the motion of comets and their tails, and is designed to find optimal solutions in optimization problems.

Neural Networks Made Easy (Part 91): Frequency Domain Forecasting (FreDF)

We continue to explore the analysis and forecasting of time series in the frequency domain. In this article, we will get acquainted with a new method to forecast data in the frequency domain, which can be added to many of the algorithms we have studied previously.

Generative Adversarial Networks (GANs) for Synthetic Data in Financial Modeling (Part 1): Introduction to GANs and Synthetic Data in Financial Modeling

This article introduces traders to Generative Adversarial Networks (GANs) for generating synthetic financial data, addressing data limitations in model training. It covers GAN basics, Python and MQL5 code implementations, and practical applications in finance, helping traders enhance model accuracy and robustness through synthetic data.

Neural Networks in Trading: Market Analysis Using a Pattern Transformer

When we use models to analyze the market situation, we mainly focus on the candlestick. However, it has long been known that candlestick patterns can help in predicting future price movements. In this article, we will get acquainted with a method that allows us to integrate both of these approaches.

Neural Networks in Trading: Hierarchical Feature Learning for Point Clouds

We continue to study algorithms for extracting features from a point cloud. In this article, we will get acquainted with the mechanisms for increasing the efficiency of the PointNet method.

Across Neighbourhood Search (ANS)

The article reveals the potential of the ANS algorithm as an important step in the development of flexible and intelligent optimization methods that can take into account the specifics of the problem and the dynamics of the environment in the search space.

Neural networks made easy (Part 89): Frequency Enhanced Decomposition Transformer (FEDformer)

All the models we have considered so far analyze the state of the environment as a time sequence. However, the time series can also be represented in the form of frequency features. In this article, I introduce you to an algorithm that uses frequency components of a time sequence to predict future states.

MQL5 Wizard Techniques you should know (Part 31): Selecting the Loss Function

The loss function is the key metric of machine learning algorithms: it provides feedback to the training process by quantifying how well a given set of parameters is performing when compared to its intended target. We explore the various formats of this function in an MQL5 custom wizard class.
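As a small illustration of "quantifying how well a set of parameters is performing", the sketch below computes two widely used loss functions, mean squared error and mean absolute error, on made-up numbers. The function names and sample values are assumptions for illustration and do not come from the article's wizard class.

```python
def mean_squared_error(predictions, targets):
    """Average of squared differences; penalizes large errors heavily."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def mean_absolute_error(predictions, targets):
    """Average of absolute differences; less sensitive to outliers."""
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical model outputs and their intended targets.
predictions = [1.0, 2.0, 4.0]
targets = [1.0, 3.0, 2.0]

mse = mean_squared_error(predictions, targets)
mae = mean_absolute_error(predictions, targets)
```

The same predictions score differently under each function, which is why the choice of loss changes what training optimizes for.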

Dialectic Search (DA)

The article introduces the dialectical algorithm (DA), a new global optimization method inspired by the philosophical concept of dialectics. The algorithm exploits a unique division of the population into speculative and practical thinkers. Testing shows impressive performance of up to 98% on low-dimensional problems and overall efficiency of 57.95%. The article explains these metrics and presents a detailed description of the algorithm and the results of experiments on different types of functions.

Population optimization algorithms: Intelligent Water Drops (IWD) algorithm

The article considers an interesting algorithm derived from inanimate nature - intelligent water drops (IWD) simulating the process of river bed formation. The ideas of this algorithm made it possible to significantly improve the previous leader of the rating - SDS. As usual, the new leader (modified SDSm) can be found in the attachment.

Neural Networks in Trading: Hierarchical Vector Transformer (Final Part)

We continue studying the Hierarchical Vector Transformer method. In this article, we will complete the construction of the model. We will also train and test it on real historical data.

Neural Networks in Trading: Hybrid Graph Sequence Models (Final Part)

We continue exploring hybrid graph sequence models (GSM++), which integrate the advantages of different architectures, providing high analysis accuracy and efficient distribution of computing resources. These models effectively identify hidden patterns, reducing the impact of market noise and improving forecasting quality.

MQL5 Wizard Techniques you should know (Part 21): Testing with Economic Calendar Data

Economic Calendar data is not available by default for testing Expert Advisors within the Strategy Tester. We look at how databases can provide a workaround for this limitation. For this article, we explore how SQLite databases can be used to archive Economic Calendar news so that wizard-assembled Expert Advisors can use it to generate trade signals.

Neural Networks in Trading: Contrastive Pattern Transformer (Final Part)

In the previous article in this series, we looked at the Atom-Motif Contrastive Transformer (AMCT) framework, which uses contrastive learning to discover key patterns at all levels, from basic elements to complex structures. In this article, we continue implementing AMCT approaches using MQL5.

MQL5 Wizard Techniques you should know (Part 58): Reinforcement Learning (DDPG) with Moving Average and Stochastic Oscillator Patterns

Moving Average and Stochastic Oscillator are very common indicators whose collective patterns we explored in the prior article, via a supervised learning network, to see which “patterns-would-stick”. We take our analyses from that article a step further by considering the effect that reinforcement learning, when used with this trained network, would have on performance. Readers should note that our testing is over a very limited time window. Nonetheless, we continue to harness the minimal coding requirements afforded by the MQL5 wizard in showcasing this.

Data Science and ML (Part 35): NumPy in MQL5 – The Art of Making Complex Algorithms with Less Code

The NumPy library powers almost all machine learning algorithms in the Python programming language. In this article, we implement a similar module: a collection of all the complex code needed to help us build sophisticated models and algorithms of any kind.

Reimagining Classic Strategies (Part IX): Multiple Time Frame Analysis (II)

In today's discussion, we examine the strategy of multiple time-frame analysis to learn on which time frame our AI model performs best. Our analysis leads us to conclude that the Monthly and Hourly time-frames produce models with relatively low error rates on the EURUSD pair. We used this to our advantage and created a trading algorithm that makes AI predictions on the Monthly time frame, and executes its trades on the Hourly time frame.

Data Science and ML (Part 39): News + Artificial Intelligence, Would You Bet on it?

News drives the financial markets, especially major releases like Non-Farm Payrolls (NFPs). We've all witnessed how a single headline can trigger sharp price movements. In this article, we dive into the powerful intersection of news data and Artificial Intelligence.

Neural networks made easy (Part 79): Feature Aggregated Queries (FAQ) in the context of state

In the previous article, we got acquainted with one of the methods for detecting objects in an image. However, processing a static image is somewhat different from working with dynamic time series, such as the dynamics of the prices we analyze. In this article, we will consider the method of detecting objects in video, which is somewhat closer to the problem we are solving.

Big Bang - Big Crunch (BBBC) algorithm

The article presents the Big Bang - Big Crunch method, which has two key phases: cyclic generation of random points and their compression to the optimal solution. This approach combines exploration and refinement, allowing us to gradually find better solutions and open up new optimization opportunities.

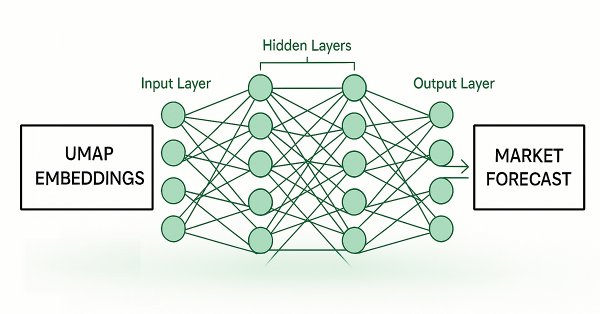

Feature Engineering With Python And MQL5 (Part IV): Candlestick Pattern Recognition With UMAP Regression

Dimension reduction techniques are widely used to improve the performance of machine learning models. Let us discuss a relatively new technique known as Uniform Manifold Approximation and Projection (UMAP). It was developed explicitly to overcome the limitations of legacy methods that create artifacts and distortions in the data. UMAP is a powerful dimension reduction technique that helps us group similar candlesticks in a novel and effective way, reducing our error rates on out-of-sample data and improving our trading performance.

Fibonacci in Forex (Part I): Examining the Price-Time Relationship

How does the market observe Fibonacci-based relationships? This sequence, where each subsequent number is equal to the sum of the two previous ones (1, 1, 2, 3, 5, 8, 13, 21...), not only describes the growth of the rabbit population. We will consider the Pythagorean hypothesis that everything in the world is subject to certain relationships of numbers...
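The rule quoted above, where each number is the sum of the two before it, can be written in a few lines. This is a minimal sketch for illustration only; the function name `fibonacci` is an assumption and is not taken from the article.

```python
def fibonacci(count):
    """Return the first `count` Fibonacci numbers, starting 1, 1."""
    sequence = []
    previous, current = 0, 1
    for _ in range(count):
        sequence.append(current)
        # Each new term is the sum of the two preceding terms.
        previous, current = current, previous + current
    return sequence


first_eight = fibonacci(8)  # matches the terms listed in the text: 1, 1, 2, 3, 5, 8, 13, 21
```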

The base class of population algorithms as the backbone of efficient optimization

The article represents a unique research attempt to combine a variety of population algorithms into a single class to simplify the application of optimization methods. This approach not only opens up opportunities for the development of new algorithms, including hybrid variants, but also creates a universal basic test stand. This stand becomes a key tool for choosing the optimal algorithm depending on a specific task.

MQL5 Wizard Techniques you should know (Part 79): Using Gator Oscillator and Accumulation/Distribution Oscillator with Supervised Learning

In the last piece, we concluded our look at the pairing of the Gator Oscillator and the Accumulation/Distribution Oscillator when used in their typical setting of the raw signals they generate. These two indicators are complementary as trend and volume indicators, respectively. We now follow up that piece by examining the effect that supervised learning can have on enhancing some of the feature patterns we had reviewed. Our supervised learning approach is a CNN that engages with kernel regression and dot product similarity to size its kernels and channels. As always, we do this in a custom signal class file that works with the MQL5 wizard to assemble an Expert Advisor.

Gain an Edge Over Any Market (Part III): Visa Spending Index

In the world of big data, there are millions of alternative datasets that hold the potential to enhance our trading strategies. In this series of articles, we will help you identify the most informative public datasets.

Reimagining Classic Strategies (Part 17): Modelling Technical Indicators

In this discussion, we focus on how we can break the glass ceiling imposed by classical machine learning techniques in finance. It appears that the greatest limitation to the value we can extract from statistical models does not lie in the models themselves — neither in the data nor in the complexity of the algorithms — but rather in the methodology we use to apply them. In other words, the true bottleneck may be how we employ the model, not the model’s intrinsic capability.

Feature Engineering With Python And MQL5 (Part II): Angle Of Price

There are many posts in the MQL5 Forum asking for help calculating the slope of price changes. This article demonstrates one possible way of calculating the angle formed by the changes in price in any market you wish to trade. Additionally, we answer whether engineering this new feature is worth the extra effort and time invested. We explore whether the slope of the price can improve the accuracy of any of our AI models when forecasting the USDZAR pair on the M1.

Neural Networks Made Easy (Part 81): Context-Guided Motion Analysis (CCMR)

In previous works, we always assessed the current state of the environment. At the same time, the dynamics of changes in indicators always remained "behind the scenes". In this article I want to introduce you to an algorithm that allows you to evaluate the direct change in data between 2 successive environmental states.

Royal Flush Optimization (RFO)

The original Royal Flush Optimization algorithm offers a new approach to solving optimization problems, replacing the classic binary coding of genetic algorithms with a sector-based approach inspired by poker principles. RFO demonstrates how simplifying basic principles can lead to an efficient and practical optimization method. The article presents a detailed analysis of the algorithm and test results.

Chemical reaction optimization (CRO) algorithm (Part II): Assembling and results

In the second part, we will collect chemical operators into a single algorithm and present a detailed analysis of its results. Let's find out how the Chemical reaction optimization (CRO) method copes with solving complex problems on test functions.

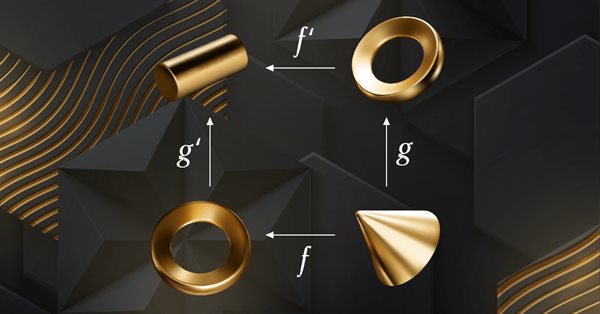

Category Theory in MQL5 (Part 6): Monomorphic Pull-Backs and Epimorphic Push-Outs

Category Theory is a diverse and expanding branch of mathematics that is only recently getting some coverage in the MQL5 community. This series of articles looks to explore and examine some of its concepts and axioms, with the overall goal of establishing an open library that provides insight while also, hopefully, furthering the use of this remarkable field in traders' strategy development.

MQL5 Wizard Techniques you should know (Part 59): Reinforcement Learning (DDPG) with Moving Average and Stochastic Oscillator Patterns

We continue our last article on DDPG with MA and stochastic indicators by examining other key Reinforcement Learning classes crucial for implementing DDPG. Though we are mostly coding in Python, the final product, a trained network, will be exported as an ONNX file to MQL5, where we integrate it as a resource in a wizard-assembled Expert Advisor.

Chaos Game Optimization (CGO)

The article presents a new metaheuristic algorithm, Chaos Game Optimization (CGO), which demonstrates a unique ability to maintain high efficiency when dealing with high-dimensional problems. Unlike most optimization algorithms, CGO not only does not lose, but sometimes even increases performance when scaling a problem, which is its key feature.