Integrate Your Own LLM into EA (Part 5): Develop and Test Trading Strategy with LLMs(IV) — Test Trading Strategy

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

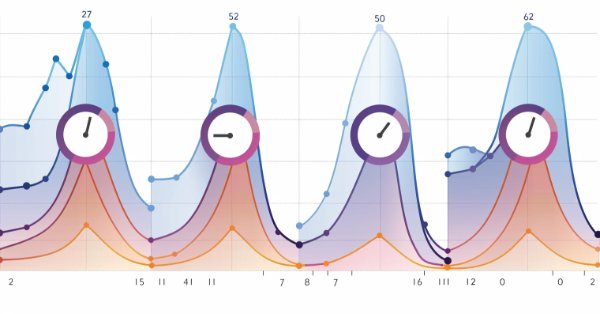

William Gann methods (Part III): Does Astrology Work?

Do the positions of planets and stars affect financial markets? Let's arm ourselves with statistics and big data, and embark on an exciting journey into the world where stars and stock charts intersect.

Neural networks made easy (Part 35): Intrinsic Curiosity Module

We continue to study reinforcement learning algorithms. All the algorithms we have considered so far required the creation of a reward policy to enable the agent to evaluate each of its actions at each transition from one system state to another. However, this approach is rather artificial. In practice, there is some time lag between an action and a reward. In this article, we will get acquainted with a model training algorithm which can work with various time delays from the action to the reward.

Data Science and ML (Part 42): Forex Time series Forecasting using ARIMA in Python, Everything you need to Know

ARIMA, short for Auto Regressive Integrated Moving Average, is a powerful traditional time series forecasting model. With the ability to detect spikes and fluctuations in a time series data, this model can make accurate predictions on the next values. In this article, we are going to understand what is it, how it operates, what you can do with it when it comes to predicting the next prices in the market with high accuracy and much more.

Data Science and Machine Learning (Part 19): Supercharge Your AI models with AdaBoost

AdaBoost, a powerful boosting algorithm designed to elevate the performance of your AI models. AdaBoost, short for Adaptive Boosting, is a sophisticated ensemble learning technique that seamlessly integrates weak learners, enhancing their collective predictive strength.

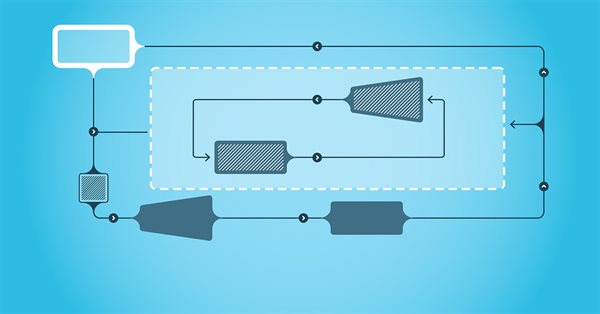

Neural Networks Made Easy (Part 87): Time Series Patching

Forecasting plays an important role in time series analysis. In the new article, we will talk about the benefits of time series patching.

Using PSAR, Heiken Ashi, and Deep Learning Together for Trading

This project explores the fusion of deep learning and technical analysis to test trading strategies in forex. A Python script is used for rapid experimentation, employing an ONNX model alongside traditional indicators like PSAR, SMA, and RSI to predict EUR/USD movements. A MetaTrader 5 script then brings this strategy into a live environment, using historical data and technical analysis to make informed trading decisions. The backtesting results indicate a cautious yet consistent approach, with a focus on risk management and steady growth rather than aggressive profit-seeking.

Pipelines in MQL5

In this piece, we look at a key data preparation step for machine learning that is gaining rapid significance. Data Preprocessing Pipelines. These in essence are a streamlined sequence of data transformation steps that prepare raw data before it is fed to a model. As uninteresting as this may initially seem to the uninducted, this ‘data standardization’ not only saves on training time and execution costs, but it goes a long way in ensuring better generalization. In this article we are focusing on some SCIKIT-LEARN preprocessing functions, and while we are not exploiting the MQL5 Wizard, we will return to it in coming articles.

Data Science and ML (Part 33): Pandas Dataframe in MQL5, Data Collection for ML Usage made easier

When working with machine learning models, it’s essential to ensure consistency in the data used for training, validation, and testing. In this article, we will create our own version of the Pandas library in MQL5 to ensure a unified approach for handling machine learning data, for ensuring the same data is applied inside and outside MQL5, where most of the training occurs.

Neural networks made easy (Part 44): Learning skills with dynamics in mind

In the previous article, we introduced the DIAYN method, which offers the algorithm for learning a variety of skills. The acquired skills can be used for various tasks. But such skills can be quite unpredictable, which can make them difficult to use. In this article, we will look at an algorithm for learning predictable skills.

Population optimization algorithms: Cuckoo Optimization Algorithm (COA)

The next algorithm I will consider is cuckoo search optimization using Levy flights. This is one of the latest optimization algorithms and a new leader in the leaderboard.

Self Optimizing Expert Advisors in MQL5 (Part 17): Ensemble Intelligence

All algorithmic trading strategies are difficult to set up and maintain, regardless of complexity—a challenge shared by beginners and experts alike. This article introduces an ensemble framework where supervised models and human intuition work together to overcome their shared limitations. By aligning a moving average channel strategy with a Ridge Regression model on the same indicators, we achieve centralized control, faster self-correction, and profitability from otherwise unprofitable systems.

Neural Networks in Trading: Hybrid Graph Sequence Models (GSM++)

Hybrid graph sequence models (GSM++) combine the advantages of different architectures to provide high-fidelity data analysis and optimized computational costs. These models adapt effectively to dynamic market data, improving the presentation and processing of financial information.

Neural networks made easy (Part 38): Self-Supervised Exploration via Disagreement

One of the key problems within reinforcement learning is environmental exploration. Previously, we have already seen the research method based on Intrinsic Curiosity. Today I propose to look at another algorithm: Exploration via Disagreement.

Neural networks made easy (Part 50): Soft Actor-Critic (model optimization)

In the previous article, we implemented the Soft Actor-Critic algorithm, but were unable to train a profitable model. Here we will optimize the previously created model to obtain the desired results.

Neural Networks in Trading: Hierarchical Vector Transformer (HiVT)

We invite you to get acquainted with the Hierarchical Vector Transformer (HiVT) method, which was developed for fast and accurate forecasting of multimodal time series.

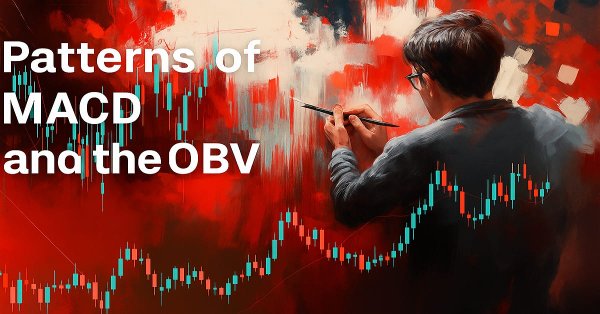

MQL5 Wizard Techniques you should know (Part 71): Using Patterns of MACD and the OBV

The Moving-Average-Convergence-Divergence (MACD) oscillator and the On-Balance-Volume (OBV) oscillator are another pair of indicators that could be used in conjunction within an MQL5 Expert Advisor. This pairing, as is practice in these article series, is complementary with the MACD affirming trends while OBV checks volume. As usual, we use the MQL5 wizard to build and test any potential these two may possess.

Neural networks made easy (Part 43): Mastering skills without the reward function

The problem of reinforcement learning lies in the need to define a reward function. It can be complex or difficult to formalize. To address this problem, activity-based and environment-based approaches are being explored to learn skills without an explicit reward function.

MQL5 Wizard Techniques you should know (Part 08): Perceptrons

Perceptrons, single hidden layer networks, can be a good segue for anyone familiar with basic automated trading and is looking to dip into neural networks. We take a step by step look at how this could be realized in a signal class assembly that is part of the MQL5 Wizard classes for expert advisors.

Neural networks made easy (Part 48): Methods for reducing overestimation of Q-function values

In the previous article, we introduced the DDPG method, which allows training models in a continuous action space. However, like other Q-learning methods, DDPG is prone to overestimating Q-function values. This problem often results in training an agent with a suboptimal strategy. In this article, we will look at some approaches to overcome the mentioned issue.

Overcoming ONNX Integration Challenges

ONNX is a great tool for integrating complex AI code between different platforms, it is a great tool that comes with some challenges that one must address to get the most out of it, In this article we discuss the common issues you might face and how to mitigate them.

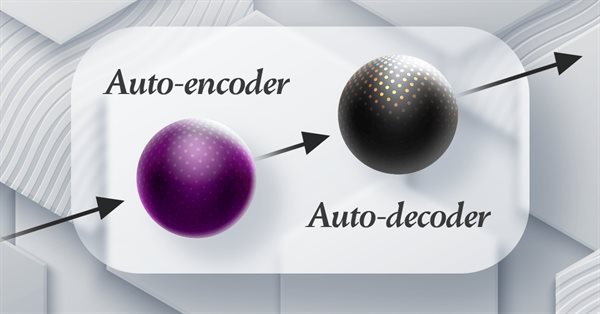

Neural Networks in Trading: Hyperbolic Latent Diffusion Model (Final Part)

The use of anisotropic diffusion processes for encoding the initial data in a hyperbolic latent space, as proposed in the HypDIff framework, assists in preserving the topological features of the current market situation and improves the quality of its analysis. In the previous article, we started implementing the proposed approaches using MQL5. Today we will continue the work we started and will bring it to its logical conclusion.

Neural networks made easy (Part 47): Continuous action space

In this article, we expand the range of tasks of our agent. The training process will include some aspects of money and risk management, which are an integral part of any trading strategy.

Quantum computing and trading: A fresh approach to price forecasts

The article describes an innovative approach to forecasting price movements in financial markets using quantum computing. The main focus is on the application of the Quantum Phase Estimation (QPE) algorithm to find prototypes of price patterns allowing traders to significantly speed up the market data analysis.

Neural Networks in Trading: Enhancing Transformer Efficiency by Reducing Sharpness (Final Part)

SAMformer offers a solution to the key drawbacks of Transformer models in long-term time series forecasting, such as training complexity and poor generalization on small datasets. Its shallow architecture and sharpness-aware optimization help avoid suboptimal local minima. In this article, we will continue to implement approaches using MQL5 and evaluate their practical value.

Data Science and Machine Learning (Part 17): Money in the Trees? The Art and Science of Random Forests in Forex Trading

Discover the secrets of algorithmic alchemy as we guide you through the blend of artistry and precision in decoding financial landscapes. Unearth how Random Forests transform data into predictive prowess, offering a unique perspective on navigating the complex terrain of stock markets. Join us on this journey into the heart of financial wizardry, where we demystify the role of Random Forests in shaping market destiny and unlocking the doors to lucrative opportunities

Integrating MQL5 with data processing packages (Part 2): Machine Learning and Predictive Analytics

In our series on integrating MQL5 with data processing packages, we delve in to the powerful combination of machine learning and predictive analysis. We will explore how to seamlessly connect MQL5 with popular machine learning libraries, to enable sophisticated predictive models for financial markets.

Category Theory in MQL5 (Part 20): A detour to Self-Attention and the Transformer

We digress in our series by pondering at part of the algorithm to chatGPT. Are there any similarities or concepts borrowed from natural transformations? We attempt to answer these and other questions in a fun piece, with our code in a signal class format.

Feature Engineering With Python And MQL5 (Part I): Forecasting Moving Averages For Long-Range AI Models

The moving averages are by far the best indicators for our AI models to predict. However, we can improve our accuracy even further by carefully transforming our data. This article will demonstrate, how you can build AI Models capable of forecasting further into the future than you may currently be practicing without significant drops to your accuracy levels. It is truly remarkable, how useful the moving averages are.

Neural Networks in Trading: An Agent with Layered Memory (Final Part)

We continue our work on creating the FinMem framework, which uses layered memory approaches that mimic human cognitive processes. This allows the model not only to effectively process complex financial data but also to adapt to new signals, significantly improving the accuracy and effectiveness of investment decisions in dynamically changing markets.

Seasonality Filtering and time period for Deep Learning ONNX models with python for EA

Can we benefit from seasonality when creating models for Deep Learning with Python? Does filtering data for the ONNX models help to get better results? What time period should we use? We will cover all of this over this article.

Neural Networks in Trading: Using Language Models for Time Series Forecasting

We continue to study time series forecasting models. In this article, we get acquainted with a complex algorithm built on the use of a pre-trained language model.

Gain An Edge Over Any Market (Part II): Forecasting Technical Indicators

Did you know that we can gain more accuracy forecasting certain technical indicators than predicting the underlying price of a traded symbol? Join us to explore how to leverage this insight for better trading strategies.

Pure implementation of RSA encryption in MQL5

MQL5 lacks built-in asymmetric cryptography, making secure data exchange over insecure channels like HTTP difficult. This article presents a pure MQL5 implementation of RSA using PKCS#1 v1.5 padding, enabling safe transmission of AES session keys and small data blocks without external libraries. This approach provides HTTPS-like security over standard HTTP and even more, it fills an important gap in secure communication for MQL5 applications.

Population optimization algorithms: Saplings Sowing and Growing up (SSG)

Saplings Sowing and Growing up (SSG) algorithm is inspired by one of the most resilient organisms on the planet demonstrating outstanding capability for survival in a wide variety of conditions.

Neural Networks in Trading: A Multi-Agent Self-Adaptive Model (Final Part)

In the previous article, we introduced the multi-agent self-adaptive framework MASA, which combines reinforcement learning approaches and self-adaptive strategies, providing a harmonious balance between profitability and risk in turbulent market conditions. We have built the functionality of individual agents within this framework. In this article, we will continue the work we started, bringing it to its logical conclusion.

Category Theory in MQL5 (Part 2)

Category Theory is a diverse and expanding branch of Mathematics which as of yet is relatively uncovered in the MQL5 community. These series of articles look to introduce and examine some of its concepts with the overall goal of establishing an open library that attracts comments and discussion while hopefully furthering the use of this remarkable field in Traders' strategy development.

Trading Insights Through Volume: Moving Beyond OHLC Charts

Algorithmic trading system that combines volume analysis with machine learning techniques, specifically LSTM neural networks. Unlike traditional trading approaches that primarily focus on price movements, this system emphasizes volume patterns and their derivatives to predict market movements. The methodology incorporates three main components: volume derivatives analysis (first and second derivatives), LSTM predictions for volume patterns, and traditional technical indicators.

Neural Networks in Trading: Transformer with Relative Encoding

Self-supervised learning can be an effective way to analyze large amounts of unlabeled data. The efficiency is provided by the adaptation of models to the specific features of financial markets, which helps improve the effectiveness of traditional methods. This article introduces an alternative attention mechanism that takes into account the relative dependencies and relationships between inputs.

Data Science and ML (Part 31): Using CatBoost AI Models for Trading

CatBoost AI models have gained massive popularity recently among machine learning communities due to their predictive accuracy, efficiency, and robustness to scattered and difficult datasets. In this article, we are going to discuss in detail how to implement these types of models in an attempt to beat the forex market.