Data Science and ML (Part 40): Using Fibonacci Retracements in Machine Learning data

Fibonacci retracements are a popular tool in technical analysis, helping traders identify potential reversal zones. In this article, we’ll explore how these retracement levels can be transformed into target variables for machine learning models, helping the models make better sense of the market.
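As a rough illustration of the idea, the sketch below computes retracement levels between a swing low and a swing high and derives a simple label from them. The ratio set, the function names `retracement_levels` and `nearest_level`, and the labeling rule are assumptions made for this example, not the article's actual method.

```python
# Commonly used retracement ratios (an assumption; the article may use another set).
FIB_RATIOS = (0.236, 0.382, 0.5, 0.618, 0.786)


def retracement_levels(swing_low, swing_high):
    """Return {ratio: price} for a pullback measured down from swing_high."""
    span = swing_high - swing_low
    return {ratio: swing_high - ratio * span for ratio in FIB_RATIOS}


def nearest_level(price, levels):
    """Hypothetical target variable: the ratio whose price level is closest to `price`."""
    return min(levels, key=lambda ratio: abs(levels[ratio] - price))


# Example: an up-move from 100 to 200, followed by a pullback to 139.
levels = retracement_levels(100.0, 200.0)
label = nearest_level(139.0, levels)
```

A categorical label like this could serve as a classification target; a regression target (for example, the distance to the nearest level) is an equally plausible choice.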

Volumetric neural network analysis as a key to future trends

The article explores the possibility of improving price forecasting based on trading volume analysis by integrating technical analysis principles with LSTM neural network architecture. Particular attention is paid to the detection and interpretation of anomalous volumes, the use of clustering and the creation of features based on volumes and their definition in the context of machine learning.

From Python to MQL5: A Journey into Quantum-Inspired Trading Systems

The article explores the development of a quantum-inspired trading system, transitioning from a Python prototype to an MQL5 implementation for real-world trading. The system uses quantum computing principles like superposition and entanglement to analyze market states, though it runs on classical computers using quantum simulators. Key features include a three-qubit system for analyzing eight market states simultaneously, 24-hour lookback periods, and seven technical indicators for market analysis. While the accuracy rates might seem modest, they provide a significant edge when combined with proper risk management strategies.

Neural networks made easy (Part 37): Sparse Attention

In the previous article, we discussed relational models which use attention mechanisms in their architecture. One of the specific features of these models is the intensive utilization of computing resources. In this article, we will consider one of the mechanisms for reducing the number of computational operations inside the Self-Attention block. This will increase the general performance of the model.

Neural Networks in Trading: Enhancing Transformer Efficiency by Reducing Sharpness (SAMformer)

Training Transformer models requires large amounts of data and is often difficult since the models are not good at generalizing to small datasets. The SAMformer framework helps solve this problem by avoiding poor local minima. This improves the efficiency of models even on limited training datasets.

Frequency domain representations of time series: The Power Spectrum

In this article we discuss methods for analyzing time series in the frequency domain, emphasizing the utility of examining the power spectra of time series when building predictive models. We also cover some of the useful perspectives gained by analyzing time series in the frequency domain using the discrete Fourier transform (DFT).
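For readers unfamiliar with the term, here is a minimal sketch of a power spectrum computed with a direct discrete Fourier transform in plain Python. The function name `power_spectrum`, the mean removal, and the 1/N normalization are assumptions for illustration (several normalization conventions exist), and the input is a synthetic sine wave rather than market data.

```python
import cmath
import math


def power_spectrum(x):
    """Return |X_k|^2 / N for k = 0..N//2 using a direct O(N^2) DFT."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]  # remove the mean so bin 0 does not dominate
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            v * cmath.exp(-2j * math.pi * k * t / n)
            for t, v in enumerate(centered)
        )
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


# Synthetic series: a sine wave completing 4 cycles over 64 samples.
series = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
spec = power_spectrum(series)

# Index of the frequency bin carrying the most power.
dominant_bin = max(range(len(spec)), key=spec.__getitem__)
```

The direct DFT is quadratic in the series length; for long series a fast Fourier transform routine would normally be used instead.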

Cyclic Parthenogenesis Algorithm (CPA)

The article considers a new population optimization algorithm - Cyclic Parthenogenesis Algorithm (CPA), inspired by the unique reproductive strategy of aphids. The algorithm combines two reproduction mechanisms — parthenogenesis and sexual reproduction — and also utilizes the colonial structure of the population with the possibility of migration between colonies. The key features of the algorithm are adaptive switching between different reproductive strategies and a system of information exchange between colonies through the flight mechanism.

Population optimization algorithms: Invasive Weed Optimization (IWO)

The amazing ability of weeds to survive in a wide variety of conditions inspired a powerful optimization algorithm. IWO is one of the best algorithms among those reviewed so far.

Reimagining Classic Strategies (Part 16): Double Bollinger Band Breakouts

This article walks the reader through a reimagined version of the classical Bollinger Band breakout strategy. It identifies key weaknesses in the original approach, such as its well-known susceptibility to false breakouts. The article aims to introduce a possible solution: the Double Bollinger Band trading strategy. This lesser-known approach compensates for the weaknesses of the classical version and offers a more dynamic perspective on financial markets. It helps us overcome the old limitations defined by the original rules, providing traders with a stronger and more adaptive framework.

Neural Networks in Trading: A Parameter-Efficient Transformer with Segmented Attention (PSformer)

This article introduces the new PSformer framework, which adapts the architecture of the vanilla Transformer to solving problems related to multivariate time series forecasting. The framework is based on two key innovations: the Parameter Sharing (PS) mechanism and the Segment Attention (SegAtt).

Neural networks made easy (Part 18): Association rules

As a continuation of this series of articles, let's consider another type of problem within unsupervised learning methods: mining association rules. This problem type was first used in retail, namely supermarkets, to analyze market baskets. In this article, we will talk about the applicability of such algorithms in trading.

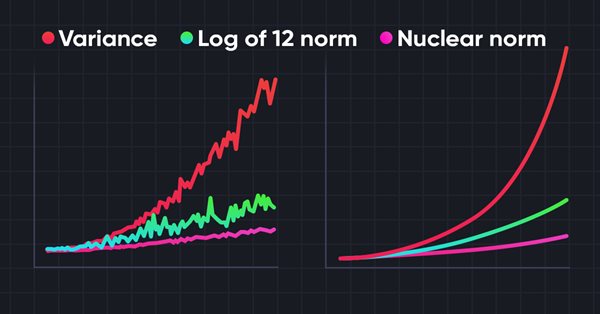

Neural networks made easy (Part 56): Using nuclear norm to drive research

The study of the environment in reinforcement learning is a pressing problem. We have already looked at some approaches previously. In this article, we will have a look at yet another method based on maximizing the nuclear norm. It allows agents to identify environmental states with a high degree of novelty and diversity.

ALGLIB library optimization methods (Part II)

In this article, we will continue to study the remaining optimization methods from the ALGLIB library, paying special attention to their testing on complex multidimensional functions. This will allow us not only to evaluate the efficiency of each algorithm, but also to identify their strengths and weaknesses in different conditions.

Building A Candlestick Trend Constraint Model (Part 9): Multiple Strategies Expert Advisor (I)

Today, we will explore the possibilities of incorporating multiple strategies into an Expert Advisor (EA) using MQL5. Expert Advisors provide broader capabilities than just indicators and scripts, allowing for more sophisticated trading approaches that can adapt to changing market conditions. Find out more in this article.

Neural networks made easy (Part 54): Using random encoder for efficient research (RE3)

Whenever we consider reinforcement learning methods, we are faced with the issue of efficiently exploring the environment. Solving this issue often leads to complication of the algorithm and training of additional models. In this article, we will look at an alternative approach to solving this problem.

Data label for time series mining (Part 3): Example for using label data

This series of articles introduces several time series labeling methods that can produce data suited to most artificial intelligence models. Labeling data according to specific needs can bring the trained model more in line with the expected design, improve its accuracy, and even help the model make a qualitative leap!

Introduction to MQL5 (Part 5): A Beginner's Guide to Array Functions in MQL5

Explore the world of MQL5 arrays in Part 5, designed for absolute beginners. Simplifying complex coding concepts, this article focuses on clarity and inclusivity. Join our community of learners, where questions are embraced, and knowledge is shared!

Hidden Markov Models for Trend-Following Volatility Prediction

Hidden Markov Models (HMMs) are powerful statistical tools that identify underlying market states by analyzing observable price movements. In trading, HMMs enhance volatility prediction and inform trend-following strategies by modeling and anticipating shifts in market regimes. In this article, we will present the complete procedure for developing a trend-following strategy that utilizes HMMs to predict volatility as a filter.

Integrate Your Own LLM into EA (Part 1): Hardware and Environment Deployment

With today's rapid development of artificial intelligence, large language models (LLMs) have become an important part of the field, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

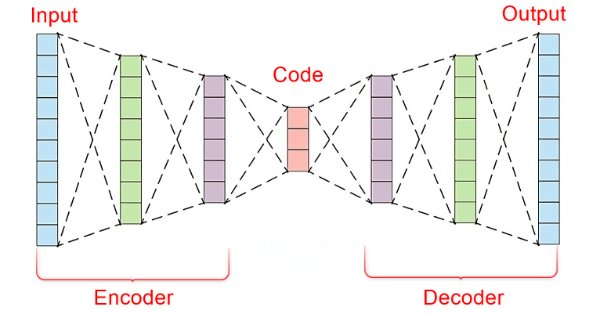

Data Science and Machine Learning (Part 22): Leveraging Autoencoders Neural Networks for Smarter Trades by Moving from Noise to Signal

In the fast-paced world of financial markets, separating meaningful signals from the noise is crucial for successful trading. By employing sophisticated neural network architectures, autoencoders excel at uncovering hidden patterns within market data, transforming noisy input into actionable insights. In this article, we explore how autoencoders are revolutionizing trading practices, offering traders a powerful tool to enhance decision-making and gain a competitive edge in today's dynamic markets.

Measuring Indicator Information

Machine learning has become a popular method for strategy development. Whilst there has been more emphasis on maximizing profitability and prediction accuracy, the importance of processing the data used to build predictive models has not received a lot of attention. In this article we consider using the concept of entropy to evaluate the appropriateness of indicators to be used in predictive model building, as documented in the book Testing and Tuning Market Trading Systems by Timothy Masters.
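To make the entropy idea concrete, the sketch below bins an indicator's values and divides the Shannon entropy of the bin frequencies by its maximum possible value, so the result lies between 0 and 1. The function name `relative_entropy`, the equal-width binning, and the default of 10 bins are assumptions for illustration and may differ from the procedure in the book or the article.

```python
import math


def relative_entropy(values, n_bins=10):
    """Shannon entropy of equal-width bin frequencies, scaled to [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant indicator carries no information
    counts = [0] * n_bins
    for v in values:
        # Map the value to a bin index; the maximum value goes into the last bin.
        idx = min(int((v - lo) / (hi - lo) * n_bins), n_bins - 1)
        counts[idx] += 1
    total = len(values)
    entropy = -sum((c / total) * math.log(c / total) for c in counts if c)
    return entropy / math.log(n_bins)


# Evenly spread values fill every bin equally, giving the maximum score.
evenly_spread = relative_entropy(list(range(100)))

# Values piled into one bin with a single outlier score close to zero.
clumped = relative_entropy([0.0] * 99 + [1.0])
```

A score near 1 suggests the indicator's values are spread across its range, while a score near 0 suggests most values sit in a few bins, which often motivates transforming the indicator before modeling.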

Neural networks made easy (Part 22): Unsupervised learning of recurrent models

We continue to study unsupervised learning algorithms. This time I suggest that we discuss the features of autoencoders when applied to recurrent model training.

Neural networks made easy (Part 75): Improving the performance of trajectory prediction models

The models we create are becoming larger and more complex. This increases the costs of not only their training but also their operation. However, the time required to make a decision is often critical. In this regard, let us consider methods for optimizing model performance without loss of quality.

Introduction to MQL5 (Part 2): Navigating Predefined Variables, Common Functions, and Control Flow Statements

Embark on an illuminating journey with Part Two of our MQL5 series. These articles are not just tutorials, they're doorways to an enchanted realm where programming novices and wizards alike unite. What makes this journey truly magical? Part Two of our MQL5 series stands out with its refreshing simplicity, making complex concepts accessible to all. Engage with us interactively as we answer your questions, ensuring an enriching and personalized learning experience. Let's build a community where understanding MQL5 is an adventure for everyone. Welcome to the enchantment!

Neural Networks in Trading: Models Using Wavelet Transform and Multi-Task Attention (Final Part)

In the previous article, we explored the theoretical foundations and began implementing the approaches of the Multitask-Stockformer framework, which combines the wavelet transform and the Self-Attention multitask model. We continue to implement the algorithms of this framework and evaluate their effectiveness on real historical data.

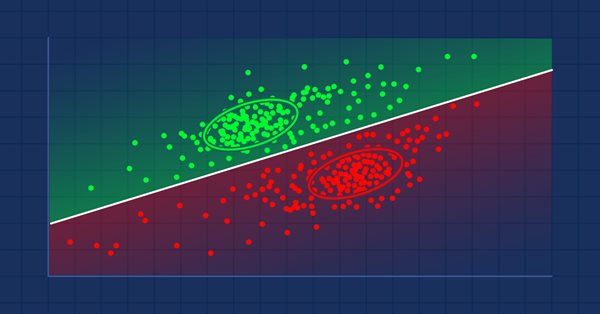

MQL5 Wizard techniques you should know (Part 04): Linear Discriminant Analysis

Today's trader is a philomath who is almost always looking up new ideas, trying them out, and choosing to modify or discard them; an exploratory process that requires a fair amount of diligence. This series of articles proposes that the MQL5 wizard should be a mainstay for traders in this effort.

Population optimization algorithms: Monkey algorithm (MA)

In this article, I will consider the Monkey Algorithm (MA) for optimization. The ability of these animals to overcome difficult obstacles and reach the most inaccessible treetops formed the basis of the idea behind MA.

Population optimization algorithms: Shuffled Frog-Leaping algorithm (SFL)

The article presents a detailed description of the shuffled frog-leaping (SFL) algorithm and its capabilities in solving optimization problems. The SFL algorithm is inspired by the behavior of frogs in their natural environment and offers a new approach to function optimization. The SFL algorithm is an efficient and flexible tool capable of processing a variety of data types and achieving optimal solutions.

Data Science and ML (Part 41): Forex and Stock Markets Pattern Detection using YOLOv8

Detecting patterns in financial markets is challenging because it involves seeing what's on the chart, something that's difficult to undertake in MQL5 due to image limitations. In this article, we are going to discuss a decent model made in Python that helps us detect patterns present on the chart with minimal effort.

Neural Networks in Trading: An Ensemble of Agents with Attention Mechanisms (MASAAT)

We introduce the Multi-Agent Self-Adaptive Portfolio Optimization Framework (MASAAT), which combines attention mechanisms and time series analysis. MASAAT generates a set of agents that analyze price series and directional changes, enabling the identification of significant fluctuations in asset prices at different levels of detail.

Neural networks made easy (Part 17): Dimensionality reduction

In this part we continue discussing Artificial Intelligence models. Namely, we study unsupervised learning algorithms. We have already discussed one of the clustering algorithms. In this article, I am sharing a variant of solving problems related to dimensionality reduction.

Developing a robot in Python and MQL5 (Part 2): Model selection, creation and training, Python custom tester

We continue the series of articles on developing a trading robot in Python and MQL5. Today we will solve the problem of selecting and training a model, testing it, implementing cross-validation, grid search, as well as the problem of model ensemble.

Circle Search Algorithm (CSA)

The article presents a new metaheuristic optimization algorithm, the Circle Search Algorithm (CSA), based on the geometric properties of a circle. The algorithm uses the principle of moving points along tangents to find the optimal solution, combining the phases of global exploration and local exploitation.

Data Science and Machine Learning (Part 18): The battle of Mastering Market Complexity, Truncated SVD Versus NMF

Truncated Singular Value Decomposition (SVD) and Non-Negative Matrix Factorization (NMF) are dimensionality reduction techniques. They both play significant roles in shaping data-driven trading strategies. Discover the art of dimensionality reduction, unraveling insights, and optimizing quantitative analyses for an informed approach to navigating the intricacies of financial markets.

Neural Networks in Trading: A Multimodal, Tool-Augmented Agent for Financial Markets (FinAgent)

We invite you to explore FinAgent, a multimodal financial trading agent framework designed to analyze various types of data reflecting market dynamics and historical trading patterns.

Population optimization algorithms: ElectroMagnetism-like algorithm (EM)

The article describes the principles, methods and possibilities of using the Electromagnetic Algorithm in various optimization problems. The EM algorithm is an efficient optimization tool capable of working with large amounts of data and multidimensional functions.

SP500 Trading Strategy in MQL5 For Beginners

Discover how to leverage MQL5 to forecast the S&P 500 with precision, blending in classical technical analysis for added stability and combining algorithms with time-tested principles for robust market insights.

Triangular arbitrage with predictions

This article simplifies triangular arbitrage, showing you how to use predictions and specialized software to trade currencies smarter, even if you're new to the market. Ready to trade with expertise?

Experiments with neural networks (Part 7): Passing indicators

Examples of passing indicators to a perceptron. The article describes general concepts and showcases the simplest ready-made Expert Advisor followed by the results of its optimization and forward test.

Build Self Optimizing Expert Advisors in MQL5 (Part 8): Multiple Strategy Analysis

How best can we combine multiple strategies to create a powerful ensemble strategy? Join us in this discussion as we look to fit together three different strategies into our trading application. Traders often employ specialized strategies for opening and closing positions, and we want to know if our machines can perform this task better. For our opening discussion, we will get familiar with the features of the strategy tester and the principles of OOP we will need for this task.