Mastering Log Records (Part 6): Saving Logs to a Database

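Introduction

Imagine a bustling marketplace of digital transactions and financial wizardry, where every move is tracked, recorded and dissected in pursuit of success. What if you could not only consult a chronicle of every decision and error made by your Expert Advisors (EAs), but also hold the tools to optimize and fine-tune them in real time? That was the starting point of Mastering Log Records (Part 1): Fundamental Concepts and First Steps in MQL5, where we began building a logging library tailored to MQL5 development.

The goal of this series is to move beyond the limits of MetaTrader 5's default logging interface and build a robust, extensible and flexible logging solution for MQL5. The core requirements are already set: a reliable Singleton structure for consistency across the code, database logging for complete audit trails, flexible output destinations, log level classification, and customizable formats for different project needs.

Join us as we continue to explore how to turn raw data into actionable insight, so that you can understand, control and improve EA performance.

In this article we go from basic concepts to practical use and build a log handler that writes to, reads from and queries a database directly.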

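What Is a Database?

Logs are the pulse of a system: they record everything that happens behind the scenes. Storing them efficiently is another matter. So far we have saved logs in text files, which is simple and sufficient for many scenarios. Once the volume grows, though, searching through thousands of lines becomes a performance and management nightmare.

This is where databases come in. A database provides a structured, optimized way to store, query and organize information. Instead of combing through files by hand, a single query returns exactly what you need. But what is a database, and why does it matter so much?

The Structure of a Database

A database works like an intelligent storage system in which data is organized logically so that it is easy to search and manipulate. Think of it as a well-catalogued archive where every piece of information has a fixed place. For logs, this means that instead of scattered files we get structured records that can be filtered quickly by date, error type or any other criterion.

To make this easier to follow, we split the structure of a database into three basic components: tables, columns and rows.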

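- Table: the foundation. Like a spreadsheet, a table groups related data. For logging, we can create a table named "logs" that holds only log records. Each table is designed for one kind of data, which keeps access organized and efficient.

- Column: a data field of the table. Each column stores one category of information and defines its type. A log table might contain these columns:

  - id → unique identifier of the log record
  - timestamp → date and time of the record
  - level → log level (DEBUG, INFO, ERROR...)
  - message → the log text
  - source → origin (which system or module produced the record)

  Each column has a clear responsibility: timestamp stores dates, message stores text. This structure eliminates redundancy and improves search performance.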

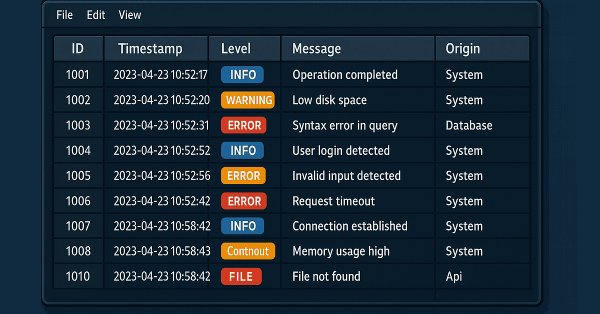

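- Row: a concrete record. Columns define what is stored; rows are the individual records in the table. Each row holds a complete set of values, one per column. Here is a sample log table:

| ID | Timestamp | Level | Message | Source |
|----|------------------|-------|-----------------------------------------|------------------|
| 1 | 2025-02-12 10:15 | DEBUG | RSI indicator value calculated: 72.56 | Indicators |
| 2 | 2025-02-12 10:16 | INFO | Buy order sent successfully | Order Management |
| 3 | 2025-02-12 10:17 | ALERT | Stop loss moved to breakeven | Risk Management |
| 4 | 2025-02-12 10:18 | ERROR | Failed to send sell order | Order Management |
| 5 | 2025-02-12 10:19 | FATAL | Failed to initialize EA: invalid settings | Initialization |

Each row is an independent record that describes a specific event.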
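To tie the table, column and row vocabulary to something runnable, here is a minimal script sketch that stores the five sample rows (with English message text) and then filters them with a single query. The file name db\\sample_logs.sqlite and the simplified five-column schema are assumptions made for this illustration only; the database functions it calls are introduced step by step in the sections that follow.

```mql5
//+------------------------------------------------------------------+
//| Script sketch: sample log table plus one filtered query          |
//+------------------------------------------------------------------+
void OnStart()
  {
   //--- Demo file under MQL5\Files (the file name is an assumption of this sketch)
   int db = DatabaseOpen("db\\sample_logs.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
   if(db == INVALID_HANDLE)
     {
      Print("Unable to open database, error ", GetLastError());
      return;
     }

   //--- Recreate the table so the script can be run repeatedly
   bool ok = DatabaseExecute(db, "DROP TABLE IF EXISTS logs;");
   ok = ok && DatabaseExecute(db, "CREATE TABLE logs (id INTEGER PRIMARY KEY, timestamp TEXT, level TEXT, message TEXT, source TEXT);");
   ok = ok && DatabaseExecute(db,
                              "INSERT INTO logs (timestamp, level, message, source) VALUES "
                              "('2025-02-12 10:15', 'DEBUG', 'RSI indicator value calculated: 72.56', 'Indicators'),"
                              "('2025-02-12 10:16', 'INFO',  'Buy order sent successfully', 'Order Management'),"
                              "('2025-02-12 10:17', 'ALERT', 'Stop loss moved to breakeven', 'Risk Management'),"
                              "('2025-02-12 10:18', 'ERROR', 'Failed to send sell order', 'Order Management'),"
                              "('2025-02-12 10:19', 'FATAL', 'Failed to initialize EA: invalid settings', 'Initialization');");

   //--- One query replaces a manual search through a file: rows 2 and 4 match
   if(ok)
      DatabasePrint(db, "SELECT id, timestamp, level, message FROM logs WHERE source = 'Order Management' ORDER BY id;", 0);
   else
      Print("Setup failed, error ", GetLastError());

   DatabaseClose(db);
  }
```

DatabasePrint() writes the result to the Experts journal, which is convenient for quick checks like this one.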

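Now that we understand how a database is structured, we can look at how to apply it in MQL5 to store and query logs efficiently.

Databases in MQL5

MQL5 can store and retrieve data in a structured way, but before implementing anything we need to understand the particularities of its database support.

Unlike languages aimed at web or enterprise applications, MQL5 has no native support for full-scale relational database servers such as MySQL or PostgreSQL. That does not leave us with text files only. There are two ways around this limitation:

- Use SQLite, a lightweight file-based database that MQL5 supports natively (see the MQL5 database functions).
- Establish an external connection through an API to integrate a more powerful database system.

For storing and querying logs efficiently, SQLite is the ideal choice: it is simple, fast, needs no dedicated server and fits our use case well. Before the implementation, let us look at the characteristics of a database based on a .sqlite file.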

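- Advantages

  - No server required: SQLite is an embedded database, so there is no server to install or configure.
  - Works out of the box: create a .sqlite file and start storing data.
  - Fast reads: because the data lives in a single file, SQLite reads small and medium data sets very efficiently.
  - Low latency: for simple queries it can be faster than a conventional client-server relational database.
  - High compatibility: it is supported by many programming languages.

- Disadvantages

  - Risk of file corruption: if the file is damaged, recovering the data can be complicated.
  - Manual backups: SQLite has no native automatic replication, so backing up means copying the .sqlite file by hand.
  - Limited scalability: SQLite is not the best choice for very large data volumes and highly concurrent access. Since our goal is local log storage, this is not a problem for us.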

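Now that we know the advantages and disadvantages of databases in MQL5, we can look at the basic operations needed to store and retrieve logs efficiently.

Basic Database Operations We Need

Before implementing the handler, we need to understand the basic operations we will perform on the database: creating tables, inserting new records, retrieving data and, when necessary, deleting or updating logs.

For logging, we usually store the date and time, the log level, the message and, where available, the name of the file or component that produced the entry. The table must therefore be designed so that these fields can be queried quickly and efficiently.

First, we create a simple test EA named DatabaseTest.mq5 in the Experts/Logify folder. Once created, the file looks like this: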

    //+------------------------------------------------------------------+
    //|                                                 DatabaseTest.mq5 |
    //|                                                     joaopedrodev |
    //|                       https://www.mql5.com/en/users/joaopedrodev |
    //+------------------------------------------------------------------+
    #property copyright "joaopedrodev"
    #property link      "https://www.mql5.com/en/users/joaopedrodev"
    #property version   "1.00"
    //+------------------------------------------------------------------+
    //| Import CLogify                                                   |
    //+------------------------------------------------------------------+
    #include <Logify/Logify.mqh>
    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //---

       //---
       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+

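Creating and Connecting to the Database

The first step is to create the database and connect to it. For this we use the DatabaseOpen() function, which takes two parameters:

- filename: the database file name (a path relative to the MQL5\Files folder).
- flags: a combination of values from the ENUM_DATABASE_OPEN_FLAGS enumeration that determines how the database is accessed. The available flags are:

  - DATABASE_OPEN_READONLY: read-only access.
  - DATABASE_OPEN_READWRITE: allows reading and writing.
  - DATABASE_OPEN_CREATE: creates the database on disk if it does not exist.
  - DATABASE_OPEN_MEMORY: creates a temporary database in memory.
  - DATABASE_OPEN_COMMON: the file is stored in the folder shared by all terminals.

In this example we use DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE, which creates the database automatically if it does not exist, so no manual check is needed.

DatabaseOpen() returns a database handle, which we store in a variable for later operations. The connection must also be closed after use, which is done with DatabaseClose().

The code now looks like this: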

    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Opening a database connection (path is relative to MQL5\Files)
       int dbHandle = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READWRITE|DATABASE_OPEN_CREATE);
       if(dbHandle == INVALID_HANDLE)
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Database error (Code: "+IntegerToString(GetLastError())+")");
          return(INIT_FAILED);
         }
       Print("Open database file");

       //--- Closing database after use (same handle that DatabaseOpen() returned)
       DatabaseClose(dbHandle);
       Print("Closed database file");
       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+
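Once a function has several exit paths, it is easy to miss a DatabaseClose() call on one of them. One possible way to guard against that, sketched here under the assumption that a small helper class is acceptable, is to tie the handle to an object whose destructor closes it. CScopedDatabase is a hypothetical name invented for this illustration; it is not part of MQL5 or of the Logify library.

```mql5
//+------------------------------------------------------------------+
//| Hypothetical helper: closes the database when it leaves scope    |
//+------------------------------------------------------------------+
class CScopedDatabase
  {
private:
   int               m_handle;   // handle returned by DatabaseOpen()
public:
                     CScopedDatabase(const string filename, const uint flags) { m_handle = DatabaseOpen(filename, flags); }
                    ~CScopedDatabase(void) { if(m_handle != INVALID_HANDLE) DatabaseClose(m_handle); }
   int               Handle(void) const { return(m_handle); }
   bool              IsOpen(void) const { return(m_handle != INVALID_HANDLE); }
  };
//+------------------------------------------------------------------+
//| Script entry point                                               |
//+------------------------------------------------------------------+
void OnStart()
  {
   CScopedDatabase db("db\\logs.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
   if(!db.IsOpen())
     {
      Print("Unable to open database, error ", GetLastError());
      return;   // nothing to close: the destructor skips invalid handles
     }
   Print("Database opened, handle ", db.Handle());
   //--- No explicit DatabaseClose(): the destructor runs when OnStart() returns
  }
```

The examples in this article keep the explicit DatabaseOpen()/DatabaseClose() pair, which is easier to follow while the functions are being introduced.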

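Now that we can open and close the database, it is time to structure the stored data. Let us start by creating our first table: logs.

Creating a Table

Before creating a table, we need to check whether it already exists. We use the DatabaseTableExists() function for that check. If the table is not yet in the database, we create it with a simple SQL command. SQL (Structured Query Language) is the standard language for interacting with databases; it is used to insert, query, modify or delete data. Think of SQL as the database's order form: you submit an order (an SQL statement) and the database returns what you asked for, provided the order is written correctly.

Next, we will see in actual code how to make sure the log table is created when needed.

For our purposes only a few SQL commands are required. The first one creates a table:

    CREATE TABLE {table_name} ({column_name} {type_data}, …);

- {table_name}: name of the table to create.
- {column_name} {type_data}: a column definition, where {type_data} specifies the storage type (text, number, date and so on).

We then run the command with the DatabaseExecute() function. The table structure is based on MqlLogifyModel and contains these fields: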

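- id: unique identifier of the row.
- formated: formatted message.
- levelname: name of the log level.
- msg: original message.
- args: message arguments.
- timestamp: date and time in numeric format.
- date_time: formatted date and time.
- level: severity level of the log.
- origin: origin of the log.
- filename: source file name.
- function: function that generated the log.
- line: line of code that generated the log.

The code now looks like this: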

    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Open the database connection
       int dbHandle = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
       if(dbHandle == INVALID_HANDLE)
         {
          Print("[ERROR] [" + TimeToString(TimeCurrent()) + "] Unable to open database (Error Code: " + IntegerToString(GetLastError()) + ")");
          return(INIT_FAILED);
         }
       Print("[INFO] Database connection opened successfully");

       //--- Create the 'logs' table if it does not exist
       if(!DatabaseTableExists(dbHandle, "logs"))
         {
          DatabaseExecute(dbHandle,
                          "CREATE TABLE logs ("
                          "id INTEGER PRIMARY KEY AUTOINCREMENT," // Auto-incrementing unique ID
                          "formated TEXT,"                        // Formatted log message
                          "levelname TEXT,"                       // Log level (INFO, ERROR, etc.)
                          "msg TEXT,"                             // Main log message
                          "args TEXT,"                            // Additional details
                          "timestamp BIGINT,"                     // Log event timestamp (Unix time)
                          "date_time DATETIME,"                   // Human-readable date and time
                          "level BIGINT,"                         // Log level as an integer
                          "origin TEXT,"                          // Module or component name
                          "filename TEXT,"                        // Source file name
                          "function TEXT,"                        // Function where the log was recorded
                          "line BIGINT);");                       // Source code line number
          Print("[INFO] 'logs' table created successfully");
         }

       //--- Close the database connection
       DatabaseClose(dbHandle);
       Print("[INFO] Database connection closed successfully");
       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+
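As an alternative to the DatabaseTableExists() check, SQLite itself accepts CREATE TABLE IF NOT EXISTS, which makes the statement safe to run on every start. The script below is a minimal sketch of that variant, not the approach used in the rest of this article; it also checks the return value of DatabaseExecute() so that a failed statement is reported.

```mql5
//+------------------------------------------------------------------+
//| Script sketch: idempotent table creation                         |
//+------------------------------------------------------------------+
void OnStart()
  {
   int db = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
   if(db == INVALID_HANDLE)
     {
      Print("Unable to open database, error ", GetLastError());
      return;
     }

   //--- Same columns as the 'logs' table in the article; running this twice is harmless
   string sql = "CREATE TABLE IF NOT EXISTS logs ("
                "id INTEGER PRIMARY KEY AUTOINCREMENT,"
                "formated TEXT,"
                "levelname TEXT,"
                "msg TEXT,"
                "args TEXT,"
                "timestamp BIGINT,"
                "date_time DATETIME,"
                "level BIGINT,"
                "origin TEXT,"
                "filename TEXT,"
                "function TEXT,"
                "line BIGINT);";

   if(!DatabaseExecute(db, sql))
      Print("CREATE TABLE failed, error ", GetLastError());
   else
      Print("Table 'logs' is available");

   DatabaseClose(db);
  }
```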

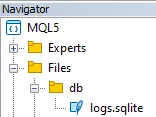

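This completes the creation of the database and the 'logs' table. After the table is created, the generated database file should appear in the Files folder of the file navigator: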

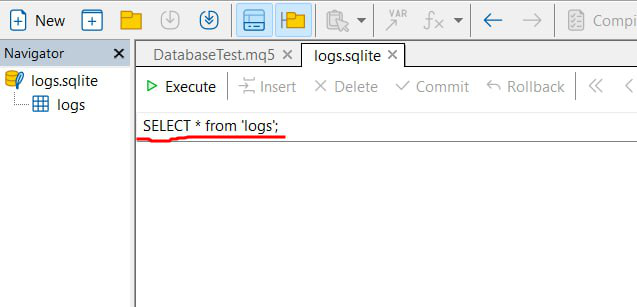

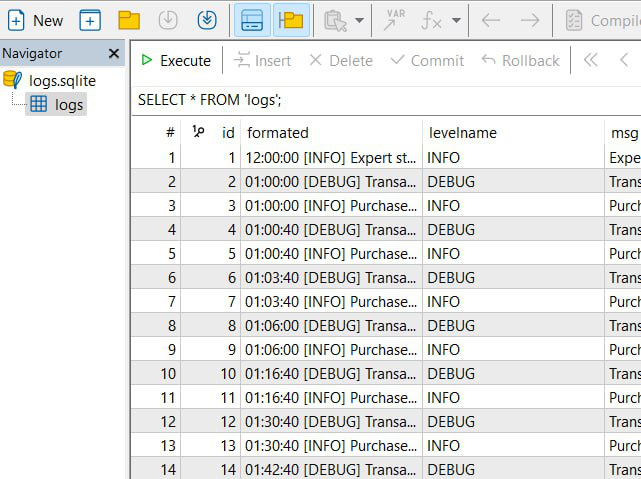

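When you click the database file, MetaEditor, which supports this format natively, opens it in an interface like the one in the screenshot.

This interface (the part highlighted in red in the screenshot) lets us browse the database contents and run SQL commands. We will use it often during development to inspect the data from the editor.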

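How to Insert Data into the Database

In SQL, the standard command for inserting data into a table is:

    INSERT INTO {table_name} ({column}, ...) VALUES ({value}, ...)

In MQL5, dedicated functions make this operation more direct and less error-prone. The main ones are:

- DatabasePrepare(): compiles an SQL query and returns a handle to the prepared request. This is the first step for the database to interpret the query.
- DatabaseBind(): binds actual values to the query parameters. In the SQL command, parameters are written as placeholders (?1, ?2 and so on) that are replaced by the supplied values at execution time.
- DatabaseRead(): executes the prepared query. For commands that return no records, such as INSERT, it makes sure the command runs to completion; for queries, it advances to the next record.
- DatabaseFinalize(): releases the resources used by the query. It must be called once the prepared query is no longer needed, to avoid memory leaks.

When writing an insert query, we mark the positions of the values to bind with ? placeholders. The following command inserts a new record into the log table (the columns match the table created above):

    INSERT INTO logs (formated, levelname, msg, args, timestamp, date_time, level, origin, filename, function, line) VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7, ?8, ?9, ?10, ?11);

Every field is listed except id, which the database generates automatically. The values are marked with the placeholders ?1, ?2 and so on; each number identifies a parameter, to which DatabaseBind() later binds the actual value. Note that DatabaseBind() uses zero-based indexes, so index 0 fills ?1, index 1 fills ?2, and so on.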

    //--- Prepare SQL statement for inserting a log entry
    string sql = "INSERT INTO logs (formated, levelname, msg, args, timestamp, date_time, level, origin, filename, function, line) "
                 "VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7, ?8, ?9, ?10, ?11);";
    int sqlRequest = DatabasePrepare(dbHandle, sql);
    if(sqlRequest == INVALID_HANDLE)
      {
       Print("[ERROR] Failed to prepare SQL statement for log insertion");
      }

    //--- Bind values to the SQL statement (zero-based index: 0 fills ?1)
    DatabaseBind(sqlRequest, 0, "06:24:00 [INFO] Buy order sent successfully"); // Formatted log message
    DatabaseBind(sqlRequest, 1, "INFO");                                        // Log level name
    DatabaseBind(sqlRequest, 2, "Buy order sent successfully");                 // Main log message
    DatabaseBind(sqlRequest, 3, "Symbol: EURUSD, Volume: 0.1");                 // Additional details
    DatabaseBind(sqlRequest, 4, 1739471040);                                    // Unix timestamp
    DatabaseBind(sqlRequest, 5, "2025.02.13 18:24:00");                         // Readable date and time
    DatabaseBind(sqlRequest, 6, 1);                                             // Log level as integer
    DatabaseBind(sqlRequest, 7, "Order Management");                            // Module or component name
    DatabaseBind(sqlRequest, 8, "File.mq5");                                    // Source file name
    DatabaseBind(sqlRequest, 9, "OnInit");                                      // Function name
    DatabaseBind(sqlRequest, 10, 100);                                          // Line number

Once all values are bound, DatabaseRead() executes the prepared query. On success a confirmation message is printed; on failure an error is reported:

    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Open the database connection
       int dbHandle = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
       if(dbHandle == INVALID_HANDLE)
         {
          Print("[ERROR] [" + TimeToString(TimeCurrent()) + "] Unable to open database (Error Code: " + IntegerToString(GetLastError()) + ")");
          return(INIT_FAILED);
         }
       Print("[INFO] Database connection opened successfully");

       //--- Create the 'logs' table if it does not exist
       if(!DatabaseTableExists(dbHandle, "logs"))
         {
          DatabaseExecute(dbHandle,
                          "CREATE TABLE logs ("
                          "id INTEGER PRIMARY KEY AUTOINCREMENT," // Auto-incrementing unique ID
                          "formated TEXT,"                        // Formatted log message
                          "levelname TEXT,"                       // Log level (INFO, ERROR, etc.)
                          "msg TEXT,"                             // Main log message
                          "args TEXT,"                            // Additional details
                          "timestamp BIGINT,"                     // Log event timestamp (Unix time)
                          "date_time DATETIME,"                   // Human-readable date and time
                          "level BIGINT,"                         // Log level as an integer
                          "origin TEXT,"                          // Module or component name
                          "filename TEXT,"                        // Source file name
                          "function TEXT,"                        // Function where the log was recorded
                          "line BIGINT);");                       // Source code line number
          Print("[INFO] 'logs' table created successfully");
         }

       //--- Prepare SQL statement for inserting a log entry
       string sql = "INSERT INTO logs (formated, levelname, msg, args, timestamp, date_time, level, origin, filename, function, line) "
                    "VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7, ?8, ?9, ?10, ?11);";
       int sqlRequest = DatabasePrepare(dbHandle, sql);
       if(sqlRequest == INVALID_HANDLE)
         {
          Print("[ERROR] Failed to prepare SQL statement for log insertion");
          DatabaseClose(dbHandle);
          return(INIT_FAILED);
         }

       //--- Bind values to the SQL statement (zero-based index: 0 fills ?1)
       DatabaseBind(sqlRequest, 0, "06:24:00 [INFO] Buy order sent successfully"); // Formatted log message
       DatabaseBind(sqlRequest, 1, "INFO");                                        // Log level name
       DatabaseBind(sqlRequest, 2, "Buy order sent successfully");                 // Main log message
       DatabaseBind(sqlRequest, 3, "Symbol: EURUSD, Volume: 0.1");                 // Additional details
       DatabaseBind(sqlRequest, 4, 1739471040);                                    // Unix timestamp
       DatabaseBind(sqlRequest, 5, "2025.02.13 18:24:00");                         // Readable date and time
       DatabaseBind(sqlRequest, 6, 1);                                             // Log level as integer
       DatabaseBind(sqlRequest, 7, "Order Management");                            // Module or component name
       DatabaseBind(sqlRequest, 8, "File.mq5");                                    // Source file name
       DatabaseBind(sqlRequest, 9, "OnInit");                                      // Function name
       DatabaseBind(sqlRequest, 10, 100);                                          // Line number

       //--- Execute the SQL statement. A statement that returns no rows can make
       //--- DatabaseRead() return false with ERR_DATABASE_NO_MORE_DATA, which is not a failure
       ResetLastError();
       if(!DatabaseRead(sqlRequest) && GetLastError() != ERR_DATABASE_NO_MORE_DATA)
         {
          Print("[ERROR] SQL insertion request failed");
         }
       else
         {
          Print("[INFO] Log entry inserted successfully");
         }

       //--- Release the prepared statement
       DatabaseFinalize(sqlRequest);

       //--- Close the database connection
       DatabaseClose(dbHandle);
       Print("[INFO] Database connection closed successfully");
       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+

After running the EA, the following messages appear in the console:

    [INFO] Database connection opened successfully
    [INFO] 'logs' table created successfully
    [INFO] Log entry inserted successfully
    [INFO] Database connection closed successfully

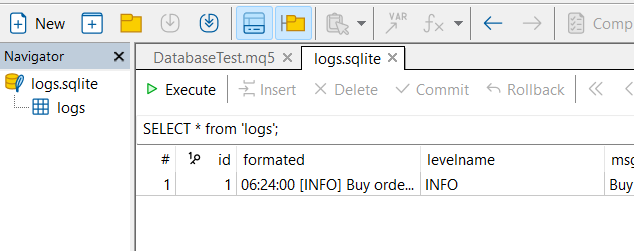

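In addition, when you open the database in the editor, the log table shows all the inserted data, as shown in the screenshot.

How to Read Data from the Database

Reading data is very similar to inserting records, except that the goal is to retrieve information that is already stored. In MQL5 the basic flow is:

- Prepare the SQL query: DatabasePrepare() creates a handle for the query to execute.
- Execute the query: given the handle of the prepared query, DatabaseRead() executes it and positions the cursor on the first record of the result; each further call moves to the next record.
- Extract the data: from the current record, read each column with the function that matches the expected type:

  - DatabaseColumnText(): gets a field of the current record as a string
  - DatabaseColumnInteger(): gets an int value from the current record
  - DatabaseColumnLong(): gets a long value from the current record
  - DatabaseColumnDouble(): gets a double value from the current record
  - DatabaseColumnBlob(): gets a field of the current record as an array

With these steps you can build a simple, efficient retrieval flow and use the data as your application requires.

As an example, suppose you want to retrieve all records from the log table. The SQL query is simple:

    SELECT * FROM logs

This query selects every column of every record. In MQL5 we create the query handle with DatabasePrepare(), exactly as we did for the insert. Because SELECT * also returns the id column at index 0, the log fields start at column index 1.

The final code looks like this: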

    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Open the database connection
       int dbHandle = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
       if(dbHandle == INVALID_HANDLE)
         {
          Print("[ERROR] [" + TimeToString(TimeCurrent()) + "] Unable to open database (Error Code: " + IntegerToString(GetLastError()) + ")");
          return INIT_FAILED;
         }
       Print("[INFO] Database connection opened successfully.");

       //--- Create the 'logs' table if it doesn't exist
       if(!DatabaseTableExists(dbHandle, "logs"))
         {
          string createTableSQL = "CREATE TABLE logs ("
                                  "id INTEGER PRIMARY KEY AUTOINCREMENT," // Auto-incrementing unique ID
                                  "formated TEXT,"                        // Formatted log message
                                  "levelname TEXT,"                       // Log level name (INFO, ERROR, etc.)
                                  "msg TEXT,"                             // Main log message
                                  "args TEXT,"                            // Additional arguments/details
                                  "timestamp BIGINT,"                     // Timestamp of the log event
                                  "date_time DATETIME,"                   // Human-readable date and time
                                  "level BIGINT,"                         // Log level as an integer
                                  "origin TEXT,"                          // Module or component name
                                  "filename TEXT,"                        // Source file name
                                  "function TEXT,"                        // Function where the log was recorded
                                  "line BIGINT);";                        // Line number in the source code
          DatabaseExecute(dbHandle, createTableSQL);
          Print("[INFO] 'logs' table created successfully.");
         }

       //--- Prepare SQL statement to retrieve log entries
       string sqlQuery = "SELECT * FROM logs";
       int sqlRequest = DatabasePrepare(dbHandle, sqlQuery);
       if(sqlRequest == INVALID_HANDLE)
         {
          Print("[ERROR] Failed to prepare SQL statement.");
          DatabaseClose(dbHandle);
          return INIT_FAILED;
         }

       //--- Execute the SQL statement (positions the cursor on the first record)
       if(!DatabaseRead(sqlRequest))
         {
          Print("[ERROR] SQL query execution failed.");
         }
       else
         {
          Print("[INFO] SQL query executed successfully.");

          //--- Copy the first record into the log data model (column 0 is the id)
          MqlLogifyModel logData;
          DatabaseColumnText(sqlRequest, 1, logData.formated);
          DatabaseColumnText(sqlRequest, 2, logData.levelname);
          DatabaseColumnText(sqlRequest, 3, logData.msg);
          DatabaseColumnText(sqlRequest, 4, logData.args);
          DatabaseColumnLong(sqlRequest, 5, logData.timestamp);
          string dateTimeStr;
          DatabaseColumnText(sqlRequest, 6, dateTimeStr);
          logData.date_time = StringToTime(dateTimeStr);
          DatabaseColumnInteger(sqlRequest, 7, logData.level);
          DatabaseColumnText(sqlRequest, 8, logData.origin);
          DatabaseColumnText(sqlRequest, 9, logData.filename);
          DatabaseColumnText(sqlRequest, 10, logData.function);
          DatabaseColumnLong(sqlRequest, 11, logData.line);

          Print("[INFO] Data retrieved: Formatted = ", logData.formated, " | Level = ", logData.level, " | Origin = ", logData.origin);
         }

       //--- Release the prepared statement
       DatabaseFinalize(sqlRequest);

       //--- Close the database connection
       DatabaseClose(dbHandle);
       Print("[INFO] Database connection closed successfully.");
       return INIT_SUCCEEDED;
      }
    //+------------------------------------------------------------------+

Running the code gives the following result:

    [INFO] Database connection opened successfully.
    [INFO] SQL query executed successfully.
    [INFO] Data retrieved: Formatted = 06:24:00 [INFO] Buy order sent successfully | Level = 1 | Origin = Order Management
    [INFO] Database connection closed successfully.
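The example above reads only the first record of the result. Since each successful DatabaseRead() call advances to the next record, walking through the whole result set is a matter of calling it in a loop. A minimal script sketch, assuming the db\\logs.sqlite file and the logs table created earlier already exist:

```mql5
//+------------------------------------------------------------------+
//| Script sketch: iterate over every record of a query              |
//+------------------------------------------------------------------+
void OnStart()
  {
   int db = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READONLY);
   if(db == INVALID_HANDLE)
     {
      Print("Unable to open database, error ", GetLastError());
      return;
     }

   //--- Naming the columns fixes their order: id=0, levelname=1, msg=2
   int request = DatabasePrepare(db, "SELECT id, levelname, msg FROM logs ORDER BY id;");
   if(request == INVALID_HANDLE)
     {
      Print("Unable to prepare query, error ", GetLastError());
      DatabaseClose(db);
      return;
     }

   long   id        = 0;
   string levelname = "";
   string msg       = "";
   int    rows      = 0;

   //--- The loop ends when DatabaseRead() has no more records to return
   while(DatabaseRead(request))
     {
      if(DatabaseColumnLong(request, 0, id) &&
         DatabaseColumnText(request, 1, levelname) &&
         DatabaseColumnText(request, 2, msg))
         Print("#", id, " [", levelname, "] ", msg);
      rows++;
     }
   Print("Rows read: ", rows);

   DatabaseFinalize(request);
   DatabaseClose(db);
  }
```

Listing the columns explicitly instead of using SELECT * keeps the column indexes stable even if the table gains new columns later.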

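With these basic operations in place we can set up the database handler. Next, we prepare what is needed to integrate the database with the logging library.

Configuring the Database Handler

To store logs in a database, the handler needs its own configuration. We define a configuration struct along the same lines as the file handler's. Let us create a struct named MqlLogifyHandleDatabaseConfig, starting from the existing template and modifying it as needed: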

    struct MqlLogifyHandleDatabaseConfig
      {
       string                  directory;          // Directory for log files
       string                  base_filename;      // Base file name
       ENUM_LOG_FILE_EXTENSION file_extension;     // [remove] File extension type
       ENUM_LOG_ROTATION_MODE  rotation_mode;      // [remove] Rotation mode
       int                     messages_per_flush; // Messages before flushing
       uint                    codepage;           // [remove] Encoding (e.g., UTF-8, ANSI)
       ulong                   max_file_size_mb;   // [remove] Max file size in MB for rotation
       int                     max_file_count;     // [remove] Max number of files before deletion

       //--- Default constructor
       MqlLogifyHandleDatabaseConfig(void)
         {
          directory          = "logs";                  // Default directory
          base_filename      = "expert";                // Default base name
          file_extension     = LOG_FILE_EXTENSION_LOG;  // [remove] Default to .log extension
          rotation_mode      = LOG_ROTATION_MODE_SIZE;  // [remove] Default size-based rotation
          messages_per_flush = 100;                     // Default flush threshold
          codepage           = CP_UTF8;                 // [remove] Default UTF-8 encoding
          max_file_size_mb   = 5;                       // [remove] Default max file size in MB
          max_file_count     = 10;                      // [remove] Default max file count
         }
      };

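The properties to remove (highlighted in red in the original article) are file_extension, rotation_mode, codepage, max_file_size_mb and max_file_count: file type, rotation, encoding and file limits have no meaning for a database. After defining the remaining properties, we adjust the ValidateConfig() method. The final code is: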

    //+------------------------------------------------------------------+
    //| Struct: MqlLogifyHandleDatabaseConfig                            |
    //+------------------------------------------------------------------+
    struct MqlLogifyHandleDatabaseConfig
      {
       string directory;          // Directory for log files
       string base_filename;      // Base file name
       int    messages_per_flush; // Messages before flushing

       //--- Default constructor
       MqlLogifyHandleDatabaseConfig(void)
         {
          directory          = "logs";   // Default directory
          base_filename      = "expert"; // Default base name
          messages_per_flush = 100;      // Default flush threshold
         }

       //--- Destructor
       ~MqlLogifyHandleDatabaseConfig(void)
         {
         }

       //--- Validate configuration (invalid values are replaced by defaults)
       bool ValidateConfig(string &error_message)
         {
          //--- Saves the return value
          bool is_valid = true;

          //--- Check if the directory is not empty
          if(directory == "")
            {
             directory = "logs";
             error_message = "The directory cannot be empty.";
             is_valid = false;
            }

          //--- Check if the base filename is not empty
          if(base_filename == "")
            {
             base_filename = "expert";
             error_message = "The base filename cannot be empty.";
             is_valid = false;
            }

          //--- Check if the number of messages per flush is positive
          if(messages_per_flush <= 0)
            {
             messages_per_flush = 100;
             error_message = "The number of messages per flush must be greater than zero.";
             is_valid = false;
            }

          //--- Return the validation result
          return(is_valid);
         }
      };
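One detail of ValidateConfig() is that error_message is overwritten by each failing check, so only the last problem is reported. If reporting every problem matters, a possible variant appends the messages instead. The sketch below uses a hypothetical struct, DemoDatabaseConfig, written only for this illustration so that the script is self-contained; it is not the struct used by the library.

```mql5
//+------------------------------------------------------------------+
//| Hypothetical variant: collects every validation message          |
//+------------------------------------------------------------------+
struct DemoDatabaseConfig
  {
   string directory;
   string base_filename;
   int    messages_per_flush;

   //--- Appends one message per problem and falls back to defaults
   bool ValidateConfig(string &error_message)
     {
      error_message = "";
      if(directory == "")
        {
         directory = "logs";
         error_message += "The directory cannot be empty. ";
        }
      if(base_filename == "")
        {
         base_filename = "expert";
         error_message += "The base filename cannot be empty. ";
        }
      if(messages_per_flush <= 0)
        {
         messages_per_flush = 100;
         error_message += "The number of messages per flush must be greater than zero. ";
        }
      //--- Valid only when nothing was appended
      return(error_message == "");
     }
  };
//+------------------------------------------------------------------+
//| Script entry point                                               |
//+------------------------------------------------------------------+
void OnStart()
  {
   DemoDatabaseConfig config;
   config.directory          = "";
   config.base_filename      = "";
   config.messages_per_flush = 0;

   string err = "";
   if(!config.ValidateConfig(err))
      Print("Invalid configuration: ", err);

   //--- After validation the fields hold the fallback defaults
   Print("Effective values: ", config.directory, " / ", config.base_filename, " / ", config.messages_per_flush);
  }
```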

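With the configuration ready, we can finally implement the database handler.

Implementing the Database Handler

With the configuration struct in place, it is time for the practical part: implementing the database handler. I will go through each step, explain the design decisions and keep the handler extensible for future improvements.

We start by creating the CLogifyHandlerDatabase class, derived from the base handler class CLogifyHandler. The class stores the handler configuration, a time-control utility (CIntervalWatcher) and a cache of log messages. The cache avoids frequent writes to the database: log messages are held temporarily and then written in batches.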

    class CLogifyHandlerDatabase : public CLogifyHandler
      {
    private:
       //--- Config
       MqlLogifyHandleDatabaseConfig m_config;

       //--- Update utilities
       CIntervalWatcher  m_interval_watcher;

       //--- Cache data
       MqlLogifyModel    m_cache[];
       int               m_index_cache;

    public:
                         CLogifyHandlerDatabase(void);
                        ~CLogifyHandlerDatabase(void);

       //--- Configuration management
       void              SetConfig(MqlLogifyHandleDatabaseConfig &config);
       MqlLogifyHandleDatabaseConfig GetConfig(void);

       virtual void      Emit(MqlLogifyModel &data); // Processes a log message and sends it to the specified destination
       virtual void      Flush(void);                // Clears or completes any pending operations
       virtual void      Close(void);                // Closes the handler and releases any resources
      };

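The constructor initializes the class attributes: it sets the handler name to "database", sets the interval of m_interval_watcher (PERIOD_D1) and clears the cache. The destructor calls Close() so that all pending logs are written before the object is destroyed.

Another important method is SetConfig(), which lets the user configure the handler: it stores the configuration and validates it to prevent errors. GetConfig() simply returns the current configuration.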

    CLogifyHandlerDatabase::CLogifyHandlerDatabase(void)
      {
       m_name = "database";
       m_interval_watcher.SetInterval(PERIOD_D1);
       ArrayFree(m_cache);
       m_index_cache = 0;
      }

    CLogifyHandlerDatabase::~CLogifyHandlerDatabase(void)
      {
       this.Close();
      }

    void CLogifyHandlerDatabase::SetConfig(MqlLogifyHandleDatabaseConfig &config)
      {
       m_config = config;

       string err_msg = "";
       if(!m_config.ValidateConfig(err_msg))
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: "+err_msg);
         }
      }

    MqlLogifyHandleDatabaseConfig CLogifyHandlerDatabase::GetConfig(void)
      {
       return(m_config);
      }

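Now for the core function of the database handler: storing log records. We implement the three basic methods that every handler must have:

- Emit(MqlLogifyModel &data): processes a log message and adds it to the cache.
- Flush(): completes any pending operations by writing the cached data to the destination (file, console, database and so on).
- Close(): closes the handler and releases its resources.

First comes Emit(), which adds the data to the cache and calls Flush() to write it out once the cache reaches its configured capacity or m_interval_watcher.Inspect() returns true.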

    //+------------------------------------------------------------------+
    //| Processes a log message and sends it to the specified destination|
    //+------------------------------------------------------------------+
    void CLogifyHandlerDatabase::Emit(MqlLogifyModel &data)
      {
       //--- Checks if the configured level allows
       if(data.level >= this.GetLevel())
         {
          //--- Resize cache if necessary
          int size = ArraySize(m_cache);
          if(size != m_config.messages_per_flush)
            {
             ArrayResize(m_cache, m_config.messages_per_flush);
             size = m_config.messages_per_flush;
            }

          //--- Add log to cache
          m_cache[m_index_cache++] = data;

          //--- Flush if cache limit is reached or update condition is met
          if(m_index_cache >= m_config.messages_per_flush || m_interval_watcher.Inspect())
            {
             //--- Save cache
             Flush();

             //--- Reset cache
             m_index_cache = 0;
             for(int i=0;i<size;i++)
               {
                m_cache[i].Reset();
               }
            }
         }
      }
    //+------------------------------------------------------------------+

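Next is the Flush() method. It reads the data from the cache and writes it to the database with DatabasePrepare(), following the flow described earlier in the section "How to Insert Data into the Database".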

    //+------------------------------------------------------------------+
    //| Clears or completes any pending operations                       |
    //+------------------------------------------------------------------+
    void CLogifyHandlerDatabase::Flush(void)
      {
       //--- Get the full path of the file
       string path = m_config.directory+"\\"+m_config.base_filename+".sqlite";

       //--- Open database
       ResetLastError();
       int handle_db = DatabaseOpen(path,DATABASE_OPEN_CREATE|DATABASE_OPEN_READWRITE);
       if(handle_db == INVALID_HANDLE)
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: Unable to open log file '"+path+"'. Ensure the directory exists and is writable. (Code: "+IntegerToString(GetLastError())+")");
          return;
         }

       //--- Create the 'logs' table if it does not exist
       if(!DatabaseTableExists(handle_db,"logs"))
         {
          DatabaseExecute(handle_db,
                          "CREATE TABLE logs ("
                          "id INTEGER PRIMARY KEY AUTOINCREMENT,"
                          "formated TEXT,"
                          "levelname TEXT,"
                          "msg TEXT,"
                          "args TEXT,"
                          "timestamp BIGINT,"
                          "date_time DATETIME,"
                          "level BIGINT,"
                          "origin TEXT,"
                          "filename TEXT,"
                          "function TEXT,"
                          "line BIGINT);");
         }

       //--- Prepare the parameterized insert query
       string sql="INSERT INTO logs (formated, levelname, msg, args, timestamp, date_time, level, origin, filename, function, line) VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7, ?8, ?9, ?10, ?11);";
       int request = DatabasePrepare(handle_db,sql);
       if(request == INVALID_HANDLE)
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: Unable to prepare insert query. (Code: "+IntegerToString(GetLastError())+")");
          DatabaseClose(handle_db);
          return;
         }

       //--- Loop through all cached messages
       int size = ArraySize(m_cache);
       for(int i=0;i<size;i++)
         {
          //--- Skip empty cache slots
          if(m_cache[i].timestamp > 0)
            {
             DatabaseBind(request,0,m_cache[i].formated);
             DatabaseBind(request,1,m_cache[i].levelname);
             DatabaseBind(request,2,m_cache[i].msg);
             DatabaseBind(request,3,m_cache[i].args);
             DatabaseBind(request,4,m_cache[i].timestamp);
             DatabaseBind(request,5,TimeToString(m_cache[i].date_time,TIME_DATE|TIME_MINUTES|TIME_SECONDS));
             DatabaseBind(request,6,(int)m_cache[i].level);
             DatabaseBind(request,7,m_cache[i].origin);
             DatabaseBind(request,8,m_cache[i].filename);
             DatabaseBind(request,9,m_cache[i].function);
             DatabaseBind(request,10,m_cache[i].line);

             //--- Execute the insert, then reset the request so it can be reused
             DatabaseRead(request);
             DatabaseReset(request);
            }
         }

       //--- Release the prepared query
       DatabaseFinalize(request);

       //--- Close database
       DatabaseClose(handle_db);
      }
    //+------------------------------------------------------------------+
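Flush() issues each INSERT on its own. SQLite commits every statement that runs outside an explicit transaction separately, so one common way to speed up a batch is to wrap it in a single transaction. The script below is a standalone sketch of that idea using the built-in DatabaseTransactionBegin(), DatabaseTransactionCommit() and DatabaseTransactionRollback() functions. The file name db\\batch_demo.sqlite and the batch_demo table are assumptions made for this illustration; the handler in this article does not use transactions.

```mql5
//+------------------------------------------------------------------+
//| Script sketch: batch insert inside one transaction               |
//+------------------------------------------------------------------+
void OnStart()
  {
   int db = DatabaseOpen("db\\batch_demo.sqlite", DATABASE_OPEN_READWRITE | DATABASE_OPEN_CREATE);
   if(db == INVALID_HANDLE)
     {
      Print("Unable to open database, error ", GetLastError());
      return;
     }
   if(!DatabaseExecute(db, "CREATE TABLE IF NOT EXISTS batch_demo (id INTEGER PRIMARY KEY AUTOINCREMENT, msg TEXT);"))
     {
      Print("Unable to create table, error ", GetLastError());
      DatabaseClose(db);
      return;
     }

   int request = DatabasePrepare(db, "INSERT INTO batch_demo (msg) VALUES (?1);");
   if(request == INVALID_HANDLE)
     {
      Print("Unable to prepare insert, error ", GetLastError());
      DatabaseClose(db);
      return;
     }

   //--- One transaction for the whole batch: a single commit instead of one per row
   bool ok = DatabaseTransactionBegin(db);
   for(int i = 0; i < 100 && ok; i++)
     {
      ok = DatabaseBind(request, 0, "message #" + IntegerToString(i));
      if(ok)
        {
         //--- An INSERT returns no rows, so ERR_DATABASE_NO_MORE_DATA is not an error here
         ResetLastError();
         if(!DatabaseRead(request) && GetLastError() != ERR_DATABASE_NO_MORE_DATA)
            ok = false;
        }
      DatabaseReset(request);   // make the prepared request reusable
     }

   //--- Keep everything or nothing
   if(ok)
      ok = DatabaseTransactionCommit(db);
   else
      DatabaseTransactionRollback(db);
   Print(ok ? "Batch committed" : "Batch rolled back");

   DatabaseFinalize(request);
   DatabaseClose(db);
  }
```

A side effect of this design is that a failed batch leaves no partial rows behind, which may or may not be what a logging handler wants; for logs, losing a whole batch because of one bad row can be worse than writing the rest.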

Finally, the Close() method makes sure all pending logs are written before exit.

    void CLogifyHandlerDatabase::Close(void)
      {
       Flush();
      }

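We now have a log handler that stores logs in batches and writes any pending entries before it is destroyed. With the handler ready to record logs, the next step is to create efficient ways to query them. The idea is a generic base method, Query(), that receives an SQL command as a string and fills an array of MqlLogifyModel. On top of it we can build specific methods for frequent queries. Query() opens the database connection, executes the query and stores the results in the log data structure, as implemented in the following code.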

    class CLogifyHandlerDatabase : public CLogifyHandler
      {
    public:
       //--- Query methods
       bool              Query(string query, MqlLogifyModel &data[]);
      };
    //+------------------------------------------------------------------+
    //| Get data by sql command                                          |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::Query(string query, MqlLogifyModel &data[])
      {
       //--- Get the full path of the file
       string path = m_config.directory+"\\"+m_config.base_filename+".sqlite";

       //--- Open database
       ResetLastError();
       int handle_db = DatabaseOpen(path,DATABASE_OPEN_READWRITE);
       if(handle_db == INVALID_HANDLE)
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: Unable to open log file '"+path+"'. Ensure the directory exists and is writable. (Code: "+IntegerToString(GetLastError())+")");
          return(false);
         }

       //--- Prepare the SQL query
       int request = DatabasePrepare(handle_db,query);
       if(request == INVALID_HANDLE)
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: Unable to prepare query. (Code: "+IntegerToString(GetLastError())+")");
          DatabaseClose(handle_db);
          return(false);
         }

       //--- Clears array before inserting new data
       ArrayFree(data);

       //--- Reads query results line by line
       for(int i=0;DatabaseRead(request);i++)
         {
          int size = ArraySize(data);
          ArrayResize(data,size+1,size);

          //--- Maps database data to the MqlLogifyModel model (column 0 is the id)
          DatabaseColumnText(request,1,data[size].formated);
          DatabaseColumnText(request,2,data[size].levelname);
          DatabaseColumnText(request,3,data[size].msg);
          DatabaseColumnText(request,4,data[size].args);
          DatabaseColumnLong(request,5,data[size].timestamp);
          string value;
          DatabaseColumnText(request,6,value);
          data[size].date_time = StringToTime(value);
          DatabaseColumnInteger(request,7,data[size].level);
          DatabaseColumnText(request,8,data[size].origin);
          DatabaseColumnText(request,9,data[size].filename);
          DatabaseColumnText(request,10,data[size].function);
          DatabaseColumnLong(request,11,data[size].line);
         }

       //--- Ends the query (request handle) and closes the database
       DatabaseFinalize(request);
       DatabaseClose(handle_db);
       return(true);
      }
    //+------------------------------------------------------------------+

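This method gives us full flexibility: any SQL query can be run against the log database. To make it easier to use, we add helper methods that wrap common queries.

So that developers do not have to write SQL every time they query the logs, I created methods with the most common SQL commands built in. They act as shortcuts for filtering logs by level, date, origin, message, arguments, file name and function name. These are the SQL commands for each filter: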

    SELECT * FROM 'logs' WHERE level=1;
    SELECT * FROM 'logs' WHERE timestamp BETWEEN '{start_time}' AND '{stop_time}';
    SELECT * FROM 'logs' WHERE origin LIKE '%{origin}%';
    SELECT * FROM 'logs' WHERE msg LIKE '%{msg}%';
    SELECT * FROM 'logs' WHERE args LIKE '%{args}%';
    SELECT * FROM 'logs' WHERE filename LIKE '%{filename}%';
    SELECT * FROM 'logs' WHERE function LIKE '%{function}%';

Now we implement the methods that use these commands:

    class CLogifyHandlerDatabase : public CLogifyHandler
      {
    public:
       //--- Query methods
       bool              Query(string query, MqlLogifyModel &data[]);
       bool              QueryByLevel(ENUM_LOG_LEVEL level, MqlLogifyModel &data[]);
       bool              QueryByDate(datetime start_time, datetime stop_time, MqlLogifyModel &data[]);
       bool              QueryByOrigin(string origin, MqlLogifyModel &data[]);
       bool              QueryByMsg(string msg, MqlLogifyModel &data[]);
       bool              QueryByArgs(string args, MqlLogifyModel &data[]);
       bool              QueryByFile(string file, MqlLogifyModel &data[]);
       bool              QueryByFunction(string function, MqlLogifyModel &data[]);
      };
    //+------------------------------------------------------------------+
    //| Get logs filtering by level                                      |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByLevel(ENUM_LOG_LEVEL level, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE level="+IntegerToString(level)+";",data));
      }
    //+------------------------------------------------------------------+
    //| Get logs filtering by start and stop time                        |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByDate(datetime start_time, datetime stop_time, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE timestamp BETWEEN '"+IntegerToString((ulong)start_time)+"' AND '"+IntegerToString((ulong)stop_time)+"';",data));
      }
    //+------------------------------------------------------------------+
    //| Get logs filtering by origin                                     |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByOrigin(string origin, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE origin LIKE '%"+origin+"%';",data));
      }
    //+------------------------------------------------------------------+
    //| Get logs filtering by message                                    |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByMsg(string msg, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE msg LIKE '%"+msg+"%';",data));
      }
    //+------------------------------------------------------------------+
    //| Get logs filtering by args                                       |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByArgs(string args, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE args LIKE '%"+args+"%';",data));
      }
    //+------------------------------------------------------------------+
    //| Get logs filtering by file name                                  |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByFile(string file, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE filename LIKE '%"+file+"%';",data));
      }
    //+------------------------------------------------------------------+
    //| Get logs filtering by function name                              |
    //+------------------------------------------------------------------+
    bool CLogifyHandlerDatabase::QueryByFunction(string function, MqlLogifyModel &data[])
      {
       return(this.Query("SELECT * FROM 'logs' WHERE function LIKE '%"+function+"%';",data));
      }
    //+------------------------------------------------------------------+
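Because these helpers build the SQL text by string concatenation, a search value that contains a single quote ends the SQL string literal early and breaks the query. A small precaution is to double every single quote before inserting the value. EscapeSqlText() below is a hypothetical helper written for this illustration; it is not part of the library, and binding the value with DatabaseBind() remains the more robust option where a prepared request is available.

```mql5
//+------------------------------------------------------------------+
//| Hypothetical helper: doubles single quotes in a text value       |
//+------------------------------------------------------------------+
string EscapeSqlText(string value)
  {
   //--- In SQL, '' inside a string literal stands for one quote character
   StringReplace(value, "'", "''");
   return(value);
  }
//+------------------------------------------------------------------+
//| Script entry point                                               |
//+------------------------------------------------------------------+
void OnStart()
  {
   string origin = "Trader's module";

   //--- Unescaped: the quote in the value closes the literal too early
   string broken = "SELECT * FROM 'logs' WHERE origin LIKE '%" + origin + "%';";

   //--- Escaped: the value stays inside the literal
   string safe   = "SELECT * FROM 'logs' WHERE origin LIKE '%" + EscapeSqlText(origin) + "%';";

   Print(broken);
   Print(safe);   // SELECT * FROM 'logs' WHERE origin LIKE '%Trader''s module%';
  }
```

Note that this only handles quotes; the LIKE wildcards % and _ inside the value still act as wildcards.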

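We now have an efficient and flexible set of methods for accessing logs directly from the database. Query() executes any SQL command, including more complex statements with several filters, while the helper methods wrap common queries, which makes them more intuitive to use and less error-prone.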
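As the table grows, filters that compare whole column values, such as the level and timestamp conditions, can benefit from indexes. The sketch below adds two with CREATE INDEX IF NOT EXISTS; the index names are assumptions chosen for this example, and whether the extra write cost on every insert is worth it depends on how often the logs are queried. Patterns of the form LIKE '%text%' start with a wildcard, so an ordinary index does not help them.

```mql5
//+------------------------------------------------------------------+
//| Script sketch: optional indexes for the level and date filters   |
//+------------------------------------------------------------------+
void OnStart()
  {
   int db = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READWRITE);
   if(db == INVALID_HANDLE)
     {
      Print("Unable to open database, error ", GetLastError());
      return;
     }

   //--- Index names are illustrative; IF NOT EXISTS makes the script re-runnable
   bool ok = DatabaseExecute(db, "CREATE INDEX IF NOT EXISTS idx_logs_timestamp ON logs(timestamp);");
   ok = ok && DatabaseExecute(db, "CREATE INDEX IF NOT EXISTS idx_logs_level ON logs(level);");

   if(ok)
      Print("Indexes are in place");
   else
      Print("Index creation failed, error ", GetLastError());

   DatabaseClose(db);
  }
```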

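With the handler implemented, we need to test whether it works. Let us look at the results and make sure logs are stored and retrieved as expected.

Viewing the Results

After implementing the handler, the next step is to verify that it behaves as expected: test log insertion, confirm that the records are stored correctly in the database, and check that queries are fast and accurate.

For the tests I reuse the same LogifyTest.mq5 file and only add a few log messages at the start. The EA also runs a simple strategy: if there is no open position it opens one, with take profit and stop loss levels so that the position is eventually closed.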

    //+------------------------------------------------------------------+
    //| Import CLogify                                                   |
    //+------------------------------------------------------------------+
    #include <Logify/Logify.mqh>
    #include <Trade/Trade.mqh>
    CLogify logify;
    CTrade trade;
    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Configs
       MqlLogifyHandleDatabaseConfig m_config;
       m_config.directory = "db";
       m_config.base_filename = "logs";
       m_config.messages_per_flush = 5;

       //--- Handler Database
       CLogifyHandlerDatabase *handler_database = new CLogifyHandlerDatabase();
       handler_database.SetConfig(m_config);
       handler_database.SetLevel(LOG_LEVEL_DEBUG);
       handler_database.SetFormatter(new CLogifyFormatter("hh:mm:ss","{date_time} [{levelname}] {msg}"));

       //--- Add handler in base class
       logify.AddHandler(handler_database);

       //--- Using logs
       logify.Info("Expert starting successfully", "Boot", "",__FILE__,__FUNCTION__,__LINE__);

       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+
    //| Expert deinitialization function                                 |
    //+------------------------------------------------------------------+
    void OnDeinit(const int reason)
      {
       //---
      }
    //+------------------------------------------------------------------+
    //| Expert tick function                                             |
    //+------------------------------------------------------------------+
    void OnTick()
      {
       //--- No positions
       if(PositionsTotal() == 0)
         {
          double price_entry = SymbolInfoDouble(_Symbol,SYMBOL_ASK);
          double volume = 1;
          if(trade.Buy(volume,_Symbol,price_entry,price_entry - 100 * _Point, price_entry + 100 * _Point,"Buy at market"))
            {
             logify.Debug("Transaction data | Price: "+DoubleToString(price_entry,_Digits)+" | Symbol: "+_Symbol+" | Volume: "+DoubleToString(volume,2), "CTrade", "",__FILE__,__FUNCTION__,__LINE__);
             logify.Info("Purchase order sent successfully", "CTrade", "",__FILE__,__FUNCTION__,__LINE__);
            }
          else
            {
             logify.Debug("Error code: "+IntegerToString(trade.ResultRetcode())+" | Description: "+trade.ResultRetcodeDescription(), "CTrade", "",__FILE__,__FUNCTION__,__LINE__);
             logify.Error("Failed to send purchase order", "CTrade", "",__FILE__,__FUNCTION__,__LINE__);
            }
         }
      }
    //+------------------------------------------------------------------+

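A one-day run in the Strategy Tester on EURUSD was enough to generate 909 log records. As configured, they were saved to a .sqlite file. To access the logs, go to the terminal folder or press Ctrl/Cmd + Shift + D to open the file navigator, then find the file at MQL5/Files/db/logs.sqlite. The file can be opened directly in MetaEditor as before: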
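Besides browsing the table in MetaEditor, a quick summary can be produced with a short script. The sketch below counts the stored records per log level with a GROUP BY query. It assumes the file is reachable as db\\logs.sqlite under MQL5\Files, as configured in the test EA; if the logs were written elsewhere, the path needs to be adjusted.

```mql5
//+------------------------------------------------------------------+
//| Script sketch: number of stored log records per level            |
//+------------------------------------------------------------------+
void OnStart()
  {
   int db = DatabaseOpen("db\\logs.sqlite", DATABASE_OPEN_READONLY);
   if(db == INVALID_HANDLE)
     {
      Print("Unable to open database, error ", GetLastError());
      return;
     }

   //--- Column 0: level name, column 1: number of records with that level
   int request = DatabasePrepare(db, "SELECT levelname, COUNT(*) FROM logs GROUP BY levelname ORDER BY COUNT(*) DESC;");
   if(request == INVALID_HANDLE)
     {
      Print("Unable to prepare query, error ", GetLastError());
      DatabaseClose(db);
      return;
     }

   string levelname = "";
   long   total     = 0;
   while(DatabaseRead(request))
     {
      if(DatabaseColumnText(request, 0, levelname) && DatabaseColumnLong(request, 1, total))
         Print(levelname, ": ", total);
     }

   DatabaseFinalize(request);
   DatabaseClose(db);
  }
```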

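With this, our logging library takes another important step. Logs are now stored in a database and can be retrieved quickly, which improves both scalability and organization.

Conclusion

In this article we covered the whole process of integrating a database into the logging library, from basic concepts to the implementation of a dedicated handler. We first looked at the advantages of a database as log storage: compared with plain text files, it offers better scalability and structure and handles large volumes of log data more efficiently. We then focused on databases in the MQL5 environment, examined their technical limitations and the ways around them, and confirmed that the integration is feasible on this platform.

Finally, we verified the implementation in practice: logs are stored correctly and can be retrieved quickly and efficiently. We also discussed ways to view the logs, either through direct database queries or with dedicated log monitoring tools. This verification step is essential to confirm that the solution works in real scenarios.

With this, the development of our logging library enters a new stage. The database brings clearer data organization, easier access and more room to grow. The architecture improves the handling of large data volumes and simplifies the analysis and monitoring of system logs.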

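This article was translated from English by MetaQuotes Ltd.

Original article: https://www.mql5.com/en/articles/17709

Warning: All rights to these materials are reserved by MetaQuotes Ltd. Copying or reprinting of these materials in whole or in part is prohibited.

This article was written by a user of the site and reflects their personal views. MetaQuotes Ltd is not responsible for the accuracy of the information presented, nor for any consequences resulting from the use of the solutions, strategies or recommendations described.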
