数据科学和机器学习(第 19 部分):利用 AdaBoost 为您的 AI 模型增压

什么是 Adaboost?

Adaboost 是 Adaptive Boosting 的缩写,是一种融合机器学习模型,它尝试基于弱分类器构建强分类器。它是如何工作的?

- 该算法为实例分配权重时,基于它们的分类对错。

- 它采用加权总和把弱学习器结合在一起。

- 最终的强学习器是弱学习器的线性组合,其权重是在训练过程中判定的。

为什么在使用 Adaboost 时所有人都应小心?

Adaboost 提供多项优势,包括:

- 提高准确性 — 通过组合若干个弱模型,来推进模型的整体预测准确性。针对所有模型做出的预测求均值,以便进行回归,或为分类给它们投票,从而提升最终模型的准确性。

- 过拟合的稳健性 — 通过为误分类的输入分配权重,降低过度拟合的风险。

- 更好地处理不平衡数据 — 通过更多地关注误分类的数据点,处理不平衡的数据。

- 更好的可解释性 — 通过将模型决策过程分解为多个过程,提升模型的可解释性。

什么是决策树桩?

决策树桩是一个简单的机器学习模型,在 Adaboost 等融合方法中用作弱学习器。本质上它是一个机器学习模型,经过简化后,可以基于单个特征和阈值制定决策。将决策树桩当作弱学习器的目标,是捕获数据中有助于改进整体融合模型的基本形态。

以下是决策树桩分类器背后的理论简要解释,以决策树分类器为例:

1. 结构:

- 决策树桩基于单个特征和阈值做出二元决策。

- 它将数据分为两组:特征值低于阈值的组,以及高于阈值的组。

- 在这个函数库中,它通常部署在大多数分类器的构造函数之中:

CDecisionTreeClassifier(uint min_samples_split=2, uint max_depth=2, mode mode_=MODE_GINI); ~CDecisionTreeClassifier(void);

2. 训练:

- 在训练期间,决策树桩识别可令某个准则最小化的特征和阈值,往往是加权的误分类误差。

- 针对每个可能的分叉,计算误分类误差,并选择误差最小的分叉。

- fit 函数是完成所有训练的场所:

void fit(matrix &x, vector &y);

3. 预测:

- 对于预测,数据点分类,是基于其特征值是高于、或低于所选阈值。

double predict(vector &x); vector predict(matrix &x);

融合方法如 AdaBoost 中常用的弱学习器包括:

1.决策树桩/决策树:

- 如上所述,由于决策树桩、或浅层决策树的简单性,故常常用到。

2. 线性模型:

- 线性模型如逻辑回归、或线性 SVM,都可当作弱学习器。

3. 多项式模型:

- 高阶多项式模型可以捕获更复杂的关系,而低阶多项式可以充当弱学习器。

4. 神经网络(浅层):

- 有时会使用具有少量层和神经元的浅层神经网络。

5. 高斯模型:

- 高斯模型(例如高斯朴素贝叶斯)可当作弱学习器。

弱学习器的选择取决于数据的具体特征和手头的问题。在实践中,决策树桩因其在提升算法方面的简单性和效率而广为流行。AdaBoost 的融合方式通过组合这些弱学习器的预测来提高性能。

由于决策树桩是一个模型,因此我们需要为 Adaboost 类构造函数提供我们的弱模型参数。

class AdaBoost { protected: vector m_alphas; vector classes_in_data; uint m_min_split, m_max_depth; CDecisionTreeClassifier *weak_learners[]; //store weak_learner pointers for memory allocation tracking CDecisionTreeClassifier *weak_learner; uint m_estimators; public: AdaBoost(uint min_split, uint max_depth, uint n_estimators=50); ~AdaBoost(void); void fit(matrix &x, vector &y); int predict(vector &x); vector predict(matrix &x); };

该示例使用决策树,但可以部署任何分类机器学习模型。

构建 AdaBoost 类:

该项 number of estimators(估计器数量)是指为了创建最终强力学习器,在融合学习算法中结合的弱学习器(基本模型、或决策树桩)的数量。在 AdaBoost 或梯度提升等算法的上下文中,该参数通常表示为 n_estimators。

AdaBoost::AdaBoost(uint min_split, uint max_depth, uint n_estimators=50) :m_estimators(n_estimators), m_min_split(min_split), m_max_depth(max_depth) { ArrayResize(weak_learners, n_estimators); //Resizing the array to retain the number of base weak_learners }

从类的受保护部分,您已经看到了向量 m_alphas,同时还在本文中多次听到术语权重,以下是说明:

Alpha 值:

alpha 值表示分配给融合中每个弱学习器的贡献度或权重,这些数值是基于每个弱学习器在训练期间的表现计算得出的。

较高的 alpha 值分配给那些在减少训练误方面表现良好的弱学习者。

计算 alpha 的公式常常如下给出:

![]()

其中:

![]() 是第 t 个弱学习器的权重误差。

是第 t 个弱学习器的权重误差。

实现:

double alpha = 0.5 * log((1-error) / (error + 1e-10));

权重:

权重向量表示每次迭代时每个训练实例的重要性。

在训练期间,误分类实例的权重会增加,以便在下一次迭代中专注于难以分类的样本。

更新实例权重的公式常常如下给出:

![]()

![]() 是在 i 次迭代时的实例权重

是在 i 次迭代时的实例权重

![]() 是分配给第 t 个弱学习器的权重。

是分配给第 t 个弱学习器的权重。

![]() 是真实的实例标签

是真实的实例标签

![]() 是实例上第 t 个弱学习器的预测

是实例上第 t 个弱学习器的预测

![]() 是一个常规化因子,以确保权重之和为 1。

是一个常规化因子,以确保权重之和为 1。

实现:

for (ulong j=0; j<m; j++) misclassified[j] = (preds[j] != y[j]); error = (misclassified * weights).Sum() / (double)weights.Sum(); //--- Calculate the weight of a weak learner in the final model double alpha = 0.5 * log((1-error) / (error + 1e-10)); //--- Update instance weights weights *= exp(-alpha * y * preds); weights /= weights.Sum();

训练 Adaboost 模型:

就像其它融合技术一样,n 个模型(在下面函数中指的是 m_estimators)用来依据相同的数据进行预测,可以部署多数投票或其它技术来判定最佳的可能结局。

void AdaBoost::fit(matrix &x,vector &y) { m_alphas.Resize(m_estimators); classes_in_data = MatrixExtend::Unique(y); //Find the target variables in the class ulong m = x.Rows(), n = x.Cols(); vector weights(m); weights = weights.Fill(1.0) / m; //Initialize instance weights vector preds(m); vector misclassified(m); double error = 0; for (uint i=0; i<m_estimators; i++) { //--- weak_learner = new CDecisionTreeClassifier(this.m_min_split, m_max_depth); weak_learner.fit(x, y); //fitting the randomized data to the i-th weak_learner preds = weak_learner.predict(x); //making predictions for the i-th weak_learner for (ulong j=0; j<m; j++) misclassified[j] = (preds[j] != y[j]); error = (misclassified * weights).Sum() / (double)weights.Sum(); //--- Calculate the weight of a weak learner in the final weak_learner double alpha = 0.5 * log((1-error) / (error + 1e-10)); //--- Update instance weights weights *= exp(-alpha * y* preds); weights /= weights.Sum(); //--- save a weak learner and its weight this.m_alphas[i] = alpha; this.weak_learners[i] = weak_learner; } }

就像任何其它融合技术一样,bootstrapping 也是一件至关重要的事情,没有它,所有模型和数据都是简同的,这可能会导致所有模型的性能变得彼此无法区分,故需要部署 Bootstrapping:

void AdaBoost::fit(matrix &x,vector &y) { m_alphas.Resize(m_estimators); classes_in_data = MatrixExtend::Unique(y); //Find the target variables in the class ulong m = x.Rows(), n = x.Cols(); vector weights(m); weights = weights.Fill(1.0) / m; //Initialize instance weights vector preds(m); vector misclassified(m); //--- matrix data = MatrixExtend::concatenate(x, y); matrix temp_data; matrix x_subset; vector y_subset; double error = 0; for (uint i=0; i<m_estimators; i++) { temp_data = data; MatrixExtend::Randomize(temp_data, this.m_random_state, this.m_boostrapping); if (!MatrixExtend::XandYSplitMatrices(temp_data, x_subset, y_subset)) //Get randomized subsets { ArrayRemove(weak_learners,i,1); //Delete the invalid weak_learner printf("%s %d Failed to split data",__FUNCTION__,__LINE__); continue; } //--- weak_learner = new CDecisionTreeClassifier(this.m_min_split, m_max_depth); weak_learner.fit(x_subset, y_subset); //fitting the randomized data to the i-th weak_learner preds = weak_learner.predict(x_subset); //making predictions for the i-th weak_learner //printf("[%d] Accuracy %.3f ",i,Metrics::accuracy_score(y_subset, preds)); for (ulong j=0; j<m; j++) misclassified[j] = (preds[j] != y_subset[j]); error = (misclassified * weights).Sum() / (double)weights.Sum(); //--- Calculate the weight of a weak learner in the final weak_learner double alpha = 0.5 * log((1-error) / (error + 1e-10)); //--- Update instance weights weights *= exp(-alpha * y_subset * preds); weights /= weights.Sum(); //--- save a weak learner and its weight this.m_alphas[i] = alpha; this.weak_learners[i] = weak_learner; } }类构造函数也必须修改:

class AdaBoost { protected: vector m_alphas; vector classes_in_data; int m_random_state; bool m_boostrapping; uint m_min_split, m_max_depth; CDecisionTreeClassifier *weak_learners[]; //store weak_learner pointers for memory allocation tracking CDecisionTreeClassifier *weak_learner; uint m_estimators; public: AdaBoost(uint min_split, uint max_depth, uint n_estimators=50, int random_state=42, bool bootstrapping=true); ~AdaBoost(void); void fit(matrix &x, vector &y); int predict(vector &x); vector predict(matrix &x); }; //+------------------------------------------------------------------+ //| | //+------------------------------------------------------------------+ AdaBoost::AdaBoost(uint min_split, uint max_depth, uint n_estimators=50, int random_state=42, bool bootstrapping=true) :m_estimators(n_estimators), m_random_state(random_state), m_boostrapping(bootstrapping), m_min_split(min_split), m_max_depth(max_depth) { ArrayResize(weak_learners, n_estimators); //Resizing the array to retain the number of base weak_learners }

获取多数预测

使用经过训练的权重,Adaboost 分类器判定具有最多投票的类,这意味着它比其余类更有可能出现。

int AdaBoost::predict(vector &x) { // Combine weak learners using weighted sum vector weak_preds(m_estimators), final_preds(m_estimators); for (uint i=0; i<this.m_estimators; i++) weak_preds[i] = this.weak_learners[i].predict(x); return (int)weak_preds[(this.m_alphas*weak_preds).ArgMax()]; //Majority decision class }

现在我们看看如何在智能系统中运用该模型:

#include <MALE5\Ensemble\AdaBoost.mqh> DecisionTree::AdaBoost *ada_boost_tree; LogisticRegression::AdaBoost *ada_boost_logit; //+------------------------------------------------------------------+ //| Expert initialization function | //+------------------------------------------------------------------+ int OnInit() { //--- string headers; matrix data = MatrixExtend::ReadCsv("iris.csv",headers); matrix x; vector y; MatrixExtend::XandYSplitMatrices(data,x,y); ada_boost_tree = new DecisionTree::AdaBoost(2,1,10,42); ada_boost_tree.fit(x,y); vector predictions = ada_boost_tree.predict(x); printf("Adaboost acc = %.3f",Metrics::accuracy_score(y, predictions)); //--- return(INIT_SUCCEEDED); }

使用 iris.csv 数据集仅出于构建模型和调试目的。其结局为:

2024.01.17 17:52:27.914 AdaBoost Test (EURUSD,H1) Adaboost acc = 0.960

到目前为止,我们的模型看似表现良好,准确率值约为 90%,在我将 random_state 设置为 -1 之后,其将导致每次运行 EA 时都用 GetTickCount 当作随机种子,如此我就可以在更随机的环境中评估模型。

QK 0 17:52:27.914 AdaBoost Test (EURUSD,H1) Adaboost acc = 0.960 LL 0 17:52:35.436 AdaBoost Test (EURUSD,H1) Adaboost acc = 0.947 JD 0 17:52:42.806 AdaBoost Test (EURUSD,H1) Adaboost acc = 0.960 IL 0 17:52:50.071 AdaBoost Test (EURUSD,H1) Adaboost acc = 0.933 MD 0 17:52:57.822 AdaBoost Test (EURUSD,H1) Adaboost acc = 0.967

在我们的函数库中,对于存在的其它弱学习器,可以遵循相同的编码范式和结构,请参阅何时将逻辑回归用作弱学习器:

整个类的唯一区别是在 fit 函数下发现的,为弱学习器部署的模型类型是逻辑回归:

void AdaBoost::fit(matrix &x,vector &y) { m_alphas.Resize(m_estimators); classes_in_data = MatrixExtend::Unique(y); //Find the target variables in the class ulong m = x.Rows(), n = x.Cols(); vector weights(m); weights = weights.Fill(1.0) / m; //Initialize instance weights vector preds(m); vector misclassified(m); //--- matrix data = MatrixExtend::concatenate(x, y); matrix temp_data; matrix x_subset; vector y_subset; double error = 0; for (uint i=0; i<m_estimators; i++) { temp_data = data; MatrixExtend::Randomize(temp_data, this.m_random_state, this.m_boostrapping); if (!MatrixExtend::XandYSplitMatrices(temp_data, x_subset, y_subset)) //Get randomized subsets { ArrayRemove(weak_learners,i,1); //Delete the invalid weak_learner printf("%s %d Failed to split data",__FUNCTION__,__LINE__); continue; } //--- weak_learner = new CLogisticRegression(); weak_learner.fit(x_subset, y_subset); //fitting the randomized data to the i-th weak_learner preds = weak_learner.predict(x_subset); //making predictions for the i-th weak_learner for (ulong j=0; j<m; j++) misclassified[j] = (preds[j] != y_subset[j]); error = (misclassified * weights).Sum() / (double)weights.Sum(); //--- Calculate the weight of a weak learner in the final weak_learner double alpha = 0.5 * log((1-error) / (error + 1e-10)); //--- Update instance weights weights *= exp(-alpha * y_subset * preds); weights /= weights.Sum(); //--- save a weak learner and its weight this.m_alphas[i] = alpha; this.weak_learners[i] = weak_learner; } }

在策略测试器中的 Adaboosted AI-模型。

由应用于开盘价的布林带指标,我们尝试训练我们的模型来学习预测下一根收盘蜡烛,无论是看涨、看跌、亦或两者都不是(持平)。

我们构建一个智能系统,在交易环境中测试我们的模型,从收集训练数据的代码开始:

收集训练数据:

//--- Data Collection for training the model //--- x variables Bollinger band only matrix dataset; indicator_v.CopyIndicatorBuffer(bb_handle,0,0,train_bars); //Main LINE dataset = MatrixExtend::concatenate(dataset, indicator_v); indicator_v.CopyIndicatorBuffer(bb_handle,1,0,train_bars); //UPPER BB dataset = MatrixExtend::concatenate(dataset, indicator_v); indicator_v.CopyIndicatorBuffer(bb_handle,2,0,train_bars); //LOWER BB dataset = MatrixExtend::concatenate(dataset, indicator_v); //--- Target Variable int size = CopyRates(Symbol(),PERIOD_CURRENT,0,train_bars,rates); vector y(size); switch(model) { case DECISION_TREE: { for (ulong i=0; i<y.Size(); i++) { if (rates[i].close > rates[i].open) y[i] = 1; //buy signal else if (rates[i].close < rates[i].open) y[i] = 2; //sell signal else y[i] = 0; //Hold signal } } break; case LOGISTIC_REGRESSION: for (ulong i=0; i<indicator_v.Size(); i++) { y[i] = (rates[i].close > rates[i].open); //if close > open buy else sell } break; } dataset = MatrixExtend::concatenate(dataset, y); //Add the target variable to the dataset if (MQLInfoInteger(MQL_DEBUG)) { Print("Data Head"); MatrixExtend::PrintShort(dataset); }

注意到目标变量的生成发生变化了吗?由于决策树用途广泛,且能很好地处理目标变量中的多个类,故它有能力针对市场中的三种形态进行分类,即 多头、空头、和持平。不像逻辑回归模型,其 sigmoid 函数为核心,能很好地把 0 和 1 分为两类,最好的事情就是准备目标变量条件,就可是多头、亦可是空头。

训练和测试已训练模型:

房间里的一头大象:

MatrixExtend::TrainTestSplitMatrices(dataset,train_x,train_y,test_x,test_y,0.7,_random_state); 该函数将数据拆分为训练样本和测试样本,按给定随机状态洗牌,70% 的数据当作测试样本,其余 30% 用于测试。

但在我们使用新收集的数据之前,对于模型性能,常规化仍然至关重要。

//--- Training and testing the trained model matrix train_x, test_x; vector train_y, test_y; MatrixExtend::TrainTestSplitMatrices(dataset,train_x,train_y,test_x,test_y,0.7,_random_state); //Train test split data | This function splits the data into training and testing sample given a random state and 70% of data to test while the rest 30% for testing train_x = scaler.fit_transform(train_x); //Standardize the training data test_x = scaler.transform(test_x); //Do the same for the test data Print("-----> ",EnumToString(model)); vector preds; switch(model) { case DECISION_TREE: ada_boost_tree = new DecisionTree::AdaBoost(tree_min_split, tree_max_depth, _n_estimators, -1, _bootstrapping); //Building the //--- Training ada_boost_tree.fit(train_x, train_y); preds = ada_boost_tree.predict(train_x); printf("Train Accuracy %.3f",Metrics::accuracy_score(train_y, preds)); //--- Testing preds = ada_boost_tree.predict(test_x); printf("Test Accuracy %.3f",Metrics::accuracy_score(test_y, preds)); break; case LOGISTIC_REGRESSION: ada_boost_logit = new LogisticRegression::AdaBoost(_n_estimators,-1, _bootstrapping); //--- Training ada_boost_logit.fit(train_x, train_y); preds = ada_boost_logit.predict(train_x); printf("Train Accuracy %.3f",Metrics::accuracy_score(train_y, preds)); //--- Testing preds = ada_boost_logit.predict(test_x); printf("Test Accuracy %.3f",Metrics::accuracy_score(test_y, preds)); break; }

输出:

PO 0 22:59:11.807 AdaBoost Test (EURUSD,H1) -----> DECISION_TREE CI 0 22:59:20.204 AdaBoost Test (EURUSD,H1) Building Estimator [1/10] Accuracy Score 0.561 OD 0 22:59:27.883 AdaBoost Test (EURUSD,H1) Building Estimator [2/10] Accuracy Score 0.601 NP 0 22:59:38.316 AdaBoost Test (EURUSD,H1) Building Estimator [3/10] Accuracy Score 0.541 LO 0 22:59:48.327 AdaBoost Test (EURUSD,H1) Building Estimator [4/10] Accuracy Score 0.549 LK 0 22:59:56.813 AdaBoost Test (EURUSD,H1) Building Estimator [5/10] Accuracy Score 0.570 OF 0 23:00:09.552 AdaBoost Test (EURUSD,H1) Building Estimator [6/10] Accuracy Score 0.517 GR 0 23:00:18.322 AdaBoost Test (EURUSD,H1) Building Estimator [7/10] Accuracy Score 0.571 GI 0 23:00:29.254 AdaBoost Test (EURUSD,H1) Building Estimator [8/10] Accuracy Score 0.556 HE 0 23:00:37.632 AdaBoost Test (EURUSD,H1) Building Estimator [9/10] Accuracy Score 0.599 DS 0 23:00:47.522 AdaBoost Test (EURUSD,H1) Building Estimator [10/10] Accuracy Score 0.567 OP 0 23:00:47.524 AdaBoost Test (EURUSD,H1) Train Accuracy 0.590 OG 0 23:00:47.525 AdaBoost Test (EURUSD,H1) Test Accuracy 0.513 MK 0 23:24:06.573 AdaBoost Test (EURUSD,H1) Building Estimator [1/10] Accuracy Score 0.491 HK 0 23:24:06.575 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.43700 QO 0 23:24:06.575 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.43432 KP 0 23:24:06.576 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.43168 MD 0 23:24:06.577 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.42909 FI 0 23:24:06.578 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.42652 QJ 0 23:24:06.579 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.42400 IN 0 23:24:06.580 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.42151 NS 0 23:24:06.581 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.41906 GD 0 23:24:06.582 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.41664 DK 0 23:24:06.582 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.41425 IQ 0 23:24:06.585 AdaBoost Test (EURUSD,H1) Building Estimator [2/10] Accuracy Score 0.477 JP 0 23:24:06.586 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.43700 DE 0 23:24:06.587 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.43432 RF 0 23:24:06.588 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.43168 KJ 0 23:24:06.588 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.42909 FO 0 23:24:06.589 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.42652 NP 0 23:24:06.590 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.42400 CD 0 23:24:06.591 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.42151 KI 0 23:24:06.591 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.41906 NM 0 23:24:06.592 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.41664 EM 0 23:24:06.592 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.41425 EO 0 23:24:06.593 AdaBoost Test (EURUSD,H1) Building Estimator [3/10] Accuracy Score 0.477 KF 0 23:24:06.594 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.41931 HK 0 23:24:06.594 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.41690 RL 0 23:24:06.595 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.41452 IP 0 23:24:06.596 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.41217 KE 0 23:24:06.596 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.40985 DI 0 23:24:06.597 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.40757 IJ 0 23:24:06.597 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.40533 MO 0 23:24:06.598 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.40311 PS 0 23:24:06.599 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.40093 CG 0 23:24:06.600 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.39877 NE 0 23:24:06.601 AdaBoost Test (EURUSD,H1) Building Estimator [4/10] Accuracy Score 0.499 EL 0 23:24:06.602 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.41931 MQ 0 23:24:06.603 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.41690 PE 0 23:24:06.603 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.41452 OF 0 23:24:06.604 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.41217 JK 0 23:24:06.605 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.40985 KO 0 23:24:06.606 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.40757 FP 0 23:24:06.606 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.40533 PE 0 23:24:06.607 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.40311 CI 0 23:24:06.608 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.40093 NI 0 23:24:06.609 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.39877 KS 0 23:24:06.610 AdaBoost Test (EURUSD,H1) Building Estimator [5/10] Accuracy Score 0.499 QR 0 23:24:06.611 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.42037 MG 0 23:24:06.611 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.41794 LK 0 23:24:06.612 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.41555 ML 0 23:24:06.613 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.41318 PQ 0 23:24:06.614 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.41085 FE 0 23:24:06.614 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.40856 FF 0 23:24:06.615 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.40630 FJ 0 23:24:06.616 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.40407 KO 0 23:24:06.617 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.40187 NS 0 23:24:06.618 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.39970 EH 0 23:24:06.619 AdaBoost Test (EURUSD,H1) Building Estimator [6/10] Accuracy Score 0.497 FH 0 23:24:06.620 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.41565 LM 0 23:24:06.621 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.41329 IQ 0 23:24:06.622 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.41096 KR 0 23:24:06.622 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.40867 LF 0 23:24:06.623 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.40640 NK 0 23:24:06.624 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.40417 OL 0 23:24:06.625 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.40197 RP 0 23:24:06.627 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.39980 OE 0 23:24:06.628 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.39767 EE 0 23:24:06.628 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.39556 QF 0 23:24:06.629 AdaBoost Test (EURUSD,H1) Building Estimator [7/10] Accuracy Score 0.503 CN 0 23:24:06.630 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.41565 IR 0 23:24:06.631 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.41329 HG 0 23:24:06.632 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.41096 RH 0 23:24:06.632 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.40867 ML 0 23:24:06.633 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.40640 FQ 0 23:24:06.633 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.40417 QR 0 23:24:06.634 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.40197 NF 0 23:24:06.634 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.39980 EK 0 23:24:06.635 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.39767 CL 0 23:24:06.635 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.39556 LL 0 23:24:06.636 AdaBoost Test (EURUSD,H1) Building Estimator [8/10] Accuracy Score 0.503 HD 0 23:24:06.637 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.44403 IH 0 23:24:06.638 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.44125 CM 0 23:24:06.638 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.43851 DN 0 23:24:06.639 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.43580 DR 0 23:24:06.639 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.43314 CG 0 23:24:06.640 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.43051 EK 0 23:24:06.640 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.42792 JL 0 23:24:06.641 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.42537 EQ 0 23:24:06.641 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.42285 OF 0 23:24:06.642 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.42037 GJ 0 23:24:06.642 AdaBoost Test (EURUSD,H1) Building Estimator [9/10] Accuracy Score 0.469 GJ 0 23:24:06.643 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [1/10] mse 0.44403 ON 0 23:24:06.643 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [2/10] mse 0.44125 LS 0 23:24:06.644 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [3/10] mse 0.43851 HG 0 23:24:06.644 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [4/10] mse 0.43580 KH 0 23:24:06.645 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [5/10] mse 0.43314 FM 0 23:24:06.645 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [6/10] mse 0.43051 IQ 0 23:24:06.646 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [7/10] mse 0.42792 QR 0 23:24:06.646 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [8/10] mse 0.42537 IG 0 23:24:06.647 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [9/10] mse 0.42285 RH 0 23:24:06.647 AdaBoost Test (EURUSD,H1) ---> Logistic regression build epoch [10/10] mse 0.42037 KS 0 23:24:06.648 AdaBoost Test (EURUSD,H1) Building Estimator [10/10] Accuracy Score 0.469 NP 0 23:24:06.652 AdaBoost Test (EURUSD,H1) Train Accuracy 0.491 GG 0 23:24:06.654 AdaBoost Test (EURUSD,H1) Test Accuracy 0.447

目前为止,一切良好!我们用能够执行交易的代码行来完成我们的智能系统:

获取交易信号:

void OnTick() { //--- x variables Bollinger band only | The current buffer only this time indicator_v.CopyIndicatorBuffer(bb_handle,0,0,1); //Main LINE x_inputs[0] = indicator_v[0]; indicator_v.CopyIndicatorBuffer(bb_handle,1,0,1); //UPPER BB x_inputs[1] = indicator_v[0]; indicator_v.CopyIndicatorBuffer(bb_handle,2,0,1); //LOWER BB x_inputs[2] = indicator_v[0]; //--- int signal = INT_MAX; switch(model) { case DECISION_TREE: x_inputs = scaler.transform(x_inputs); //New inputs data must be normalized the same way signal = ada_boost_tree.predict(x_inputs); break; case LOGISTIC_REGRESSION: x_inputs = scaler.transform(x_inputs); //New inputs data must be normalized the same way signal = ada_boost_logit.predict(x_inputs); break; } }

记住,1 代表对应决策树的多头信号,0 代表对应逻辑回归的多头信号;2 代表对应决策树的空头信号;1 代表对应逻辑回归的空头信号。我们不关心 0 信号,它代表对应决策树的持平。 我们来统一这些信号,分别标识 0 和 1 对应多头和空头信号。

case DECISION_TREE: x_inputs = scaler.transform(x_inputs); //New inputs data must be normalized the same way signal = ada_boost_tree.predict(x_inputs); if (signal == 1) //buy signal for decision tree signal = 0; else if (signal == 2) signal = 1; else signal = INT_MAX; //UNKNOWN NUMBER FOR HOLD break;

据我们的模型制定的简单策略:

SymbolInfoTick(Symbol(), ticks); if (isnewBar(PERIOD_CURRENT)) { if (signal == 0) //buy signal { if (!PosExists(MAGIC_NUMBER, POSITION_TYPE_BUY)) m_trade.Buy(lot_size, Symbol(), ticks.ask, ticks.ask-stop_loss*Point(), ticks.ask+take_profit*Point()); } if (signal == 1) { if (!PosExists(MAGIC_NUMBER, POSITION_TYPE_SELL)) m_trade.Sell(lot_size, Symbol(), ticks.bid, ticks.bid+stop_loss*Point(), ticks.bid-take_profit*Point()); } }

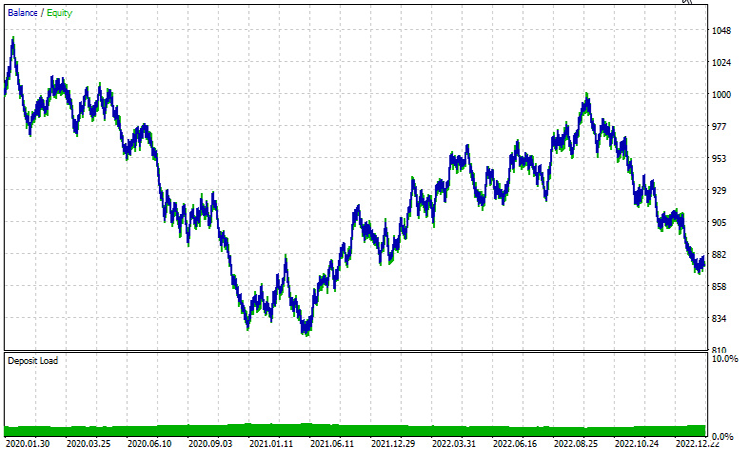

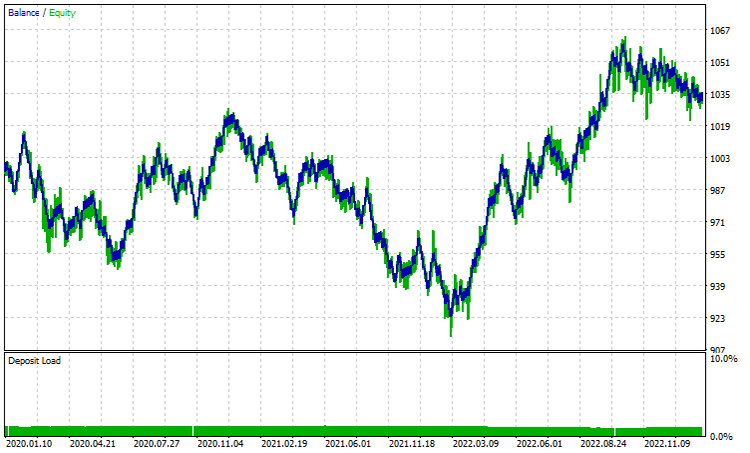

策略测试器中的结果,从 2020 年 1 月到 2023 年 2 月,时间帧 H1 小时:

决策树 Adaboost:

逻辑回归 Adaboost:

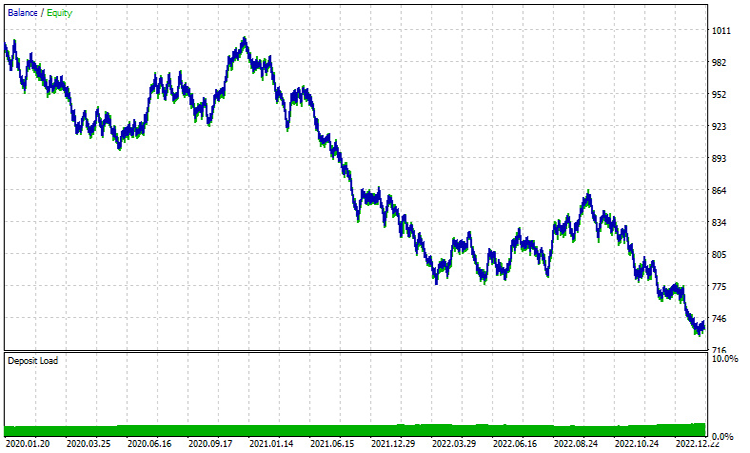

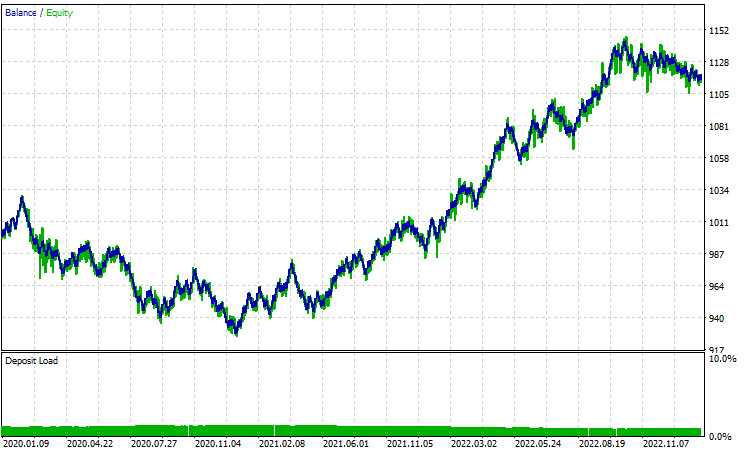

两者在H1 时间帧的表现都与优秀相去甚远。其主要原因在于我们的策略是基于当前柱线,凭我的经验,这样类型的策略最适合较高的时间帧,因为单根柱线代表重大变动,不像 H1 时间帧柱线,一天内出现 24 根,我们来尝试 H12 时间帧。

除了 train_bars 从 1000 减少到 100 之外,所有参数都保持不变,因为更高的时间帧无法请求太多过去的价格历史柱线。

决策树 Adaboost:

逻辑回归 Adaboost:

结论:

AdaBoost 是一种强力的算法,能够显著提高本系列文章中讨论过的 AI 模型的性能。虽然它确实会带来计算成本,但如果审慎地实现,这项投入被证明是值得的。AdaBoost 已发现了跨不同部门和行业的应用,包括金融、娱乐、教育、以及更多。

在我们圆满结束探索时,必须承认该算法的多功能性,及其利用弱学习器的集体力量来解决复杂分类挑战的能力。以下提供的常见问题(FAQ)旨在提供明示和见解,解决您在探索 AdaBoost 期间可能出现的常见问题。

有关 Adaboost 的常见问题(FAQ):

问题:AdaBoost 的工作原理是什么?

答:AdaBoost 的工作原理是基于数据集上迭代训练弱学习器(通常是决策树桩等简单模型),在每次迭代时调整误分类实例的权重。最终模型是弱学习器的权重和,权重越高,给出的准确性越好。

问题:什么是 AdaBoost 中的弱学习器?

答:弱学习器是性能略好于随机机会的简单模型。决策树桩(浅层决策树)在 AdaBoost 中通常当作弱学习器,但其它算法也可以用于此目的。

问题:实例权重在 AdaBoost 中的作用是什么?

答:AdaBoost 中的实例权重控制学习过程中每个训练实例的重要性。最初,设置所有权重等同,并在每次迭代时进行调整,以便更多地关注误分类实例,从而提高模型的普适能力。

问题:AdaBoost 如何处理弱学习器所犯的错误?

答:AdaBoost 为误分类实例分配较高的权重,迫使后续的弱学习器更加专注于纠正这些错误。最终模型为误差率较低的弱学习器提供更多权重。

问题:AdaBoost 对噪声和异常值敏感吗?

答:AdaBoost 可能对噪声和异常值很敏感,因为它会尝试纠正误分类。异常值可能会获得较高的权重,从而影响最终模型。也许应用健壮的弱学习器、或数据预处理技术来减轻这种敏感性。

问题:AdaBoost 是否存在过度拟合问题?

答:如果弱学习器太复杂、或数据集包含噪声,则 AdaBoost 可能容易出现过度拟合。使用更简单的弱学习器,并应用交叉验证等技术有助于防止过度拟合。

问题:AdaBoost 可以用于回归问题吗?

答:AdaBoost 主要用于分类任务,但可以通过修改算法来预测连续值,从而适配回归。针对回归问题,存在 AdaBoost.R2 等技术。

问题:AdaBoost 有替代品吗?

答:是的,还有其它融合学习方法,例如 随机森林、梯度增压 和 XGBoost。每种方法都有其优点和缺点,选择取决于数据的具体特征和手头的问题。

问题:AdaBoost 在哪些情况下特别有效?

答:AdaBoost 在处理各种弱学习器,以及需要组合多个分类器以便创建健壮模型的情况下非常有效。它常常用于人脸检测、文本分类、和其它现实世界应用。

若要随时了解该算法,以及许多其它算法的开发进度和漏洞修复,请访问 https://github.com/MegaJoctan/MALE5 所在的 GitHub 存储库。

出行平安。

附件:

| 文件 | 说明/用法 |

|---|---|

| Adaboost.mqh | 包含用于 Logistic 回归和决策树的 adaboost 命名空间类。 |

| Logistic Regression .mqh | 包含主要逻辑回归类 |

| MatrixExtend.mqh | 包含额外的矩阵操作函数 |

| metrics.mqh | 包含用于测量机器学习模型性能的代码的库 |

| preprocessing.mqh | 一个类,其中包含用于预处理数据以使其适合机器学习的函数 |

| tree.mqh | 决策树库可以在此文件中找到 |

| AdaBoost Test.mq5(EA) | 主要的测试 Expert Advisor,此处解释的所有代码,都在此文件中执行 |

本文由MetaQuotes Ltd译自英文

原文地址: https://www.mql5.com/en/articles/14034

注意: MetaQuotes Ltd.将保留所有关于这些材料的权利。全部或部分复制或者转载这些材料将被禁止。

本文由网站的一位用户撰写,反映了他们的个人观点。MetaQuotes Ltd 不对所提供信息的准确性负责,也不对因使用所述解决方案、策略或建议而产生的任何后果负责。

分歧问题:深入探讨人工智能的复杂性可解释性

分歧问题:深入探讨人工智能的复杂性可解释性

使用 LSTM 神经网络创建时间序列预测:规范化价格和令牌化时间

使用 LSTM 神经网络创建时间序列预测:规范化价格和令牌化时间