Developing a trading Expert Advisor from scratch (Part 14): Adding Volume At Price (II)

Today we will add some more resources to our EA. This interesting article can provide some new ideas and methods of presenting information. At the same time, it can assist in fixing minor flaws in your projects.

MQL5 Wizard techniques you should know (Part 02): Kohonen Maps

This series of articles proposes that the MQL5 Wizard should be a mainstay for traders. Why? Because the trader not only saves time by assembling new ideas with the MQL5 Wizard and greatly reduces mistakes from duplicate coding, but is also ultimately set up to channel their energy into the few critical areas of their trading philosophy.

Learn how to design a trading system by Williams PR

A new article in our series about learning how to design trading systems based on the most popular technical indicators, using MQL5 in MetaTrader 5. In this article, we will learn how to design a trading system based on the Williams' %R indicator.

Developing a trading Expert Advisor from scratch (Part 13): Time and Trade (II)

Today we will construct the second part of the Times & Trade system for market analysis. In the previous article, "Times & Trade (I)", we discussed an alternative chart organization system, which provides an indicator for interpreting the deals executed in the market as quickly as possible.

Developing a trading Expert Advisor from scratch (Part 12): Times and Trade (I)

Today we will create a Times & Trade indicator that allows fast interpretation for reading the order flow. This is the first part, in which we will build the system. In the next article, we will complete the system with the missing information. To implement this new functionality, we will need to add several new things to the code of our Expert Advisor.

Developing a trading Expert Advisor from scratch (Part 11): Cross order system

In this article, we will create a system of cross orders. There is one type of asset that makes life very difficult for traders: futures contracts. But why do they make life difficult?

Developing a trading Expert Advisor from scratch (Part 10): Accessing custom indicators

How can we access custom indicators directly in an Expert Advisor? A trading EA can be truly useful only if it can use custom indicators; otherwise, it is just a set of code and instructions.

Data Science and Machine Learning (Part 05): Decision Trees

Decision trees imitate the way humans think when classifying data. Let's see how to build trees and use them to classify and predict data. The main goal of the decision tree algorithm is to split impure data into pure, or nearly pure, nodes.
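
To make "splitting impure data into pure nodes" concrete, here is a minimal sketch assuming Gini impurity as the impurity measure (entropy is another common choice; which one the article uses is not stated here). The function names and the buy/sell sample data are hypothetical. The search simply tries every observed value as a threshold and keeps the split whose child nodes have the lowest size-weighted impurity.

```python
from collections import Counter

def gini_impurity(labels):
    """Gini impurity 1 - sum(p_k^2); 0.0 means the node is pure."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def split_impurity(left, right):
    """Size-weighted average impurity of the two child nodes of a split."""
    n = len(left) + len(right)
    return (len(left) / n) * gini_impurity(left) + (len(right) / n) * gini_impurity(right)

def best_threshold(values, labels):
    """Try each observed value t as a split (value <= t goes left) and return
    the (t, weighted impurity) pair with the lowest impurity, or None."""
    best = None
    for t in sorted(set(values)):
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        if not left or not right:
            continue  # a split must produce two non-empty nodes
        score = split_impurity(left, right)
        if best is None or score < best[1]:
            best = (t, score)
    return best

# Hypothetical example: an indicator value and the label that followed it.
values = [10, 20, 30, 40]
labels = ["sell", "sell", "buy", "buy"]
print(gini_impurity(labels))           # 0.5 (mixed parent node)
print(best_threshold(values, labels))  # (20, 0.0) (two pure children)
```

A full tree would apply the same search recursively to each child node until the nodes are pure or a depth limit is reached.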

Learn how to design a trading system by Ichimoku

Here is a new article in our series about how to design trading systems based on the most popular indicators. We will talk about the Ichimoku indicator in detail and how to design a trading system based on it.

How to master Machine Learning

Check out this selection of useful materials which can assist traders in improving their algorithmic trading knowledge. The era of simple algorithms is passing, and it is becoming harder to succeed without the use of Machine Learning techniques and Neural Networks.

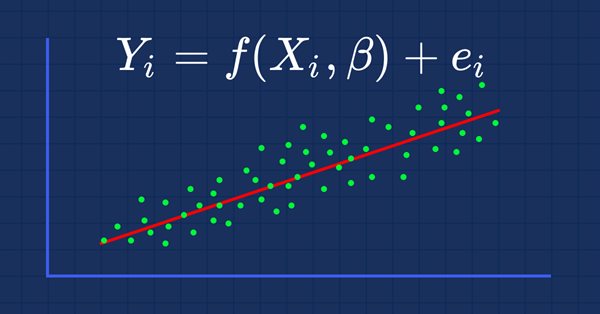

MQL5 Wizard techniques you should know (Part 01): Regression Analysis

Today's trader is a philomath who is almost always, consciously or not, looking up new ideas, trying them out, and choosing to modify or discard them: an exploratory process that demands a fair amount of diligence. This clearly places a premium on the trader's time and on the need to avoid mistakes. This series of articles proposes that the MQL5 Wizard should be a mainstay for traders. Why? Because the trader not only saves time by assembling new ideas with the MQL5 Wizard and greatly reduces mistakes from duplicate coding, but is also ultimately set up to channel their energy into the few critical areas of their trading philosophy.

Learn how to design a trading system by Volumes

Here is a new article from our series about learning how to design trading systems based on the most popular technical indicators. The current article is devoted to the Volumes indicator. Volume is one of the most important factors in financial market trading, and we need to pay attention to it. In this article, we will learn how to design a simple trading system based on the Volumes indicator.

Learn how to design a trading system by MFI

This new article from our series about designing trading systems based on the most popular technical indicators considers another technical indicator: the Money Flow Index (MFI). We will study it in detail and develop a simple trading system in MQL5 to run in MetaTrader 5.

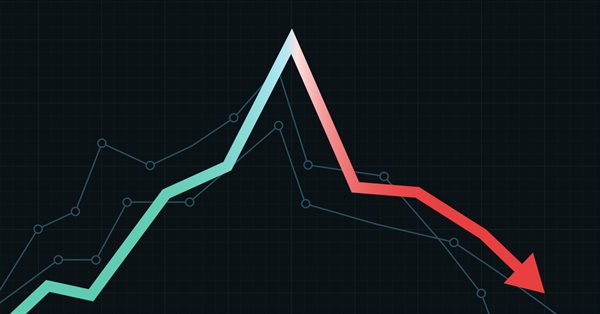

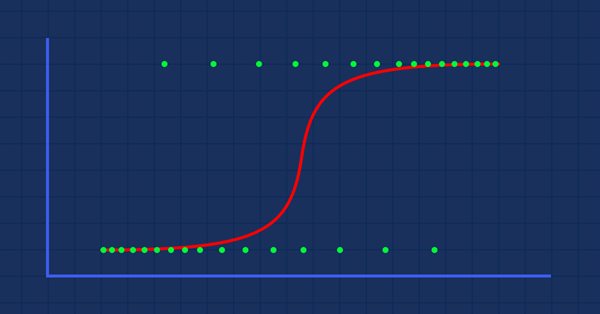

Data Science and Machine Learning (Part 04): Predicting Current Stock Market Crash

In this article, I am going to attempt to use our logistic model to predict a stock market crash based on the fundamentals of the US economy. NETFLIX and APPLE are the stocks we are going to focus on. Using the previous market crashes of 2019 and 2020, let's see how our model performs in the current doom and gloom.

Learn how to design a trading system by Accumulation/Distribution (AD)

Welcome to the new article from our series about learning how to design trading systems based on the most popular technical indicators. In this article, we will learn about a new technical indicator called Accumulation/Distribution indicator and find out how to design an MQL5 trading system based on simple AD trading strategies.

Developing a trading Expert Advisor from scratch (Part 7): Adding Volume at Price (I)

This is one of the most powerful indicators in existence. Anyone who wants to trade with a certain degree of confidence should have this indicator on their chart. Most often, the indicator is used by those who prefer “tape reading” while trading. It can also be used by those who trade using only Price Action.

Learn how to design a trading system by OBV

This new article continues our series for beginners about how to design trading systems based on popular indicators. We will learn about a new indicator, On Balance Volume (OBV), how to use it, and how to design a trading system based on it.

Multiple indicators on one chart (Part 06): Turning MetaTrader 5 into a RAD system (II)

In my previous article, I showed you how to create a Chart Trade using MetaTrader 5 objects and thus turn the platform into a RAD system. The system works very well, and many readers have probably thought about creating a library that would extend the functionality of the proposed system. Based on this, it would be possible to develop a more intuitive Expert Advisor with a nicer, easier-to-use interface.

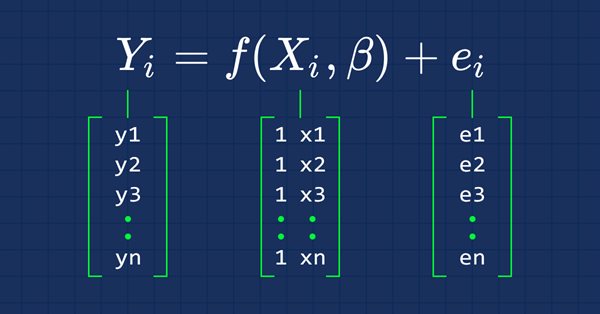

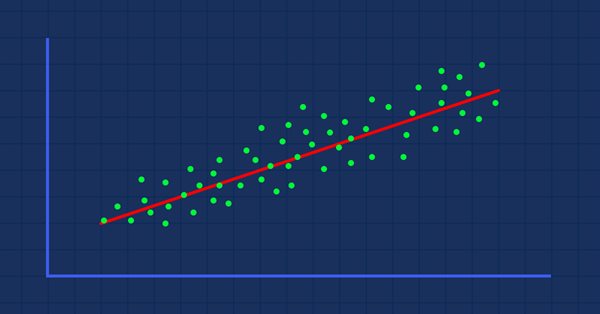

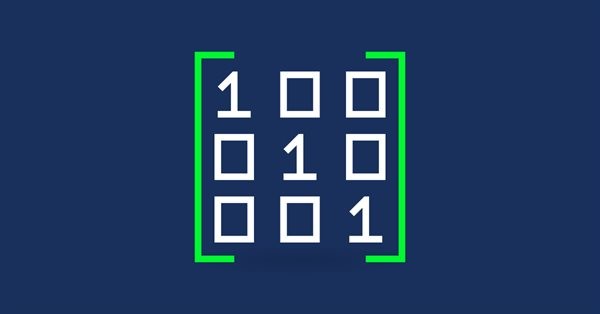

Data Science and Machine Learning (Part 03): Matrix Regressions

This time our models are built with matrices, which gives us flexibility and allows us to build powerful models that can handle not just five independent variables but many more, as long as we stay within the computational limits of a computer. This article is going to be an interesting read, that's for sure.
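
As a rough illustration of why matrices make the number of variables open-ended, multiple linear regression over $n$ observations and $k$ independent variables can be written compactly as below. This is the standard ordinary least-squares solution in matrix form, shown as a sketch; it assumes $X^\top X$ is invertible, and whether the article solves it exactly this way is an assumption. Adding another variable only adds a column to $X$; the formula itself does not change.

$$
\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\varepsilon}, \qquad
X = \begin{bmatrix} 1 & x_{11} & \cdots & x_{1k} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & x_{n1} & \cdots & x_{nk} \end{bmatrix}, \qquad
\hat{\boldsymbol{\beta}} = \left(X^\top X\right)^{-1} X^\top \mathbf{y}
$$

Here the leading column of ones in $X$ carries the intercept $\beta_0$, and $\hat{\boldsymbol{\beta}} = (\hat\beta_0, \hat\beta_1, \dots, \hat\beta_k)^\top$.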

Learn how to design a trading system by Parabolic SAR

In this article, we will continue our series about how to design trading systems using the most popular indicators. This time, we will learn about the Parabolic SAR indicator in detail and how to design a trading system for MetaTrader 5 using some simple strategies.

Multiple indicators on one chart (Part 05): Turning MetaTrader 5 into a RAD system (I)

Many people who do not know how to program are quite creative and have great ideas. However, their lack of programming knowledge prevents them from implementing these ideas. Let's see together how to create a Chart Trade using the MetaTrader 5 platform itself, as if it were an IDE.

Multiple indicators on one chart (Part 04): Advancing to an Expert Advisor

In my previous articles, I have explained how to create an indicator with multiple subwindows, which becomes interesting when using custom indicators. This time we will see how to add multiple windows to an Expert Advisor.

Learn how to design a trading system by ATR

In this article, continuing the series in which we learn how to design simple trading systems, we will learn about a new technical tool that can be used in trading. This time we will work with another popular technical indicator: Average True Range (ATR).

Learn how to design a trading system by ADX

In this article, we will continue our series about designing trading systems using the most popular indicators, and we will talk about the Average Directional Index (ADX) indicator. We will study this indicator in detail to understand it well, and we will learn how to use it through a simple strategy. By learning something in depth, we gain more insight and can use it better.

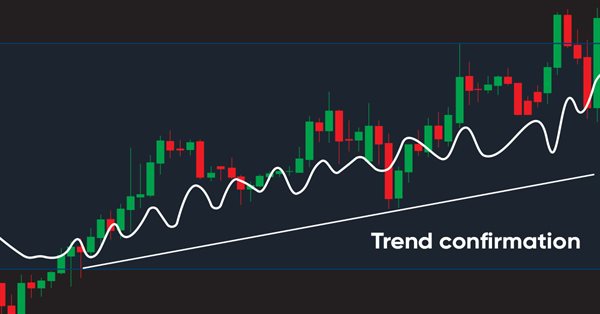

What you can do with Moving Averages

The article considers several methods of applying the Moving Average indicator. Each method involving curve analysis is accompanied by indicators that visualize the idea. In most cases, the ideas shown here belong to their respective authors. My sole task was to bring them together to let you see the main approaches and, hopefully, make more reasonable trading decisions. MQL5 proficiency level: basic.

Learn how to design a trading system by Stochastic

In this article, we continue our learning series. This time we will learn how to design a trading system using one of the most popular and useful indicators, the Stochastic Oscillator, adding a new building block to our knowledge of the basics.

Learn how to design a trading system by MACD

In this article from our series, we will learn how to design a trading system based on one of the most popular technical indicators: Moving Average Convergence Divergence (MACD).

Data Science and Machine Learning (Part 02): Logistic Regression

Data classification is crucial for algo traders and programmers. In this article, we are going to focus on logistic regression, a classification algorithm that can probably help us identify the Yeses or Nos, the Ups and Downs, the Buys and Sells.
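
A minimal sketch of how logistic regression turns features into a Yes/No (here Buy/Sell) decision: a weighted sum is squashed through the sigmoid into a probability, which is then compared with a threshold. The training loop is plain batch gradient descent on the log-loss; the function names, learning rate, epoch count, 0.5 threshold, and one-feature sample data are all hypothetical choices for illustration, not the article's code.

```python
import math

def sigmoid(z):
    """Map any real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, features):
    """P(class = 1 | features) under a logistic model."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

def fit(X, y, lr=0.1, epochs=1000):
    """Batch gradient descent on the log-loss; y holds 0/1 labels."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # derivative of log-loss w.r.t. z
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b

def classify(weights, bias, features, threshold=0.5):
    """Turn the predicted probability into a Buy/Sell label."""
    return "Buy" if predict_proba(weights, bias, features) >= threshold else "Sell"

# Hypothetical training data: one feature, label 1 = price rose on the next bar.
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
w, b = fit(X, y)
print(classify(w, b, [1.5]), classify(w, b, [-1.5]))  # Buy Sell
```

Keeping the probability from `predict_proba` rather than only the label lets a strategy demand more confidence, for example trading only when the probability exceeds 0.7.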

Learn how to design a trading system by CCI

In this new article from our series for learning how to design trading systems, I will present the Commodity Channel Index (CCI), explain its specifics, and share with you how to create a trading system based on this indicator.

Mathematics in trading: Sharpe and Sortino ratios

Return on investment is the most obvious metric that investors and novice traders use to analyze trading efficiency. Professional traders use more reliable tools to analyze strategies, such as the Sharpe and Sortino ratios, among others.
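
To show the difference between the two ratios, here is a minimal sketch using common textbook definitions: both divide the mean excess return by a risk measure, but the Sharpe ratio uses the standard deviation of all returns while the Sortino ratio uses only the downside deviation. Assumptions: per-period returns, no annualization, population (divide-by-n) statistics, and a downside target equal to the risk-free rate. The article may use a different variant, and the function names and sample returns are hypothetical.

```python
import math

def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the standard deviation of returns.
    Per-period, not annualized; population (divide-by-n) standard deviation."""
    n = len(returns)
    excess = [r - risk_free for r in returns]
    mean = sum(excess) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in excess) / n)
    if std == 0:
        raise ValueError("returns have zero variance")
    return mean / std

def sortino_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the downside deviation: the root mean
    square of the below-target (negative) excess returns over all n periods."""
    n = len(returns)
    excess = [r - risk_free for r in returns]
    mean = sum(excess) / n
    downside = math.sqrt(sum(min(0.0, x) ** 2 for x in excess) / n)
    if downside == 0:
        raise ValueError("no below-target returns")
    return mean / downside

# Hypothetical per-trade returns (0.02 = 2%).
returns = [0.02, -0.01, 0.03, -0.02, 0.01]
print(round(sharpe_ratio(returns), 4))   # penalizes all volatility
print(round(sortino_ratio(returns), 4))  # penalizes only losses
```

Because gains do not count against the Sortino ratio, a strategy with large winning trades scores higher on Sortino than on Sharpe, as it does for the sample returns above.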

MVC design pattern and its application (Part 2): Diagram of interaction between the three components

This article is a continuation and completion of the topic discussed in the previous article: the MVC pattern in MQL programs. In this article, we will consider a diagram of possible interaction between the three components of the pattern.

Learn how to design a trading system by Momentum

In my previous article, I mentioned the importance of identifying the trend, which is the direction of prices. In this article, I will share one of the most important concepts and indicators: the Momentum indicator. I will show how to design a trading system based on it.

Data Science and Machine Learning (Part 01): Linear Regression

It's time for us as traders to train our systems and ourselves to make decisions based on what the numbers say, not on what our eyes see and our guts make us believe. This is where the world is heading, so let us move perpendicular to the direction of the wave.

The correct way to choose an Expert Advisor from the Market

In this article, we will consider some of the essential points you should pay attention to when purchasing an Expert Advisor. We will also look for ways to increase profit, to spend money wisely, and to earn from this spending. Also, after reading the article, you will see that it is possible to earn even using simple and free products.

Learn how to design a trading system by RSI

In this article, I will share with you one of the most popular and commonly used indicators in the world of trading: the RSI. You will learn how to design a trading system using this indicator.

Learn how to design a trading system by Envelopes

In this article, I will share with you one of the methods for trading bands. This time we will consider Envelopes and see how easy it is to create strategies based on them.

Developing a trading Expert Advisor from scratch

In this article, we will discuss how to develop a trading robot with minimum programming. Of course, MetaTrader 5 provides a high level of control over trading positions. However, using only the manual ability to place orders can be quite difficult and risky for less experienced users.

Learn how to design a trading system by Bollinger Bands

In this article, we will learn about Bollinger Bands, one of the most popular indicators in the trading world. We will consider technical analysis and see how to design an algorithmic trading system based on the Bollinger Bands indicator.

Matrices and vectors in MQL5

By using the special data types 'matrix' and 'vector', it is possible to write code that is very close to mathematical notation. With these types, you can avoid creating nested loops or keeping track of correct array indexing in calculations. Therefore, using matrix and vector methods increases reliability and speed when developing complex programs.

Learn how to design different Moving Average systems

There are many strategies that can be used to filter the signals generated by any strategy, including the moving average itself, which is the subject of this article. The objective of this article is to share some Moving Average strategies and show how to design algorithmic trading systems based on them.