名义变量的序数编码

概述

在机器学习中处理分类数据时,我们经常会遇到名义变量。尽管这些变量可以成为建模的宝贵信息来源,但许多机器学习算法(尤其是仅处理数值数据的算法)无法直接处理它们。为了解决这一问题,我们通常会将名义变量转换为序数变量。在本文中,我们将深入探讨将名义变量转换为序数变量的复杂性。我们将探讨这种转换背后的原理,讨论为序数值赋值的各种技术,并强调每种方法的潜在优势和劣势。此外,我们将主要使用Python代码演示这些方法,同时也会用纯MQL5实现两种通用的转换方法。

理解名义变量和序数变量

名义变量表示的是各类别之间不存在内在顺序或等级的分类数据。金融时间序列数据集中的具体例子可能包括:

- 价格K线类型(例如,针形K线、纺锤线、锤子线)

- 星期几(例如,星期一、星期二、星期三)

这些变量纯粹是定性的,即各类别之间不存在隐含的层级或顺序。例如,针形K线形态并不天生优于纺锤线,多头K线也并不比空头K线更好。

在数值计算中,通常的做法是为不同类别分配任意整数。然而,如果将这些整数用作机器学习算法的输入,则存在一种风险,即分配的值可能会扭曲原始数据所传达的信息。算法可能会错误地推断出较大的值意味着某种特定的关系或等级,即使这并非初衷。

另一方面,序数变量则是各类别之间存在内在顺序或等级的分类数据。示例包括:

- 趋势强度(例如,强势趋势、温和趋势、弱势趋势)

- 波动性(例如,高波动性、低波动性)

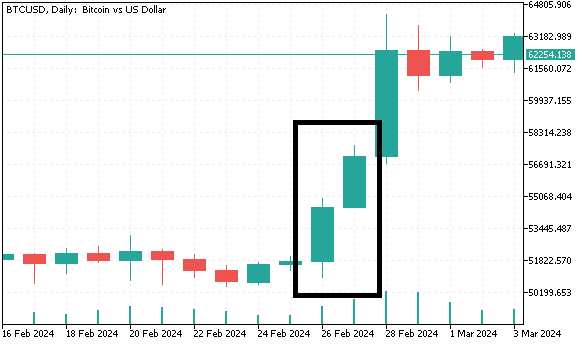

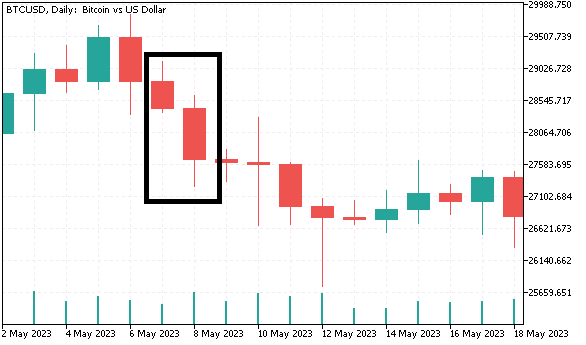

理解这一区别后,我们就能明白为什么简单地将整数分配给名义类别并不总是合适的。为了更好地理解这一点,我们将在接下来的章节中创建一个包含分类变量的数据集,并使用不同的方法将其转换为序数格式。我们将收集比特币的每日K线数据(开盘价、最高价、最低价、收盘价),并生成名义变量,用于预测次日收益率。第一个名义变量将K线分为多头或空头两类。第二个名义变量包含四个不同的类别,并根据K线实体大小与完整大小的比值对K线进行分组。最后一个名义变量则创建了三个类别:

- 如果当前K线和前一根K线均为多头,且当前K线的最低价和最高价均高于前一根K线的最低价和最高价时,我们将当前K线指定为“更高的高点”。

- 与此相反的情况被标记为“更低的低点”。

- 任何其他两根K线图的模式都属于第三类。

生成这个数据集的Python代码如下所示。

# Copyright 2024, MetaQuotes Ltd. # https://www.mql5.com # imports from datetime import datetime import MetaTrader5 as mt5 import pandas as pd import numpy as np import pytz import os from category_encoders import OrdinalEncoder, OneHotEncoder, BinaryEncoder,TargetEncoder, CountEncoder, HashingEncoder, LeaveOneOutEncoder,JamesSteinEncoder if not mt5.initialize(): print("initialize() failed ") mt5.shutdown() exit() #set up timezone infomation tz=pytz.timezone("Etc/UTC") #use time zone to set correct date for history data extraction startdate = datetime(2023,12,31,hour=23,minute=59,second=59,tzinfo=tz) stopdate = datetime(2017,12,31,hour=23,minute=59,second=59,tzinfo=tz) #list the symbol symbol = "BTCUSD" #get price history prices = pd.DataFrame(mt5.copy_rates_range(symbol,mt5.TIMEFRAME_D1,stopdate,startdate)) if len(prices) < 1: print(" Error downloading rates history ") mt5.shutdown() exit() #shutdown mt5 tether mt5.shutdown() #drop unnecessary columns prices.drop(labels=["time","tick_volume","spread","real_volume"],axis=1,inplace=True) #initialize categorical features prices["bar_type"] = np.where(prices["close"]>=prices["open"],"bullish","bearish") prices["body_type"] = np.empty((len(prices),),dtype='str') prices["bar_pattern"] = np.empty((len(prices),),dtype='str') #set feature values for i in np.arange(len(prices)): bodyratio = np.abs(prices.iloc[i,3]-prices.iloc[i,0])/np.abs(prices.iloc[i,1]-prices.iloc[i,2]) if bodyratio >= 0.75: prices.iloc[i,5] = ">=0.75" elif bodyratio < 0.75 and bodyratio >= 0.5: prices.iloc[i,5]=">=0.5<0.75" elif bodyratio < 0.5 and bodyratio >= 0.25: prices.iloc[i,5]=">=0.25<0.5" else: prices.iloc[i,5]="<0.25" if i < 1: prices.iloc[i,6] = None continue if(prices.iloc[i,4]=="bullish" and prices.iloc[i-1,4]=="bullish") and (prices.iloc[i,1]>prices.iloc[i-1,1]) and (prices.iloc[i,2]>prices.iloc[i-1,2]): prices.iloc[i,6] = "higherHigh" elif(prices.iloc[i,4]=="bearish" and prices.iloc[i-1,4]=="bearish") and (prices.iloc[i,2]<prices.iloc[i-1,2]) and (prices.iloc[i,1]<prices.iloc[i-1,1]): prices.iloc[i,6] = "lowerLow" else : prices.iloc[i,6] = "flat" #calculate target look_ahead = 1 prices["target"] = np.log(prices["close"]) prices["target"] = prices["target"].diff(look_ahead) prices["target"] = prices["target"].shift(-look_ahead) #drop rows with NA values prices.dropna(axis=0,inplace=True,ignore_index=True) print("Full feature matrix \n",prices.head())

请注意,在Python中,类别被赋予了文字名称,而在随后的MQL5代码列表中,使用整数来区分类别。

//get relative shift of is and oos sets int trainstart,trainstop; trainstart=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStartDate); trainstop=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStopDate); //check for errors from ibarshift calls if(trainstart<0 || trainstop<0) { Print(ErrorDescription(GetLastError())); return; } //---set the size of the sample sets size_insample=(trainstop - trainstart) + 1; //---check for input errors if(size_insample<=0) { Print("Invalid inputs "); return; } //--- if(!predictors.Resize(size_insample,3)) { Print("ArrayResize error ",ErrorDescription(GetLastError())); return; } //--- if(!prices.CopyRates(SetSymbol,tf,COPY_RATES_VERTICAL|COPY_RATES_OHLC,TrainingSampleStartDate,TrainingSampleStopDate)) { Print("Copyrates error ",ErrorDescription(GetLastError())); return; } //--- targets = log(prices.Col(3)); targets = np::diff(targets); //--- double bodyratio = 0.0; for(ulong i = 0; i<prices.Rows(); i++) { if(prices[i][3]<prices[i][0]) predictors[i][0] = 0.0; else predictors[i][0] = 1.0; bodyratio = MathAbs(prices[i][3]-prices[i][0])/MathAbs(prices[i][1]-prices[i][2]); if(bodyratio >=0.75) predictors[i][1] = 0.0; else if(bodyratio<0.75 && bodyratio>=0.5) predictors[i][1] = 1.0; else if(bodyratio<0.5 && bodyratio>=0.25) predictors[i][1] = 2.0; else predictors[i][1] = 3.0; if(i<1) { predictors[i][2] = 0.0; continue; } if(predictors[i][0]==1.0 && predictors[i-1][0]==1.0 && prices[i][1]>prices[i-1][1] && prices[i][2]>prices[i-1][2]) predictors[i][2] = 2.0; else if(predictors[i][0]==0.0 && predictors[i-1][0]==0.0 && prices[i][2]<prices[i-1][2] && prices[i][1]>prices[i-1][1]) predictors[i][2] = 1.0; else predictors[i][2] = 0.0; } targets = np::sliceVector(targets,1); prices = np::sliceMatrixRows(prices,1,predictors.Rows()-1); predictors = np::sliceMatrixRows(predictors,1,predictors.Rows()-1); matrix fullFeatureMatrix(predictors.Rows(),predictors.Cols()+prices.Cols()); if(!np::matrixCopyCols(fullFeatureMatrix,prices,0,prices.Cols()) || !np::matrixCopyCols(fullFeatureMatrix,predictors,prices.Cols())) { Print("Failed to merge matrices"); return; }

以下是数据集的一个片段。

为什么要将名义变量转换为序数变量

一些机器学习算法,如决策树,可以直接处理名义数据。然而,其他算法——尤其是像逻辑回归或神经网络这样的线性模型——需要数值输入。将名义变量转换为序数变量,可以用这些模型解释它们,从而使算法能够更有效地从数据中学习。尽管序数变量代表类别,但它们还提供了明确的进展或排名,为算法提供了更多内容以便更好地理解关系。当名义变量与目标变量共享大量信息时,通常对其测量水平的提升是有利的。如果名义变量包含有意义的数值,那么这些数值可以直接作为模型的输入。然而,即使这些值缺乏内在的数值意义,我们也可以根据它们与目标变量的关系为其分配序数值。

通过将名义变量提升到序数尺度,我们引入了类别之间的顺序或等级概念。这样能够增强模型捕捉该变量与目标变量之间潜在模式和关系的能力。尽管从理论上讲,可以将名义变量提升到与目标变量相同的测量级别,但在实际操作中,将其转换为序数量表通常就足够了。这种方法在保留变量信息内容与减少噪声之间取得了平衡。在接下来的章节中,我们将探讨将名义变量转换为序数形式的常用技术,以及为保持数据完整性所需考虑的关键因素。

名义变量转换技术

我们先从最简单的方法开始:序数编码(ordinal encoding)。在这种方法中,我们只需为每个类别分配一个整数值。如前所述,这会给类别强加一种等级排序。如果使用者熟悉数据,并事先了解各类别与目标变量之间的关系,那么这种方法就足够了。然而,在无监督学习的情况下不应使用序数编码,因为它很容易在没有顺序关系的情况下引入偏差(即暗示了一种本不存在的顺序)。

要在Python中转换名义变量数据集,我们将使用category_encoders包。该包提供了广泛的类别转换实现方法,适用于大多数的任务。读者可以在该项目的GitHub仓库中找到更多信息。

将变量转换为序数数值格式需要使用OrdinalEncoder对象。

#Ordinal encoding ord_encoder = OrdinalEncoder(cols = ["bar_type","body_type","bar_pattern"]) ordinal_data = ord_encoder.fit_transform(prices) print(" ordinal encoding\n ", ordinal_data.head())

转换后的数据:

用于无监督学习算法的适当转换技术包括二进制编码、独热编码和频率编码。独热编码(One-hot encoding )将每个类别转换为一个二进制列,其中类别存在用1标记,不存在用0标记。这种方法的主要缺点是它显著增加了输入变量的数量。每个类别的变量都会创建一个新的变量。例如,如果我们对一年中的月份进行编码,我们将得到11个额外的输入变量。

OneHotEncoder对象在category_encoders包中处理独热编码。

#One-Hot encoding onehot_encoder = OneHotEncoder(cols = ["bar_type","body_type","bar_pattern"]) onehot_data = onehot_encoder.fit_transform(prices) print(" ordinal encoding\n ", onehot_data.head())

转换后的数据:

二进制编码(Binary encoding)是一种更高效的替代方法,尤其在处理大量类别时效果明显。在这种方法中,每个类别首先被转换为一个唯一的整数,然后将该整数表示为二进制数。这种二进制表示会分布在多个列中,与独热编码相比,通常所需的列数更少。例如,要对12个月份进行编码,仅需4个二进制列即可。当分类变量具有许多唯一类别,且您希望限制输入变量的数量时,二进制编码非常适用。

对我们的BTCUSD数据集进行二进制编码 。

#Binary encoding binary_encoder = BinaryEncoder(cols = ["bar_type","body_type","bar_pattern"]) binary_data = binary_encoder.fit_transform(prices) print(" binary encoding\n ", binary_data.head())

转换后的数据:

频率编码(Frequency encoding)通过对数据集中每个类别的出现频率来替换该类别,从而实现对分类变量的转换。这种方法不会创建多个列,而是用每个类别出现的比例或次数来替换该类别。当某个类别的出现频率与目标变量之间存在有意义的关系时,这种方法非常有用,因为它能以更紧凑的形式保留有价值的信息。然而,如果数据集中某些类别占主导地位,这种方法可能会引入偏差。

在无监督学习的场景中,它通常被用作更复杂的特征工程流程中的第一步。

在此我们使用'CountEncoder'对象。

#Frequency encoding freq_encoder = CountEncoder(cols = ["bar_type","body_type","bar_pattern"]) freq_data = freq_encoder.fit_transform(prices) print(" frequency encoding\n ", freq_data.head())

转换后的数据:

二进制编码、独热编码和频率编码作为多功能技术,可应用于大多数分类数据,且不会引入可能影响学习结果的意外情况。这些转换方法具有一个重要特性,即它们独立于目标变量。

然而,在某些情况下,机器学习算法可能会受益于能够反映变量与目标之间关联的转换方法。这些方法利用目标变量将分类数据转换为具有一定关联性的数值,从而可能增强模型的预测能力。

其中一种方法是目标编码(target encoding),也称为均值编码(mean encoding)。这种方法用每个类别对应的目标变量的均值来替换该类别。例如,如果正在预测股票收盘价上涨的可能性(二元目标变量),那么我们可以将名义变量(如交易量范围)中的每个类别替换为每个范围对应的平均收盘上涨概率。对于高基数(高类别数量)的分类变量,目标编码尤其强大,因为它能在不增加数据集维度的情况下整合有用信息。这种技术有助于捕捉分类变量与目标之间的关系,使其在监督学习任务中效果显著。当类别与目标之间存在强相关性时,这种方法最有效。但同时,它也必须与防止过拟合的措施相结合,以确保更好的泛化能力。

#Target encoding target_encoder = TargetEncoder(cols = ["bar_type","body_type","bar_pattern"]) target_data = target_encoder.fit_transform(prices[["open","high","low","close","bar_type","body_type","bar_pattern"]], prices["target"]) print(" target encoding\n ", target_data.head())

转换后的数据:

另一种依赖目标变量的方法是留一法编码(leave-one-out encoding)。其工作原理与目标编码类似,但在计算某个类别的均值时,会排除当前行的目标值来进行调整。这有助于减少过拟合,尤其是在数据集较小或某些类别占比过高的情况下。留一法编码确保转换过程与正在处理的行相互独立,从而维护学习过程的完整性。

#LeaveOneOut encoding oneout_encoder = LeaveOneOutEncoder(cols = ["bar_type","body_type","bar_pattern"]) oneout_data = oneout_encoder.fit_transform(prices[["open","high","low","close","bar_type","body_type","bar_pattern"]], prices["target"]) print(" LeaveOneOut encoding\n ", oneout_data.head())

转换后的数据:

詹姆斯-斯坦因编码(James-Stein encoding)是一种基于贝叶斯方法的编码技术,它会根据每个类别可用的数据量,将该类别目标均值的估计值向总体均值收缩。对于低基数数据集而言,这种技术尤为有用。在低基数数据集中,传统方法(如目标编码或留一法编码)可能会导致过拟合,尤其是在数据集规模较小或类别分布严重不均的情况下。詹姆斯-斯坦因编码通过基于总体均值调整类别均值,降低了极端值对模型产生不利影响的风险。由此得出的估计值更为稳定可靠,因此,在处理稀疏数据或观测值较少的类别时,该技术是一种有效的替代方案。

#James Stein encoding james_encoder = JamesSteinEncoder(cols = ["bar_type","body_type","bar_pattern"]) james_data = james_encoder.fit_transform(prices[["open","high","low","close","bar_type","body_type","bar_pattern"]], prices["target"]) print(" James Stein encoding\n ", james_data.head())

转换后的数据:

category_encoders库提供了多种针对不同类型的分类数据和机器学习任务的编码技术。选择合适的编码方法取决于数据的性质、所使用的机器学习算法以及当前任务的具体要求。总而言之,独热编码是一种适用于多种应用场景的多功能方法,特别是在处理名义变量时效果显著。当需要强调变量与目标变量之间的关系时,应采用目标编码、留一法编码和詹姆斯-斯坦因编码。最后,当目标是在保留有意义信息的同时又能降低维度,二进制编码和哈希编码是实用的技术。

MQL5中的名义变量转序数变量

在本节中,我们将在MQL5中实现两种名义变量编码方法,这两种方法均封装在头文件nom2ord.mqh中声明的类中。COneHotEncoder类实现了针对数据集的独热编码,并提供了两个用户应熟悉的主要方法。

//+------------------------------------------------------------------+ //| one hot encoder class | //+------------------------------------------------------------------+ class COneHotEncoder { private: vector m_mapping[]; ulong m_cat_cols[]; ulong m_vars,m_cols; public: //+------------------------------------------------------------------+ //| Constructor | //+------------------------------------------------------------------+ COneHotEncoder(void) { } //+------------------------------------------------------------------+ //| Destructor | //+------------------------------------------------------------------+ ~COneHotEncoder(void) { ArrayFree(m_mapping); } //+------------------------------------------------------------------+ //| map categorical features of a training dataset | //+------------------------------------------------------------------+ bool fit(matrix &in_data,ulong &cols[]) { m_cols = in_data.Cols(); matrix data = np::selectMatrixCols(in_data,cols); if(data.Cols()!=ulong(cols.Size())) { Print(__FUNCTION__, " invalid input data "); return false; } m_vars = ulong(cols.Size()); if(ArrayCopy(m_cat_cols,cols)<=0 || !ArraySort(m_cat_cols)) { Print(__FUNCTION__, " ArrayCopy or ArraySort failure ", GetLastError()); return false; } if(ArrayResize(m_mapping,int(m_vars))<0) { Print(__FUNCTION__, " Vector array resize failure ", GetLastError()); return false; } for(ulong i = 0; i<m_vars; i++) { vector unique = data.Col(i); m_mapping[i] = np::unique(unique); } return true; } //+------------------------------------------------------------------+ //| Transform abitrary feature matrix to learned category m_mapping | //+------------------------------------------------------------------+ matrix transform(matrix &in_data) { if(in_data.Cols()!=m_cols) { Print(__FUNCTION__," Column dimension of input not equal to ", m_cols); return matrix::Zeros(1,1); } matrix out,input_copy; matrix data = np::selectMatrixCols(in_data,m_cat_cols); if(data.Cols()!=ulong(m_cat_cols.Size())) { Print(__FUNCTION__, " invalid input data "); return matrix::Zeros(1,1); } ulong unchanged_feature_cols[]; for(ulong i = 0; i<in_data.Cols(); i++) { int found = ArrayBsearch(m_cat_cols,i); if(m_cat_cols[found]!=i) { if(!unchanged_feature_cols.Push(i)) { Print(__FUNCTION__, " Failed array insertion ", GetLastError()); return matrix::Zeros(1,1); } } } input_copy = unchanged_feature_cols.Size()?np::selectMatrixCols(in_data,unchanged_feature_cols):input_copy; ulong numcols = 0; vector cumsum = vector::Zeros(ulong(MathMin(m_vars,data.Cols()))); for(ulong i = 0; i<cumsum.Size(); i++) { cumsum[i] = double(numcols); numcols+=m_mapping[i].Size(); } out = matrix::Zeros(data.Rows(),numcols); for(ulong i = 0;i<data.Rows(); i++) { vector row = data.Row(i); for(ulong col = 0; col<row.Size(); col++) { for(ulong k = 0; k<m_mapping[col].Size(); k++) { if(MathAbs(row[col]-m_mapping[col][k])<=1.e-15) { out[i][ulong(cumsum[col])+k]=1.0; break; } } } } matrix newfeaturematrix(out.Rows(),input_copy.Cols()+out.Cols()); if((input_copy.Cols()>0 && !np::matrixCopyCols(newfeaturematrix,input_copy,0,input_copy.Cols())) || !np::matrixCopyCols(newfeaturematrix,out,input_copy.Cols())) { Print(__FUNCTION__, " Failed matrix copy "); return matrix::Zeros(1,1); } return newfeaturematrix; } }; //+------------------------------------------------------------------+

第一个方法是fit(),在创建该类的实例后应调用此方法。该方法需要两个输入:一个特征矩阵(训练数据)和一个数组。特征矩阵可以是完整的数据集,包括分类变量和非分类变量。如果情况如此,则该数组应包含矩阵中名义变量的列索引。如果提供的是空数组,则假定所有变量均为名义变量。在fit()方法成功执行并返回真值后,可以调用transform()方法以获取转换后的数据集。该方法需要一个与提供给fit()方法的矩阵具有相同列数的矩阵。如果维度不匹配,则会报错。

让我们通过使用本文前面准备的BTCUSD数据集进行演示,来考察COneHotEncoder类的工作原理。以下代码段展示了转换过程。这段代码摘自MetaTrader 5脚本OneHotEncoding_demo.mq5。

//+------------------------------------------------------------------+ //| OneHotEncoding_demo.mq5 | //| Copyright 2024, MetaQuotes Ltd. | //| https://www.mql5.com | //+------------------------------------------------------------------+ #property copyright "Copyright 2024, MetaQuotes Ltd." #property link "https://www.mql5.com" #property version "1.00" #property script_show_inputs #include<np.mqh> #include<nom2ord.mqh> #include<ErrorDescription.mqh> //--- input parameters input datetime TrainingSampleStartDate=D'2023.12.31'; input datetime TrainingSampleStopDate=D'2017.12.31'; input ENUM_TIMEFRAMES tf = PERIOD_D1; input string SetSymbol="BTCUSD"; //+------------------------------------------------------------------+ //|global integer variables | //+------------------------------------------------------------------+ int size_insample, //training set size size_observations, //size of of both training and testing sets combined price_handle=INVALID_HANDLE; //log prices indicator handle //+------------------------------------------------------------------+ //|double global variables | //+------------------------------------------------------------------+ matrix prices; //array for log transformed prices vector targets; //differenced prices kept here matrix predictors; //flat array arranged as matrix of all predictors ie size_observations by size_predictors //+------------------------------------------------------------------+ //| Script program start function | //+------------------------------------------------------------------+ void OnStart() { //get relative shift of is and oos sets int trainstart,trainstop; trainstart=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStartDate); trainstop=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStopDate); //check for errors from ibarshift calls if(trainstart<0 || trainstop<0) { Print(ErrorDescription(GetLastError())); return; } //---set the size of the sample sets size_insample=(trainstop - trainstart) + 1; //---check for input errors if(size_insample<=0) { Print("Invalid inputs "); return; } //--- if(!predictors.Resize(size_insample,3)) { Print("ArrayResize error ",ErrorDescription(GetLastError())); return; } //--- if(!prices.CopyRates(SetSymbol,tf,COPY_RATES_VERTICAL|COPY_RATES_OHLC,TrainingSampleStartDate,TrainingSampleStopDate)) { Print("Copyrates error ",ErrorDescription(GetLastError())); return; } //--- targets = log(prices.Col(3)); targets = np::diff(targets); //--- double bodyratio = 0.0; for(ulong i = 0; i<prices.Rows(); i++) { if(prices[i][3]<prices[i][0]) predictors[i][0] = 0.0; else predictors[i][0] = 1.0; bodyratio = MathAbs(prices[i][3]-prices[i][0])/MathAbs(prices[i][1]-prices[i][2]); if(bodyratio >=0.75) predictors[i][1] = 0.0; else if(bodyratio<0.75 && bodyratio>=0.5) predictors[i][1] = 1.0; else if(bodyratio<0.5 && bodyratio>=0.25) predictors[i][1] = 2.0; else predictors[i][1] = 3.0; if(i<1) { predictors[i][2] = 0.0; continue; } if(predictors[i][0]==1.0 && predictors[i-1][0]==1.0 && prices[i][1]>prices[i-1][1] && prices[i][2]>prices[i-1][2]) predictors[i][2] = 2.0; else if(predictors[i][0]==0.0 && predictors[i-1][0]==0.0 && prices[i][2]<prices[i-1][2] && prices[i][1]>prices[i-1][1]) predictors[i][2] = 1.0; else predictors[i][2] = 0.0; } targets = np::sliceVector(targets,1); prices = np::sliceMatrixRows(prices,1,predictors.Rows()-1); predictors = np::sliceMatrixRows(predictors,1,predictors.Rows()-1); matrix fullFeatureMatrix(predictors.Rows(),predictors.Cols()+prices.Cols()); if(!np::matrixCopyCols(fullFeatureMatrix,prices,0,prices.Cols()) || !np::matrixCopyCols(fullFeatureMatrix,predictors,prices.Cols())) { Print("Failed to merge matrices"); return; } if(predictors.Rows()!=targets.Size()) { Print(" Error in aligning data structures "); return; } COneHotEncoder enc; ulong selectedcols[] = {4,5,6}; if(!enc.fit(fullFeatureMatrix,selectedcols)) return; matrix transformed = enc.transform(fullFeatureMatrix); Print(" Original predictors \n", fullFeatureMatrix); Print(" transformed predictors \n", transformed); } //+------------------------------------------------------------------+

之前的特征矩阵:

RQ 0 16:40:41.760 OneHotEncoding_demo (BTCUSD,D1) Original predictors ED 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,0,2,0] RN 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [13348,15381,12535.67,14689,1,2,0] DG 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,1,1,2] HH 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [15114,15370,13786.18,15139,1,3,0] QN 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,1,1,2] RP 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,1,1,0] OI 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [16187,16258,13639.83,14900,0,2,0] ML 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14884,15334,13777.33,14405,0,2,0] GS 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14405,14876,12969.58,14876,1,3,0] KF 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14876,14927,12417.22,13245,0,1,0] ON 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,1,1,0…]]

之后的特征矩阵

PH 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) transformed predictors KP 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,1,0,1,0,0,0,1,0,0] KP 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [13348,15381,12535.67,14689,0,1,1,0,0,0,1,0,0] NF 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,0,1,0,1,0,0,0,1,0] JI 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [15114,15370,13786.18,15139,0,1,0,0,1,0,1,0,0] CL 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,0,1,0,1,0,0,0,1,0] RL 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,0,1,0,1,0,0,1,0,0] IS 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [16187,16258,13639.83,14900,1,0,1,0,0,0,1,0,0] GG 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14884,15334,13777.33,14405,1,0,1,0,0,0,1,0,0] QK 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14405,14876,12969.58,14876,0,1,0,0,1,0,1,0,0] PL 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14876,14927,12417.22,13245,1,0,0,1,0,0,1,0,0] GS 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,0,1,0,1,0,0,1,0,0.]]

MQL5中实现的第二种转换方法有两种运行模式,这两种模式均是目标编码的变体,并经过修改以减少过拟合的影响。该技术封装在nom2ord.mqh文件中定义的CNomOrd类中。该类采用常用的fit()和transform()方法,在不对分类输入的维度进行缩减的情况下完成变量转换。

public: //+------------------------------------------------------------------+ //| constructor | //+------------------------------------------------------------------+ CNomOrd(void) { } //+------------------------------------------------------------------+ //| destructor | //+------------------------------------------------------------------+ ~CNomOrd(void) { } //+------------------------------------------------------------------+ //| fit mapping to training data | //+------------------------------------------------------------------+ bool fit(matrix &preds_in, ulong &cols[], vector &target) { m_dim_reduce = 0; mapped = false; //--- if(cols.Size()==0 && preds_in.Cols()) { m_pred = int(preds_in.Cols()); if(!np::arange(m_colindices,m_pred,ulong(0),ulong(1))) { Print(__FUNCTION__, " arange error "); return mapped; } } else { m_pred = int(cols.Size()); } //--- m_rows = int(preds_in.Rows()) ; m_cols = int(preds_in.Cols()); //--- if(ArrayResize(m_mean_rankings,m_pred)<0 || ArrayResize(m_rankings,m_rows)<0 || ArrayResize(m_indices,m_rows)<0 || ArrayResize(m_mapping,m_pred)<0 || ArrayResize(m_class_counts,m_pred)<0 || !m_median.Resize(m_pred) || !m_class_ids.Resize(m_rows,m_pred) || !shuffle_target.Resize(m_rows,2) || (cols.Size()>0 && ArrayCopy(m_colindices,cols)<0) || !ArraySort(m_colindices)) { Print(__FUNCTION__, " Memory allocation failure ", GetLastError()); return mapped; } //--- for(uint col = 0; col<m_colindices.Size(); col++) { vector var = preds_in.Col(m_colindices[col]); m_mapping[col] = np::unique(var); m_class_counts[col] = vector::Zeros(m_mapping[col].Size()); for(ulong i = 0; i<var.Size(); i++) { for(ulong j = 0; j<m_mapping[col].Size(); j++) { if(MathAbs(var[i]-m_mapping[col][j])<=1.e-15) { m_class_ids[i][col]=double(j); ++m_class_counts[col][j]; break; } } } } m_target = target; for(uint i = 0; i<m_colindices.Size(); i++) { vector cid = m_class_ids.Col(i); vector ccounts = m_class_counts[i]; m_mean_rankings[i] = train(cid,ccounts,m_target,m_median[i]); } mapped = true; return mapped; } //+------------------------------------------------------------------+ //| transform nominal to ordinal based on learned mapping | //+------------------------------------------------------------------+ matrix transform(matrix &data_in) { if(m_dim_reduce) { Print(__FUNCTION__, " Invalid method call, Use fitTransform() or call fit() "); return matrix::Zeros(1,1); } if(!mapped) { Print(__FUNCTION__, " Invalid method call, training either failed or was not done. Call fit() first. "); return matrix::Zeros(1,1); } if(data_in.Cols()!=ulong(m_cols)) { Print(__FUNCTION__, " Unexpected input data shape, doesnot match training data "); return matrix::Zeros(1,1); } //--- matrix out = data_in; //--- for(uint col = 0; col<m_colindices.Size(); col++) { vector var = data_in.Col(m_colindices[col]); for(ulong i = 0; i<var.Size(); i++) { for(ulong j = 0; j<m_mapping[col].Size(); j++) { if(MathAbs(var[i]-m_mapping[col][j])<=1.e-15) { out[i][m_colindices[col]]=m_mean_rankings[col][j]; break; } } } } //--- return out; }

fit()方法需要一个附加的输入:一个表示相应目标变量的向量。该编码方案不同于标准的目标编码,旨在最大程度地减少过拟合现象,而过拟合通常是由目标变量分布中的异常值引起的。为了解决这一问题,该类采用了百分位数转换方法。目标值被转换到一个基于百分位数的量表上,其中最小值被赋予百分位数排名0,最大值被赋予排名100,中间值则按比例缩放。这种方法在保留值之间的序数关系的同时,有效削弱了异常值的影响。

//+------------------------------------------------------------------+ //| test for a genuine relationship between predictor and target | //+------------------------------------------------------------------+ double score(int reps, vector &test_target,ulong selectedVar=0) { if(!mapped) { Print(__FUNCTION__, " Invalid method call, training either failed or was not done. Call fit() first. "); return -1.0; } if(m_dim_reduce==0 && selectedVar>=ulong(m_colindices.Size())) { Print(__FUNCTION__, " invalid predictor selection "); return -1.0; } if(test_target.Size()!=m_rows) { Print(__FUNCTION__, " invalid targets parameter, Does not match shape of training data. "); return -1.0; } int i, j, irep, unif_error ; double dtemp, min_neg, max_neg, min_pos, max_pos, medn ; dtemp = 0.0; min_neg = 0.0; max_neg = -DBL_MIN; min_pos = DBL_MAX; max_pos = DBL_MIN ; vector id = (m_dim_reduce)?m_class_ids.Col(0):m_class_ids.Col(selectedVar); vector cc = (m_dim_reduce)?m_class_counts[0]:m_class_counts[selectedVar]; int nclasses = int(cc.Size()); if(reps < 1) reps = 1 ; for(irep=0 ; irep<reps ; irep++) { if(!shuffle_target.Col(test_target,0)) { Print(__FUNCTION__, " error filling shuffle_target column ", GetLastError()); return -1.0; } if(irep) { i = m_rows ; while(i > 1) { j = (int)(MathRandomUniform(0.0,1.0,unif_error) * i) ; if(unif_error) { Print(__FUNCTION__, " mathrandomuniform() error ", unif_error); return -1.0; } if(j >= i) j = i - 1 ; dtemp = shuffle_target[--i][0] ; shuffle_target[i][0] = shuffle_target[j][0] ; shuffle_target[j][0] = dtemp ; } } vector totrain = shuffle_target.Col(0); vector m_ranks = train(id,cc,totrain,medn) ; if(irep == 0) { for(i=0 ; i<nclasses ; i++) { if(i == 0) min_pos = max_pos = m_ranks[i] ; else { if(m_ranks[i] > max_pos) max_pos = m_ranks[i] ; if(m_ranks[i] < min_pos) min_pos = m_ranks[i] ; } } // For i<nclasses orig_max_class = max_pos - min_pos ; count_max_class = 1 ; } else { for(i=0 ; i<nclasses ; i++) { if(i == 0) min_pos = max_pos = m_ranks[i]; else { if(m_ranks[i] > max_pos) max_pos = m_ranks[i] ; if(m_ranks[i] < min_pos) min_pos = m_ranks[i] ; } } // For i<nclasses if(max_pos - min_pos >= orig_max_class) ++count_max_class ; } } if(reps <= 1) return -1.0; return double(count_max_class)/ double(reps); }

score()方法用于评估序数变量与目标变量之间隐含关系的统计显著性。该方法采用蒙特卡洛置换检验,通过反复打乱目标变量数据并重新计算观察到的关系来进行检验。通过将观察到的关系与通过随机置换得到的关系分布进行比较,我们可以估算出观察到的关系仅仅是由于偶然因素导致的概率。为了量化观察到的关系,我们计算名义变量所有类别中目标变量百分位数最大均值与最小均值之间的差值。因此,score()方法返回一个概率值,作为假设检验的结果,其中原假设为:观察到的差异可能仅仅是由无关的名义变量和目标变量偶然产生的。

下面,我们通过将该类应用于BTCUSD数据集,来看看其具体是如何工作的。本演示在MQL5脚本TargetBasedNominalVariableConversion_demo.mq5中提供。

CNomOrd enc; ulong selectedcols[] = {4,5,6}; if(!enc.fit(fullFeatureMatrix,selectedcols,targets)) return; matrix transformed = enc.transform(fullFeatureMatrix); Print(" Original predictors \n", fullFeatureMatrix); Print(" transformed predictors \n", transformed); for(uint i = 0; i<selectedcols.Size(); i++) Print(" Probability that predicator at ", selectedcols[i] , " is associated with target ", enc.score(10000,targets,ulong(i)));

转换后的数据:

IQ 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) transformed predictors LM 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,52.28360492434251,50.66453470243147,50.45172139701621] CN 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [13348,15381,12535.67,14689,47.85025875164135,50.66453470243147,50.45172139701621] IH 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,47.85025875164135,49.77386885151457,48.16372967916465] FF 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [15114,15370,13786.18,15139,47.85025875164135,49.23046392011166,50.45172139701621] HR 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,47.85025875164135,49.77386885151457,48.16372967916465] EM 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,47.85025875164135,49.77386885151457,50.45172139701621] RK 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [16187,16258,13639.83,14900,52.28360492434251,50.66453470243147,50.45172139701621] LG 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14884,15334,13777.33,14405,52.28360492434251,50.66453470243147,50.45172139701621] QD 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14405,14876,12969.58,14876,47.85025875164135,49.23046392011166,50.45172139701621] HP 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14876,14927,12417.22,13245,52.28360492434251,49.77386885151457,50.45172139701621] PO 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,47.85025875164135,49.77386885151457,50.45172139701621…]]

用于估算转换后变量与目标变量之间关系的p值。

NS 0 16:44:29.287 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) Probability that predicator at 4 is associated with target 0.0005 IR 0 16:44:32.829 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) Probability that predicator at 5 is associated with target 0.7714 JS 0 16:44:36.406 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) Probability that predicator at 6 is associated with target 0.749

结果表明,在所有分类变量中,只有多头/空头分类与目标变量显著相关。相比之下,双K线形态和K线实体大小与目标变量之间并未表现出明显的对应关系。这表明转换后的变量与随机变量并无太大差异。

最后的演示展示了如何使用该类将一组名义变量进行转换,并结合降维处理。这一功能通过调用fitTransform()方法实现,该方法接受与fit()方法相同的输入参数,并返回一个矩阵,其中包含从转换后的名义变量中派生出的单一代表性变量。该操作始终能将任意数量的名义变量缩减为一个序数变量。

//+------------------------------------------------------------------+ //| categorical conversion with dimensionality reduction | //+------------------------------------------------------------------+ matrix fitTransform(matrix &preds_in, ulong &cols[], vector &target) { //--- if(preds_in.Cols()<2) { if(!fit(preds_in,cols,target)) { Print(__FUNCTION__, " error at ", __LINE__); return matrix::Zeros(1,1); } //--- return transform(preds_in); } //--- m_dim_reduce = 1; mapped = false; //--- if(cols.Size()==0 && preds_in.Cols()) { m_pred = int(preds_in.Cols()); if(!np::arange(m_colindices,m_pred,ulong(0),ulong(1))) { Print(__FUNCTION__, " arange error "); return matrix::Zeros(1,1); } } else { m_pred = int(cols.Size()); } //--- m_rows = int(preds_in.Rows()) ; m_cols = int(preds_in.Cols()); //--- if(ArrayResize(m_mean_rankings,1)<0 || ArrayResize(m_rankings,m_rows)<0 || ArrayResize(m_indices,m_rows)<0 || ArrayResize(m_mapping,m_pred)<0 || ArrayResize(m_class_counts,1)<0 || !m_median.Resize(m_pred) || !m_class_ids.Resize(m_rows,m_pred) || !shuffle_target.Resize(m_rows,2) || (cols.Size()>0 && ArrayCopy(m_colindices,cols)<0) || !ArraySort(m_colindices)) { Print(__FUNCTION__, " Memory allocation failure ", GetLastError()); return matrix::Zeros(1,1); } //--- for(uint col = 0; col<m_colindices.Size(); col++) { vector var = preds_in.Col(m_colindices[col]); m_mapping[col] = np::unique(var); for(ulong i = 0; i<var.Size(); i++) { for(ulong j = 0; j<m_mapping[col].Size(); j++) { if(MathAbs(var[i]-m_mapping[col][j])<=1.e-15) { m_class_ids[i][col]=double(j); break; } } } } m_class_counts[0] = vector::Zeros(ulong(m_colindices.Size())); if(!m_class_ids.Col(m_class_ids.ArgMax(1),0)) { Print(__FUNCTION__, " failed to insert new class id values ", GetLastError()); return matrix::Zeros(1,1); } for(ulong i = 0; i<m_class_ids.Rows(); i++) ++m_class_counts[0][ulong(m_class_ids[i][0])]; m_target = target; vector cid = m_class_ids.Col(0); m_mean_rankings[0] = train(cid,m_class_counts[0],m_target,m_median[0]); mapped = true; ulong unchanged_feature_cols[]; for(ulong i = 0; i<preds_in.Cols(); i++) { int found = ArrayBsearch(m_colindices,i); if(m_colindices[found]!=i) { if(!unchanged_feature_cols.Push(i)) { Print(__FUNCTION__, " Failed array insertion ", GetLastError()); return matrix::Zeros(1,1); } } } matrix out(preds_in.Rows(),unchanged_feature_cols.Size()+1); ulong nfeatureIndex = unchanged_feature_cols.Size(); if(nfeatureIndex) { matrix input_copy = np::selectMatrixCols(preds_in,unchanged_feature_cols); if(!np::matrixCopyCols(out,input_copy,0,nfeatureIndex)) { Print(__FUNCTION__, " failed to copy matrix columns "); return matrix::Zeros(1,1); } } for(ulong i = 0; i<out.Rows(); i++) { ulong r = ulong(m_class_ids[i][0]); if(r>=m_mean_rankings[0].Size()) { Print(__FUNCTION__, " critical error , index out of bounds "); return matrix::Zeros(1,1); } out[i][nfeatureIndex] = m_mean_rankings[0][r]; } return out; }

脚本TargetBasedNominalVariableConversionWithDimReduc_demo展示了实现这一过程的方式。

CNomOrd enc; ulong selectedcols[] = {4,5,6}; matrix transformed = enc.fitTransform(fullFeatureMatrix,selectedcols,targets); Print(" Original predictors \n", fullFeatureMatrix); Print(" transformed predictors \n", transformed); Print(" Probability that predicator is associated with target ", enc.score(10000,targets));

转换后的变量。

JR 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) transformed predictors JO 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,49.36939702213909] NS 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [13348,15381,12535.67,14689,49.36939702213909] OP 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,49.36939702213909] RM 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [15114,15370,13786.18,15139,50.64271980734179] RL 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,49.36939702213909] ON 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,49.36939702213909] DO 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [16187,16258,13639.83,14900,49.36939702213909] JN 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14884,15334,13777.33,14405,49.36939702213909] EN 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14405,14876,12969.58,14876,50.64271980734179] PI 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14876,14927,12417.22,13245,50.64271980734179] FK 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,49.36939702213909…]] NQ 0 16:51:09.741 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) Probability that predicator is associated with target 0.4981

检查新变量与目标变量的关系后,得出的p值相对较高,这凸显了降维的一个局限性:信息丢失。因此,使用该方法时需谨慎。

结论

将名义变量转换为序数变量以用于机器学习,是一个强大但需细致处理的过程。它使模型能够有意义地处理分类数据,但需要谨慎考虑以避免引入偏差或错误表述。通过采用适当的转换技术——无论是手动排序、基于频率的编码、聚类还是目标编码——您可以确保机器学习模型有效处理名义变量,同时保持数据完整性。本文仅概述了常见的名义变量转换方法,但值得注意的是,还有更多高级技术可供选择。本文的主要目标是向使用者介绍序数编码的概念,以及在选择最适合其特定用例的方法时需要考虑的因素。通过了解不同编码技术的含义,使用者可以做出更明智的决策,并提高其机器学习模型的性能和可解释性。本文引用的所有代码文件均附于下方。

| 文件 | 描述 |

|---|---|

| MQL5/scripts/CategoricalVariableConversion.py | 包含分类转换示例的Python脚本 |

| MQL5/scripts/CategoricalVariableConversion.ipynb | 上述Python脚本的Jupyter笔记本版本 |

| MQL5/scripts/ OneHotEncoding_demo.mq5 | 使用独热编码在MQL5中转换名义变量的演示脚本 |

| MQL5/scripts/TargetBasedNominalVariableConversion_demo.mq5 | 使用自定义基于目标的编码方法转换名义变量的演示脚本 |

| MQL5/scripts/TargetBasedNominalVariableConversionWithDimReduc_demo.mq5 | 使用实现降维的自定义基于目标的编码方法转换名义变量的演示脚本 |

| MQL5/include/nom2ord.mqh | 包含CNomOrd和COneHotEncoder类定义的头文件 |

| MQL5/include/np.mqh | 向量和矩阵实用函数的头文件 |

本文由MetaQuotes Ltd译自英文

原文地址: https://www.mql5.com/en/articles/16056

注意: MetaQuotes Ltd.将保留所有关于这些材料的权利。全部或部分复制或者转载这些材料将被禁止。

本文由网站的一位用户撰写,反映了他们的个人观点。MetaQuotes Ltd 不对所提供信息的准确性负责,也不对因使用所述解决方案、策略或建议而产生的任何后果负责。

如何将“聪明钱”概念(OB)与斐波那契指标相结合,实现最优进场策略

如何将“聪明钱”概念(OB)与斐波那契指标相结合,实现最优进场策略

创建一个基于布林带PIRANHA策略的MQL5 EA

创建一个基于布林带PIRANHA策略的MQL5 EA

交易中的神经网络:对比形态变换器(终章)

交易中的神经网络:对比形态变换器(终章)

人工喷淋算法(ASHA)

人工喷淋算法(ASHA)