Mastering Log Records (Part 4): Saving logs to files

Introduction

In the first article of this series, Mastering Log Records (Part 1): Fundamental Concepts and First Steps in MQL5, we embarked on the creation of a custom log library for Expert Advisor (EA) development. In it, we explored the motivation behind creating such an essential tool: to overcome the limitations of MetaTrader 5’s native logs and bring a robust, customizable, and powerful solution to the MQL5 universe.

To recap the main points covered, we laid the foundation for our library by establishing the following fundamental requirements:

- Robust structure using the Singleton pattern, ensuring consistency between code components.

- Advanced persistence for storing logs in databases, providing traceable history for in-depth audits and analysis.

- Flexibility in outputs, allowing logs to be stored or displayed conveniently, whether in the console, in files, in the terminal or in a database.

- Classification by log levels, differentiating informative messages from critical alerts and errors.

- Customization of the output format, to meet the unique needs of each developer or project.

With this well-established foundation, it became clear that the logging framework we are developing will be much more than a simple event log; it will be a strategic tool for understanding, monitoring and optimizing the behavior of EAs in real time.

So far, we've explored the basics of logs, learned how to format them, and understood how handlers control the destination of messages. But where do we store these logs for future reference? Now, in this fourth article, we'll take a closer look at the process of saving logs to files. Let's get started!

Why Save Logs to Files?

Saving logs to files is an essential practice for any system that values robustness and efficient maintenance. Imagine the following scenario: your Expert Advisor has been running for days, and suddenly an unexpected error occurs. How can you understand what happened? Without permanent records, it would be like trying to solve a puzzle without having all the pieces.

Log files are not just a way to store messages. They represent the system's memory. Here are the main reasons to adopt them:

-

Persistence and History

Logs saved to files remain available even after the program has finished running. This allows historical queries to check performance, understand past behaviors and identify patterns over time.

-

Audit and Transparency

In critical projects, such as in the financial market, keeping a detailed history is essential for audits or justifications of automated decisions. A well-stored log can be your greatest defense in case of questions.

-

Diagnostics and Debugging

With log files, you can track specific errors, monitor critical events, and analyze each step of system execution.

-

Access Flexibility

Unlike logs displayed on the console or terminal, files can be accessed remotely or shared with teams, generating a shared and detailed view of important events.

-

Automation and Integration

Files can be read and analyzed by automated tools, sending alerts for critical problems or creating detailed reports on performance.

By saving logs to files, you transform a simple resource into a tool for management, tracking, and improvement. I don't need to go into much detail here justifying the importance of saving this data to a file; let's get to the point in the next topic, understanding how to implement this resource efficiently in our library.

Before going directly to the code, it is important to define the functionalities that the file handler should offer. Below, I have detailed each of the necessary requirements:

-

Directory, Name and File Type Customization

Allow users to configure:

- The directory where the logs will be stored.

- The name of the log files, ensuring greater control and organization.

- The output file format, with support for .log, .txt and .json.

-

Encoding Configuration

Support different types of encoding for log files, such as:

- UTF-8 (recommended standard).

- UTF-7, ANSI Code Page (ACP) or others, as needed.

-

Library Error Reporting

The library must include a system to identify and report errors in its own execution:

- Error messages displayed directly in the terminal console.

- Clear information to facilitate diagnosis and problem resolution.

Working with files in MQL5

In MQL5, dealing with files requires a basic understanding of how the language handles these operations. If you want to really delve into the intricate operations of reading, writing, and using flags, I can't help but recommend reading the article MQL5 Programming Basics: Files by Dmitry Fedoseev. It provides a complete and detailed overview of the topic, in a way that will transform complexity into something clear, without losing depth.

But what we're looking for here is something a little more direct and objective. We won't get lost in the minute details, because my mission is to teach you the essentials: opening, manipulating, and closing files in a simple and practical way.

-

Understanding File Directories in MQL5

In MQL5, all files handled by the standard file functions are stored in the MQL5/Files folder, which is located inside the terminal data folder (in the Strategy Tester, the equivalent folder of the testing agent is used instead). This means that when working with files in MQL5, you only need to specify the path relative to this base folder, without including the full path. For example, when saving to logs/expert.log, the full path will be:

<terminal folder>/MQL5/Files/logs/expert.log

-

Creating and Opening Files

The function for opening or creating files is FileOpen(). Its mandatory arguments are the file path (relative to MQL5/Files) and the flags that determine how the file will be handled. The flags we will use are:

- FILE_READ: Allows opening the file for reading.

- FILE_WRITE: Allows opening the file for writing.

- FILE_ANSI: Specifies that the content will be written using strings in ANSI format (each character occupies one byte).

A useful feature of MQL5 is that, when FILE_READ and FILE_WRITE are combined, FileOpen() creates the file if it does not exist and keeps the existing content if it does. This eliminates the need for manual existence checks.

-

Closing the file

Finally, when you finish working with the file, call the FileClose() function to release its handle.

Here is a practical example of how to open (or create) and close a file in MQL5:

    int OnInit()
      {
       //--- Open the file and store the handle
       int handle_file = FileOpen("logs\\expert.log", FILE_READ|FILE_WRITE|FILE_ANSI, '\t', CP_UTF8);

       //--- If opening fails, display an error in the terminal log
       if(handle_file == INVALID_HANDLE)
         {
          Print("[ERROR] Unable to open log file. Ensure the directory exists and is writable. (Code: "+IntegerToString(GetLastError())+")");
          return(INIT_FAILED);
         }

       //--- Close file
       FileClose(handle_file);
       return(INIT_SUCCEEDED);
      }

Now that we have opened the file, it is time to learn how to write to it.

- Positioning the Write Pointer: Before writing, we need to define where the data will be inserted. We use the FileSeek() function to position the write pointer at the end of the file. This avoids overwriting existing content.

- Writing Data: The FileWrite() function writes strings to the file. There is no need to add “\n” to break the line: FileWrite() ends each call with a line break, so the next write automatically starts on a new line.

Here is how to do this in practice:

    int OnInit()
      {
       //--- Open the file and store the handle
       int handle_file = FileOpen("logs\\expert.log", FILE_READ|FILE_WRITE|FILE_ANSI, '\t', CP_UTF8);

       //--- If opening fails, display an error in the terminal log
       if(handle_file == INVALID_HANDLE)
         {
          Print("[ERROR] Unable to open log file. Ensure the directory exists and is writable. (Code: "+IntegerToString(GetLastError())+")");
          return(INIT_FAILED);
         }

       //--- Move the write pointer to the end of the file
       FileSeek(handle_file, 0, SEEK_END);

       //--- Write the content to the file
       FileWrite(handle_file, "[2025-01-02 12:35:27] DEBUG (CTradeManager): Order sent successfully, server responded in 32ms");

       //--- Close file
       FileClose(handle_file);
       return(INIT_SUCCEEDED);
      }

After running the code, you will see a file created in the Files folder. The full path will be something like:

<Terminal folder>/MQL5/Files/logs/expert.log

If you open the file, you will see exactly what we wrote:

[2025-01-02 12:35:27] DEBUG (CTradeManager): Order sent successfully, server responded in 32ms
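To find the file on disk, the relative path can be combined with the terminal data folder. The script below is only a sketch: it assumes it is run on a chart (not in the Strategy Tester) and that the file was written to logs\expert.log as in the example above.

```mql5
//--- Script sketch: print where "logs\\expert.log" is expected on disk
void OnStart()
  {
   string relative_path = "logs\\expert.log";

   //--- TERMINAL_DATA_PATH is the data folder that contains the MQL5/Files sandbox
   string full_path = TerminalInfoString(TERMINAL_DATA_PATH) + "\\MQL5\\Files\\" + relative_path;

   //--- FileIsExist() takes the same relative path that FileOpen() uses
   if(FileIsExist(relative_path))
      Print("Log file found at: ", full_path);
   else
      Print("Log file not found; expected at: ", full_path);
  }
```

The same folder can also be opened from MetaEditor through File > Open Data Folder.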

Now that we have learned how to handle files in a simple way in MQL5, let's add this work to the handler class responsible for saving files, CLogifyHandlerFile.

Creating the CLogifyHandlerFile class configurations

Now, let's detail how we can configure this class to handle file rotation efficiently. But what exactly does “file rotation” mean? Rotation is an essential practice that prevents a single log file from growing indefinitely. Without it, data accumulates in one file until it becomes slow to open, difficult to handle and almost impossible to analyze.

Imagine this scenario: an Expert Advisor running for weeks or months, recording every event, error or notification in the same file. Soon, that log starts to reach considerable sizes, making reading and interpreting information quite complex. This is where rotation comes in. It allows us to divide this information into smaller and organized pieces, making everything much easier to read and analyze.

The two most common ways to do this are:

- By Size: You set a size limit, usually in megabytes (MB), for the log file. This approach is practical when the focus is on controlling log growth without being tied to a calendar. As soon as the current file reaches the limit, the following flow occurs: the files already archived in the directory are renumbered (for example, "log1.log" becomes "log2.log"), the current log file is renamed with an index (such as "log1.log"), and a new file is created so the cycle can start over. If the number of files reaches the maximum allowed, the oldest files are deleted. This limits both the space occupied by the logs and the number of files saved.

- By Date: In this case, a new log file is created every day. Each one has the date it was created in its name, for example log_2025-01-19.log, which solves most of the headache of organizing logs. This approach is perfect when you need to look at a particular day without getting lost in a single gigantic file. This is the method I use most when saving the logs of my Expert Advisors: everything is cleaner, more direct and easier to navigate.

In addition, you can limit the number of log files stored. This control prevents old logs from accumulating on disk unnecessarily. For example, if you configure the system to keep the 30 most recent files, then when the 31st appears the oldest one is automatically discarded and only the most recent ones are kept.
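The size-based flow described above can be sketched with the standard file functions. This is an illustration only, not the library's implementation: the helper name RotateBySize(), its parameters and the "expert1.log" naming scheme are assumptions made for the example.

```mql5
//--- Hypothetical helper: rotates "<dir>\<base>.log" once it reaches max_size_mb.
//--- Archives are named <base>1.log (newest) to <base><max_files>.log (oldest).
bool RotateBySize(const string dir, const string base, const ulong max_size_mb, const int max_files)
  {
   string current = dir + "\\" + base + ".log";

   //--- Nothing to rotate if the active file does not exist yet
   if(!FileIsExist(current))
      return(false);

   //--- Measure the active file
   int handle = FileOpen(current, FILE_READ|FILE_BIN);
   if(handle == INVALID_HANDLE)
      return(false);
   ulong size_bytes = FileSize(handle);
   FileClose(handle);
   if(size_bytes < max_size_mb * 1024 * 1024)
      return(false);

   //--- Delete the oldest archive, then shift the remaining ones up by one index
   string oldest = dir + "\\" + base + IntegerToString(max_files) + ".log";
   if(FileIsExist(oldest))
      FileDelete(oldest);
   for(int i = max_files - 1; i >= 1; i--)
     {
      string src = dir + "\\" + base + IntegerToString(i) + ".log";
      string dst = dir + "\\" + base + IntegerToString(i + 1) + ".log";
      if(FileIsExist(src))
         FileMove(src, 0, dst, FILE_REWRITE);
     }

   //--- The active file becomes archive 1; a new active file is created on the next write
   return(FileMove(current, 0, dir + "\\" + base + "1.log", FILE_REWRITE));
  }

void OnStart()
  {
   if(RotateBySize("logs", "expert", 5, 10))
      Print("Log file rotated");
  }
```

In this sketch max_files counts archived files only, so the active file is kept in addition to them. A real handler would also need to decide when to run the check, for example before each write or each flush.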

Another crucial detail is the use of a cache. Instead of writing each message directly to the file as soon as it arrives, the messages are temporarily stored in the cache. When the cache reaches a set limit, all of its contents are written to the file at once. This results in fewer open, write and close operations on disk and therefore better performance.
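As a rough illustration of the idea (the library's own cache is not shown in this article), the sketch below collects lines in a dynamic array and writes them in a single file session. The names g_buffer, g_flush_threshold, BufferedLog() and FlushBuffer() are hypothetical.

```mql5
//--- Hypothetical buffer: collects lines and writes them in one file session
string g_buffer[];                  // pending lines
int    g_flush_threshold = 100;     // plays the role of messages_per_flush

//--- Write every pending line to the file and empty the buffer
void FlushBuffer(const string path)
  {
   int total = ArraySize(g_buffer);
   if(total == 0)
      return;

   int handle = FileOpen(path, FILE_READ|FILE_WRITE|FILE_ANSI, '\t', CP_UTF8);
   if(handle == INVALID_HANDLE)
     {
      Print("Unable to open '", path, "' (Code: ", GetLastError(), ")");
      return;
     }
   FileSeek(handle, 0, SEEK_END);
   for(int i = 0; i < total; i++)
      FileWrite(handle, g_buffer[i]);
   FileClose(handle);
   ArrayResize(g_buffer, 0);
  }

//--- Store a line and flush once the threshold is reached
void BufferedLog(const string path, const string line)
  {
   int size = ArraySize(g_buffer);
   ArrayResize(g_buffer, size + 1);
   g_buffer[size] = line;
   if(size + 1 >= g_flush_threshold)
      FlushBuffer(path);
  }

void OnStart()
  {
   for(int i = 0; i < 250; i++)
      BufferedLog("logs\\buffered.log", "message " + IntegerToString(i));
   FlushBuffer("logs\\buffered.log");   // write whatever is still pending
  }
```

The trade-off is that lines still in memory are lost if the program stops before a flush, so an Expert Advisor using this approach would normally flush once more in OnDeinit().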

Now that we understand the concept of file rotation, let's create a structure called MqlLogifyHandleFileConfig to store all the configurations for the CLogifyHandlerFile class. This structure will be responsible for holding the parameters that define how logs will be managed.

The first part of the structure will involve defining enums for the rotation types and file extensions to be used:

    //+------------------------------------------------------------------+
    //| ENUMS for log rotation and file extension                        |
    //+------------------------------------------------------------------+
    enum ENUM_LOG_ROTATION_MODE
      {
       LOG_ROTATION_MODE_NONE = 0,    // No rotation
       LOG_ROTATION_MODE_DATE,        // Rotate based on date
       LOG_ROTATION_MODE_SIZE,        // Rotate based on file size
      };
    enum ENUM_LOG_FILE_EXTENSION
      {
       LOG_FILE_EXTENSION_TXT = 0,    // .txt file
       LOG_FILE_EXTENSION_LOG,        // .log file
       LOG_FILE_EXTENSION_JSON,       // .json file
      };

The MqlLogifyHandleFileConfig structure itself will contain the following parameters:

- directory: Directory where the log files will be stored.

- base_filename: Base file name, without the extension.

- file_extension: Log file extension type (such as .txt, .log, or .json).

- rotation_mode: File rotation mode.

- messages_per_flush: Number of log messages to cache before writing them to the file.

- codepage: Encoding used for the log files (such as UTF-8 or ANSI).

- max_file_size_mb: Maximum size of each log file, if rotation is based on size.

- max_file_count: Maximum number of log files to keep before deleting the oldest ones.

In addition to the constructors and destructors, I will add auxiliary methods to the structure to configure each of the rotation modes, designed to make the configuration process more practical and, above all, reliable. These methods are not there just for the sake of elegance, they ensure that no critical detail is overlooked during the configuration.

For example, if the rotation mode is set to by date (LOG_ROTATION_MODE_DATE), configuring the max_file_size_mb attribute makes no sense, because this parameter is only relevant in the by size mode (LOG_ROTATION_MODE_SIZE). The role of these methods is to avoid inconsistencies like this, protecting the system against invalid configurations.

If, by chance, an essential parameter is not specified, the system takes action. It can automatically fill in a default value, issuing a warning to the developer, thus ensuring that the flow is robust and without room for unpleasant surprises.

The auxiliary methods that we will implement are:

- CreateNoRotationConfig(): Configuration without rotation (all logs go to the same file).

- CreateDateRotationConfig(): Configuration for rotation based on dates.

- CreateSizeRotationConfig(): Configuration for rotation based on file size.

- ValidateConfig(): Method that validates whether all configurations are correct and ready to use. It is called automatically by the class, not by the developer using the library.

Here is the complete implementation of the structure:

    //+------------------------------------------------------------------+
    //| Struct: MqlLogifyHandleFileConfig                                |
    //+------------------------------------------------------------------+
    struct MqlLogifyHandleFileConfig
      {
       string                  directory;          // Directory for log files
       string                  base_filename;      // Base file name
       ENUM_LOG_FILE_EXTENSION file_extension;     // File extension type
       ENUM_LOG_ROTATION_MODE  rotation_mode;      // Rotation mode
       int                     messages_per_flush; // Messages before flushing
       uint                    codepage;           // Encoding (e.g., UTF-8, ANSI)
       ulong                   max_file_size_mb;   // Max file size in MB for rotation
       int                     max_file_count;     // Max number of files before deletion

       //--- Default constructor
       MqlLogifyHandleFileConfig(void)
         {
          directory = "logs";                      // Default directory
          base_filename = "expert";                // Default base name
          file_extension = LOG_FILE_EXTENSION_LOG; // Default to .log extension
          rotation_mode = LOG_ROTATION_MODE_SIZE;  // Default size-based rotation
          messages_per_flush = 100;                // Default flush threshold
          codepage = CP_UTF8;                      // Default UTF-8 encoding
          max_file_size_mb = 5;                    // Default max file size in MB
          max_file_count = 10;                     // Default max file count
         }

       //--- Destructor
       ~MqlLogifyHandleFileConfig(void)
         {
         }

       //--- Create configuration for no rotation
       void CreateNoRotationConfig(string base_name="expert", string dir="logs", ENUM_LOG_FILE_EXTENSION extension=LOG_FILE_EXTENSION_LOG, int msg_per_flush=100, uint cp=CP_UTF8)
         {
          directory = dir;
          base_filename = base_name;
          file_extension = extension;
          rotation_mode = LOG_ROTATION_MODE_NONE;
          messages_per_flush = msg_per_flush;
          codepage = cp;
         }

       //--- Create configuration for date-based rotation
       void CreateDateRotationConfig(string base_name="expert", string dir="logs", ENUM_LOG_FILE_EXTENSION extension=LOG_FILE_EXTENSION_LOG, int max_files=10, int msg_per_flush=100, uint cp=CP_UTF8)
         {
          directory = dir;
          base_filename = base_name;
          file_extension = extension;
          rotation_mode = LOG_ROTATION_MODE_DATE;
          messages_per_flush = msg_per_flush;
          codepage = cp;
          max_file_count = max_files;
         }

       //--- Create configuration for size-based rotation
       void CreateSizeRotationConfig(string base_name="expert", string dir="logs", ENUM_LOG_FILE_EXTENSION extension=LOG_FILE_EXTENSION_LOG, ulong max_size=5, int max_files=10, int msg_per_flush=100, uint cp=CP_UTF8)
         {
          directory = dir;
          base_filename = base_name;
          file_extension = extension;
          rotation_mode = LOG_ROTATION_MODE_SIZE;
          messages_per_flush = msg_per_flush;
          codepage = cp;
          max_file_size_mb = max_size;
          max_file_count = max_files;
         }

       //--- Validate configuration
       bool ValidateConfig(string &error_message)
         {
          //--- Saves the return value
          bool is_valid = true;

          //--- Start with an empty message; it is overwritten by each error found,
          //--- so the caller receives the last error that was corrected
          error_message = "";

          //--- Check if the directory is not empty
          if(directory == "")
            {
             directory = "logs";
             error_message = "The directory cannot be empty.";
             is_valid = false;
            }

          //--- Check if the base filename is not empty
          if(base_filename == "")
            {
             base_filename = "expert";
             error_message = "The base filename cannot be empty.";
             is_valid = false;
            }

          //--- Check if the number of messages per flush is positive
          if(messages_per_flush <= 0)
            {
             messages_per_flush = 100;
             error_message = "The number of messages per flush must be greater than zero.";
             is_valid = false;
            }

          //--- Check if the codepage is valid (verify against expected values)
          if(codepage != CP_ACP && codepage != CP_MACCP && codepage != CP_OEMCP && codepage != CP_SYMBOL && codepage != CP_THREAD_ACP && codepage != CP_UTF7 && codepage != CP_UTF8)
            {
             codepage = CP_UTF8;
             error_message = "The specified codepage is invalid.";
             is_valid = false;
            }

          //--- Validate limits for size-based rotation
          if(rotation_mode == LOG_ROTATION_MODE_SIZE)
            {
             if(max_file_size_mb <= 0)
               {
                max_file_size_mb = 5;
                error_message = "The maximum file size (in MB) must be greater than zero.";
                is_valid = false;
               }
             if(max_file_count <= 0)
               {
                max_file_count = 10;
                error_message = "The maximum number of files must be greater than zero.";
                is_valid = false;
               }
            }

          //--- Validate limits for date-based rotation
          if(rotation_mode == LOG_ROTATION_MODE_DATE)
            {
             if(max_file_count <= 0)
               {
                max_file_count = 10;
                error_message = "The maximum number of files for date-based rotation must be greater than zero.";
                is_valid = false;
               }
            }

          //--- Return the final status
          return(is_valid);
         }
      };
    //+------------------------------------------------------------------+

An interesting detail that I want to highlight here is how the ValidateConfig() function works. When analyzing this function, notice something interesting: when it detects an error in some configuration value, it is not as if it immediately returns false, saying that something went wrong. It acts first, taking corrective measures to solve the problem automatically, all this before actually returning a definitive result.

First, it resets the invalid value, putting it back to its default value. This, in a way, temporarily “fixes” the configuration, without letting the error prevent the program process from following its flow. Then, so as not to leave the situation unexplained, the function assigns a detailed message, clearly indicating where the error occurred and what needs to be adjusted. And, finally, the function marks the variable called is_valid as false , signaling that something went wrong. Only at the end, after taking all these measures, does it return this variable, with the final status, which will tell whether the configuration passed and is valid or not.

But what makes this even more interesting is how the function handles multiple errors. If there is more than one incorrect value at the same time, it does not focus on correcting the first error that appears, leaving the others for later. On the contrary, it goes after them all at once, correcting everything at the same time. At the end, the function returns the message explaining which was the last error corrected, ensuring that nothing is left out.

This type of approach is valuable and helps the developer's work. During the development of a system, it is common for some values to be defined incorrectly or by mistake. The beauty here is that the function has an extra layer of security, correcting errors automatically, without waiting for the programmer to notice them one by one. After all, small errors, if left untreated, can cause bigger failures – such as, for example, the failure to save log records. This automation in error handling that I created ends up preventing small failures from interrupting the system's operation, helping to keep everything running.
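A short script can make this behavior visible. It is a sketch that assumes the library is installed so that the include path used later in this article resolves; the invalid values are chosen on purpose.

```mql5
#include <Logify/Logify.mqh>

void OnStart()
  {
   MqlLogifyHandleFileConfig config;

   //--- Deliberately invalid values: empty directory and zero messages per flush
   config.CreateDateRotationConfig("expert", "", LOG_FILE_EXTENSION_LOG, 10, 0);

   //--- Normally SetConfig() calls this; here it is called directly for inspection
   string err_msg = "";
   bool is_valid = config.ValidateConfig(err_msg);

   //--- Show the status and the values after validation
   Print("valid=", is_valid,
         " directory='", config.directory, "'",
         " messages_per_flush=", config.messages_per_flush);
  }
```

Going by the implementation of ValidateConfig(), both fields should come back with their defaults ("logs" and 100) while the returned status is false, which is the "correct first, report afterwards" behavior described above.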

Implementing the CLogifyHandlerFile class

The class itself was already created in the last article, so here we will only modify it to make it functional. I will detail each adjustment so that you understand how everything works.

In the private scope of the class, we add variables and some important auxiliary methods:

- Configuration: We create a variable m_config of type MqlLogifyHandleFileConfig to store the settings related to the logging system.

- I also implemented the methods SetConfig() and GetConfig() to define and access the class settings.

Here is the initial structure of the class, with the basic definitions and methods:

    //+------------------------------------------------------------------+
    //| class : CLogifyHandlerFile                                       |
    //|                                                                  |
    //| [PROPERTY]                                                       |
    //| Name        : CLogifyHandlerFile                                 |
    //| Heritage    : CLogifyHandler                                     |
    //| Description : Log handler, inserts data into file, supports      |
    //|               rotation modes.                                    |
    //|                                                                  |
    //+------------------------------------------------------------------+
    class CLogifyHandlerFile : public CLogifyHandler
      {
    private:
       //--- Config
       MqlLogifyHandleFileConfig m_config;

    public:
       //--- Configuration management
       void              SetConfig(MqlLogifyHandleFileConfig &config);
       MqlLogifyHandleFileConfig GetConfig(void);
      };
    //+------------------------------------------------------------------+
    //| Set configuration                                                |
    //+------------------------------------------------------------------+
    void CLogifyHandlerFile::SetConfig(MqlLogifyHandleFileConfig &config)
      {
       m_config = config;

       //--- Validate
       string err_msg = "";
       if(!m_config.ValidateConfig(err_msg))
         {
          Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: "+err_msg);
         }
      }
    //+------------------------------------------------------------------+
    //| Get configuration                                                |
    //+------------------------------------------------------------------+
    MqlLogifyHandleFileConfig CLogifyHandlerFile::GetConfig(void)
      {
       return(m_config);
      }
    //+------------------------------------------------------------------+

I will list the helper methods and explain in more detail how they work. I have implemented three useful methods that will be used in file management:

-

LogFileExtensionToStr(): Converts the value of the ENUM_LOG_FILE_EXTENSION enum to a string representing the file extension. The enum defines the possible values for the file type, such as .log, .txt, and .json.

    //+------------------------------------------------------------------+
    //| Convert log file extension enum to string                        |
    //+------------------------------------------------------------------+
    string CLogifyHandlerFile::LogFileExtensionToStr(ENUM_LOG_FILE_EXTENSION file_extension)
      {
       switch(file_extension)
         {
          case LOG_FILE_EXTENSION_LOG:
             return(".log");
          case LOG_FILE_EXTENSION_TXT:
             return(".txt");
          case LOG_FILE_EXTENSION_JSON:
             return(".json");
         }

       //--- Default return
       return(".txt");
      }
    //+------------------------------------------------------------------+

-

LogPath(): This function is responsible for generating the full path of the log file based on the current class settings. First, it converts the configured file extension using the LogFileExtensionToStr() function. Then, it checks the configured rotation type. If the rotation is based on the file size or there is no rotation, it returns only the file name in the configured directory. If the rotation is based on the date, it includes the current date (YYYY-MM-DD format) as a prefix in the file name.

    //+------------------------------------------------------------------+
    //| Generate log file path based on config                           |
    //+------------------------------------------------------------------+
    string CLogifyHandlerFile::LogPath(void)
      {
       string file_extension = this.LogFileExtensionToStr(m_config.file_extension);
       string base_name = m_config.base_filename + file_extension;

       if(m_config.rotation_mode == LOG_ROTATION_MODE_SIZE || m_config.rotation_mode == LOG_ROTATION_MODE_NONE)
         {
          //--- "\\" is a single backslash path separator
          return(m_config.directory + "\\" + base_name);
         }
       else if(m_config.rotation_mode == LOG_ROTATION_MODE_DATE)
         {
          //--- Prefix the file name with the current date (YYYY-MM-DD)
          MqlDateTime date;
          TimeCurrent(date);
          string date_str = IntegerToString(date.year) + "-" + IntegerToString(date.mon, 2, '0') + "-" + IntegerToString(date.day, 2, '0');
          base_name = date_str + (m_config.base_filename != "" ? "-" + m_config.base_filename : "") + file_extension;
          return(m_config.directory + "\\" + base_name);
         }

       //--- Default return
       return(base_name);
      }
    //+------------------------------------------------------------------+

The Emit() method is responsible for writing log messages to a file. In the current code, it simply displays the logs in the terminal console. Let's improve this so that it opens the log file, adds a new line with the formatted data, and closes the file after writing. If the file cannot be opened, an error message will be displayed in the console.

    void CLogifyHandlerFile::Emit(MqlLogifyModel &data)
      {
       //--- Checks if the configured level allows
       if(data.level >= this.GetLevel())
         {
          //--- Get the full path of the file
          string log_path = this.LogPath();

          //--- Open file
          ResetLastError();
          int handle_file = FileOpen(log_path, FILE_READ|FILE_WRITE|FILE_ANSI, '\t', m_config.codepage);
          if(handle_file == INVALID_HANDLE)
            {
             Print("[ERROR] ["+TimeToString(TimeCurrent())+"] Log system error: Unable to open log file '"+log_path+"'. Ensure the directory exists and is writable. (Code: "+IntegerToString(GetLastError())+")");
             return;
            }

          //--- Write
          FileSeek(handle_file, 0, SEEK_END);
          FileWrite(handle_file, data.formated);

          //--- Close file
          FileClose(handle_file);
         }
      }

We now have the simplest version of the class that adds logs to a file. Let's perform some simple tests to verify that the basics are working correctly.

First test with files

We will use the test file that we have already used in the previous examples, LogifyTest.mqh . The goal is to configure the logging system to save records in files, using the CLogify base class and the file handler that we have just implemented.

- We create a variable of type MqlLogifyHandleFileConfig to store the specific settings of the file handler.

- We configure the handler to use the desired format and rules, such as file rotation by size.

- We add this handler to the CLogify base class.

- We configure a formatter to determine how each record will be displayed in the file.

See the complete code:

    //+------------------------------------------------------------------+
    //| Import CLogify                                                   |
    //+------------------------------------------------------------------+
    #include <Logify/Logify.mqh>
    CLogify logify;
    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Configs
       MqlLogifyHandleFileConfig m_config;
       m_config.CreateSizeRotationConfig("expert","logs",LOG_FILE_EXTENSION_LOG,5,5,10);

       //--- Handler File
       CLogifyHandlerFile *handler_file = new CLogifyHandlerFile();
       handler_file.SetConfig(m_config);
       handler_file.SetLevel(LOG_LEVEL_DEBUG);

       //--- Add handler in base class
       logify.AddHandler(handler_file);
       logify.SetFormatter(new CLogifyFormatter("hh:mm:ss","{date_time} [{levelname}] {msg}"));

       //--- Using logs
       logify.Debug("RSI indicator value calculated: 72.56", "Indicators", "Period: 14");
       logify.Infor("Buy order sent successfully", "Order Management", "Symbol: EURUSD, Volume: 0.1");
       logify.Alert("Stop Loss adjusted to breakeven level", "Risk Management", "Order ID: 12345678");
       logify.Error("Failed to send sell order", "Order Management", "Reason: Insufficient balance");
       logify.Fatal("Failed to initialize EA: Invalid settings", "Initialization", "Missing or incorrect parameters");

       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+

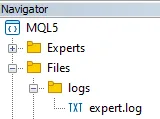

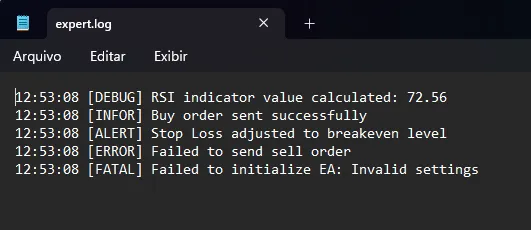

When you run the above code, a new log file will be created in the configured directory ( logs ). It can be viewed in the file browser.

When opening the file in Notepad or any text editor, we will see the content generated by the message tests:

Before moving on to the improvements, I'm going to run a performance test so that we have a baseline to compare against later and can measure how much the improvements actually help. Inside the OnTick() function I'm going to add a record to the log, so that with each new tick the log file is opened, written and closed.

    //+------------------------------------------------------------------+
    //| Expert tick function                                             |
    //+------------------------------------------------------------------+
    void OnTick()
      {
       //--- Logs
       logify.Debug("Debug Message");
      }
    //+------------------------------------------------------------------+

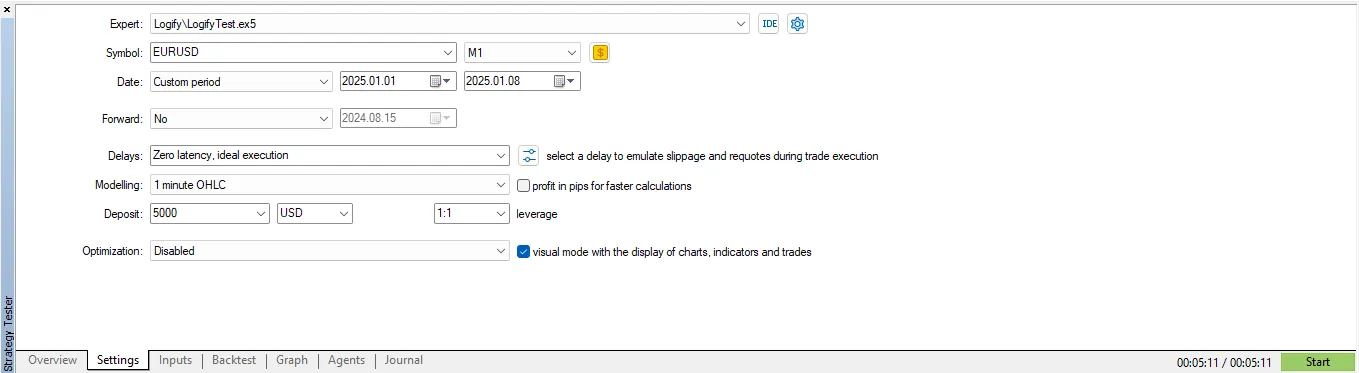

I will use the strategy tester to perform this test, even in the backtest the file creation system works normally, but the files are saved in another folder, later I will show how to access it. The test will be done with the following settings:

Considering the “OHLC for 1 minute” modeling, on the EURUSD symbol with 7 days of testing, it took 5 minutes and 11 seconds to complete the test, considering that at each tick it generates a new log record and saves it to the file immediately.
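For a quick reference number outside the Strategy Tester, the cost of one open, write and close cycle can also be timed in a script. This is a rough sketch, not the benchmark used above: the file name timing.log and the sample size of 1000 are arbitrary choices, and results depend heavily on the disk and the system.

```mql5
//--- Script sketch: average time of one open/write/close cycle
void OnStart()
  {
   const int total = 1000;                  // arbitrary sample size
   ulong start = GetMicrosecondCount();

   for(int i = 0; i < total; i++)
     {
      //--- Same sequence that Emit() performs for every message
      int handle = FileOpen("logs\\timing.log", FILE_READ|FILE_WRITE|FILE_ANSI, '\t', CP_UTF8);
      if(handle == INVALID_HANDLE)
        {
         Print("Unable to open file (Code: ", GetLastError(), ")");
         return;
        }
      FileSeek(handle, 0, SEEK_END);
      FileWrite(handle, "Debug Message");
      FileClose(handle);
     }

   ulong elapsed = GetMicrosecondCount() - start;
   Print("Average per message (microseconds): ", elapsed / (ulong)total);
  }
```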

Testing with JSON files

Finally, I want to show the use of JSON log files in practice, as they can be useful in some specific scenarios. To save as JSON, just change the file type in the settings and define a formatter that produces valid JSON. Here is an implementation example:

    //+------------------------------------------------------------------+
    //| Import CLogify                                                   |
    //+------------------------------------------------------------------+
    #include <Logify/Logify.mqh>
    CLogify logify;
    //+------------------------------------------------------------------+
    //| Expert initialization function                                   |
    //+------------------------------------------------------------------+
    int OnInit()
      {
       //--- Configs
       MqlLogifyHandleFileConfig m_config;
       m_config.CreateSizeRotationConfig("expert","logs",LOG_FILE_EXTENSION_JSON,5,5,10);

       //--- Handler File
       CLogifyHandlerFile *handler_file = new CLogifyHandlerFile();
       handler_file.SetConfig(m_config);
       handler_file.SetLevel(LOG_LEVEL_DEBUG);

       //--- Add handler in base class
       logify.AddHandler(handler_file);

       //--- Quotes inside the format string are escaped with a single backslash
       logify.SetFormatter(new CLogifyFormatter("hh:mm:ss","{\"datetime\":\"{date_time}\", \"level\":\"{levelname}\", \"msg\":\"{msg}\"}"));

       //--- Using logs
       logify.Debug("RSI indicator value calculated: 72.56", "Indicators", "Period: 14");
       logify.Infor("Buy order sent successfully", "Order Management", "Symbol: EURUSD, Volume: 0.1");
       logify.Alert("Stop Loss adjusted to breakeven level", "Risk Management", "Order ID: 12345678");
       logify.Error("Failed to send sell order", "Order Management", "Reason: Insufficient balance");
       logify.Fatal("Failed to initialize EA: Invalid settings", "Initialization", "Missing or incorrect parameters");

       return(INIT_SUCCEEDED);
      }
    //+------------------------------------------------------------------+

With the same log messages, this is the resulting file after running the expert on the chart:

{"datetime":"08:24:10", "level":"DEBUG", "msg":"RSI indicator value calculated: 72.56"}

{"datetime":"08:24:10", "level":"INFOR", "msg":"Buy order sent successfully"}

{"datetime":"08:24:10", "level":"ALERT", "msg":"Stop Loss adjusted to breakeven level"}

{"datetime":"08:24:10", "level":"ERROR", "msg":"Failed to send sell order"}

{"datetime":"08:24:10", "level":"FATAL", "msg":"Failed to initialize EA: Invalid settings"}
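One caveat with a template-based JSON formatter: the placeholders are substituted as plain text. A message that itself contains a double quote or a backslash could therefore produce an invalid JSON line. Whether CLogifyFormatter escapes these characters is not shown here, so treat the following only as a sketch. `EscapeJsonString` is a hypothetical helper, not part of the Logify library, that escapes a message before it is handed to the logger.

```mql5
//+------------------------------------------------------------------+
//| Hypothetical helper (not part of Logify): escapes characters     |
//| that would break a JSON string value                             |
//+------------------------------------------------------------------+
string EscapeJsonString(string text)
  {
   //--- Backslashes first, so the escapes added below are not doubled
   StringReplace(text,"\\","\\\\");
   StringReplace(text,"\"","\\\"");
   StringReplace(text,"\n","\\n");
   StringReplace(text,"\r","\\r");
   StringReplace(text,"\t","\\t");
   return(text);
  }
//+------------------------------------------------------------------+
//| Script entry point: prints an escaped sample message             |
//+------------------------------------------------------------------+
void OnStart()
  {
   string raw = "Order comment: \"breakeven\" applied";
   Print(EscapeJsonString(raw));
  }
```

The escaped string can then be passed as the message argument of calls such as `logify.Infor`, so that each line in the file remains a parseable JSON object.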

Conclusion

In this article, we presented a practical and detailed guide to basic file operations: opening a file, manipulating its content and, finally, closing it. I also discussed the importance of the handler's configuration structure. Through this configuration, it is possible to adapt several characteristics, such as the type of file to be used (for example, text, log or even json) and the directory in which the file will be saved, which makes the library very flexible.

In addition, we made specific improvements to the CLogifyHandlerFile class, which now writes each message directly to a log file. After this implementation, I also ran a performance test to measure the efficiency of the solution. The scenario simulated the execution of a trading strategy on EURUSD over a period of one week, generating a log record for each new market tick. This process is extremely intensive, since every change in the asset price requires a new line to be saved in the file.

The entire process took 5 minutes and 11 seconds to complete. This result will serve as the baseline for the next article, where we will implement a cache system (temporary memory). The purpose of the cache is to hold records temporarily, eliminating the need to access the file on every record and improving overall performance.

Stay tuned for the next article, where we will explore even more advanced techniques to increase system efficiency and performance. See you there!

| File Name | Description |
|---|---|
| Experts/Logify/LogiftTest.mq5 | File where we test the library's features, containing a practical example |
| Include/Logify/Formatter/LogifyFormatter.mqh | Class responsible for formatting log records, replacing placeholders with specific values |
| Include/Logify/Handlers/LogifyHandler.mqh | Base class for managing log handlers, including level setting and log sending |
| Include/Logify/Handlers/LogifyHandlerConsole.mqh | Log handler that sends formatted logs directly to the terminal console in MetaTrader |
| Include/Logify/Handlers/LogifyHandlerDatabase.mqh | Log handler that sends formatted logs to a database (Currently it only contains a printout, but soon we will save it to a real sqlite database) |
| Include/Logify/Handlers/LogifyHandlerFile.mqh | Log handler that sends formatted logs to a file |
| Include/Logify/Logify.mqh | Core class for log management, integrating levels, models and formatting |
| Include/Logify/LogifyLevel.mqh | File that defines the log levels of the Logify library, allowing for detailed control |
| Include/Logify/LogifyModel.mqh | Structure that models log records, including details such as level, message, timestamp, and context |

Warning: All rights to these materials are reserved by MetaQuotes Ltd. Copying or reprinting of these materials in whole or in part is prohibited.

This article was written by a user of the site and reflects their personal views. MetaQuotes Ltd is not responsible for the accuracy of the information presented, nor for any consequences resulting from the use of the solutions, strategies or recommendations described.


Author: joaopedrodev