From Novice to Expert: Mastering Detailed Trading Reports with Reporting EA

Contents:

- Introduction

- Overview of the Reporting EA update

- Updating the Reporting EA code

- Updating the reports_processor Python script

- Testing

- Conclusion

- Key Lessons

- Attachments

Introduction

Today’s discussion focuses on addressing the challenges associated with delivering trading reports in MetaTrader 5. In our previous publication, we outlined the overall workflow and requirements for the system to function effectively. We introduced the Reporting EA as a tool designed to generate and deliver trading reports in PDF format, with a frequency that can be customized by the user.

In this follow-up, our goal is to build upon that foundation by enhancing both the depth of information contained in the reports and the efficiency of their delivery. This improvement is made possible through the integration of MQL5 with powerful Python libraries, enabling richer outputs and smoother automation. Along the way, we will uncover valuable lessons on how cross-language integration can be leveraged to create robust and seamless trading solutions.

This topic is worth pursuing once you recognize how impactful detailed reporting can be on a trader’s mindset and decision-making process. In the previous version, the generated PDF included only four data points—a clear limitation and a strong reminder of the need for more comprehensive reporting. Going forward, our reports will incorporate expanded metrics, visualizations, graphs, and multiple analytical components, as outlined in paragraph 2 of the earlier article about understanding the reporting features.

Ultimately, the aim is to deliver a flexible and information-rich reporting tool that every analyst or trader can adapt to their unique trading strategies and workflow.

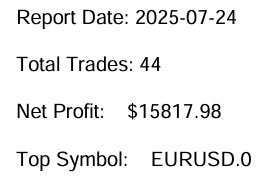

Figure 1: Reports screenshot from the trade report produced by the early version of the Reporting EA

The image above was taken from the PDF report generated by the first version of our reports_processor.py script. The corresponding code snippet is shared below. Today, our focus is on refining this code so that it produces more detailed and insightful reports.

Through this discussion, we will explore additional capabilities that Python can bring to the reporting process and demonstrate how to streamline the interaction between MQL5 and Python for greater efficiency and flexibility.

def generate_pdf(report, output_path): pdf = FPDF() pdf.add_page() pdf.set_font("Arial", size=12) pdf.cell(0, 10, f"Report Date: {report['date']}", ln=True) pdf.cell(0, 10, f"Total Trades: {report['trade_count']}", ln=True) pdf.cell(0, 10, f"Net Profit: ${report['net_profit']:.2f}", ln=True) pdf.cell(0, 10, f"Top Symbol: {report['top_symbol']}", ln=True) pdf.output(output_path)

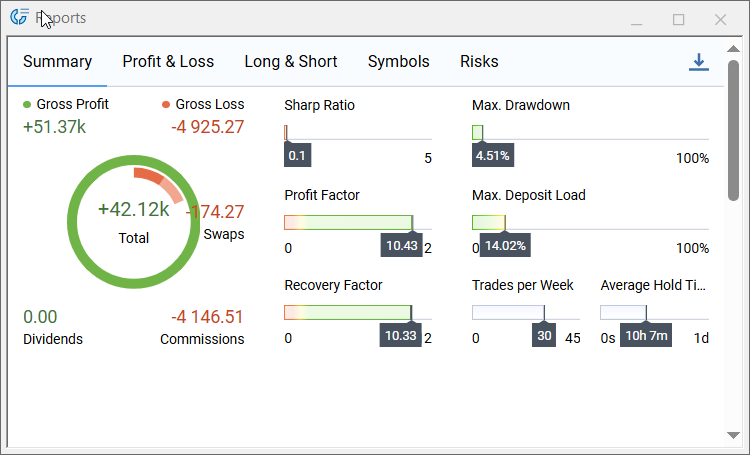

When we compare the reporting features shown in Figure 1 above and Figure 2 below, it becomes clear that there is still significant room for improvement. Our objective is not to reinvent existing solutions, but to provide a customizable and flexible alternative for generating trading reports.

The image from the MetaTrader 5 terminal’s reporting section illustrates the current features, which we will use as a baseline for the enhancements we plan to implement.

Figure 2: MQL5 trading reports features

Overview of the Reporting EA Update

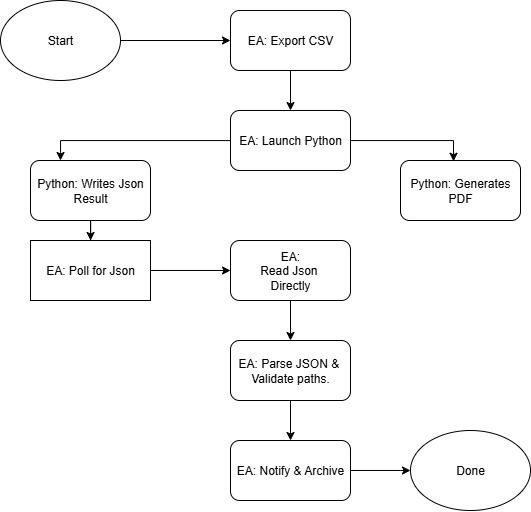

Figure 3 (flow diagram) below presents the processing workflow to be implemented by the Reporting EA update. The EA exports a dated CSV of trading history and launches the Python report generator, which processes the CSV to compute analytics, produce charts, and write the final report (PDF) plus a compact JSON result containing pdf_path and email_sent status. The EA polls for that JSON, reads and parses it, validates the reported output paths and file sizes, and—on successful validation—sends notifications and optionally archives or opens the report to complete the automated reporting cycle.

Figure 3: Process flow chart for the Reporting EA

Updating the Reporting EA code

At this stage we will implement the MQL5 side of the update to deliver high-quality report output. We’ll break the EA into logical sections and explain the code that directly supports our reporting goals. Remember that MQL5 Expert Advisors are not limited to placing trades—they can automate many routine trading tasks, and reporting is a perfect example. Although our current focus is a lightweight, dedicated reporting EA, the same reporting module can be integrated later into a more feature-rich advisor. Designing a specialized, focused EA lets us decompose a large problem into manageable pieces, build and refine the reporting feature in isolation, validate it through smoke tests and targeted debugging, and then plug the polished component into more sophisticated systems with minimal drawbacks.

1. Header, Windows imports, and user inputs

This section declares the EA metadata, imports the minimal Windows API calls we require (to test absolute-file attributes and to launch cmd.exe), and exposes user-configurable inputs. Keep these values accurate for your environment (Python path, script path, output directory) so the EA can validate them at startup. The ReportFormats, EnableEmail, and TestOnInit inputs let you control behavior without code changes.

#property copyright "Clemence Benjamin" #property version "1.01" #property description "Exports trading history in CSV and generates reports via Python" #property strict #define SW_HIDE 0 #define INVALID_FILE_ATTRIBUTES 0xFFFFFFFF #import "kernel32.dll" uint GetFileAttributesW(string lpFileName); #import #import "shell32.dll" int ShellExecuteW(int hwnd, string lpOperation, string lpFile, string lpParameters, string lpDirectory, int nShowCmd); #import //--- User inputs input string PythonPath = "C:\\Users\\YOUR_COMPUTER_NAME\\AppData\\Local\\Programs\\Python\\Python312\\python.exe"; input string ScriptPath = "C:\\Users\\YOUR_COMPUTER_NAME\\PATH_TO\\reports_processor.py"; input string ReportFormats= "html,pdf"; input string OutputDir = "C:\\Users\\YOUR_COMPUTER_NAME\\PATH_TO_WHERE_YOUR_Reports_are_ saved"; input bool EnableEmail = true; input bool TestOnInit = true;

2. Initialization and environment validation (OnInit)

OnInit() warms up the EA, seeds the RNG, cleans the configured paths, logs the terminal files directory, verifies the Python executable and output directory exist, and confirms write permissions to MQL5\Files. When TestOnInit is enabled, it triggers a test run—a helpful smoke check to confirm the end-to-end chain is wired correctly.

int OnInit() { Print(">> Reporting EA initializing…"); MathSrand((uint)TimeLocal()); string cleanPythonPath = CleanPath(PythonPath); string cleanScriptPath = CleanPath(ScriptPath); string cleanOutputDir = CleanPath(OutputDir); Print("PythonPath set to: ", cleanPythonPath); Print("ScriptPath set to: ", cleanScriptPath); Print("OutputDir set to: ", cleanOutputDir); string filesDir = TerminalInfoString(TERMINAL_DATA_PATH) + "\\MQL5\\Files\\"; Print("Terminal Files directory: ", filesDir); if(GetFileAttributesW(cleanPythonPath) == INVALID_FILE_ATTRIBUTES) Print("!! Python executable not found at: ", cleanPythonPath); else Print("✔ Found Python at: ", cleanPythonPath); // Write-permission test (MQL5\\Files) int h = FileOpen("test_perm.txt", FILE_WRITE|FILE_TXT); if(h == INVALID_HANDLE) Print("!! Cannot write to MQL5\\Files directory! Error: ", GetLastError()); else { FileWrite(h, "OK"); FileFlush(h); FileClose(h); FileDelete("test_perm.txt"); Print("✔ Write permission confirmed."); } if(TestOnInit) { Print(">> Test mode: running initial export."); RunDailyExport(); lastRunTime = TimeCurrent(); } return(INIT_SUCCEEDED); }

3. Path utilities and basic helpers

Clean, normalized paths and robust file checks avoid a whole class of runtime errors. CleanPath() normalizes slashes and warns about spaces; FileExists() wraps GetFileAttributesW for absolute paths and falls back to FileOpen for terminal-local files. Keep these helpers central and reuse them throughout the EA.

string CleanPath(string path) { string cleaned = path; StringReplace(cleaned, "/", "\\"); while(StringFind(cleaned,"\\\\")>=0) StringReplace(cleaned, "\\\\", "\\"); StringTrimLeft(cleaned); StringTrimRight(cleaned); return cleaned; } bool FileExists(string path) { if(StringFind(path, "\\", 0) >= 0) { uint attrs = GetFileAttributesW(path); return(attrs != INVALID_FILE_ATTRIBUTES); } else { int fh = FileOpen(path, FILE_READ|FILE_TXT); if(fh == INVALID_HANDLE) return false; FileClose(fh); return true; } }

4. CSV creation and export logic

ExportHistoryToCSV() handles history selection and writes a CSV with a header and per-deal rows. It uses MakeUniqueFilename() to avoid collisions (useful for repeated runs) and returns the chosen filename in the global LastExportedCSV. You want your CSV writer to be deterministic and to include the columns the Python script expects.

string MakeUniqueFilename(string baseName) { int dot = StringFind(baseName, ".", 0); string name = dot >= 0 ? StringSubstr(baseName, 0, dot) : baseName; string ext = dot >= 0 ? StringSubstr(baseName, dot) : ""; string ts = TimeToString(TimeCurrent(), TIME_DATE | TIME_SECONDS); StringReplace(ts, ".", ""); StringReplace(ts, " ", "_"); StringReplace(ts, ":", ""); int suffix = MathAbs(MathRand()) % 9000 + 1000; return name + "_" + ts + "_" + IntegerToString(suffix) + ext; } bool ExportHistoryToCSV(string filename) { datetime end = TimeCurrent(); datetime start = end - 7776000; // 90 days if(!HistorySelect(start, end)) { Print("!! HistorySelect failed."); return false; } int total = HistoryDealsTotal(); if(total == 0) { Print("!! No trading history found."); return false; } int attempts = 3; string tryName = filename; int fh = INVALID_HANDLE; for(int a=0; a<attempts; a++) { fh = FileOpen(tryName, FILE_WRITE|FILE_CSV|FILE_ANSI, ","); if(fh != INVALID_HANDLE) break; tryName = MakeUniqueFilename(filename); Sleep(200); } if(fh == INVALID_HANDLE) { Print("!! FileOpen failed after retries."); return false; } FileWrite(fh, "Ticket,Time,Type,Symbol,Volume,Price,Profit,Commission,Swap,Balance,Equity"); // ... iterate deals and FileWrite rows ... FileFlush(fh); FileClose(fh); LastExportedCSV = tryName; return true; }

5. Python invocation and orchestration (RunDailyExport)

RunDailyExport() is the orchestration hub: it builds the CSV path, launches Python with cmd.exe and redirects stdout/stderr into python_run.log in MQL5\Files so the EA can still inspect the script output. The method then polls for the preferred report_result_YYYY-MM-DD.json (produced by Python) and falls back to PDF polling if JSON isn’t available. This design prioritizes a structured JSON handshake so the EA can reliably confirm success and attachments.

void RunDailyExport() { string filesDir = TerminalInfoString(TERMINAL_DATA_PATH) + "\\MQL5\\Files\\"; string dateStr = TimeToString(TimeCurrent(), TIME_DATE); StringReplace(dateStr, ".", ""); string csvBase = "History_" + dateStr + ".csv"; LastExportedCSV = ""; if(!ExportHistoryToCSV(csvBase)) { Print("!! CSV export failed"); return; } string csvFull = filesDir + LastExportedCSV; string cleanOutputDir = CleanPath(OutputDir); string pyLogName = "python_run.log"; string pyLogFull = filesDir + pyLogName; string pythonCmd = StringFormat("\"%s\" \"%s\" \"%s\" --formats %s --outdir \"%s\" %s > \"%s\" 2>&1", CleanPath(PythonPath), CleanPath(ScriptPath), csvFull, "pdf", cleanOutputDir, EnableEmail ? "--email" : "", pyLogFull); string fullCmd = "/c " + pythonCmd; PrintFormat("→ Launching: cmd.exe %s", fullCmd); int result = ShellExecute(fullCmd); PrintFormat("← ShellExecute returned: %d", result); // Then poll for JSON or fallback to PDF; see next section for JSON handling... }

6. JSON handling and fallback read strategy

To avoid brittle reliance on human-readable logs, the EA prefers a machine-readable JSON result. ReadFileText() attempts to read absolute JSON paths directly; when MetaTrader 5 refuses absolute FileOpen, CopyToFilesAndRead() uses a cmd copy to copy the JSON into MQL5\Files and then reads it with FileOpen. JsonExtractString / JsonExtractBool are small, pragmatic extractors sufficient for the simple JSON contract (pdf_path, email_sent).

string ReadFileText(string fullpath) { if(StringFind(fullpath, "\\", 0) >= 0) { uint attrs = GetFileAttributesW(fullpath); if(attrs == INVALID_FILE_ATTRIBUTES) return(""); int fh = FileOpen(fullpath, FILE_READ|FILE_TXT); if(fh == INVALID_HANDLE) return(""); string txt = ""; while(!FileIsEnding(fh)) { txt += FileReadString(fh); if(!FileIsEnding(fh)) txt += "\n"; } FileClose(fh); return txt; } else { int fh = FileOpen(fullpath, FILE_READ|FILE_TXT); if(fh == INVALID_HANDLE) return(""); // ... read and return ... } } string CopyToFilesAndRead(string absPath, string filesName) { string filesDir = TerminalInfoString(TERMINAL_DATA_PATH) + "\\MQL5\\Files\\"; string destAbs = filesDir + filesName; string cmd = "/c copy /Y \"" + absPath + "\" \"" + destAbs + "\" > nul 2>&1"; int r = ShellExecute(cmd); // wait briefly for copy then read local file return ReadTerminalLogFile(filesName); } string JsonExtractString(string json, string key) { /* tiny extractor: locate "key": "value" */ } bool JsonExtractBool(string json, string key) { /* tiny extractor: locate "key": true/false */ }

7. Verification, notification, and graceful fallback

After parsing the JSON, the EA validates the pdf_path and html_path values with FileExists() and optionally notifies the trader via SendNotification(). If JSON read or validation fails the EA gracefully falls back to tailing python_run.log and polling for the expected Report_YYYY-MM-DD.pdf (size > 0). This layered approach balances reliability (JSON) with robustness (PDF/log fallback).

if(StringLen(pdfp) > 0) { if(FileExists(pdfp)) { PrintFormat("✔ PDF exists at (from JSON): %s", pdfp); SendNotification("Report PDF created: " + pdfp); } else Print("!! JSON says PDF path but file not found: ", pdfp); } // fallback: if JSON unavailable -> poll expectedPdf in OutputDir and check size

8. Support helpers and teardown

Utility routines such as GetFileSizeByOpen() (safe file size check), ReadTerminalLogFile() (read files from MQL5\Files), and the ShellExecute() wrapper are small but important. OnDeinit() prints a message on cleanup. Keep these compact and well-documented; they simplify debugging and reduce duplication.

long GetFileSizeByOpen(string filename) { int fh = FileOpen(filename, FILE_READ|FILE_BIN); if(fh == INVALID_HANDLE) return -1; int pos = FileSeek(fh, 0, SEEK_END); FileClose(fh); return (pos < 0) ? -1 : (long)pos; } string ReadTerminalLogFile(string filename) { int fh = FileOpen(filename, FILE_READ | FILE_TXT); if(fh == INVALID_HANDLE) return(""); string content = ""; while(!FileIsEnding(fh)) { content += FileReadString(fh); if(!FileIsEnding(fh)) content += "\n"; } FileClose(fh); return content; } int ShellExecute(string command) { return ShellExecuteW(0, "open", "cmd.exe", command, NULL, SW_HIDE); }

Updating the reports_processor Python script

The EA works hand-in-hand with the Python reports_processor.py script, so now that we have updated the EA we must adapt the Python side to save and report results in a way that the EA can consume efficiently. In this section we will explain the script code in detail and show exactly how each part integrates with the EA — from CSV ingestion and analytics through PDF rendering and JSON result emission — to ensure a reliable, automatable reporting pipeline.

Before diving into the breakdown of our script code, we first need to set up the Python environment to ensure the system can fully support the script’s functionality. In the following steps, we will walk through the setup process starting from installation. Completing this crucial step gives us confidence that the environment is properly configured, allowing us to seamlessly perform tests during the development of the reports_processor script.

Step-by-Step Guide: Setting Up Python on Windows for the reports_processor Script

1. Install Python 3.9+ from python.org and ensure python is on PATH.

2. Open PowerShell and create + activate venv:

python -m venv venv .\venv\Scripts\Activate.ps1

3. Upgrade pip:

python -m pip install --upgrade pip

4. Install core packages (fpdf is default PDF backend; add others if desired):

pip install pandas numpy jinja2 matplotlib fpdf yagmail

Optional backends:

pip install pdfkit weasyprint

If using wkhtmltopdf, download its Windows installer and add the executable to PATH or set WKHTMLTOPDF_PATH env var.

5. Set environment variables for email (example: set in System Properties → Environment Variables or PowerShell):

setx YAGMAIL_USERNAME "you@example.com" setx YAGMAIL_PASSWORD "your-email-password" setx WKHTMLTOPDF_PATH "C:\Program Files\wkhtmltopdf\bin\wkhtmltopdf.exe" # if using wkhtmltopdf

6. Test run (from project folder, with venv active):

python reports_generator.py "C:\path\to\MQL5\Files\History_20250821.csv" --formats pdf --outdir "C:\path\to\Reports" --email

7. Verify outputs in C:\path\to\Reports:

- Report_YYYY-MM-DD.pdf (primary)

- equity_curve_YYYY-MM-DD.png (chart)

- report_result_YYYY-MM-DD.json (EA handshake)

Reports_processor.py

1. Startup, imports, and backend detection

On startup the script loads Python standard libraries (os, sys, logging, datetime, argparse, glob) and core data libraries (pandas, numpy). It then attempts to import optional toolchains — WeasyPrint, pdfkit (wkhtmltopdf), FPDF, and yagmail—and records availability flags for each backend. These flags let the script pick an appropriate PDF generation or emailing route at runtime based on what is installed, while still running in environments where some optional packages are absent. Logging is configured early so the script emits timestamped informational and error messages that the EA can capture in python_run.log.

#!/usr/bin/env python # reports_processor.py __version__ = "1.0.1" import sys import os import traceback import argparse import glob from datetime import datetime, timedelta import logging # core data libs import pandas as pd import numpy as np # templating & plotting from jinja2 import Environment, FileSystemLoader import matplotlib.pyplot as plt # optional libraries: import safely WEASY_AVAILABLE = False PDFKIT_AVAILABLE = False FPDF_AVAILABLE = False YAGMAIL_AVAILABLE = False try: from weasyprint import HTML # may fail if native libs aren't installed WEASY_AVAILABLE = True except Exception: WEASY_AVAILABLE = False try: import pdfkit # wrapper for wkhtmltopdf PDFKIT_AVAILABLE = True except Exception: PDFKIT_AVAILABLE = False try: from fpdf import FPDF # pure-python PDF writer (no native deps) FPDF_AVAILABLE = True except Exception: FPDF_AVAILABLE = False try: import yagmail YAGMAIL_AVAILABLE = True except Exception: YAGMAIL_AVAILABLE = False # Configure logging logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

2. Argument parsing and command contract

The script exposes a small CLI: its positional csv_path accepts either a CSV file path or a folder to search; --formats controls which outputs to create (HTML, PDF, or both); --outdir sets where to write artifacts; --email toggles sending reports; and --pdf-backend selects which PDF path to use. This contract matches the EA invocation pattern: the EA provides the CSV path, the output directory, and an optional email flag, and then monitors the output directory for a JSON result.

def parse_args(): """Parse command-line arguments.""" parser = argparse.ArgumentParser(description="Generate trading reports (HTML/PDF) from CSV.") parser.add_argument("csv_path", help="Path to the trades CSV file or a folder to search for History_*.csv") parser.add_argument("-f", "--formats", nargs="+", choices=["html", "pdf"], default=["pdf"], help="Report formats to generate (html, pdf, or both)") parser.add_argument("-o", "--outdir", default=".", help="Directory to write report files") parser.add_argument("--email", action="store_true", help="Send report via email") parser.add_argument("--pdf-backend", choices=["weasyprint","wkhtmltopdf","fpdf"], default="fpdf", help="PDF backend to use. 'weasyprint', 'wkhtmltopdf', or 'fpdf' (no native deps). Default: fpdf") return parser.parse_args()

3. CSV resolution (finding the input file)

When given a folder or a flexible csv_path, the script resolves the actual CSV to process. If csv_path is a directory, it searches for History_*.csv files (falling back to any .csv if none match) and picks the most recently modified one. If a full CSV path is provided, it is accepted as-is. This makes the script tolerant and easy to call both from the EA (which passes a specific CSV) and for manual runs.

def resolve_csv_path(csv_path): """ Ensure csv_path points to an existing file. If not, try to locate the most recent History_*.csv in the same directory or the provided folder. Returns resolved path or raises FileNotFoundError. """ # If csv_path is a directory -> look there if os.path.isdir(csv_path): search_dir = csv_path else: # If path exists as file -> return if os.path.isfile(csv_path): return csv_path # otherwise, take parent directory to search search_dir = os.path.dirname(csv_path) or "." pattern = os.path.join(search_dir, "History_*.csv") candidates = glob.glob(pattern) if not candidates: # also allow any .csv if no History_*.csv found candidates = glob.glob(os.path.join(search_dir, "*.csv")) if not candidates: raise FileNotFoundError(f"No CSV files found in {search_dir} (tried {pattern})") # pick the newest file by modification time candidates.sort(key=os.path.getmtime, reverse=True) chosen = candidates[0] logging.info(f"Resolved CSV path: {chosen} (searched: {search_dir})") return chosen

4. Loading and normalizing trade data

The loader reads the CSV using pandas, attempting to parse a time column into datetimes. It ensures required columns exist (for example profit, symbol, balance, and equity) by filling defaults or computing values when possible (e.g., cumulative sums for balance/equity when needed). After normalization, the DataFrame is sorted by time, producing a consistent, time-ordered dataset for analytics and plotting.

def load_data(csv_path): """Load and validate CSV data, computing missing columns if necessary.""" try: if not os.path.isfile(csv_path): logging.error(f"CSV file not found: {csv_path}") raise FileNotFoundError(f"CSV file not found: {csv_path}") # try parsing Time; if fails, read without parse and try to coerce later try: df = pd.read_csv(csv_path, parse_dates=["Time"]) except Exception: df = pd.read_csv(csv_path) if "Time" in df.columns: df["Time"] = pd.to_datetime(df["Time"], errors="coerce") # Provide minimal defaults if columns missing if "Profit" not in df.columns: df["Profit"] = 0.0 if "Symbol" not in df.columns: df["Symbol"] = "N/A" required_cols = ["Time", "Symbol", "Profit", "Balance", "Equity"] for col in required_cols: if col not in df.columns: logging.warning(f"Missing column: {col}. Attempting to compute.") if col == "Balance": # create cumsum of profit + commission + swap if possible df["Commission"] = df.get("Commission", 0) df["Swap"] = df.get("Swap", 0) df["Balance"] = (df["Profit"] + df["Commission"] + df["Swap"]).cumsum() elif col == "Equity": df["Equity"] = df.get("Balance", df["Profit"].cumsum()) # ensure Time column exists and is sorted if "Time" in df.columns: df = df.sort_values(by="Time") return df except Exception as e: logging.error(f"Error loading CSV: {e}") raise

5. Computing analytics and metrics

The compute_stats step derives the key report metrics the EA and the user care about: a report date string, net profit, total trade count, the top-performing symbol (by aggregated profit), maximum drawdown computed from the equity curve, and a simple Sharpe-like ratio estimate. These values are packaged into a stats dictionary and later embedded into both HTML templates and PDF pages to present a quick executive summary.

def compute_stats(df): """Compute trading statistics from the DataFrame.""" try: top_symbol = None try: top_symbol = df.groupby("Symbol")["Profit"].sum().idxmax() except Exception: top_symbol = "N/A" eq = df.get("Equity") if eq is None: eq = df.get("Balance", df["Profit"].cumsum()) max_dd = float((eq.cummax() - eq).max()) if len(eq) > 0 else 0.0 sharpe = 0.0 if len(df) > 1 and "Profit" in df.columns: dif = df["Profit"].diff().dropna() if dif.std() != 0: sharpe = float((dif.mean() / dif.std()) * np.sqrt(252)) stats = { "date": datetime.now().strftime("%Y-%m-%d"), "net_profit": float(df["Profit"].sum()) if "Profit" in df.columns else 0.0, "trade_count": int(len(df)), "top_symbol": top_symbol, "max_drawdown": max_dd, "sharpe_ratio": sharpe } return stats except Exception as e: logging.error(f"Error computing stats: {e}") raise

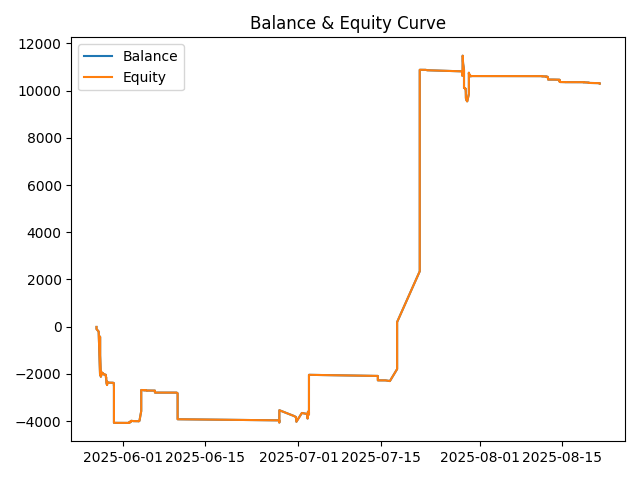

6. Charting—equity / balance curve

The script produces an equity curve PNG using matplotlib. It prefers plotting time vs balance and equity when timestamps are present; otherwise, it plots index vs. balance/equity. The generated PNG is saved into the --outdir and later referenced by the HTML template or embedded into the PDF, giving readers a visual summary of account performance over the reporting window.

# Generate equity curve plot curve_image = os.path.join(args.outdir, f"equity_curve_{stats['date']}.png") plt.figure() # handle possibility of NaT in Time if "Time" in df.columns and not df["Time"].isnull().all(): plt.plot(df["Time"], df["Balance"], label="Balance") plt.plot(df["Time"], df["Equity"], label="Equity") else: # fallback: plot index vs balance/equity plt.plot(df.index, df["Balance"], label="Balance") plt.plot(df.index, df["Equity"], label="Equity") plt.title("Balance & Equity Curve") plt.legend() plt.tight_layout() plt.savefig(curve_image) plt.close() logging.info(f"Equity curve saved: {curve_image}")

7. HTML rendering with Jinja2

If HTML output is requested (or produced as an intermediate artifact), the script uses Jinja2 to render report_template.html. The template receives the stats dictionary, the per-symbol profit aggregates, and the curve image filename. The rendered HTML is written to the output folder and logged. This HTML can be used as the final deliverable or as the source input for HTML-to-PDF converters.

def render_html(df, stats, outpath, curve_image): """Render the HTML report using a Jinja2 template.""" try: env = Environment(loader=FileSystemLoader(os.path.dirname(__file__))) tmpl_path = os.path.join(os.path.dirname(__file__), "report_template.html") if not os.path.isfile(tmpl_path): logging.error(f"Template not found: {tmpl_path}") raise FileNotFoundError(f"Missing template: {tmpl_path}") tmpl = env.get_template("report_template.html") html = tmpl.render(stats=stats, symbol_profits=df.groupby("Symbol")["Profit"].sum().to_dict(), curve_image=curve_image) os.makedirs(os.path.dirname(outpath), exist_ok=True) with open(outpath, "w", encoding="utf-8") as f: f.write(html) logging.info(f"HTML written: {outpath}") except Exception as e: logging.error(f"Error rendering HTML: {e}") raise

8. PDF generation—multiple backends

The script supports three PDF generation strategies and selects one based on the --pdf-backend argument and detected availability:

- WeasyPrint: converts the rendered HTML directly to PDF (HTML → PDF) using WeasyPrint if present.

- wkhtmltopdf (pdfkit): similarly converts HTML to PDF via the wkhtmltopdf binary when configured.

- FPDF: a pure-Python path that builds a PDF programmatically and inserts charts and data tables without relying on HTML/CSS rendering. This path produces a multi-page A4 PDF with a title page, key-stat blocks, the equity chart, symbol profit tables, and a recent-trades sample.

def convert_pdf_weasy(html_path, pdf_path): if not WEASY_AVAILABLE: raise RuntimeError("WeasyPrint backend requested but weasyprint is not available.") os.makedirs(os.path.dirname(pdf_path), exist_ok=True) HTML(html_path).write_pdf(pdf_path) logging.info(f"PDF written: {pdf_path}") def convert_pdf_wkhtml(html_path, pdf_path, wk_path=None): if not PDFKIT_AVAILABLE: raise RuntimeError("wkhtmltopdf/pdfkit backend requested but pdfkit is not available.") config = None if wk_path and os.path.isfile(wk_path): config = pdfkit.configuration(wkhtmltopdf=wk_path) pdfkit.from_file(html_path, pdf_path, configuration=config) logging.info(f"PDF written: {pdf_path}") def convert_pdf_with_fpdf(df, stats, curve_image_path, pdf_path): if not FPDF_AVAILABLE: raise RuntimeError("FPDF backend requested but fpdf is not installed.") pdf = FPDF(orientation='P', unit='mm', format='A4') pdf.set_auto_page_break(auto=True, margin=15) pdf.add_page() pdf.set_font("Arial", "B", 16) pdf.cell(0, 8, f"Trading Report - {stats.get('date','')}", ln=True, align='C') # ... add stats, insert curve image and tables ... if curve_image_path and os.path.isfile(curve_image_path): try: pdf.image(curve_image_path, x=10, y=None, w=190) except Exception as e: logging.warning(f"Could not insert curve image into PDF: {e}") pdf.output(pdf_path) logging.info(f"PDF written: {pdf_path}")

Each backend writes the final Report_YYYY-MM-DD.pdf file into the configured output directory and reports its completion via logging.

9. Email sending

If the --email flag is set, the script uses an email helper routine to send the generated report files to the configured recipient using the available emailing library. The routine logs whether sending succeeded, and the result is captured later in the JSON handshake so the EA can determine whether an email was dispatched during that run.

def send_email(files, stats): """Send the generated reports via email.""" try: if not YAGMAIL_AVAILABLE: logging.error("yagmail not available; cannot send email. Install yagmail or remove --email flag.") return user = os.getenv("Your YAGMAIL ACCOUNT") pw = os.getenv("Your Email password") if not user or not pw: logging.error("Email credentials not set in environment variables (YAGMAIL_USERNAME, YAGMAIL_PASSWORD).") return yag = yagmail.SMTP(user, pw) subject = f"Trading Report {stats['date']}" contents = [f"See attached report for {stats['date']}"] + files yag.send(to=user, subject=subject, contents=contents) logging.info("Email sent.") except Exception as e: logging.error(f"Error sending email: {e}")

10. Result JSON writer—the EA handshake

After generating outputs, the script compiles a compact, machine-readable JSON containing pdf_path, html_path, email_sent, timestamp, and an exit_code. The JSON is written atomically (via a temporary file + rename) into the --outdir as report_result_YYYY-MM-DD.json. Because the write is atomic, the EA can safely poll for the presence of the JSON file as a reliable signal that the Python side finished and then read its contents to learn the exact output locations and whether emailing occurred.

def write_result_json(outdir, pdf_path=None, html_path=None, email_sent=False, exit_code=0): """Write a small JSON file with the report result so MQL5 can poll/verify.""" import json, tempfile try: result = { "pdf_path": pdf_path or "", "html_path": html_path or "", "email_sent": bool(email_sent), "timestamp": datetime.now().isoformat(), "exit_code": int(exit_code) } os.makedirs(outdir, exist_ok=True) fname = os.path.join(outdir, f"report_result_{datetime.now().strftime('%Y-%m-%d')}.json") # atomic write fd, tmp = tempfile.mkstemp(prefix="._report_result_", dir=outdir, text=True) with os.fdopen(fd, "w", encoding="utf-8") as f: json.dump(result, f, indent=2) os.replace(tmp, fname) logging.info(f"Result JSON written: {fname}") return fname except Exception as e: logging.error(f"Failed to write result JSON: {e}") return None

11. Main flow

The main() function strings everything together: it parses arguments, resolves the CSV, loads & normalizes the data, computes stats, generates the equity chart, renders HTML (if requested), generates PDF(s) via the selected backend, optionally sends email, cleans up older reports in the output directory, writes the result JSON, and exits with a success or failure code. The sequence and logging ensure that the EA—which launched the script—can follow the run's progress (via the python_run.log) and react to the final report_result_...json.

def main(): """Main function to generate reports.""" args = parse_args() try: # Ensure output directory exists os.makedirs(args.outdir, exist_ok=True) # Resolve csv_path in case a folder or variable-name was provided try: csv_path_resolved = resolve_csv_path(args.csv_path) except FileNotFoundError as e: logging.error(str(e)) return 1 # Load data and compute stats df = load_data(csv_path_resolved) stats = compute_stats(df) # Generate equity curve plot curve_image = os.path.join(args.outdir, f"equity_curve_{stats['date']}.png") # ... plotting code ... base = os.path.join(args.outdir, f"Report_{stats['date']}") files_out = [] # Generate HTML report (if requested or needed by PDF backend) html_path = base + ".html" if "html" in args.formats: render_html(df, stats, html_path, os.path.basename(curve_image)) files_out.append(html_path) # Decide PDF backend and generate PDF backend = args.pdf_backend.lower() if hasattr(args, "pdf_backend") else "fpdf" if "pdf" in args.formats: pdf_path = base + ".pdf" if backend == "weasyprint": convert_pdf_weasy(html_path, pdf_path) elif backend == "wkhtmltopdf": convert_pdf_wkhtml(html_path, pdf_path, wk_path=os.getenv("WKHTMLTOPDF_PATH")) elif backend == "fpdf": convert_pdf_with_fpdf(df, stats, curve_image, pdf_path) files_out.append(pdf_path) # Send email if enabled if args.email: send_email(files_out, stats) # write result JSON for MQL5 pdf_file = next((f for f in files_out if f.endswith(".pdf")), "") html_file = next((f for f in files_out if f.endswith(".html")), "") write_result_json(args.outdir, pdf_path=pdf_file, html_path=html_file, email_sent=args.email, exit_code=0) return 0 except Exception as e: logging.error(f"Main execution error: {e}") traceback.print_exc() try: write_result_json(args.outdir, exit_code=1) except: pass return 1 if __name__ == "__main__": sys.exit(main())

12. Error handling and guaranteed JSON on failure

Throughout main(), exceptions are caught at the top level so that if any unhandled error occurs, the script logs the traceback and still writes a report_result_YYYY-MM-DD.json with an exit_code indicating failure. This ensures the EA always receives a deterministic JSON object to inspect (success or failure), which simplifies the EA's verification and fallback logic.

except Exception as e: logging.error(f"Main execution error: {e}") traceback.print_exc() # ensure a JSON is still written so MQL5 knows it failed try: write_result_json(args.outdir, exit_code=1) except: pass return 1

13. Cleanup and retention policy

At the end of a successful run, the script performs a retention sweep of the output directory, removing older Report_ files beyond a configurable window (the current script uses 30 days). This keeps the output folder tidy and prevents unbounded disk growth on systems that run reports frequently.

# Clean up old reports (older than 30 days) cutoff = datetime.now() - timedelta(days=30) for f in os.listdir(args.outdir): if f.startswith("Report_") and f.endswith(tuple(args.formats)): full = os.path.join(args.outdir, f) if datetime.fromtimestamp(os.path.getmtime(full)) < cutoff: os.remove(full) logging.info(f"Deleted old file: {full}")

How the EA and Python script integrate at runtime

The runtime handshake is straightforward: the EA exports a CSV and launches the script (passing the CSV path, --outdir, and any flags). The Python script processes the CSV and writes artifacts (PNG, PDF) into the output folder and finally writes report_result_YYYY-MM-DD.json. The EA polls for that JSON; once present, it reads the JSON, validates the reported paths and file sizes, and performs post-actions (notifications, archiving, email confirmation). The logging (python_run.log) and the JSON together provide a robust, machine-consumable contract between the two components.

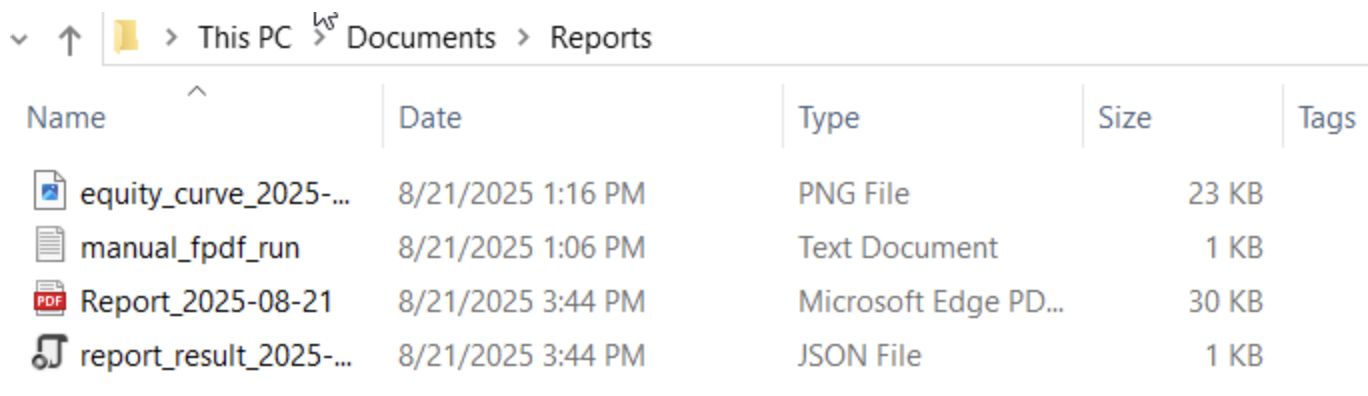

Testing

Our testing is carried out by deploying the EA on the MetaTrader 5 platform, where the Python script is launched and executed in the background. The results can be monitored through the Experts Log in the terminal, as well as by checking the designated output directories for generated files. In my test, I located the output report named Report_2025-08-21. The contents of this file are far more extensive than those produced in earlier versions. The report now includes well-structured tables, equity curves, and multiple calculated metrics, all presented in a clear format that provides valuable insights for traders. Below are screenshots showcasing the generated report files and partly the PDF contents.

Figure 4: Output files viewed in Windows Explorer

Figure 5: Animated screenshot from the reports PDF generated

Figure 6: Equity Curve produced in the reports

Conclusion

We have successfully demonstrated the integration of MQL5 and Python to deliver feature-rich trading reports that go far beyond the basic outputs provided by the previous version of our Reporting EA. One of the standout features is the automated equity curve, which visually tracks balance and equity trends over time, offering traders an immediate grasp of performance dynamics. The system proves the feasibility of linking an Expert Advisor with an external Python reporting pipeline and also showcases how this collaboration can be refined and expanded further.

By continuously refining the EA code and leveraging powerful Python libraries such as Pandas, Matplotlib, and FPDF, we can compute advanced statistics, generate insightful tables, and embed clear visualizations into reports. The addition of a lightweight JSON handshake between the EA and the Python script ensures reliable confirmation of outputs, making the workflow seamless and transparent. Furthermore, optional features such as automated email delivery and flexible PDF backends demonstrate the adaptability of the framework to different environments.

This development lays a solid foundation for long-term reporting solutions. The resulting documents can serve as comprehensive tools for trading performance analysis, helping traders and investors alike to spot trends, measure risks such as drawdowns, and evaluate strategies with greater clarity. Ultimately, the Reporting EA combined with the reports_processor.py script shows how cross-language integration can enhance automation, improve decision-making, and open the door to even more sophisticated analytics in the future.

Key Lessons

| Key lesson | Description: |

|---|---|

| Validate external dependencies at startup. | Always verify locations of external tools and folders (Python executable, script path, output directory) during OnInit using file-attribute checks. Early validation with GetFileAttributesW prevents obscure runtime failures. |

| Prefer a machine-readable handshake (JSON) | Use a small, structured result file (JSON) as the canonical signal from Python to MQL5 instead of parsing free-form logs. It makes validation deterministic and programmatic. |

| Write result files atomically | Write the JSON with a temporary filename then rename/replace it atomically. This avoids partial-read races when the EA polls for completion. |

| Account for MQL5 absolute-path limitations | MQL5 FileOpen often cannot read arbitrary absolute paths. Provide a fallback: copy the file into MQL5\\Files or read via a WinAPI ReadFile approach so the EA can consistently access outputs. |

| Use robust file existence and size checks | Do not assume presence implies completeness. Check file attributes and file size (open + seek to end) to ensure a report or binary is fully written before acting on it. |

| Employ unique filenames to avoid collisions | Generate CSV and report filenames that include timestamps and random suffixes so repeated runs don't overwrite results and historical artifacts are preserved for auditing. |

| Export CSVs consistently for Python | Design CSV columns (headers, formats, date/time style) that the Python script expects. A consistent export contract eliminates parsing ambiguity and reduces data-munging errors. |

| Centralize helper utilities | Keep path normalization, file-existence helpers, JSON extractors and small read/write wrappers in one place so every part of the EA reuses the same robust logic (CleanPath, FileExists, ReadTerminalLogFile). |

| Implement polling with sensible timeouts | When waiting for an external process, use a loop with a short poll interval and an overall timeout. This balances responsiveness with CPU usage and avoids infinite waits. |

| Provide fallbacks and layered verification | Design layered checks: prefer JSON-based confirmation; if missing, fall back to tailing python_run.log; if that fails, poll for the expected PDF. Multiple verification paths increase robustness. |

| Log liberally and surface diagnostics | Write informative log entries and print tails of Python logs to the Experts Log. Clear diagnostics accelerate troubleshooting when runs fail in production. |

| Never hardcode credentials | Keep secrets out of source: use environment variables for email credentials and document which variables must be set. This improves security and portability. |

| Support multiple PDF backends and detect availability | Make the Python script backend-agnostic: detect weasyprint, wkhtmltopdf/pdfkit, or a pure-Python fpdf path and pick the best available option at runtime. |

| Use virtual environments and document setup | Document a reproducible Windows setup: Python version, virtualenv creation, pip install list and native prerequisites for optional backends so deployments are predictable. |

| Smoke-test the end-to-end flow | Run a quick end-to-end “smoke test”: deploy the EA, have it export a CSV, launch Python, and verify the Experts Log plus the output folder for PDF/JSON. Smoke tests give fast confidence before deeper QA. |

| Guarantee deterministic failure reporting | On exceptions always write a result JSON with an exit_code and diagnostic fields. That ensures the EA can detect failures and apply fallback logic rather than hanging indefinitely. |

Attachments

| Filename | Version | Description |

|---|---|---|

| Reporting_EA.mq5 | 1.01 | Expert Advisor that exports trading history to uniquely named CSV files, launches the Python report processor, and verifies outputs. Features include path normalization, dependency checks, atomic filename generation, JSON-first handshake with Python, copy-fallback for absolute-path reads, PDF polling fallback, detailed logging to Experts, and optional push notifications. |

| Reports_processor.py | 1.0.1 | Python reporting engine that ingests the EA CSV, computes analytics (net profit, drawdown, Sharpe, per-symbol aggregates), produces charts, renders HTML and/or PDF (multiple backend options: fpdf, wkhtmltopdf, WeasyPrint), optionally sends email, and writes an atomic report_result_YYYY-MM-DD.json for the EA handshake. Includes retention cleanup and robust error handling with guaranteed JSON on failure. |

Warning: All rights to these materials are reserved by MetaQuotes Ltd. Copying or reprinting of these materials in whole or in part is prohibited.

This article was written by a user of the site and reflects their personal views. MetaQuotes Ltd is not responsible for the accuracy of the information presented, nor for any consequences resulting from the use of the solutions, strategies or recommendations described.

MetaTrader Meets Google Sheets with Pythonanywhere: A Guide to Secure Data Flow

MetaTrader Meets Google Sheets with Pythonanywhere: A Guide to Secure Data Flow

Chart Synchronization for Easier Technical Analysis

Chart Synchronization for Easier Technical Analysis

Multi-module trading robot in Python and MQL5 (Part I): Creating basic architecture and first modules

Multi-module trading robot in Python and MQL5 (Part I): Creating basic architecture and first modules

Developing a Replay System (Part 77): New Chart Trade (IV)

Developing a Replay System (Part 77): New Chart Trade (IV)

- Free trading apps

- Over 8,000 signals for copying

- Economic news for exploring financial markets

You agree to website policy and terms of use