Forum on trading, automated trading systems and testing trading strategies

Matrices and vectors in MQL5. Share your opinion

Slava, 2023.06.02 16:00

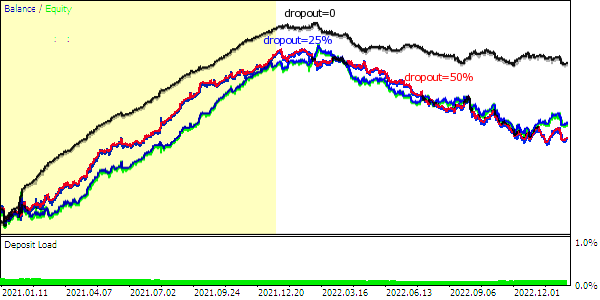

At the end of 2021, we started introducing new entities into MQL5: matrices and vectors. In January 2022, a new section, "Data types - matrices and vectors", appeared in the documentation, along with the first announcement, "New version of the MetaTrader 5 platform build 3180: Vectors and matrices in MQL5 and improved usability". In the summer of 2022, a full-fledged section, "Methods of matrices and vectors", appeared in the documentation.

And the work continues. We write application code (for internal use and for articles) and immediately see what is convenient, what is inconvenient, what is needed right now, and what is necessary in principle but can wait. However, we need more feedback. Members of the MQL5 community probably have ideas and suggestions. Share them!

In turn, I can show the compact code of a classification model:

    //+------------------------------------------------------------------+
    //|                                  NeuralNetworkClassification.mqh |
    //|                                  Copyright 2023, MetaQuotes Ltd. |
    //|                                             https://www.mql5.com |
    //+------------------------------------------------------------------+
    //+------------------------------------------------------------------+
    //| neural network for classification model                          |
    //+------------------------------------------------------------------+
    class CNeuralNetwork
      {
    protected:
       int               m_layers;                        // layers count
       ENUM_ACTIVATION_FUNCTION m_activation_functions[]; // layers activation functions
       matrix            m_weights[];                     // weights matrices between layers
       vector            m_values[];                      // layer values
       vector            m_values_a[];                    // layer values after activation
       vector            m_errors[];                      // error values for back propagation

    public:
       //+------------------------------------------------------------------+
       //| Constructor                                                      |
       //+------------------------------------------------------------------+
       CNeuralNetwork(const int layers,const int &layer_sizes[],const ENUM_ACTIVATION_FUNCTION &act_functions[])
         {
          m_layers=layers;
          ArrayCopy(m_activation_functions,act_functions);
          ArrayResize(m_weights,layers-1);
          ArrayResize(m_values,layers);
          ArrayResize(m_values_a,layers);
          ArrayResize(m_errors,layers);
          //--- init weight matrices with uniform random
          for(int i=0; i<m_layers-1; i++)
            {
             double div=32767.0*layer_sizes[i];          // divider
             m_weights[i].Init(layer_sizes[i],layer_sizes[i+1]);
             for(ulong j=0; j<m_weights[i].Rows()*m_weights[i].Cols(); j++)
                m_weights[i].Flat(j,rand()/div);
            }
         }
       //+------------------------------------------------------------------+
       //| Forward pass                                                     |
       //+------------------------------------------------------------------+
       void ForwardFeed(const vector &input_data,vector &vector_pred)
         {
          m_values_a[0]=input_data;
          for(int i=0; i<m_layers-1; i++)
            {
             m_values[i+1]=m_values_a[i].MatMul(m_weights[i]);
             m_values[i+1].Activation(m_values_a[i+1],m_activation_functions[i+1]);
            }
          //--- predicted class probabilities
          vector_pred=m_values_a[m_layers-1];
         }
       //+------------------------------------------------------------------+
       //| error back propagation                                           |
       //+------------------------------------------------------------------+
       void BackPropagation(const vector &vector_true,double learning_rate)
         {
          //--- categorical cross entropy loss gradient
          m_errors[m_layers-1]=vector_true-m_values_a[m_layers-1];
          for(int i=m_layers-1; i>0; i--)
             m_errors[i-1].GeMM(m_errors[i],m_weights[i-1],1,0,TRANSP_B);
          //--- weights update
          for(int i=m_layers-1; i>0; i--)
            {
             vector back;
             m_values[i].Derivative(back,m_activation_functions[i]);
             back*=m_errors[i]*learning_rate;
             m_weights[i-1].GeMM(m_values_a[i-1],back,1,1);
            }
         }
      };
    //+------------------------------------------------------------------+
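To make the arithmetic of the forward pass concrete, here is a minimal Python sketch of the same idea as `ForwardFeed`: each layer multiplies the previous activations (a row vector) by a weight matrix and then applies an activation function. It is plain Python, not MQL5, and does not use the MQL5 API. The helper names (`vec_matmul`, `sigmoid`, `softmax`, `forward_feed`), the choice of activations and the tiny 2-3-2 network are assumptions made for illustration only.

```python
import math

def vec_matmul(v, m):
    """Row vector (length n) times matrix (n x k) gives a vector of length k."""
    return [sum(v[r] * m[r][c] for r in range(len(v))) for c in range(len(m[0]))]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-x)) for x in v]

def softmax(v):
    shift = max(v)                          # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def forward_feed(input_data, weights, activations):
    """Same loop shape as ForwardFeed: next values = previous activations x weights."""
    values_a = [list(input_data)]
    for w, act in zip(weights, activations):
        values = vec_matmul(values_a[-1], w)    # layer values before activation
        values_a.append(act(values))            # layer values after activation
    return values_a[-1]                         # predicted class probabilities

# Hypothetical 2-3-2 network with hand-picked weights.
weights = [
    [[0.1, 0.2, 0.3],
     [0.4, 0.5, 0.6]],      # 2 inputs -> 3 hidden units
    [[0.1, 0.2],
     [0.3, 0.4],
     [0.5, 0.6]],           # 3 hidden units -> 2 classes
]
probabilities = forward_feed([1.0, 0.5], weights, [sigmoid, softmax])
print(probabilities)
```

With a softmax on the last layer the outputs are positive and sum to one, which is what lets them be read as class probabilities.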

There are only 60 lines of pure code. Apart from ArrayResize, it uses matrix and vector operations and methods exclusively. It was written for the "Hello world" of neural networks: recognizing handwritten digits from the MNIST image set.
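The weight update in `BackPropagation` can be sketched the same way. Judging by the shapes involved (a weight matrix of size inputs x outputs, a vector of previous activations and a vector `back`), the final `GeMM(..., 1, 1)` call accumulates an outer product into the existing weights, following the usual C = alpha*A*B + beta*C form. That reading is an assumption, and the Python below only mirrors the arithmetic. The names `outer`, `gemm_update` and `update_weights` and the sigmoid activation are hypothetical.

```python
import math

def outer(a, b):
    """Outer product of two vectors: a len(a) x len(b) matrix."""
    return [[x * y for y in b] for x in a]

def gemm_update(c, a, b, alpha=1.0, beta=1.0):
    """Return alpha * outer(a, b) + beta * c without modifying c."""
    prod = outer(a, b)
    return [[alpha * prod[r][k] + beta * c[r][k] for k in range(len(b))]
            for r in range(len(a))]

def sigmoid_derivative(pre_activation):
    """Derivative of the sigmoid with respect to the pre-activation values."""
    result = []
    for x in pre_activation:
        s = 1.0 / (1.0 + math.exp(-x))
        result.append(s * (1.0 - s))
    return result

def update_weights(weights, prev_activations, pre_activation, errors, learning_rate):
    # back = derivative * error * learning_rate, element by element
    back = [d * e * learning_rate
            for d, e in zip(sigmoid_derivative(pre_activation), errors)]
    # weights += outer(prev_activations, back); the sign is positive because
    # the error is defined as (true - predicted)
    return gemm_update(weights, prev_activations, back, alpha=1.0, beta=1.0)

weights = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]   # 3 inputs -> 2 outputs
new_weights = update_weights(
    weights,
    prev_activations=[1.0, 2.0, 0.0],
    pre_activation=[0.0, 0.0],                   # sigmoid'(0) = 0.25
    errors=[0.4, -0.8],
    learning_rate=0.5,
)
print(new_weights)
```

An input that was zero leaves its row of weights unchanged, which is the expected behaviour of a rank-1 update.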

Here is the end of the neural network training and testing script log.

    ...
    2023.06.02 16:54:21.608 mnist_original (GBPUSD,H1) Epoch # 15
    2023.06.02 16:54:24.523 mnist_original (GBPUSD,H1) Time: 2.907 seconds. Right answers: 98.682 %
    2023.06.02 16:54:24.523 mnist_original (GBPUSD,H1) Total time: 71 seconds
    2023.06.02 16:54:24.558 mnist_original (GBPUSD,H1) Read 10000 images. Test begin
    2023.06.02 16:54:24.796 mnist_original (GBPUSD,H1) Time: 0.234 seconds. Right answers: 96.630 %

The script source code and the original MNIST data are in the attachment.

How to Start with Metatrader 5

Sergey Golubev, 2022.02.12 07:49

Matrices and vectors in MQL5

By using the special data types 'matrix' and 'vector', it is possible to write code that is very close to mathematical notation, without nested loops or careful array indexing in calculations. In this article, we will see how to create, initialize, and use matrix and vector objects in MQL5.

How to Start with Metatrader 5

Sergey Golubev, 2023.02.11 03:41

Matrix Utils, Extending the Matrices and Vector Standard Library Functionality - the article

In Python, a Utils class is a general-purpose utility class with functions and lines of code that we can reuse without creating an instance of the class.

The standard library for matrices provides some very important features and methods that we can use to initialize, transform, and manipulate matrices, and much more. Like any other library, though, it can be extended to do extra things that some applications need.
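As an illustration of the "Utils class" idea mentioned above, here is a minimal Python sketch. The class name `MatrixUtils` and its two methods are hypothetical and are not taken from the article. The point is only that static methods can be called on the class itself, without creating an instance.

```python
class MatrixUtils:
    """Hypothetical utility class: every method is static, so no instance is needed."""

    @staticmethod
    def identity(n):
        """Return an n x n identity matrix as a list of rows."""
        return [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]

    @staticmethod
    def transpose(m):
        """Return the transpose of a matrix given as a list of rows."""
        return [list(col) for col in zip(*m)]

# Called on the class, not on an object.
print(MatrixUtils.identity(2))
print(MatrixUtils.transpose([[1, 2, 3], [4, 5, 6]]))
```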

How to Start with Metatrader 5

Sergey Golubev, 2023.04.12 06:48

In this article, we will briefly recall the theory of backpropagation networks and will create universal classes for building networks using this theory: the above formulas will be almost identically reflected in the source code. Thus, beginners can go through all steps while learning this technology, without having to look for third-party publications.

If you already know the theory, then you can safely move on to the second part of the article, which discusses the practical use of classes in a script, an indicator, and an Expert Advisor.

Machine learning in trading: theory, models, practice and algorithmic trading

Renat Fatkhullin, 2023.06.02 14:35

New features in MQL5:

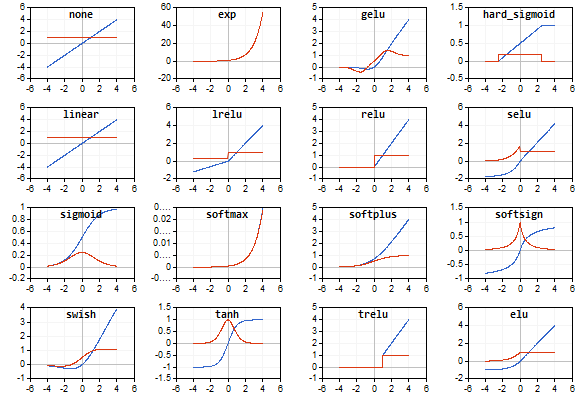

Matrices and vectors in MQL5: Activation functions

Here we will describe only one of the aspects of machine learning — activation functions. We will delve into the inner workings of the process.

I would like to know: who is using vector and matrix objects in MQL5?

- I am asking because I personally think they are poorly designed in their overall concept.
- Or maybe I am not seeing the use cases for the functionality and the API design that has been put together for these two objects.

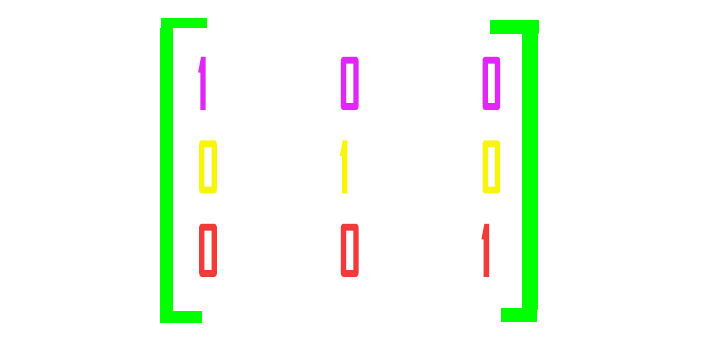

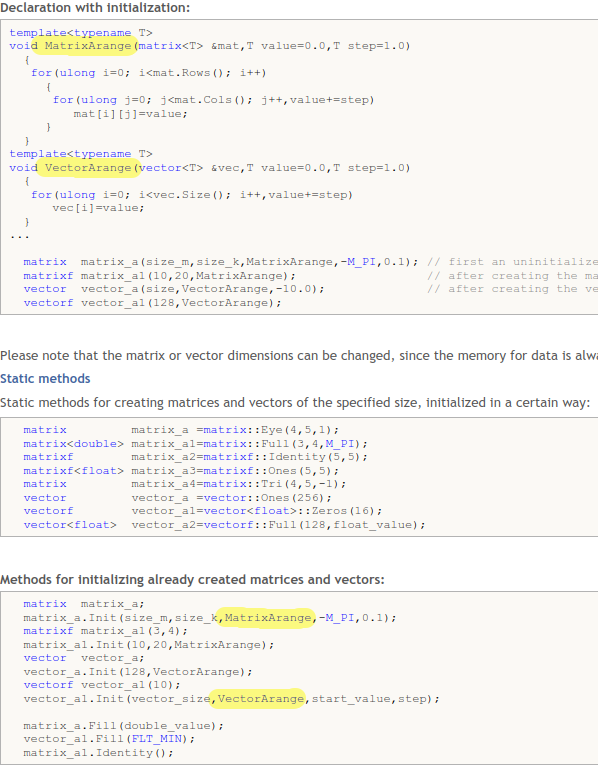

Documentation has a chapter on initialization:

https://www.mql5.com/en/docs/matrix/matrix_initialization

Where it states this:

Seriously???

What is the sense or use case for that?

- That could have been implemented with AVX.
- I have no idea what edge case this is supposed to cover at all. Ideas, anyone?

What I mean is, there is no need for a variadic function signature. This could easily have been done directly with a member function, and any functionality that needed external customization could have been done with a simple function pointer that receives the previous value and a counter and returns the current value.

I.e., like this:

    template <typename T>
    const T next_value(const ulong current_count, const T last_value)
    {
        return(last_value + 1.0);
    };
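The function-pointer proposal in the snippet above can be sketched in Python, where functions are passed around as ordinary values. This is only an illustration of the suggested design, not an existing MQL5 facility: `init_sequence` and its `first_value` parameter are assumptions, and `next_value` mirrors the template from the post.

```python
def next_value(current_count, last_value):
    """Counterpart of the next_value template: previous value plus one."""
    return last_value + 1.0

def init_sequence(size, first_value, step_fn):
    """Build `size` values; each one is derived from the previous value and a counter."""
    values = []
    last = first_value
    for count in range(size):
        if count > 0:
            last = step_fn(count, last)   # the callback sees the counter and the last value
        values.append(last)
    return values

print(init_sequence(5, 0.0, next_value))                         # [0.0, 1.0, 2.0, 3.0, 4.0]
print(init_sequence(4, 1.0, lambda count, last: last * 2.0))     # [1.0, 2.0, 4.0, 8.0]
```

Swapping the callback is enough to change the fill rule, which is the flexibility the post asks for.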

And by the way, how do you create a pointer to a templated function in MQL5? The compiler has been extended specifically to support the matrix and vector object types.

Another note: vectors can be used like arrays, even with initialization lists, and they are dynamically allocated. Too bad they are limited to the double, float and complex scalar types only. That makes no sense.

Also, the fact that matrix defaults to matrix<double> relies on a concept that MQL5 itself does not support, and that is therefore not available to us. Why? What is the sense of implementing an object in such an "over the edge" way?

Oh, and before I forget: ALGLIB on CodeBase (https://www.mql5.com/en/code/1146) does not support the matrix or vector types; it has its own representation object for matrices. As a consequence, what ALGLIB is famous for (speed and flexibility) has more or less been stripped out. Vectors and matrices are supposed to support AVX when used, but to convert ALGLIB, these features had to be removed from the library because they are not available in MQL5 directly.

In conclusion, I personally think this implementation does not fit well into MQL5 at the moment and needs some cleanup and an overhaul.

I think you pass the function name and omit the matrix/vector that it will adjust (or init), but add the rest of the function's parameters. (The matrix/vector it will adjust or init must come first in the function's parameter list.)

I was working with it recently; give me 5 to dig up the code.

It is a mutation between C++ and Python coding.

Found it:

    template <typename T>
    void init_based_on_values(vector<T> &out,     //the vector you will init / adjust you omit this on the init call
                              vector &source,     //you can have any other params which you add sep by commas on the init call
                              vector &controller,
                              double allow_value){
      int total=0;
      for(int i=0;i<(int)source.Size();i++){
        if(controller[i]==allow_value){
          total++;
          out[total-1]=source[i];
        }
      }
      out.Resize(total,0);
    }

    template <typename T>
    void your_function(vector<T> &out){
      out.Fill(3);
    }

    int OnInit()
    {
    //---
      vector source={1,2,3,4,5,6,7};
      vector pass={0,1,0,1,0,1,0};
      vector result(7,init_based_on_values,source,pass,1.0);
      Print(result);
      return(INIT_SUCCEEDED);
    }

So, what this does (sorry, I should have written it initially):

- We have a source vector with values (we use a vector because it's easy).

- We have a pass-filter vector, not that it's needed, but to test multiple and diverse parameters; where it is 1.0, we pass the value to the out vector.

- We don't pass the out vector reference in the parameters of the constructor (init), because what we are "constructing" is the first referenced parameter.

It is really neat. I haven't speed-tested it, but it is handy.
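To check what the initializer described above is expected to produce, here is a minimal Python sketch that follows the same steps: preallocate the output, copy the values whose controller entry equals `allow_value`, then shrink the output to the number of values kept. It is plain Python rather than MQL5, and taking `size` as an explicit first argument is an assumption standing in for the constructor's size parameter.

```python
def init_based_on_values(size, source, controller, allow_value):
    out = [0.0] * size                  # the vector being "constructed"
    total = 0
    for i in range(len(source)):
        if controller[i] == allow_value:
            out[total] = source[i]      # keep the values that pass the filter
            total += 1
    return out[:total]                  # counterpart of out.Resize(total, 0)

source = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
passes = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
result = init_based_on_values(7, source, passes, 1.0)
print(result)   # [2.0, 4.0, 6.0]
```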

    vector source={1,2,3,4,5,6,7};
    vector pass={0,1,0,1,0,1,0};
    vector result=source*pass;
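One thing worth noting about this shorter form: assuming `*` between two vectors multiplies element by element, it masks the rejected values with zeros and keeps the length, whereas the filtering initializer drops them and returns a shorter vector. A minimal Python sketch of the difference (plain lists, not the MQL5 API; the variable names are illustrative):

```python
source = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
passes = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]

# Element-wise product: same length, rejected positions become zero.
masked = [s * p for s, p in zip(source, passes)]

# Filtering: only the accepted values survive, so the result is shorter.
filtered = [s for s, p in zip(source, passes) if p == 1.0]

print(masked)     # [0.0, 2.0, 0.0, 4.0, 0.0, 6.0, 0.0]
print(filtered)   # [2.0, 4.0, 6.0]
```

Which of the two is wanted depends on whether later code relies on the original positions.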

This is the summary thread about Matrices and vectors in MQL5