Neural networks have been a hot topic for traders for more than a dozen years. Indeed, this author has written many articles about neural networks and how they can be used in trading strategies.

The subject received a lot of attention because the technology could “learn” from past data and model problems whose underlying equations were unknown. Neural networks generalize well, meaning they can give answers for new cases that were not used when the model was developed. They also handle noisy data well. These and other promises made by advocates of neural networks in their heyday helped the approach earn a large following.

Since then, however, we have learned that although neural networks handle noise better than conventional statistical methods, noise still needs to be a concern.

Since the 1990s, massive advances in inexpensive computing power have helped neural networks evolve. While the dot-com boom and bust dried up much of the speculative capital in the markets, along with interest in neural networks, today’s choppy markets are again inspiring traders to consider neural networks for an edge. In this three-part series on neural networks, we will begin with an overview of the basics. Then, we will review research on using neural networks for trading. Finally, we will develop a case study using this technology in a real application.

A SHORT HISTORY

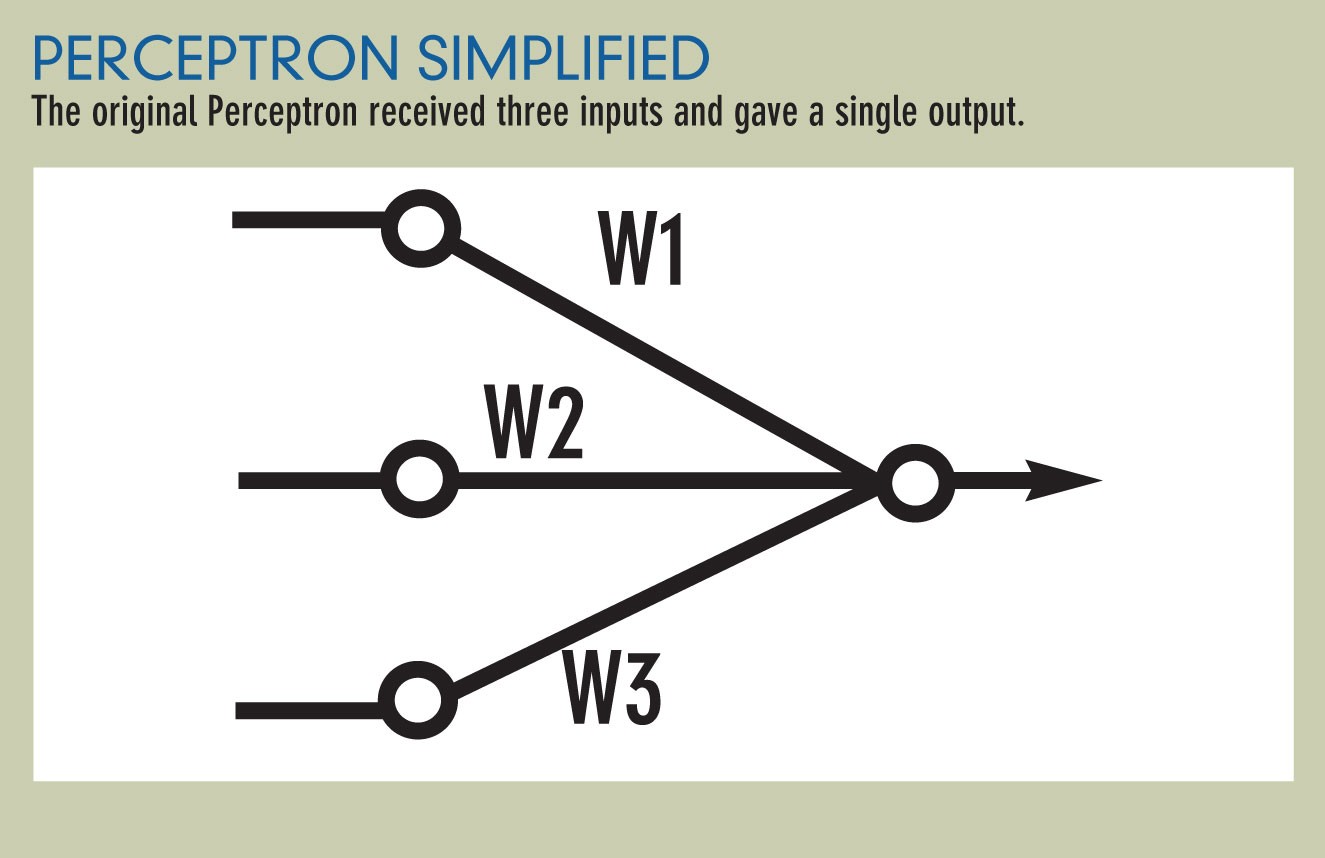

As the name implies, neural network technology takes its cue from the human brain by emulating its structure. Work on neural networks began in the 1940s and was followed in 1957 by Frank Rosenblatt’s “Perceptron,” a linear classifier and the simplest kind of feed-forward neural network.

The neuron is the basic structural unit of a neural network. A neuron receives any number of inputs, each weighted according to its importance. The weighted inputs are summed, and a threshold function determines the output sent to every neuron downstream: if the neuron receives enough signal, it fires and triggers all of its outputs. The impulses are passed along in this way until they reach the output layer, where the output signals are translated into real-world information.
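To make the weighted-sum-and-threshold idea concrete, here is a minimal sketch of a single artificial neuron. The weights, and the bias that plays the role of the firing threshold, are illustrative assumptions rather than values from any real network.

```python
def step(x: float) -> int:
    """Threshold function: fire (1) if the summed signal is positive, else 0."""
    return 1 if x > 0 else 0


def neuron(inputs: list[float], weights: list[float], bias: float) -> int:
    """Sum the weighted inputs, add the bias, and apply the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(total)


# Hypothetical weights: the first input counts twice as much as the second.
weights = [2.0, 1.0]
bias = -1.5  # a negative bias acts as the firing threshold

print(neuron([1, 0], weights, bias))  # 2.0 - 1.5 = 0.5 > 0, so it fires: 1
print(neuron([0, 1], weights, bias))  # 1.0 - 1.5 = -0.5, so it stays quiet: 0
```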

Although this system worked well for simple problems, it was demonstrated in 1969 that non-linear classifications, such as the “exclusive or” problem, were impossible for the Perceptron to solve. The exclusive-or problem describes a simple real-world situation. For example, it is possible to go shopping or to the movies, but it is not possible to do both at the same time. In other words:

| Input 1 | Input 2 | Answer |
|---|---|---|
| T | T | F |
| T | F | T |
| F | T | T |
| F | F | F |

The original Perceptron could not solve this simple problem because it could solve only linear problems.

Neural networks represent a branch of computer science called “machine learning,” which includes two major branches: supervised and unsupervised learning. In supervised learning, the application learns from a teacher: the output produced with the current weights is compared with the known answer. This is how the original Perceptron worked, and it is the type of neural network most often used in financial analysis. The calculated value is compared to the actual value, and the weights are adjusted to minimize the error across the complete training set.
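Both the supervised learning loop and the Perceptron's limitation show up in a short sketch of the classic perceptron learning rule, coding T as 1 and F as 0. The learning rate and epoch count are arbitrary assumptions. OR is linearly separable, so the rule finds weights that classify every case; for the exclusive-or table no such weights exist, so at least one case always stays wrong.

```python
def predict(w, b, x1, x2):
    """Linear threshold unit: 1 if the weighted sum plus bias is positive."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(cases, epochs=25, lr=0.1):
    """Perceptron learning rule; returns weights, bias, and misclassified count."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for (x1, x2), target in cases:
            # Compare the output with the teacher's answer...
            error = target - predict(w, b, x1, x2)
            # ...and nudge the weights to reduce that error.
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    misclassified = sum(predict(w, b, x1, x2) != t for (x1, x2), t in cases)
    return w, b, misclassified


OR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(train_perceptron(OR_CASES)[2])   # 0 cases wrong
print(train_perceptron(XOR_CASES)[2])  # never 0: XOR is not linearly separable
```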

The goal of machine learning is for the neural network to learn the training set well and produce good answers on new cases it has never seen before. The original Perceptron used linear weighting functions because, at the time, no mathematical method existed for adjusting the weights to minimize the error when a nonlinear summing function was used in a network with more than two layers. Such a method was needed to solve the problems with neural networks that were revealed in 1969.

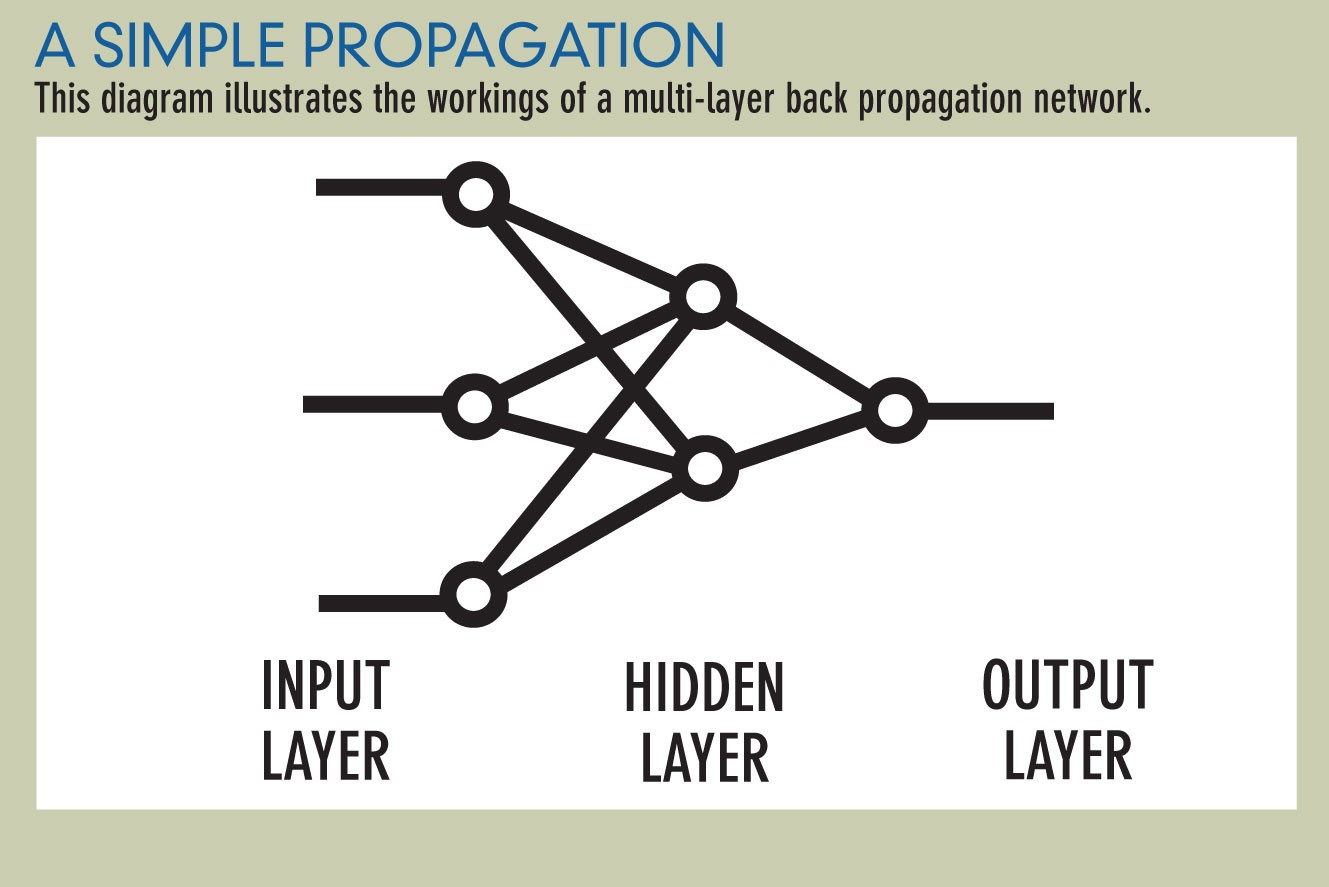

In 1986, a paper was presented on an algorithm called “back propagation,” announcing a method that allows a network to learn to discriminate between classes that are not linearly separable. The method, the “backward propagation of errors,” is a generalization of the “least mean squares” (LMS) rule. While the 1986 paper is credited with popularizing the concept, credit for inventing back propagation goes to Paul Werbos, who presented the technique in his 1974 Ph.D. dissertation at Harvard University.

Back propagation trains a multi-layer perceptron that uses non-linear threshold, or activation, functions (see “A simple propagation,” below). The most commonly used functions are the sigmoid, which ranges from 0 to 1, and the hyperbolic tangent, which ranges from -1 to 1. Because back propagation networks use these functions, all inputs and target outputs need to be mapped into the appropriate range, and that range depends on which function is used.
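The two activation functions, and the linear rescaling that maps raw values into whichever range the chosen function produces, can be sketched as follows. The dictionary of ranges and the `map_to_activation` helper are illustrative names, not part of any particular neural network product.

```python
import math

# Output range of each activation function.
ACTIVATION_RANGE = {"sigmoid": (0.0, 1.0), "tanh": (-1.0, 1.0)}


def sigmoid(x):
    """Logistic sigmoid; output lies between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Hyperbolic tangent; output lies between -1 and 1."""
    return math.tanh(x)


def map_to_activation(value, data_min, data_max, activation):
    """Linearly rescale a raw input or target into the activation's range."""
    lo, hi = ACTIVATION_RANGE[activation]
    return lo + (value - data_min) * (hi - lo) / (data_max - data_min)


print(sigmoid(0.0), tanh(0.0))                      # 0.5 0.0
print(map_to_activation(5.0, 0.0, 10.0, "sigmoid"))  # 0.5
print(map_to_activation(5.0, 0.0, 10.0, "tanh"))     # 0.0
```

The same raw value lands in a different place depending on the activation, which is why the mapping has to be chosen to match the network.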

The breakthrough was the math for calculating how to change each weight to minimize the error across the training set. Another important point is that the algorithm converges: it produces a set of weights that generates a reasonably low error. The algorithm does not find the absolute minimum error; instead, it settles into a local minimum. This means training a neural network with back propagation is not exact, and repeating the same experiment does not always yield the same answer. The starting weights often determine which local minimum is found. Because we want to find the best neural network, we start with a random set of weights. If you try enough different starting sets, a near-optimal minimum eventually will be found.
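The weight-update math and the role of random starting weights can be seen in a tiny 2-2-1 network trained on the exclusive-or table. This is a sketch of plain textbook back propagation with a squared-error cost and sigmoid units; the learning rate, epoch count, and number of restarts are arbitrary assumptions, and it is not the algorithm used by any particular commercial product.

```python
import math
import random

# Exclusive-or training set, coding T as 1.0 and F as 0.0.
XOR_CASES = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(seed, hidden=2, epochs=4000, lr=0.5):
    """Train a sigmoid network on XOR with online back propagation and
    return the final sum of squared errors over the training set."""
    rng = random.Random(seed)
    # Random starting weights; index 2 of each hidden row and the last
    # output weight act as biases.
    w_hidden = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(hidden)]
    w_out = [rng.uniform(-1.0, 1.0) for _ in range(hidden + 1)]

    def forward(x1, x2):
        h = [sigmoid(w[0] * x1 + w[1] * x2 + w[2]) for w in w_hidden]
        y = sigmoid(sum(w_out[j] * h[j] for j in range(hidden)) + w_out[-1])
        return h, y

    for _ in range(epochs):
        for (x1, x2), target in XOR_CASES:
            h, y = forward(x1, x2)
            # Output error times the sigmoid's slope y * (1 - y).
            delta_out = (target - y) * y * (1.0 - y)
            # Propagate the error back through the not-yet-updated output weights.
            delta_h = [delta_out * w_out[j] * h[j] * (1.0 - h[j]) for j in range(hidden)]
            # Gradient-descent updates that reduce the squared error.
            for j in range(hidden):
                w_out[j] += lr * delta_out * h[j]
                w_hidden[j][0] += lr * delta_h[j] * x1
                w_hidden[j][1] += lr * delta_h[j] * x2
                w_hidden[j][2] += lr * delta_h[j]
            w_out[-1] += lr * delta_out

    return sum((t - forward(x1, x2)[1]) ** 2 for (x1, x2), t in XOR_CASES)


# Different random starts may settle into different local minima,
# so keep the restart with the lowest training error.
errors = {seed: train_xor(seed) for seed in range(5)}
best_seed = min(errors, key=errors.get)
for seed, sse in sorted(errors.items()):
    print(f"seed {seed}: sum of squared errors = {sse:.4f}")
print("best seed:", best_seed)
```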

Back propagation had many issues, and better supervised learning algorithms for feed-forward networks have been developed over the years. These are the algorithms used in today’s neural network products.

THE FIRST BIG BOOM

Once it was believed that neural networks could solve non-linear problems, there was a great deal of excitement about the possibilities. People tried to use neural network technology in applications ranging from robotics to trading futures and equities.

The application of neural network technology to develop trading strategies was particularly appealing. In fact, this author’s introduction to trading in 1990 resulted from clients wanting to use the technology for trading. This initial boom saw a few successful applications of neural network technology in trading, but many large traders and institutional banks spent millions of dollars on research and came up with nothing.

Several problems explain why this boom failed. Engineers and neural network designers did not understand the trading domain. They treated the markets like a classic signal processing problem, which does not work because not all of the factors involved in the markets are understood, or even known. The market is not a simple time series forecasting problem: it contains components of mass psychology and is nonstationary, with characteristics that change over time.

Because not all of the factors that create price action in a given market are understood or known, different pieces of the modeling problem were being attacked separately. The pure engineers did not understand the market well enough to build good market models, while the traders did not understand how neural networks worked well enough to implement the models that made sense to them.

Everyone was also trying to develop neural networks that predicted price change or percent price change, and they wanted the network to be the heart of the trading strategy. An example would be a network that predicts the five-day percent change, with a strategy that buys if the prediction is above 0 and sells if it is below. The problem is that if the neural network in such a strategy ever fails, the system suffers catastrophic losses.

Those of us who knew better realized that such a fragile component as the neural network could not be put in such a critical position. This is why a good rule-based approach needs to be developed first, with the network used to enhance it. Then, if the network fails, system performance should be similar to that of the original system before the enhancement.

NEW PROBLEMS EMERGE

After the large institutional traders spent millions (and often lost millions more) on the neural gurus, they generally gave up on the technology. Until neural network vendors developed specialized neural network trading products, there was little interest in neural networks in trading.

However, even then the big money was not chasing neural networks. The technology became a fancy trading toy for individual traders to play with, and the market for it devolved. Some vendors sold trained neural networks but did not include them in a system or show backtested results. Results were often published based on what the vendors called “out-of-sample” data. While true out-of-sample data is critical, careless use renders it invalid. For example, once it has been used in a test, it is no longer truly out-of-sample data; if the network is then retrained to find a different local minimum, that data effectively becomes part of the training set.

Neural network systems also have curve-fitting problems that are worse than those of rule-based systems, which makes true blind testing even more important.

Neural networks are not systems and often are not sold as part of a system, which makes evaluation difficult. In addition, where simple rule-based systems might work successfully for years without re-optimizing, neural networks need to be retrained periodically. To evaluate neural network performance properly, issues like these must be addressed in the evaluation process.

Neural networks that are not part of a system need to be evaluated with a walk-forward test, and the most accurate network is not always the most profitable. A network that finds a solution by shooting down the middle will miss the outliers. It may have “good numbers” but will be less useful in trading, because its losses on the moves it misses are large and will eat up its other profits.
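A toy calculation shows how the more accurate network can be the less profitable one. The return series and both sets of predictions below are invented purely for illustration, and “accuracy” here means directional hit rate, which is only one of several measures a vendor might quote.

```python
def hit_rate(predictions, actual):
    """Fraction of cases where the predicted direction matches the actual move."""
    hits = sum((p > 0) == (a > 0) for p, a in zip(predictions, actual))
    return hits / len(actual)


def sign_following_pnl(predictions, actual):
    """Profit from going long when the prediction is positive, short otherwise."""
    return sum(a if p > 0 else -a for p, a in zip(predictions, actual))


# Hypothetical returns: four small moves and one large outlier.
actual = [0.1, -0.1, 0.1, -0.1, 5.0]

# Network A "shoots down the middle": right on the small moves, wrong on the outlier.
net_a = [0.05, -0.05, 0.05, -0.05, -0.02]
# Network B is wrong on the small moves but catches the big one.
net_b = [-0.2, 0.2, -0.2, 0.2, 3.0]

print(hit_rate(net_a, actual), round(sign_following_pnl(net_a, actual), 2))  # 0.8 -4.6
print(hit_rate(net_b, actual), round(sign_following_pnl(net_b, actual), 2))  # 0.2 4.6
```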

During the bear market from 2000 through 2003, many traders lost a lot of money. As a result, the trading industry did not have the resources to try neural networks again, even though new neural network tools existed and computing resources had become cheaper and faster. Today, however, the futures and equity markets have become more difficult to trade, and now is the time to be looking for an edge.

Now is the time to revisit neural networks.

SKILLS REQUIRED

When building a trading system that uses neural networks, it’s important to build the system without the neural network first. Once something is found that works, neural networks can be used to improve it.

Neural networks are really a type of regression or pattern recognition software, so you need to define what you want them to do in very narrow terms. For example, if we wanted to use neural networks to identify chart patterns, we would need a different neural network for each pattern.

The system we start with might be based on a technical indicator such as the moving average convergence divergence (MACD). If the system made money with the standard indicator, we would try to create a forward-looking MACD using a neural network and plug it into the system.
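As a sketch of what “forward-looking MACD” could mean as a training target, the code below computes the standard MACD line (12-period EMA minus 26-period EMA) and pairs each bar with the MACD value a few bars later. Seeding the EMA with the first close, the five-bar horizon, and using today's MACD as the only input are all assumptions for illustration; a real network would use inputs chosen as described in the next section.

```python
def ema(values, period):
    """Exponential moving average, seeded with the first value (an assumed convention)."""
    alpha = 2.0 / (period + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1.0 - alpha) * out[-1])
    return out


def macd_line(closes, fast=12, slow=26):
    """MACD line: fast EMA minus slow EMA of closing prices."""
    return [f - s for f, s in zip(ema(closes, fast), ema(closes, slow))]


def forward_macd_cases(closes, horizon=5):
    """Pair each bar's MACD with the MACD `horizon` bars later.

    The future value is the training target for a forward-looking MACD network.
    The last `horizon` bars have no known future yet, so they are left out.
    """
    line = macd_line(closes)
    return [(line[t], line[t + horizon]) for t in range(len(line) - horizon)]


closes = [float(c) for c in range(1, 41)]  # hypothetical steadily rising prices
cases = forward_macd_cases(closes, horizon=5)
print(len(cases))  # 35 (input, target) pairs from 40 bars
```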

After you have your core system, the first step in designing a neural network is to decide what you want to predict and then evaluate the best way to accomplish that prediction with a neural network. Neural networks are not magic; they are complex multiple regression machines. You cannot ask a neural network to do too much, such as directly predicting tomorrow’s low price. That prediction would not be useful even if the network’s error were only 0.5%, because an error of that size is still too large relative to the typical day-to-day change in the low. It is better to predict how tomorrow’s low differs from today’s low, but even the raw difference is not the best target. Instead, have the neural network predict a normalized value that represents this difference, such as tomorrow’s low/today’s low.

Then, multiply the predicted ratio by today’s low to get a forecast of tomorrow’s low. Neural networks like to predict smooth targets, so if possible, figure out how to use a smoothed output. Neural networks also work better if the prediction is broken up and the problem is simplified. For example, create multiple networks and switch between them based on rules: build one neural network to use when prices are above their 50-day average and another for when prices are below their 50-day average.
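The normalized target, the conversion back to a price, and the rule-based switch between networks can be sketched as below. `above_net` and `below_net` are hypothetical placeholders for two separately trained networks, and smoothing of the target is left out to keep the example short.

```python
def ratio_targets(lows):
    """Target for day t: tomorrow's low divided by today's low."""
    return [lows[t + 1] / lows[t] for t in range(len(lows) - 1)]


def forecast_low(predicted_ratio, todays_low):
    """Convert the network's predicted ratio back into a price for tomorrow's low."""
    return predicted_ratio * todays_low


def simple_average(values, period=50):
    """Average of the last `period` values (the 50-day average by default)."""
    window = values[-period:]
    return sum(window) / len(window)


def choose_network(closes, above_net, below_net, period=50):
    """Route today's case to the network trained for the current regime.

    `above_net` and `below_net` are hypothetical stand-ins for two trained networks.
    """
    return above_net if closes[-1] > simple_average(closes, period) else below_net


print(ratio_targets([100.0, 101.0]))  # [1.01]
print(forecast_low(1.01, 100.0))      # about 101.0
```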

SELECT PREDICTIVE INPUTS

The next step after deciding what you want to predict is developing the inputs. Find and select inputs that you believe to be predictive, either through domain expertise or through research. Intermediate calculations from technical indicators are often valuable.

Keep in mind that indicator values are mapped to the range of the activation function, for example -1 to 1 for the hyperbolic tangent. If the relative strength index (RSI) is used as an input to the network and our trading cases have a maximum value of 90 and a minimum value of 12, those values would be mapped to the extremes: 12 to -1 and 90 to 1. The distribution of cases across different parts of that range can also affect results.
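The RSI example can be checked with a short sketch: the training cases set the minimum and maximum, and those become -1 and 1 even though RSI itself runs from 0 to 100. Clipping new values that fall outside the training range is an assumption added here; it is one common choice, not something the method requires.

```python
def fit_range(values):
    """Record the minimum and maximum seen in the training cases."""
    return min(values), max(values)


def to_tanh_range(value, lo, hi):
    """Map value from [lo, hi] onto [-1, 1], clipping anything outside the training range."""
    scaled = -1.0 + 2.0 * (value - lo) / (hi - lo)
    return max(-1.0, min(1.0, scaled))


training_rsi = [12.0, 35.0, 51.0, 68.0, 90.0]  # hypothetical RSI readings
lo, hi = fit_range(training_rsi)                # (12.0, 90.0)

print(to_tanh_range(12.0, lo, hi))  # -1.0
print(to_tanh_range(90.0, lo, hi))  # 1.0
print(to_tanh_range(51.0, lo, hi))  # 0.0
print(to_tanh_range(95.0, lo, hi))  # 1.0 (clipped)
```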

Domain expertise is a good place to start developing inputs, and scatter plots are a good tool for studying a given relationship. If a scatter plot shows a non-linear relationship, that is not the concern it would be with standard multiple regression analysis, because neural networks handle non-linear relationships quite well. Once you have selected inputs, add them to the model gradually and make sure the inputs are not highly correlated with one another.
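One way to add inputs gradually while screening out highly correlated ones is a greedy pass over the candidates, keeping each only if its Pearson correlation with every input already kept stays below a cutoff. The 0.9 cutoff and the input names are hypothetical, and a constant-valued input would need special handling because its correlation is undefined.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def add_inputs_gradually(candidates, max_corr=0.9):
    """Keep candidates in order, skipping any highly correlated with one already kept.

    candidates: list of (name, values) pairs in the order you want to try them.
    """
    kept = []
    for name, values in candidates:
        if all(abs(pearson(values, v)) < max_corr for _, v in kept):
            kept.append((name, values))
    return [name for name, _ in kept]


candidates = [
    ("momentum_10", [1.0, 2.0, 3.0, 4.0, 5.0]),           # hypothetical inputs
    ("momentum_10_doubled", [2.0, 4.0, 6.0, 8.0, 10.0]),  # redundant copy
    ("range_ratio", [5.0, 3.0, 4.0, 1.0, 2.0]),
]
print(add_inputs_gradually(candidates))  # ['momentum_10', 'range_ratio']
```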

When using a neural network, you need a training set and an out-of-sample testing set. However, the testing set can become contaminated if it is used over repeated training iterations. One solution is to test each network structure only once, using a walk-forward, out-of-sample testing methodology whose combined out-of-sample periods cover nearly all of the data. If a network is not well designed, it will be unstable when it is trading; when networks are judged with a walk-forward approach, unstable configurations will not produce good results across the data set.
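A walk-forward schedule can be generated with a few lines of code. This sketch uses a rolling (fixed-length) training window, which is an assumption; an anchored window that always starts at the first case is another common choice. Each test window is used once, and together the test windows cover every case after the first training window.

```python
def walk_forward_windows(n_cases, train_size, test_size):
    """Yield (train_range, test_range) index pairs for a rolling walk-forward test."""
    start = 0
    while start + train_size < n_cases:
        train = range(start, start + train_size)
        test = range(start + train_size, min(start + train_size + test_size, n_cases))
        yield train, test
        start += test_size  # roll forward so test windows never overlap


for train, test in walk_forward_windows(10, train_size=4, test_size=2):
    # Train on `train` here, then evaluate once on `test` and record the results.
    print(list(train), list(test))
```

Stitching the test-window results together gives one long out-of-sample record for judging a network structure.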