Neural Networks Made Easy (Part 95): Reducing Memory Consumption in Transformer Models

The introduction of the Transformer architecture in 2017 led to the emergence of Large Language Models (LLMs), which achieve strong results on natural language processing problems. The advantages of Self-Attention were soon adopted by researchers in virtually every area of machine learning.

However, due to its autoregressive nature, the Transformer Decoder is limited by the memory bandwidth used to load and store the Key and Value entities at each time step (known as KV caching). Since this cache scales linearly with model size, batch size, and context length, it can even exceed the memory usage of the model weights.
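To make the linear scaling concrete, here is a minimal sketch of a back-of-the-envelope KV cache estimate. It is not taken from the article: the function name `kv_cache_bytes` and all model dimensions below are hypothetical, chosen only for illustration, and the formula assumes a standard multi-head attention Decoder that stores one Key and one Value tensor per layer.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_elem=2):
    """Rough KV cache size in bytes (hypothetical helper, illustration only).

    The leading factor 2 accounts for storing both Key and Value per layer.
    bytes_per_elem=2 assumes 16-bit floating-point storage.
    """
    return (2 * n_layers * n_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Assumed example dimensions, not the configuration of any specific model.
base = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128,
                      seq_len=4096, batch_size=1)
doubled_batch = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128,
                               seq_len=4096, batch_size=2)
doubled_context = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128,
                                 seq_len=8192, batch_size=1)

print(f"base cache:        {base / 2**30:.2f} GiB")
print(f"batch x2:          {doubled_batch / 2**30:.2f} GiB")
print(f"context length x2: {doubled_context / 2**30:.2f} GiB")
```

Under these assumptions, doubling either the batch size or the context length doubles the cache, which is why long contexts and large batches can push the cache past the size of the weights themselves.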

Neural Network in Practice: Pseudoinverse (I)

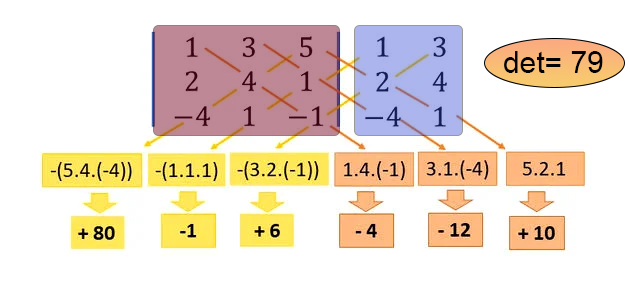

In the previous article, "Neural network in practice: Straight Line Function", we discussed how algebraic equations can be used to determine part of the information we are looking for. This is necessary in order to formulate an equation, which in our particular case is the equation of a straight line, since our small set of data can actually be expressed as a straight line. Material explaining how neural networks work is not easy to present without knowing each reader's level of mathematical knowledge.

Neural Networks Made Easy (Part 96): Multi-Scale Feature Extraction (MSFformer)

Neural Networks Made Easy (Part 97): Training Models With MSFformer

Neural Networks in Trading: Piecewise Linear Representation of Time Series

Neural Networks in Trading: Dual-Attention-Based Trend Prediction Model

Neural Network in Practice: Pseudoinverse (II)

Neural Networks in Trading: Using Language Models for Time Series Forecasting

Neural Networks in Trading: Practical Results of the TEMPO Method

Neural Network in Practice: Sketching a Neuron

In the previous article, "Neural Network in Practice: Pseudoinverse (II)", I discussed the importance of dedicated computational systems and the reasons behind their development. In this new article on neural networks, we will delve deeper into the subject. Creating material for this stage is no simple task: the topic appears straightforward, yet it often causes significant confusion, which makes it challenging to explain.

What will we cover at this stage? In this series, I aim to demonstrate how a neural network learns. So far, we have explored how a neural network establishes correlations between different data points.