FX Data Mining with Apache Spark

Intro

This post describes our experience with large-scale offline Data Mining using several open source technologies. Every week we process a batch of 1TB of historical data covering the past 10 years, with more than 400 features. We present the old flow and the improvements gained by moving to Apache Spark and S3.

Problem

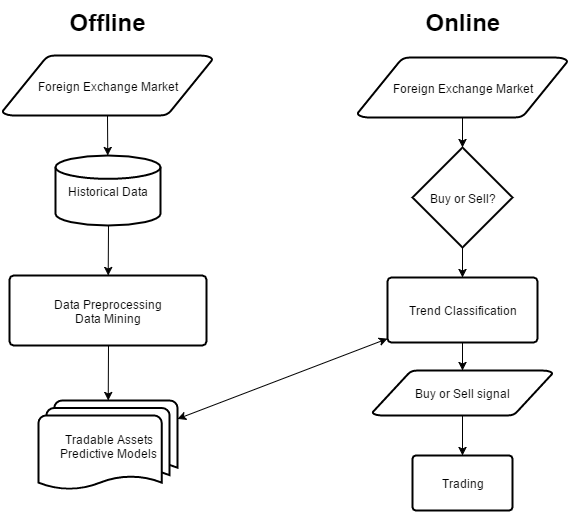

The problem we have been dealing with is a weekly batch ETL process that trains our back-end models. We train classification models and use them in real-time trading for trend classification and position entry. Training normally runs at the end of a trading week, but when the markets become unusually volatile we rerun it to get fresh models for decision making.

We have been using WEKA (Waikato Environment for Knowledge Analysis) as our main source of Machine Learning and Data Mining algorithms. WEKA has everything we need: filters, classifiers, ensembles and feature selectors, all available through a Java API.

We began with a simple Java application to filter, clean, transform and train classification models. The problem was that the application ran on a single CPU, and WEKA was not designed to be used in multi-threaded environments. Processing 1TB of historical data every week, with an average dataset size of 500MB, meant the application ran for days, and sometimes we even had to build models before the trading week had ended.

Offline batch ETL and online trading engine flows

Solution

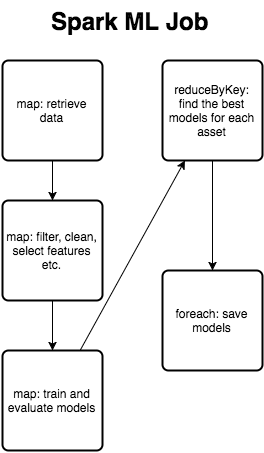

We decided to use Apache Spark for the job. Moreover, instead of local hard drives we moved the data to S3 in order to avoid storage limitations. Spark is a distributed computing engine that generalizes the classic MapReduce model. It improves running time by keeping intermediate data in memory and by splitting the work into small computation units called tasks. Spark's key data structure is the RDD (Resilient Distributed Dataset), which is partitioned across multiple cluster nodes so that each partition is processed by a separate task. As long as your computations are independent, you can use the full power of your cluster by processing the data in parallel.
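To illustrate the point about independent computations, here is a minimal single-machine sketch in plain Java. It is not Spark code: a local thread pool stands in for the cluster, and `processDataset` is a hypothetical placeholder for the per-dataset work (filter, clean, transform, train). The class and method names are assumptions made for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class IndependentTasksSketch {

    // Hypothetical stand-in for the per-dataset work (filter, clean, transform, train).
    // It depends only on its own input and touches no shared state.
    static String processDataset(String datasetName) {
        return "model-for-" + datasetName;
    }

    // Submits one task per dataset to a local thread pool and
    // returns the results in the same order as the inputs.
    static List<String> processAll(List<String> datasetNames, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<CompletableFuture<String>> futures = new ArrayList<>();
            for (String name : datasetNames) {
                futures.add(CompletableFuture.supplyAsync(() -> processDataset(name), pool));
            }
            List<String> results = new ArrayList<>();
            for (CompletableFuture<String> future : futures) {
                results.add(future.join()); // waits until that task has finished
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Illustrative inputs; any list of independent datasets works the same way.
        List<String> datasets = List.of("M5", "M15", "M30", "H1", "H4", "D1");
        System.out.println(processAll(datasets, 4));
    }
}
```

Because each task reads only its own input and shares no mutable state, the tasks can run in any order and on any worker. That independence is the property a Spark job relies on when the engine, rather than a thread pool, schedules tasks across cluster nodes.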

Apache Spark provides fast Machine Learning functionality through an additional library called MLlib. The library provides encoders, classifiers and ensembles. Nevertheless, for our use case WEKA gives us more flexibility.

Results

We trade on 6 chart periods: M5, M15, M30, H1, H4 and D1, so we run a separate batch Data Mining process for each period. The running time of the simple Java application ranged from 1 hour to 22 hours on a standard i5 machine with 12GB of memory. The Spark job runs on 32 Xeon cores with 60GB of memory, and its running time ranges from 10 minutes to 2 hours. Comparing the ends of the two ranges, that is roughly 6 times faster at the short end and 11 times faster at the long end, which is a huge improvement.

Check out the following links for more info:

WEKA: http://www.cs.waikato.ac.nz/ml/weka/

Apache Spark: http://spark.apache.org/

Algonell: http://www.algonell.com/