Researchers hope deep learning algorithms can run on FPGAs and supercomputers

Machine learning has made big advances in the past few years, thanks in no small part to new methods for scaling out compute-intensive workloads across more cores. A batch of newly funded National Science Foundation research projects suggests we might be seeing just the tip of the iceberg in terms of what's possible, as researchers try to scale techniques such as deep learning across more computers and new types of processors.

One particularly interesting project, being carried out by a team at the State University of New York at Stony Brook, aims to prove that field-programmable gate arrays, or FPGAs, can run deep learning algorithms faster and more efficiently than graphics processing units, or GPUs. This flies in the face of current conventional wisdom, which holds that GPUs, with their thousands of cores per device, are the default choice for speeding up these power-hungry models.

According to the project abstract, “The [principal investigators] anticipate demonstrating that the slowest portion of the algorithm on the GPU will achieve significant speedup on the FPGA, arising from the efficient support of irregular fine-grain parallelism. Meanwhile, the fastest portion of the algorithm on the GPU is anticipated to run with comparable performance on the FPGA, but at dramatically lower power consumption.” Still, the idea of running these kinds of models on hardware other than GPUs isn't entirely new.

IBM, for example, recently made a splash with a new brain-inspired chip that it claims could be ideal for running neural networks and other cognitively inspired workloads. And in July, Microsoft Research demonstrated its Project Adam work, which reworked a popular deep learning technique to run on everyday Intel CPUs.

Because of their customizable nature, FPGAs have been picking up some momentum of their own, too. In June, Microsoft explained how it's speeding up Bing search by offloading certain parts of the process to FPGAs. Later that month, at Gigaom's Structure conference, Intel announced a forthcoming hybrid chip architecture that will pair an FPGA with a CPU (the two will actually share memory), primarily targeting specialized big data workloads like those Microsoft runs for Bing.

FPGAs aren't the only new infrastructure option for deep learning models, however. The NSF has also funded a project from a New York University researcher to test deep learning algorithms, along with other workloads, on networks that use Ethernet-based remote direct memory access (RDMA). Used most commonly in supercomputers but now making its way into some enterprise systems, RDMA speeds up data transfer between computers by placing messages directly into a remote machine's memory, bypassing the CPU, the operating system, and other components that add latency to the process.

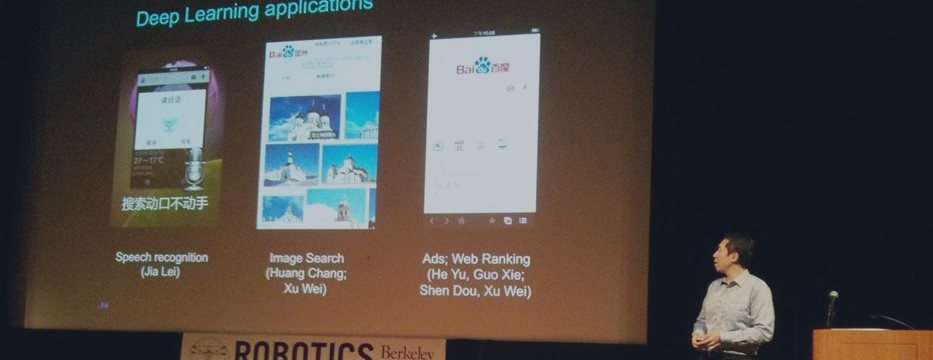

Speaking of supercomputers, another new NSF-funded project, this one led by machine learning expert Andrew Ng of Stanford (and Baidu and Coursera) and supercomputing gurus Jack Dongarra of the University of Tennessee and Geoffrey Fox of Indiana University, aims to make deep learning models programmable in Python and to port them to supercomputers and scale-out cloud systems. The project, which received nearly $1 million in NSF grants, is called Rapid Python Deep Learning Infrastructure, or RaPyDLI.

According to its abstract: “RaPyDLI will be built as a set of open source modules that can be accessed from a Python user interface but executed interoperably in a C/C++ or Java environment on the largest supercomputers or clouds with interactive analysis and visualization. RaPyDLI will support GPU accelerators and Intel Phi coprocessors and a broad range of storage approaches including files, NoSQL, HDFS and databases.”

All the work being done to make deep learning algorithms more accessible and to improve their performance (and these three projects are just a small fraction of it) will be critical if the approach is ever going to make its way into commercial settings beyond giant web companies, or into research centers and national labs using computers to tackle truly complicated problems.

This broader future for deep learning, and artificial intelligence in general, is the theme of our upcoming Gigaom meetup, which takes place Sept. 17 in San Francisco. Ng will be one of the presenters, along with experts from Google and Microsoft Research, and several researchers and entrepreneurs trying to streamline the process of putting these techniques to work.