
The underlying idea and the simplest version of the algorithm are described in the article "Random decision forest in reinforcement learning".

This library extends that functionality: it lets you create any number of agents.

In addition, it uses variations of the Group Method of Data Handling (GMDH).
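The exact GMDH variations used by the library are not described on this page. For orientation only, here is the textbook GMDH building block (Ivakhnenko's partial polynomial), the group method of data handling being the method named above. Each candidate model combines one pair of inputs $x_i, x_j$ with a quadratic:

$$\hat{y}_{ij} = a_0 + a_1 x_i + a_2 x_j + a_3 x_i x_j + a_4 x_i^2 + a_5 x_j^2$$

In the classical scheme the coefficients $a_0,\dots,a_5$ are fitted by least squares on a training subset, candidates are ranked by their error on a separate checking subset, and the best ones become the inputs of the next layer. How closely the library follows this scheme is an assumption, not something stated in the source.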

Using the library:

    #include <RL gmdh.mqh>

    CRLAgents *ag1=new CRLAgents("RlExp1iter",1,100,50,regularize,learn); // creates 1 RL agent with 100 inputs (predictor values) and 50 trees
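The call above relies on `regularize` and `learn` being defined elsewhere in the EA, and an object created with `new` must be deleted explicitly. The following is a minimal sketch of one way to manage the object's lifetime in `OnInit`/`OnDeinit`. The input types and defaults are **assumptions** (the source only shows the names being passed to the constructor), and the argument meanings beyond those given in the comment above are not documented here.

```mql5
#include <RL gmdh.mqh>

//--- ASSUMED inputs: only the names appear in the original; types and defaults are guesses
input double regularize=0.6;   // hypothetical default value
input bool   learn=true;       // true for the training pass, false afterwards

CRLAgents *ag1=NULL;           // created in OnInit, deleted in OnDeinit

int OnInit()
  {
   ag1=new CRLAgents("RlExp1iter",1,100,50,regularize,learn);
   if(ag1==NULL)
      return(INIT_FAILED);     // allocation failed: stop the EA
   return(INIT_SUCCEEDED);
  }

void OnDeinit(const int reason)
  {
   //--- objects created with new are not freed automatically
   if(CheckPointer(ag1)==POINTER_DYNAMIC)
      delete ag1;
  }
```

Creating the object in `OnInit` instead of a global initializer makes it possible to report a failed allocation to the terminal via `INIT_FAILED`.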

Example of filling the agents' input vectors with normalized close prices:

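    void calcSignal()
      {
       sig1=0;                                      // reset the previous signal
       double arr[];
       if(CopyClose(NULL,0,1,10000,arr)<=0)         // closes of up to 10000 completed bars
          return;                                   // no data yet: keep sig1=0
       ArraySetAsSeries(arr,true);                  // index 0 = most recent completed bar
       normalizeArrays(arr);                        // normalize the price series
       for(int i=0;i<ArraySize(ag1.agent);i++)      // give every agent the same inputs
         {
          ArrayCopy(ag1.agent[i].inpVector,arr,0,0,ArraySize(ag1.agent[i].inpVector));
         }
       sig1=ag1.getTradeSignal();                   // query the agents for a trade signal
      }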
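The page does not say how `normalizeArrays()` scales the prices. As an illustration only, here is a sketch of one common choice, min-max scaling into [0, 1]. `NormalizeMinMax` is a **hypothetical** helper, not part of the library, and the library's own normalization may differ.

```mql5
//--- HYPOTHETICAL helper (not the library's normalizeArrays):
//--- min-max scaling of every element into the range [0, 1]
void NormalizeMinMax(double &arr[])
  {
   int n=ArraySize(arr);
   if(n==0)
      return;                                  // nothing to scale
   //--- ArrayMinimum/ArrayMaximum return the index of the extreme element
   double lo=arr[ArrayMinimum(arr)];
   double hi=arr[ArrayMaximum(arr)];
   double range=hi-lo;
   for(int i=0;i<n;i++)
      arr[i]=(range>0.0) ? (arr[i]-lo)/range : 0.5; // a flat series maps to the midpoint
  }
```

Such a helper would be called on `arr` after `ArraySetAsSeries`; because it scales each element independently of its position, the series flag does not affect the result. Mapping a flat series to 0.5 is an arbitrary design choice that avoids division by zero.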

Training is performed in the Strategy Tester in a single pass with the parameter learn=true. After training, set it to false.

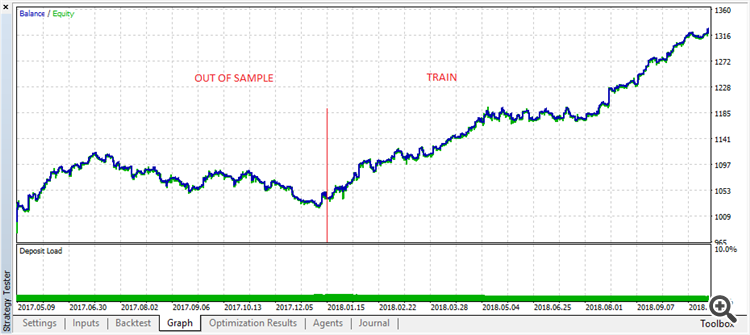

The trained "RL gmdh trader" EA running on the training and test samples.

Translated from Russian by MetaQuotes Ltd.

Original code: https://www.mql5.com/ru/code/22915

Contrarian trade MA

Works with the iMA (Moving Average) indicator and the OHLC prices of the W1 timeframe.

Exp_XFisher_org_v1

The Exp_XFisher_org_v1 Expert Advisor trades on signals from the XFisher_org_v1 oscillator.