What to feed to the input of the neural network? Your ideas... - page 68


How did you do it before? Did you feed absolute prices into training, like 1.14241, 1.14248?

What you described are relative prices. You can take the difference (delta) between the current price and other bars, or their ratio, as you described.
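A minimal Python sketch of both variants. It assumes `close` is a plain list of closing prices ordered oldest first; the function name and the `lookback` parameter are hypothetical, not from any library:

```python
def relative_features(close, lookback):
    """Deltas and ratios of the current close versus the previous `lookback` closes.

    close: list of closing prices, oldest first (assumption).
    lookback: how many earlier bars to compare against (must be < len(close)).
    """
    current = close[-1]
    past = close[-1 - lookback:-1]            # the `lookback` bars before the current one
    deltas = [current - p for p in past]      # difference in price units
    ratios = [current / p for p in past]      # dimensionless, close to 1.0
    return deltas, ratios


deltas, ratios = relative_features([1.14241, 1.14248, 1.14250], lookback=2)
print(deltas, ratios)
```

Either way the network sees how far price has moved, not the absolute quote level.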

I have always trained on deltas. The result is the same...

The input is not the strength of the signal

Its strength is given to it by the weights. But the input number itself a priori already(!) carries a strength element: its quantitative magnitude. I raised this problem of understanding input data earlier.

By feeding a number to the input, we endow the input with a strength value from the very start. This is a mistake, because the whole point of our NS is precisely to find the strength factor and distribute it among the inputs.

Feeding 0.9 to the input is equivalent to declaring the signal extremely strong.

And here are legitimate questions:

1) Why is it strong?

2) Where is it strong? Buy? Sell? Hold? Close Buy? Close Sell?

So a numerical value at the input is already a finished piece of "post-processing" that assigns the signal strength in advance. But it prevents the NS from working with the data. This is the very "noise" whose essence is "interference", "blurring", "degradation", "encryption".

This is like random initialisation of the weights before the first training epoch. For weights that is necessary, but for inputs it is deliberately introduced noise.

And then the NS is tasked with "cleaning up" the input data instead of learning from it.

Now let's move on to the destructive role of numerical data:

When the number 0.9 is given as input, what does it mean? "Actively BUY"? "Strongly BUY"?

From the point of view of any oscillator with a range from 0 to 1, it is either strictly BUY or strictly SELL, depending on... the trader! Only he "endows" oscillators with magic signals when he builds his trading strategy.

In practice, these numbers mean absolutely nothing. Any programmer/coder will run through the whole history and see that these signals are random.

When the number 0.9 is fed in, what happens? The NS can significantly "weaken" it with a weight.

What does this entail? Given that weights in all architectures are static, once the weight strongly attenuates this input, numbers below 0.9 stop doing any work in the neural network.

They simply will not affect the result, because they shift the total sum in the adder only insignificantly.

Just imagine the NS has set a weight of 0.1 for an input whose maximum is 1. If the input is 0.9, the product becomes 0.09, and if 0.1 comes in, it becomes 0.01.

Literally the ENTIRE range below 1 is simply killed.
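The arithmetic above, as a minimal Python sketch of a single neuron's adder with static weights. The weights `[0.1, 1.0]` and the second input fixed at 0.5 are illustrative assumptions: sweeping the attenuated input across its range moves the total sum by only about 0.08.

```python
def neuron_sum(inputs, weights, bias=0.0):
    """Adder of a neuron with static weights: sum(w_i * x_i) + bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


weights = [0.1, 1.0]  # hypothetical: input 0 attenuated, input 1 left as is
for x0 in (0.1, 0.5, 0.9):
    contribution = weights[0] * x0          # what input 0 actually adds
    total = neuron_sum([x0, 0.5], weights)  # input 1 held at 0.5
    print(f"x0={x0}: contribution {contribution:.2f}, total {total:.2f}")
```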

And what if the "workability" of a given input number lies in the range from 0.1 to 0.5, and, when it falls into this zone, the number needs to be "strengthened"(!) for the overall architecture and the further calculations in the next layers?

It won't work: the number 0.9 will barge in and simply "break" the whole scheme. After all, it has more influence on the NS decision because of its constant quantitative dominance.
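A quick way to check this claim, sketched in Python under the assumption of a single neuron `sigmoid(w*x + b)` with static `w` and `b` (the search grid is arbitrary). Because such a unit is monotonic in `x`, a mid-band value like 0.3 can never score strictly above both 0.1 and 0.9:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def single_unit(x, w, b):
    """One neuron with a static weight w and bias b."""
    return sigmoid(w * x + b)


def middle_wins(w, b, low=0.1, mid=0.3, high=0.9):
    """True if the mid-band input scores strictly above both neighbours."""
    f_low, f_mid, f_high = (single_unit(x, w, b) for x in (low, mid, high))
    return f_mid > f_low and f_mid > f_high


# Try weights and biases from -10 to 10 in steps of 0.5.
grid = [i / 2 for i in range(-20, 21)]
found = any(middle_wins(w, b) for w in grid for b in grid)
print("a single static-weight unit can favour the 0.1-0.5 band:", found)
```

With a positive weight 0.9 always wins; with a negative one 0.1 always wins. Favouring a middle band needs more than one unit or a non-linear encoding of the input.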

Even in the XOR problem, there is no 2 and no 0.5 at the input. There are only ones and zeros. As a result, the input reads as "yes, there is a signal" (1) or "no signal" (0). Each input in the problem always receives the same kind of signal.
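For reference, a hand-wired XOR network in Python. The weights and thresholds are set by hand rather than trained, and the step activation is an assumption; the point is only that every input is a 0/1 presence flag:

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0


def xor_net(x1, x2):
    h_or = step(x1 + x2 - 0.5)         # hidden unit: fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)        # hidden unit: fires only if both inputs are 1
    return step(h_or - h_and - 0.5)    # output: OR but not AND


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```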

If we translate this approach into NS for forex, we get the following: if the number 0.9 comes in, we feed it to the first input; if the number 0.1 comes in, we feed it to the second input. Otherwise, 0.
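One possible reading of this routing, as a Python sketch. The levels (0.9, 0.1), the tolerance and the `as_flag` switch (feed 1 instead of the value itself, XOR-style) are all hypothetical choices for illustration:

```python
def route_to_inputs(value, levels=(0.9, 0.1), tol=0.05, as_flag=False):
    """Hypothetical encoder: one dedicated network input per level of interest.

    The input whose level lies within `tol` of `value` receives the value
    (or 1.0 if as_flag=True); all other inputs receive 0.
    """
    inputs = [0.0] * len(levels)
    for i, level in enumerate(levels):
        if abs(value - level) <= tol:
            inputs[i] = 1.0 if as_flag else value
            break
    return inputs


print(route_to_inputs(0.9))   # goes to the first input
print(route_to_inputs(0.1))   # goes to the second input
print(route_to_inputs(0.5))   # matches no level: all zeros
```

Each input now has a fixed meaning, so its weight no longer has to serve values from very different zones.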

Please note: we get dynamic weights, i.e. at the initial stage "noise" is filtered out. If 0.9 is a "bad" number, we multiply it by, say, 0.0001 to hide it at the very bottom, so that its excessive strength factor does not interfere with learning.

And if the number is 0.1, we multiply it by the maximum weight of 1.0 to give the number maximum influence on the NS.

So there is something reasonable and promising in dynamic weights. IMHO.

It just looks reasonable somehow.
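A sketch of such a value-dependent ("dynamic") weight in Python, assuming the input range is split into bins and each bin has its own weight. The bin edges and weights are made up to mirror the example above (0.9 multiplied by 0.0001, 0.1 by 1.0); in practice they would come from optimisation. This is equivalent to routing the value into separate inputs, each with its own static weight.

```python
import bisect

# Hypothetical bins over [0, 1]: [0, 0.2), [0.2, 0.8), [0.8, 1]
EDGES = [0.2, 0.8]
# Hypothetical per-bin weights: values near 0.1 keep full strength,
# values near 0.9 are "hidden at the bottom"
BIN_WEIGHTS = [1.0, 0.5, 0.0001]


def dynamic_weight(x):
    """Weight selected by the bin that x falls into."""
    return BIN_WEIGHTS[bisect.bisect_right(EDGES, x)]


def filtered_input(x):
    """Input after the dynamic-weight filter."""
    return x * dynamic_weight(x)


for x in (0.1, 0.5, 0.9):
    print(x, dynamic_weight(x), filtered_input(x))
```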

As an example of a dynamic weight (filter):

5 inputs, all of them pass through the "filter", but that's all: I haven't connected an MLP after it yet, because I haven't fully figured out the problem of overfitting with dynamic weights. Everything is done with standard MT5 tools and the usual optimisation.

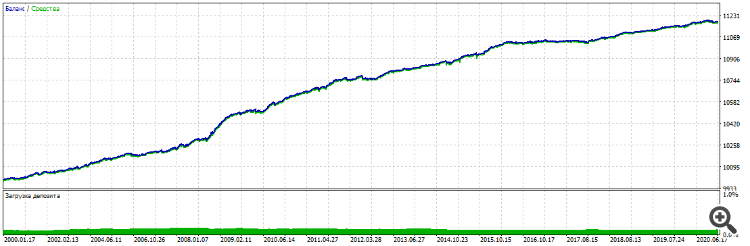

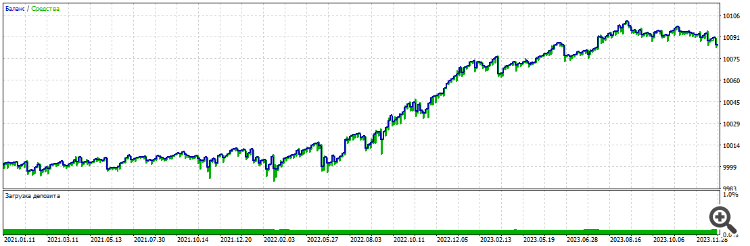

Filter Optimisation 2000-2021, EURUSD, H1:

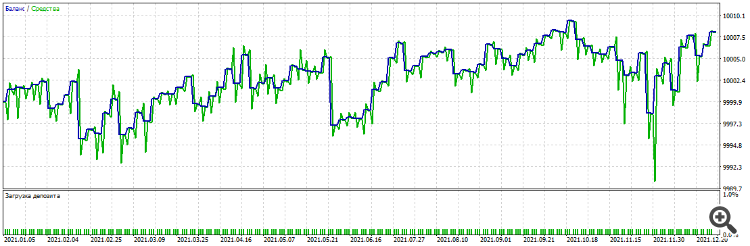

Forward first year 2021-2022

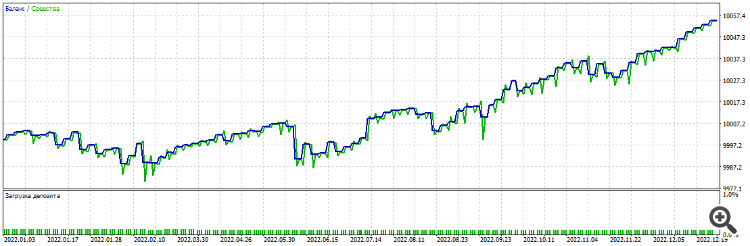

Forward second year 2022-2023

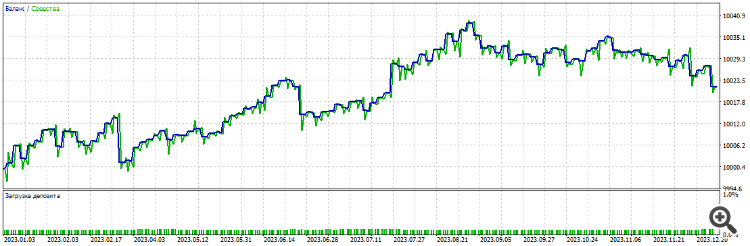

Forward third year 2023-2024

All three years of forward

UPD

So the point is that the number on the input is just the position of the indicator line, not the strength.

It is a building block of a pattern, qualitatively different from any other number.

And each such building block needs to be assigned a weight, so that together they organise the overall (larger) working pattern.

Any luck with NN and DL? Anybody here...

---

Apart from charts in Python and curve-fitted tests :-) At least something like "the Expert Advisor trades on demo and is in profit".

---

Or is there a feeling that this is a dead-end branch of evolution, and all the output of machine learning and neural networks goes into advertising, spam and "mutual_sending"?

it's a dead-end branch of evolution.

Kind of like 21st century coffee grounds.

What if the "workability" of a given input number lies in the range from 0.1 to 0.5, and, when it falls into this zone, the number needs to be "strengthened"(!) for the overall architecture and further calculations in the next layers?

It won't work: the number 0.9 will barge in and simply "break" the whole thing. After all, it has more influence on the NS decision because of its constant quantitative dominance.

With trees this is solved by splits: leaves with a predictor value < 0.1 or > 0.5 simply will not produce signals.
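What such splits amount to, written out by hand in Python. The thresholds 0.1 and 0.5 are taken from the example; a real tree would learn them from data:

```python
def tree_band_signal(x, lo=0.1, hi=0.5):
    """Hand-written equivalent of two splits on one predictor."""
    if x < lo:       # leaf below the first threshold: no signal
        return 0
    if x > hi:       # leaf above the second threshold: no signal
        return 0
    return 1         # leaf between the two thresholds: signal


for x in (0.05, 0.3, 0.9):
    print(x, tree_band_signal(x))
```

The large value 0.9 gets no special influence here: it simply lands in a "no signal" leaf.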

In an NS this is also possible if you use non-linear activation functions like the sigmoid. But I haven't worked with NS for a long time, so I can't say for sure. In principle they perform at the level of tree models, sometimes better, so they can also cut off what is not needed. I switched to trees because there you can understand why a particular decision was made.
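A sketch of how sigmoids can do the same cut-off, in Python: two hidden sigmoid units with steep slopes, one subtracted from the other, give roughly 1 inside [0.1, 0.5] and roughly 0 outside. The steepness `k = 50` is an arbitrary assumption, and the weights are set by hand rather than trained:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def band_unit(x, lo=0.1, hi=0.5, k=50.0):
    """Difference of two steep sigmoids: about 1 inside [lo, hi], about 0 outside."""
    rising = sigmoid(k * (x - lo))    # hidden unit 1: switches on above lo
    falling = sigmoid(k * (x - hi))   # hidden unit 2: switches on above hi
    return rising - falling           # output unit: weights +1 and -1


for x in (0.0, 0.3, 0.9):
    print(x, round(band_unit(x), 4))
```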

If it weren't for dumas, I'd be spending my time on the ML god Gizlyk right now :)

Kind of like 21st century coffee grounds

In general - yes, but it doesn't let go.

You sit there taking apart someone's manual trading system, and then: "Man, last time I didn't try this and that", and here we go again.


Has anyone got anything with NN and DL? At least someone here...

---

Well, except charts in Python and curve-fitted tests :-) At least something like "the EA trades on demo and is in profit".

---

Or is there a feeling that this is a dead-end branch of evolution, and all the output of machine learning and neural networks goes into advertising, spam and "mutual_sending"?

It trades roughly, yes, that works.

But smoothly enough to take it to the market: not yet.