Machine learning in trading: theory, models, practice and algo-trading - page 2474

https://www.mql5.com/ru/forum/231011

https://squeezemetrics.com/download/The_Implied_Order_Book.pdf

There is some truth in this, but I have checked it on my model: the main thing is to know what forward period we are counting on...

I don't really understand you, but that is my problem, I didn't study well. I look at trading algorithms a bit differently, more towards association rules and genetic search for rules or formulas, such as symbolic regression. But if you managed to make ordinary models work on new data, I would be very interested to hear about it...

Thanks for reading...

I like Maytrade too. I have watched his video probably 8 times, and there is not a single wasted word in it...

As for the "puppeteer", I think it is much simpler: the exchange lives off commission. If most participants want to buy, and their buy orders sit below the current price, it is profitable for the exchange to fill the buyers, because there are more of them and so it can earn more commission. So the price goes down, and vice versa with sellers...

How it does this, whether it simply prints the price, manipulates the order book or does something else, I do not know, but I think that is the essence...

That is why the price moves against participants' positions.

Yes, I have. The quality is not as good as on the training period, but part of it is always retained. In my research, with 10 years of history the forward period can last up to a year; on average the model keeps working with good enough quality for 2-3 months, at probably 60-70% of the training-period quality.

A neural network would give better results, but then you already need to add overfitting criteria: combine several quantities with something like weights, so that the amount of data carries a certain weight, then the quality of the final backtest (for example the mathematical expectation or the profit factor), and of course the complexity of the resulting algorithm (for example the total number of weights across all perceptrons). To achieve this in a neural network, the neuron types should be as varied as possible, and the number of layers and their composition should be arbitrary; then it is possible. Basically everybody uses neural networks with a fixed architecture, and for some reason they do not understand that the architecture must also be flexible: by destroying this flexibility they destroy the possibility of minimizing overfitting.

In general, of course, the same criteria can and should be applied to simple models too; then you get a good forward period. My model gives a couple of months of profit ahead, and the settings can be updated in one day. One of the main tricks is to take as much data as possible (10 years of history or more): in that case the search is for global patterns, which are based on the physics of the market and in most cases keep working for a long time.
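To make the idea of a combined overfitting criterion a bit more concrete, here is a minimal sketch. It is not the poster's actual formula: the function name `selection_score`, the logarithmic scaling and the three weights are all assumptions chosen for illustration. It only shows the general shape: reward backtest quality and the amount of data, penalize the number of parameters.

```python
import math

def selection_score(profit_factor, n_trades, n_params,
                    w_quality=1.0, w_data=0.5, w_complexity=0.5):
    """Hypothetical score: higher is better. Assumes all inputs are positive."""
    quality = math.log(profit_factor)    # 0 at break-even (profit factor 1)
    data = math.log10(n_trades)          # more trades, more trust in the result
    complexity = math.log10(n_params)    # more weights, more room to overfit
    return w_quality * quality + w_data * data - w_complexity * complexity

# Made-up candidates: the big network looks better on the backtest,
# but it pays a much larger complexity penalty.
candidates = {
    "small_model": dict(profit_factor=1.4, n_trades=2000, n_params=50),
    "big_network": dict(profit_factor=1.8, n_trades=2000, n_params=50_000),
}
best = max(candidates, key=lambda name: selection_score(**candidates[name]))
print(best)
```

With these particular weights the simpler model wins despite its lower profit factor. How the weights should really be set is exactly the open question in the post.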

You need powerful hardware for that. I have hit my hardware ceiling too, but there is a solution to this problem.

If you haven't read it yet, I'm sure you will like it very much; it has interesting ideas about criteria for model quality.

What I really need is servers :) but I have none. I have only just started working on this, and so far everything runs on a half-dead netbook DDD, so the calculations are long and painful. But there is no other option: you have to get computing power from somewhere and load it heavily, because in live operation you need to test at least 20-30 configurations, preferably a hundred, run a signal for each one and monitor it. You keep the most stable ones, those that survive natural selection, and then try to add more power. There is no other way, otherwise it is just a road to nowhere, as many have already written here... it really can drag on for many years without understanding where to dig. The alternative is a degree in forum sciences and chatter about the phases of the moon and their influence on the market, in the spirit of Prokopenko's programs ))). What is the solution, if it is not a secret?

The book I linked to describes this solution far more competently... I really recommend reading it...

In simple terms, the solution is elementary: split the problem. For example, cluster the data and train a separate model for each cluster.

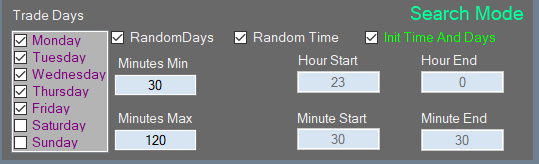
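A rough sketch of the cluster-then-train idea, under heavy assumptions: the single feature (volatility), the toy data, the tiny 1-D k-means and the "model" (just the mean target in each cluster) are placeholders invented for illustration. They are not taken from the book or from anyone's real system.

```python
from statistics import mean

def kmeans_1d(values, k=2, iterations=20):
    """Tiny 1-D k-means; returns (centroids, labels)."""
    ordered = sorted(values)
    # Spread the initial centroids across the sorted data.
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:                  # keep the old centroid if a cluster is empty
                centroids[c] = mean(members)
    return centroids, labels

def fit_per_cluster(features, targets, k=2):
    """Fit one trivial 'model' (the mean target) per cluster."""
    centroids, labels = kmeans_1d(features, k)
    models = {}
    for c in range(k):
        ys = [y for y, lab in zip(targets, labels) if lab == c]
        models[c] = mean(ys) if ys else 0.0
    return centroids, models

def predict(x, centroids, models):
    """Route a new sample to its nearest cluster and use that cluster's model."""
    cluster = min(range(len(centroids)), key=lambda c: abs(x - centroids[c]))
    return models[cluster]

# Toy data, made up: feature = volatility, target = next-bar return.
volatility = [0.1, 0.2, 0.15, 1.0, 1.1, 0.9]
next_return = [0.01, 0.02, 0.03, -0.05, -0.04, -0.06]
centroids, models = fit_per_cluster(volatility, next_return)
print(predict(0.12, centroids, models), predict(0.95, centroids, models))
```

Each per-cluster model only ever sees its own slice of the data, which is where the saving in training cost comes from.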

Or an even simpler example: there are 5 trading days in a week, so we train one model only on Mondays, a second only on Tuesdays, and so on. This reduces each training sample by a factor of 5, so that 10 years of history becomes 2 years of training data per model; the rest is a matter of imagination.
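The weekday split can be sketched in a few lines. The sample layout `(date, features, target)`, the synthetic data and the placeholder `train_mean_model` are assumptions for illustration; any real model would take the place of the mean.

```python
from collections import defaultdict
from datetime import date, timedelta

def split_by_weekday(samples):
    """Group (date, features, target) samples by weekday (0 = Monday .. 4 = Friday)."""
    buckets = defaultdict(list)
    for day, features, target in samples:
        if day.weekday() < 5:            # skip weekends
            buckets[day.weekday()].append((features, target))
    return buckets

def train_mean_model(bucket):
    """Placeholder 'model': the average target in the bucket."""
    return sum(target for _, target in bucket) / len(bucket)

# Synthetic daily samples for illustration only; 2024-01-01 is a Monday.
start = date(2024, 1, 1)
samples = [(start + timedelta(days=i), [float(i)], float(i % 7)) for i in range(70)]

buckets = split_by_weekday(samples)
models = {weekday: train_mean_model(bucket) for weekday, bucket in buckets.items()}
print({weekday: len(bucket) for weekday, bucket in buckets.items()})
```

Each of the five models is trained on roughly one fifth of the trading days, which is the reduction described above.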

I haven't read it, but I have already implemented all of that. I came to it for exactly the same reason: I needed to reduce the sample somehow while still being able to analyze deep into history. I arrived at it experimentally, though, when I realized that the computer was simply not powerful enough and that things could be done more intelligently )))), plus multithreading and all that... all to squeeze out the maximum.

well then

)))

=====

Seriously, though: the book describes how to work more efficiently without loss of quality, but that is unlikely to save you... I think it is time to switch to heuristic algorithms...

Or to something like a knowledge base, which is the finest possible partitioning. My ideas are heading in this direction, because the variants of events and features will run to tens of gigabytes, and a model with 20 million features cannot be trained...)) So to me a knowledge base looks like a way out of the "curse of dimensionality".

Forget about features. The way I see it, a feature is just a scalar quantity, or in other words a mathematical expression (even a logical one can be reduced to a mathematical expression, only at some cost in accuracy). All of these scalar or logical values are derived from price, because we have no other data, and trying to use more data may only make things worse: the data are heterogeneous and may come from different sources, in which case it is unclear which should take priority. Any numerical series can yield all other numerical series if you apply every possible transformation to it... try to understand this. So you don't need to hand the algorithm a feature space; you need to let it find the features itself...

You don't need a knowledge base, you need computing power. If there is a system and it works at least partially, the next step is to decentralize the calculations. Workers should be networked, and a database will help, but only as a repository for shared results. This is already starting to sound like mining.
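As a very reduced illustration of letting the algorithm pick its own features from price alone, the sketch below scores a few candidate transformations by how strongly they correlate with the next price change. Everything here is an assumption: the three entries in `TRANSFORMS`, the correlation-based ranking and the synthetic random walk are invented for the example. A real search, genetic or symbolic regression, would generate and combine such expressions rather than take them from a fixed list.

```python
import math
import random

def pearson(xs, ys):
    """Plain Pearson correlation; returns 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

# Hypothetical building blocks: each maps (prices, t) to a scalar
# using only prices up to and including bar t.
TRANSFORMS = {
    "momentum_1": lambda p, t: p[t] - p[t - 1],
    "momentum_5": lambda p, t: p[t] - p[t - 5],
    "dist_from_mean_5": lambda p, t: p[t] - sum(p[t - 4:t + 1]) / 5,
}

def rank_features(prices, warmup=5):
    """Rank candidate transforms by |correlation| with the next-bar price change."""
    ts = range(warmup, len(prices) - 1)
    target = [prices[t + 1] - prices[t] for t in ts]
    scores = {name: abs(pearson([f(prices, t) for t in ts], target))
              for name, f in TRANSFORMS.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

random.seed(0)
prices = [100.0]
for _ in range(500):
    prices.append(prices[-1] + random.gauss(0, 1))   # synthetic random walk

ranking = rank_features(prices)
print(ranking)
```

On a pure random walk none of the candidates should score well, which is a useful sanity check before running any such search on real quotes.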

I don't understand your vision very well, so I won't argue...

I see my algorithm as a sequence of events. An event is a logical rule, and the sequence is not tied to time: it either occurred or it did not

(like a trader setting a level: the price may reach it in 5 minutes or in a day, but it is the same situation).

So an ensemble of such working sequences will be the TS (trading system).
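One possible reading of a "sequence of events not tied to time" is an ordered list of logical rules that must fire one after another, with any number of bars in between. The sketch below is only that reading: the function `sequence_occurs`, the three example rules and the price lists are made up for illustration.

```python
def sequence_occurs(bars, rules):
    """True if the rules fire in order on `bars`, with any number of bars in between."""
    idx = 0
    for bar in bars:
        if idx < len(rules) and rules[idx](bar):
            idx += 1                     # this event happened, wait for the next one
    return idx == len(rules)

# Hypothetical events on closing prices: break above 105, fall below 100, break above 105 again.
rules = [
    lambda close: close > 105,
    lambda close: close < 100,
    lambda close: close > 105,
]

fast = [100, 106, 99, 107]                           # the pattern completes in 3 bars
slow = [100, 101, 106, 104, 103, 99, 98, 102, 107]   # same pattern, stretched in time
incomplete = [99, 106, 107]                          # the drop below 100 never happens

print(sequence_occurs(fast, rules), sequence_occurs(slow, rules),
      sequence_occurs(incomplete, rules))
```

The fast and the slow series count as the same situation, which matches the example of the trader's level being reached in 5 minutes or in a day.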

But to find such "non-dimensional" sequences you have to go through trillions of variants; the solution I see is to build a knowledge base on the hard drive...

As for all the "typical" approaches of training some algorithm in a moving window, I don't think they are workable: since the market is not stationary, the output will be a moving average with a memory of the past, which will never be repeated in the future because of non-stationarity...