Agents reconnect to other servers automatically.

You would think so, but all machines assigned agent7.mql5.net refuse to connect to the cloud service.

I have 7 machines total, and 2 of them won't connect. The 2 that stay disconnected are the only ones that keep connecting to agent7. The others connect to agent6 just fine and are receiving work from the cloud.

Even if reconnect is automatic, it keeps choosing agent7.

Maybe 'connected to agent7' was the wrong wording. Maybe it does connect, but the agents keep showing up as disconnected.

I've double-checked everything at this point. Ports are forwarded, firewall rules are set up (although not needed), and I've ticked the box for selling and entered my username.

I have exactly the same problem: agent7.mql5.net is auto-selected and the cloud status shows disconnected. It worked just fine before the July 19th CrowdStrike madness.

I'm almost 100% sure there is something wrong with the server at agent7.mql5.net.

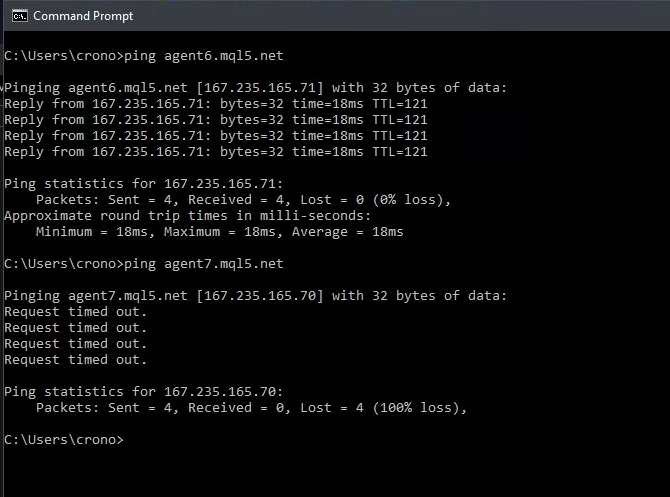

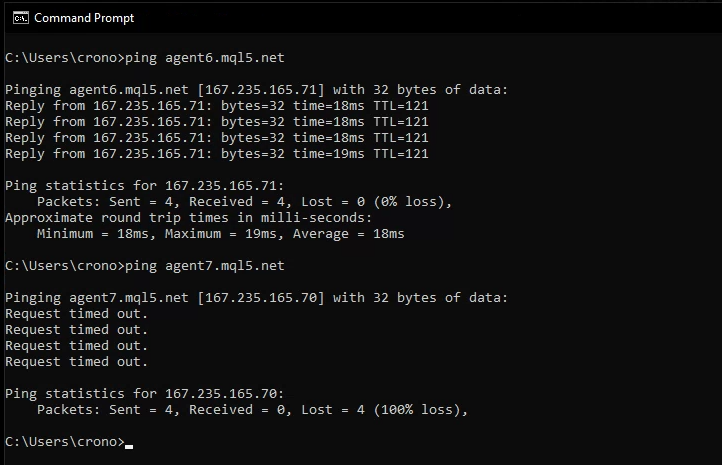

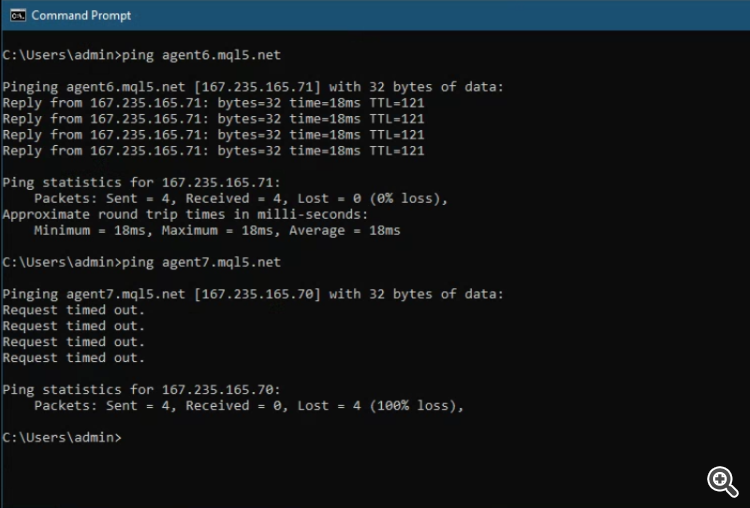

These screenshots are from 3 of my machines. The first always tries to connect to agent7 and "fails successfully", no matter what, and the other 2 connect to agent6 and work fine.

The machines in the first and second screenshots are on the same local network and ISP, but only #2 is working.

The third screenshot is from another location with a different ISP, where agent7 doesn't respond either, but the agents there use agent6.

None of them can ping agent7, yet one of the machines always decides to use it because it supposedly has the lowest ping, even though it can't be pinged manually.

Machine 1:

Machine 2:

Machine 3:

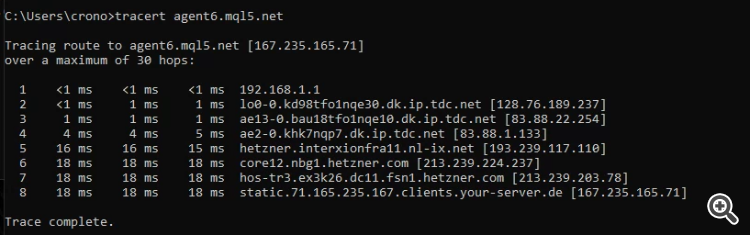

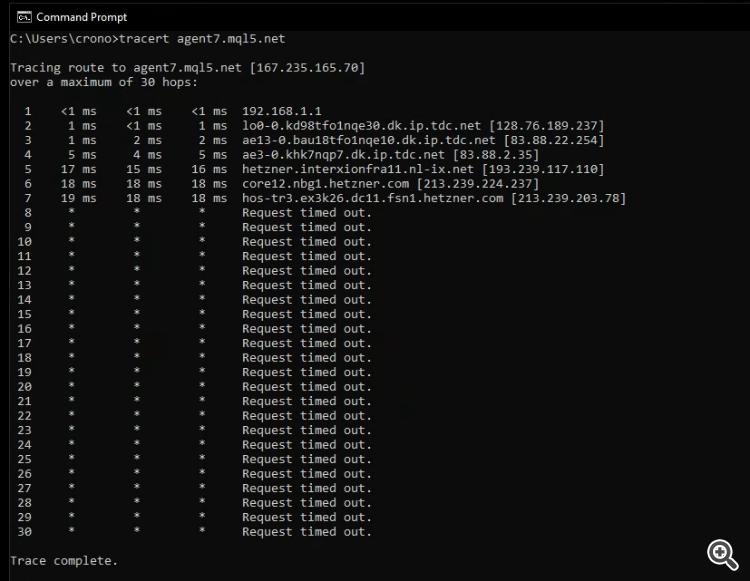

Traceroute, just because.

Agent 6:

Agent 7:

This is totally untrue and has been untrue for as long as I've been a contributor to the MQL5 Cloud Network (7 years). There is no fallback mechanism in MetaTester for when the "best server" fails to accept the incoming connection.

It picks the "best server" via a convoluted method in which each agent slowly and repeatedly connects to each server during initialization. This runs your servers out of ephemeral ports, resulting in connection stability issues and dropped connections to other agents regardless of how many additional servers you add, and it makes each agent take a ridiculous 3-5 minutes to initialize.

However, only once it is successfully authorized on the network does it slowly update its internal evaluation of server pings over time. Until that happens, it completely ignores the results of the ping test it just conducted and falls back on the cached ping numbers to decide which server is the "best server". This means that if the "best server" is unavailable, the agent will never choose a different server, because it never gets authorized on the network. Despite connecting to each server 2-5 times during initialization and knowing that it can't reach the "best server", it drops all evaluation connections and attempts to connect to the previously cached "best server" anyway, so it never connects.

That lasts until some fluke causes it to authorize on a different server. This does happen: it sometimes randomly ignores its cached ping chart and connects to a different server, which, if successful, is the only way it will break free from trying to connect to an unreachable "best server".

It appears you guys recently swapped agent1 (which was often unreachable) and agent7 (which was newly added), which is why many people are suddenly posting about this issue: everyone whose MetaTester was favoring agent7 will have it.
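To make the failure mode concrete, here is a minimal Python model of the behavior described above, as observed from the outside. It is not MetaTester's code: the function name, the cache layout and the `connect` callback are hypothetical, and the retry loop is bounded only so the sketch terminates (the post says the real agent retries forever).

```python
from typing import Callable, Dict, Optional


# Hypothetical model of the *observed* behavior; not MetaTester source code.
def observed_selection(
    cached_pings: Dict[str, float],
    connect: Callable[[str], bool],
    max_attempts: int = 5,
) -> Optional[str]:
    """Return the server the agent authorizes on, or None if it never does."""
    # Freshly measured pings are ignored: the choice comes from the cache only.
    best = min(cached_pings, key=cached_pings.get)
    for _ in range(max_attempts):  # the real agent reportedly retries indefinitely
        if connect(best):
            return best
        # No fallback: the same cached "best server" is retried after a delay.
    return None
```

With the cached figures from the thread (agent7 at 17 ms) and agent7 unreachable, the model never authorizes anywhere, even though six other servers would accept the connection.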

You ought to change the software to use ICMP pings (with a 1000 ms timeout) during the initialization phase instead of connecting to each server. This will increase the stability of your network. It should also skip the ping phase entirely if the cached ping figures are recent enough (say, a few hours old). If a direct connection to a server fails, the agent should simply attempt to connect to the next best server on the existing list.
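The fallback suggested in the last sentence is small. A minimal sketch in Python, assuming a hypothetical `connect` callback that returns whether the server accepted the connection: try servers in ascending order of cached ping and stop at the first one that works.

```python
from typing import Callable, Dict, Optional


def connect_with_fallback(
    cached_pings: Dict[str, float],
    connect: Callable[[str], bool],  # hypothetical: True if the server accepts us
) -> Optional[str]:
    """Try servers from lowest to highest cached ping; return the first that accepts."""
    for server in sorted(cached_pings, key=cached_pings.get):
        if connect(server):
            return server  # first reachable server wins
    return None  # every server refused; the caller can back off and retry later
```

With the thread's cached figures, agent1 and agent6 tie at 18 ms. Because Python's sort is stable, this sketch keeps whichever comes first in the cache (agent1), whereas the poster's walkthrough picks agent6, so a real implementation would need an explicit tie-break rule.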

I am a software developer and know what I'm talking about, but perhaps the above is a bit complicated to follow. Here is a flow chart of MetaTester's currently observed behavior:

01. Agent is started.
02. Reads cached DNS and server list with pings: agent1=18 ms, agent2=87 ms, agent3=29 ms, agent4=87 ms, agent5=173 ms, agent6=18 ms, agent7=17 ms.
03. Delay.
04. Connect to agent1. Delay.
05. Ping was 19 ms. Disconnect. Delay.
06. Connect to agent2. Delay.
07. Ping was 83 ms. Disconnect. Delay.
08. Connect to agent3. Delay.
09. Ping was 35 ms. Disconnect. Delay.
10. Connect to agent4. Delay.
11. Ping was 89 ms. Disconnect. Delay.
12. Connect to agent5. Delay.
13. Ping was 172 ms. Disconnect. Delay.
14. Connect to agent6. Delay.
15. Ping was 16 ms. Disconnect. Delay.
16. Connect to agent7. Delay.
17. It was unreachable. Delay.
18. We wasted some 50 seconds doing this. Now go back to step 04 two to four times and waste even more time all over again.
19. Ignore the pings we just calculated. Our cache says agent7 had the best ping of 17 ms, so select agent7 as the best server.
20. Connect to agent7.
21. It was unreachable. Delay for 15+ minutes.
22. Go back to step 20.

Assuming agent7 had been reachable, it would have looked like:

20. Connect to agent7.
21. Authorize on agent7.
22. Take the pings we just measured and nudge our cache in that direction (using a slow averaging method). Future agents will read and use these nudged numbers from the cache to determine which server to select.
23. Receive jobs from the cloud network. Agent is fully authorized on the network 269 seconds after startup.

We consumed several ephemeral ports on each server that will be tied up for the next 120 seconds, and each of our agents is going to do this to each server all over again, for no good reason besides bad coding.
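Step 22 says the measured pings only "nudge" the cache with a slow averaging method, but the post doesn't say which one. An exponential moving average is one plausible reading; the sketch below assumes it, with a made-up smoothing factor `alpha`, and also encodes the observed rule that nothing is written to the cache until authorization succeeds.

```python
from typing import Dict


def nudge_cache(
    cached: Dict[str, float],
    measured: Dict[str, float],
    authorized: bool,
    alpha: float = 0.1,  # assumed smoothing factor; the real value (if any) is unknown
) -> Dict[str, float]:
    """Move each cached ping a fraction `alpha` toward the newly measured ping."""
    if not authorized:
        # Per the observed behavior, the cache is only updated after authorization.
        return dict(cached)
    updated = dict(cached)
    for server, ping in measured.items():
        old = cached.get(server, ping)  # unknown servers start at their measured ping
        updated[server] = old + alpha * (ping - old)
    return updated
```

Under this assumption, a single successful authorization would move agent7 from 17 ms to about 115 ms and agent6 from 18 ms to 17.8 ms, enough to change the pick. An agent stuck on an unreachable agent7 never gets that far, so the cache stays frozen.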

Here's a flow chart of what you should change it to do if you want to fix this bug and improve network stability (reduce connections dropped due to running out of ephemeral ports):

01. Agent is started.
02. Reads cached DNS and server list with pings: agent1=18 ms, agent2=87 ms, agent3=29 ms, agent4=87 ms, agent5=173 ms, agent6=18 ms, agent7=17 ms, last updated 8/1/2024 5:47am.
03. Is it more than 3 hours old? Yes, time is now 2:40pm.
    - a. Set a flag in the cache telling the next agent to wait for us to finish our evaluation before proceeding.
    - b. Send an ICMP ping to agent1 with a 1000 ms timeout. Returned in 19 ms.
    - c. Send an ICMP ping to agent2 with a 1000 ms timeout. Returned in 83 ms.
    - d. Send an ICMP ping to agent3 with a 1000 ms timeout. Returned in 35 ms.
    - e. Send an ICMP ping to agent4 with a 1000 ms timeout. Returned in 89 ms.
    - f. Send an ICMP ping to agent5 with a 1000 ms timeout. Returned in 172 ms.
    - g. Send an ICMP ping to agent6 with a 1000 ms timeout. Returned in 16 ms.
    - h. Send an ICMP ping to agent7 with a 1000 ms timeout. Timed out (counted as 1000 ms).
    - i. Total time taken: 1.5 seconds. Go back to 'b' nine more times, adding each pass's ping values to our ping counters for each server.
    - j. Total time taken: 14.5 seconds. Divide the counters by 10 to get the average ping time for each server.
    - k. Update the cache with the new server ping figures and the current time.
04. Select agent6 (16 ms) as the best server.
05. Connect to agent6.
06. Authorize on agent6.
07. Receive jobs from the cloud network. Agent is fully authorized on the network in under 30 seconds from startup.
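Steps b through j amount to averaging ten passes of pings, with a timeout counted as the full 1000 ms. Sending ICMP echo requests usually needs raw-socket privileges, so in this Python sketch the probe is a hypothetical injected function that returns a round-trip time in milliseconds, or `None` on timeout.

```python
from typing import Callable, Dict, Iterable, Optional

TIMEOUT_MS = 1000.0  # per-probe timeout proposed in the post


def average_pings(
    servers: Iterable[str],
    probe: Callable[[str, float], Optional[float]],  # hypothetical ICMP probe
    passes: int = 10,
) -> Dict[str, float]:
    """Average `passes` probes per server; a timed-out probe counts as TIMEOUT_MS."""
    server_list = list(servers)
    totals = {s: 0.0 for s in server_list}
    for _ in range(passes):
        for s in server_list:
            rtt = probe(s, TIMEOUT_MS)
            # Treat a timeout, or a reply slower than the timeout, as TIMEOUT_MS.
            totals[s] += TIMEOUT_MS if rtt is None else min(rtt, TIMEOUT_MS)
    return {s: total / passes for s, total in totals.items()}
```

Feeding it the per-pass figures from the flow chart gives agent7 an average of 1000 ms and agent6 16 ms, so `min(avg, key=avg.get)` selects agent6, as in step 04.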

The next agent comes along (running the proposed logic above) and does the following:

01. Agent is started.
02. Reads cached DNS and server list with pings: agent1=18 ms, agent2=87 ms, agent3=29 ms, agent4=87 ms, agent5=173 ms, agent6=18 ms, agent7=17 ms, wait-for-update flag set.
03. Wait-for-update flag found; delay for 1 minute (if not found, skip to step 05).
04. Go back to step 02 up to four more times (if this expires and the wait flag is still set, do our own evaluation as in step 03 of the example above).
05. Select agent6 (16 ms) as the best server.
06. Connect to agent6.
07. Authorize on agent6.
08. Receive jobs from the cloud network. Agent is fully authorized on the network in under 70 seconds from startup.
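The wait-flag handshake in steps 02 to 04 can be sketched as a bounded polling loop. The one-minute delay and the five reads come from the flow chart; `read_cache`, `evaluate` and the injectable `sleep` are hypothetical names used only to keep the sketch testable.

```python
import time
from typing import Callable, Dict, Tuple


def wait_for_fresh_cache(
    read_cache: Callable[[], Tuple[Dict[str, float], bool]],  # hypothetical: (pings, wait_flag)
    evaluate: Callable[[], Dict[str, float]],  # hypothetical: run our own ping evaluation
    delay_s: float = 60.0,
    max_reads: int = 5,  # first read plus "up to four more times"
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, float]:
    """Wait for another agent's evaluation; fall back to our own if it never finishes."""
    for _ in range(max_reads):
        pings, wait_flag = read_cache()
        if not wait_flag:
            return pings  # no evaluation in progress, or it has just finished
        sleep(delay_s)  # another agent is evaluating; give it a minute
    return evaluate()  # flag still set after all reads: evaluate ourselves
```

If the flag clears after one wait, the agent has slept 60 seconds and uses the freshly written figures, which matches the "under 70 seconds" estimate.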

And assuming an agent with this logic was "first on the scene" when agent7 originally became unreachable, this is what would have happened:

01. Agent is started.
02. Reads cached DNS and server list with pings: agent1=18 ms, agent2=87 ms, agent3=29 ms, agent4=87 ms, agent5=173 ms, agent6=18 ms, agent7=17 ms, last updated 8/1/2024 5:47am.
03. Is it more than 3 hours old? No, time is now 7:29am.
04. Select agent7 (17 ms) as the best server.
05. Connect to agent7.
06. Connection failed. Select the next best server, agent6 (18 ms).
07. Connect to agent6.
08. Authorize on agent6.
09. Receive jobs from the cloud network. Agent is fully authorized on the network in under 15 seconds from startup.
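The "Is it more than 3 hours old?" check in these walkthroughs is plain date arithmetic. A minimal Python version, using the 3-hour threshold from the post (the function name is hypothetical):

```python
from datetime import datetime, timedelta

MAX_CACHE_AGE = timedelta(hours=3)  # threshold proposed in the post


def cache_is_stale(last_updated: datetime, now: datetime) -> bool:
    """True when the cached ping chart is older than MAX_CACHE_AGE."""
    return now - last_updated > MAX_CACHE_AGE
```

With the timestamps used in the walkthroughs (all on 8/1/2024): 5:47am to 2:40pm is stale, so the agent re-evaluates; 5:47am to 7:29am and 2:40pm to 3:17pm are fresh, so the cached figures are used directly.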

And going forward, the initialization process would look like:

01. Agent is started.
02. Reads cached DNS and server list with pings: agent1=19 ms, agent2=83 ms, agent3=35 ms, agent4=89 ms, agent5=172 ms, agent6=16 ms, agent7=1000 ms, last updated 8/1/2024 2:40pm.
03. Is it more than 3 hours old? No, time is now 3:17pm.
04. Select agent6 (16 ms) as the best server.
05. Connect to agent6.
06. Authorize on agent6.
07. Receive jobs from the cloud network. Agent is fully authorized on the network in under 10 seconds from startup.

Do you happen to know if there is a way to clear the cache? Reinstalling doesn't seem to work either.

I've tried everything else in the book to make it ignore agent7 (a HOSTS file entry redirecting agent7's IP to agent4, which has a worse ping; NetLimiter; firewall rules; etc.), but nothing seems to work.

Is it really just up to chance if it'll reconnect?

I also noticed that the disconnected machines won't update the application (MetaTester64.exe) either, so I've been manually copying the latest versions from working machines to the disconnected ones in the hope that they would connect, but they still won't.

Do you happen to know if there is a way to clear the cache? Reinstalling doesn't seem to work either.

Yes. Formatting and reinstalling Windows would also do it.

I've tried everything else in the book to make it ignore agent7 (a HOSTS file entry redirecting agent7's IP to agent4, which has a worse ping; NetLimiter; firewall rules; etc.), but nothing seems to work.

Of course. MetaTester bypasses the traditional DNS mechanisms implemented by Windows and the standard protocols implemented by your network router, and implements its own DNS lookup system. Consequently, nothing you do with a HOSTS file (on your PC or on your router) will have any effect on MetaTester. And since MetaTester has no fallback mechanism (no working one, anyway), limiting or blocking the IP of the offending agent7 server at the firewall level will have no effect: it's already unreachable, and look how the software is responding. The only thing that might work here is an iptables rule on your router that swaps the offending agent server's IP for a working agent server's IP on the outgoing TCP connection.

Yes. Every once in a while an agent will randomly ignore the ping chart and select a different server. It could happen today; it might not happen all year. Once this happens and the agent successfully authorizes on an alternate server, the cached ping chart gets updated, the rest of the agents switch over after being restarted, and that PC forgets about agent7.

Wow. That really sucks. Thank you for your insight.

You would think it would be as easy as just fixing that one server.

No need to rewrite all of MetaTester's networking; just reboot the server, dammit, haha.

I've been having issues with MetaTester since Friday, and I think I've finally figured out what is wrong: agent7.mql5.net cannot be connected to.

I have 4 machines on the same network, but only 2 of them have connected since 7/19/24.

All 4 had been running fine for months. I've tried everything suggested here (aside from reinstalling the OS, which will not happen).

Hardware (all connected at 1000/500 Mbit/s):

All working machines are using agent6.mql5.net, and the non-working ones are assigned agent7.mql5.net.

I have 3 additional machines on another network that are also assigned agent6.mql5.net and have been working non-stop (one of them for 3 years).

Here's the log from one of the disconnected machines. It never writes anything else but this to the log file: