Nice comparison of algorithms! I suspect that if you linearly damped the inertia weight term (w) throughout the search, it would enhance the swarm's local search ability and speed up convergence. Most of the literature suggests imposing a lower limit of w = 0.4. I have found the PSO scheme with this value to be very effective for a wide class of problems.

Thanks for the comment! Yes, it is possible to tie the coefficients to the iteration count, as is done in the Grey Wolf Optimizer (GWO). Please take a look at my articles listed below.

Unfortunately, not all of the articles have been published in English yet.

Population Optimization Algorithms: Ant Colony Optimization (ACO): https://www.mql5.com/ru/articles/11602

Population optimization algorithms: Artificial Bee Colony (ABC): https://www.mql5.com/ru/articles/11736

Population optimization algorithms: Optimization by a Pack of Gray Wolves (GreyWolf Optimizer - GWO): https://www.mql5.com/ru/articles/11785

Those articles about metaheuristic optimization techniques are awesome! You are doing a great job, Andrey. It's mind-blowing how much experience you have to share with us. Thank you!

@METAQUOTES, please consider implementing those metaheuristic optimization algorithms in the optimizer! It would be great for the software.

Something easy that the user could set inside OnTester(), such as:

OptimizerSetEngine("ACO"); // Ant Colony Optimization
OptimizerSetEngine("COA"); // Cuckoo Optimization Algorithm
OptimizerSetEngine("ABC"); // Artificial Bee Colony
OptimizerSetEngine("GWO"); // Grey Wolf Optimizer
OptimizerSetEngine("PSO"); // Particle Swarm Optimization

Cheers from Brazil

New article Population optimization algorithms: Particle swarm (PSO) has been published:

In this article, I will consider the popular Particle Swarm Optimization (PSO) algorithm. Previously, we discussed such important characteristics of optimization algorithms as convergence, convergence rate, stability and scalability, developed a test stand, and considered the simplest RNG algorithm.

Since I use the same structure for constructing algorithms as in the first article of the series, described in Fig. 2 (and I will continue to do so in the future), it will not be difficult to connect the algorithm to the test stand.

When running the stand, we will see animations similar to those shown below. In this case, we can clearly see how a swarm of particles behaves. The swarm really does behave like a swarm in nature: on the heat map of the function, it moves as a dense cloud.

As you might remember, the black circle denotes the global optimum (max) of the function, while the black dot denotes the averaged coordinates of the best solution found by the search algorithm as of the current iteration. Let me explain where the average values come from. The heat map is two-dimensional, whereas the function being optimized can include hundreds of variables (dimensions). Therefore, the result is averaged over the coordinates.
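One way such an averaging could work is sketched below in C++. This is an assumption for illustration, not the test stand's actual code: it treats coordinates at even indices as "x" values and those at odd indices as "y" values, and the name `ProjectToMap` is hypothetical.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Collapses an N-dimensional point onto a 2D heat map by averaging.
// Assumption: even-indexed coordinates play the role of x and
// odd-indexed coordinates play the role of y.
std::pair<double, double> ProjectToMap(const std::vector<double>& coords)
{
    double sumX = 0.0, sumY = 0.0;
    std::size_t countX = 0, countY = 0;

    for (std::size_t i = 0; i < coords.size(); ++i) {
        if (i % 2 == 0) { sumX += coords[i]; ++countX; }
        else            { sumY += coords[i]; ++countY; }
    }

    // Return 0 for an axis that received no coordinates to avoid dividing by zero
    double x = countX ? sumX / countX : 0.0;
    double y = countY ? sumY / countY : 0.0;
    return {x, y};
}
```

With only two variables the dot is simply the best point itself; with hundreds of variables it is a summary, so a dot sitting on the optimum does not by itself mean every coordinate is optimal.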

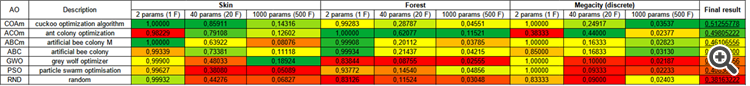

As you can see in the animation, the tests showed that PSO copes quite well with the smooth first function, but only when optimizing two variables. As the dimension of the search space increases, the efficiency of the algorithm drops sharply. This is especially noticeable on the second function and the discrete third function, where the results are noticeably worse than those of the random algorithm described in the previous article. We will return to the results and discuss them in detail when forming a comparative table of results.

Author: Andrey Dik