Machine learning in trading: theory, models, practice and algo-trading - page 743

By shuffling all the data, we try to get the real potential out of this set rather than a lucky coincidence that arises from its ordering. When you shuffle the data, you really see what the data can do... So that's how it is.

If we are talking about evaluating predictors with models, then in my opinion the most advanced package is RandomUniformForest. It examines predictor importance in great detail and from different points of view. I recommend having a look. I described it in detail in one of my articles.

I have given up on model-based predictor selection: it is limited by the specifics of the model used.

Good luck

I keep writing about something completely different: I am NOT interested in how intensively a predictor is used when building a model. I believe the most "convenient" predictor for building a model is one that has little relationship to the target variable, since you can always find "convenient" values in such a predictor, and in the end predictor importance reflects how "convenient" the predictor was for the model while it was being built.

I write all the time about predictive ability, the impact ... of the predictor on the target variable. One idea was mentioned above (mutual information); I have stated my own idea on this many times. These are mathematical ideas. Economic ideas are much more effective, because they let you pick predictors that lead the target variable.

So again: I'm not interested in the IMPORTANCE of a variable for the model; I'm interested in the IMPACT of the predictor on the target variable.
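For readers who want to try the mutual information idea mentioned here, below is a minimal sketch in plain Python. It is not the method used by anyone in this thread. The function names, the equal-width binning and the bin count are all assumptions made for illustration.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """Mutual information (in bits) between two equally long discrete sequences."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("xs and ys must be non-empty and of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), written with raw counts
        mi += (count / n) * log2(count * n / (px[x] * py[y]))
    return mi


def to_bins(values, n_bins=4):
    """Equal-width binning of a continuous predictor (bin count is an assumption)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # avoid division by zero for a constant input
    return [min(int((v - lo) / width), n_bins - 1) for v in values]
```

A predictor would first go through `to_bins`, then be compared with the class labels via `mutual_information`. The score does not depend on any particular model, which is the distinction the post draws between impact and importance. Note that the estimate is sensitive to the number of bins and to sample size.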

PS.

I've checked the package you recommended: the result is almost the same.

The point is that the model overfits if you don't separate train and test by time. An exaggerated but illustrative example: we use raw increments as predictors, without any transformations. We use a sliding window of width 15, i.e. each row contains 15 increment predictors, and the window shifts one value to the right for the next row. The classes of neighboring rows in the dataset coincide more often than they differ: if row n has class 1, then row n+1 will very likely have class 1 too. The rows themselves barely differ: row n+1 differs from row n in only one value, and the other 14 values match. So if you put the first row of such a dataset in train, the second in test, the third in train, and so on, the model will work very well on test, because test contains many rows that practically coincide with rows the model saw in train. Only the model's real OOS (one that does not include test) will be bad.
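The overlap described above can be checked with a short sketch. It trains no model and only measures how much adjacent rows have in common. The synthetic Gaussian increments and the target definition (sign of the sum of the next 15 increments) are assumptions for illustration, not the poster's actual data or target.

```python
import random

WINDOW = 15  # sliding window width from the post


def make_rows(increments, window=WINDOW):
    """Row n holds increments[n : n + window]; each next row is shifted by one value."""
    return [increments[n:n + window] for n in range(len(increments) - window + 1)]


def shared_values(row_a, row_b):
    """Count the values that row_b (the next row) reuses from row_a."""
    return sum(1 for a, b in zip(row_a[1:], row_b[:-1]) if a == b)


def label_agreement(labels):
    """Fraction of adjacent rows that carry the same class."""
    same = sum(1 for a, b in zip(labels, labels[1:]) if a == b)
    return same / (len(labels) - 1)


rng = random.Random(0)
increments = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # synthetic, assumed data

# Keep only rows that still have a full future horizon after them.
usable = len(increments) - 2 * WINDOW + 1
rows = make_rows(increments)[:usable]

# Hypothetical target: class 1 if the next WINDOW increments sum to a positive value.
labels = [
    1 if sum(increments[n + WINDOW:n + 2 * WINDOW]) > 0 else 0
    for n in range(usable)
]

# Interleaved split from the post: even rows go to train, odd rows go to test.
train_rows, test_rows = rows[0::2], rows[1::2]

print("values shared by rows 0 and 1:", shared_values(rows[0], rows[1]))
print("adjacent rows with the same class:", round(label_agreement(labels), 3))
```

With an interleaved split, every test row sits between two train rows that share 14 of its 15 values and usually its class. A good test score here says little about real OOS.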

That's a very good point. It is exactly what explains the amazing, grail-like result I posted above with random sampling into the training, test and validation sets. And if you exclude close observations that end up in different sets, you get what I got: a disaster.
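One way to exclude close observations that fall into different sets is a chronological split with a gap. The sketch below is only an illustration under assumptions: the function names and the 0.7 train fraction are hypothetical, and the gap of `window - 1` rows covers only overlap in the predictors. If the target looks into the future, the gap has to be widened by that horizon as well.

```python
def purged_split(n_rows, train_fraction=0.7, window=15):
    """Chronological split that drops train rows whose window reaches into test."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    test_start = int(n_rows * train_fraction)
    # Row r uses source positions r .. r + window - 1, so the last window - 1
    # rows before test_start would share values with the first test row.
    train_end = max(test_start - (window - 1), 0)
    train_idx = list(range(train_end))
    test_idx = list(range(test_start, n_rows))
    return train_idx, test_idx


def source_positions(row_index, window=15):
    """Positions of the raw series that a given sliding-window row is built from."""
    return set(range(row_index, row_index + window))
```

Usage: `train_idx, test_idx = purged_split(len(rows))`, then index the dataset with these lists instead of sampling rows at random.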

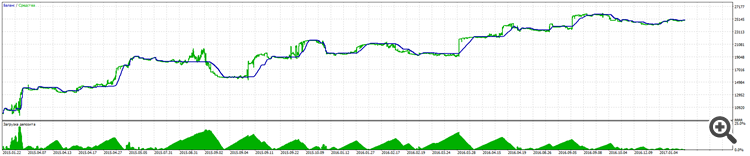

Remember I said I have a model that has been gaining since 01.31.2018? Here is how it has performed over the two weeks from 03.05.2018 to the present day. Tester result.

Pretty good for an old lady that was trained on 40 points and has been running on OOS for about 1.5 months.

And this is her full OOS from 01.31.2018.

And you still think it's a fit? Let me remind you that the screenshots show the OOS section.

Well, where is the normal backtest? You caught an uptrend over 3 months; you will suffer when the trend breaks.

Take something simple, like buying every Monday, and you'll be surprised that it works better than yours while the market is rising.

You check everything for overfitting and for errors in the sample itself. That is of course interesting for statistics and for understanding what the algorithm does, but the final goal is to take the money. So why not check predictability on the test? In my test it comes out at probably 50/50, but because the target variable is built on classes where the loss is smaller than the profit, the test shows quite smooth growth. And by the way, the test is a pure forward: the machine was trained on data from before the start of trading. I should add that the most important thing is the input predictors: their number and their real ability to describe the target.
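The arithmetic behind "50/50 but still growing" can be sketched as follows. The profit and loss sizes in the usage note are hypothetical and are not taken from the poster's results.

```python
def expectancy(win_rate, avg_profit, avg_loss):
    """Average result per trade: wins earn avg_profit, losses cost avg_loss."""
    return win_rate * avg_profit - (1.0 - win_rate) * avg_loss


def breakeven_win_rate(avg_profit, avg_loss):
    """Win rate at which the expectancy is exactly zero."""
    return avg_loss / (avg_profit + avg_loss)
```

For example, with an assumed profit of 2 units and a loss of 1 unit, `expectancy(0.5, 2.0, 1.0)` is positive and the break-even win rate is one third. A 50% hit rate can therefore produce a rising curve, though this says nothing on its own about whether that hit rate will hold out of sample.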

Here is a picture

Overfitting is still an important point, though. In boosting (gradient forests), for example, you can get a stunning model that turns out to be useless on forward, but you know that yourself.

Nobody says to use it forever; there is a period after which you can retrain and carry on)))

Predictor selection is important, but forests don't know how to model the relationships between predictors, so it's a dumb fit. Smart modeling that varies the form of the relationships between variables is unfortunately very time-consuming.

And these relationships can't be found mathematically, so you have to use dumb fitting or market research :)

Dumb fitting is also a cool thing, actually, if you use generalization.