Machine learning in trading: theory, models, practice and algo-trading - page 2820

Gradient Boosting

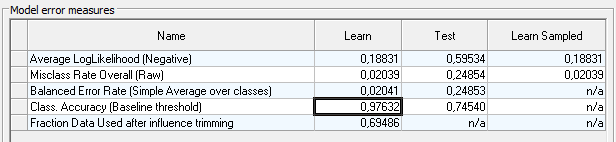

On the train set, accuracy rises to 0.85,

but it drops to 0.75 on the test set.

One option for raising the accuracy is to approximate the effect of each significant variable separately for each class (-1, 0, 1)

and use the resulting splines as new variables.

For example, for class 1, the impact of RSI was as follows

After approximating it, we get a new spline.

And so on, for each variable and each class.

As a result, we get a new set of splines, which we feed to the input instead of the original variables.
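A rough sketch of the idea, not what the program actually does internally (I don't know that). Here the "effect" of a variable on a class is assumed to be the share of that class in equal-width bins of the variable, and the "spline" is a simple piecewise-linear curve through the bin centers. The function names and the toy RSI values and labels are made up for illustration.

```python
from bisect import bisect_right

def binned_class_rate(x, y, target, n_bins=5):
    """Share of samples with label == target in each equal-width bin of x.
    Returns (bin_centers, rates) for the non-empty bins only."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant x
    counts = [0] * n_bins
    hits = [0] * n_bins
    for xi, yi in zip(x, y):
        b = min(int((xi - lo) / width), n_bins - 1)
        counts[b] += 1
        hits[b] += (yi == target)
    centers, rates = [], []
    for b in range(n_bins):
        if counts[b]:
            centers.append(lo + (b + 0.5) * width)
            rates.append(hits[b] / counts[b])
    return centers, rates

def linear_spline(knots_x, knots_y):
    """Piecewise-linear function through the knots, flat outside their range."""
    def f(v):
        if v <= knots_x[0]:
            return knots_y[0]
        if v >= knots_x[-1]:
            return knots_y[-1]
        j = bisect_right(knots_x, v)
        x0, x1 = knots_x[j - 1], knots_x[j]
        y0, y1 = knots_y[j - 1], knots_y[j]
        return y0 + (y1 - y0) * (v - x0) / (x1 - x0)
    return f

def spline_features(x, y, classes=(-1, 0, 1), n_bins=5):
    """One new feature column per class: the fitted curve evaluated at x."""
    feats = {}
    for c in classes:
        kx, ky = binned_class_rate(x, y, c, n_bins)
        f = linear_spline(kx, ky)
        feats[c] = [f(v) for v in x]
    return feats

# Toy data (invented): low RSI mostly class 1, high RSI mostly class -1.
rsi = [10, 15, 25, 35, 45, 55, 65, 75, 85, 90]
labels = [1, 1, 1, 0, 0, 0, 0, -1, -1, -1]
feats = spline_features(rsi, labels)
```

The three columns in `feats` would then replace the raw RSI column as model inputs. The curves should be fitted on the train set only; otherwise the test labels leak into the features.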

In these examples, all possible interactions between variables were set up automatically.

You can also disable them, or specify which variables are allowed to interact.

I also played with the tuning without the approximation step: I increased the number of nodes per tree to the number of variables.

The model became more complex and took 12 minutes to train.

On the train set, accuracy rose to 0.97,

but the test set spoils everything at 0.74.
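To put numbers on it, here is a tiny sketch that computes the train/test gap for the two runs. Only the four accuracy values come from the post; the run labels are mine.

```python
# Accuracy values quoted in the post; the run labels are illustrative.
runs = {
    "first run": {"train": 0.85, "test": 0.75},
    "more nodes per tree": {"train": 0.97, "test": 0.74},
}

def generalization_gap(run):
    """Train accuracy minus test accuracy, rounded to 2 decimals."""
    return round(run["train"] - run["test"], 2)

gaps = {name: generalization_gap(run) for name, run in runs.items()}
for name, gap in gaps.items():
    print(f"{name}: gap = {gap:.2f}")
```

The gap more than doubles (0.10 to 0.23) while test accuracy barely moves, which is the usual sign that the extra complexity went into memorizing the train set.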

In general, there is probably something here to work on and think about. Maybe something will come out of your data.

There are a lot of different settings in the program; I just don't fully understand how to work with them yet.

I have only been studying its functionality since yesterday ))

Your dataset just happened to come along at the right time for that, and maybe something can be squeezed out of your data too.

I don't quite understand what you mean by an automatic feature builder.

An automatic search for the features themselves, or an automatic search for relationships between existing features?

In these examples, all possible relationships between variables have been set automatically.

Although you can disable them, or set specific variables for the relationship.

No, that's not what I meant.

I meant that I trained xgboost on other features and got an accuracy of 0.83 on new data.

I constructed the features from OHLC and one more indicator, following this principle:

O[i] - H[i-1]

L[i-5] - indic[i-10]

...

and so on for all possible combinations (all against all).
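Something like the following could generate such "all against all" differences. It is a minimal sketch: the function name, the `max_lag` parameter, and the toy numbers are my assumptions, and the original post does not say which lags or which indicator were used.

```python
from itertools import combinations

def lagged_difference_features(series, max_lag=1):
    """series: dict name -> list of numbers, all of the same length.
    Builds every difference X[i-a] - Y[i-b] over unordered pairs of
    (series, lag) terms, for rows i >= max_lag."""
    n = len(next(iter(series.values())))
    terms = [(name, lag) for name in series for lag in range(max_lag + 1)]

    def label(name, lag):
        return f"{name}[i]" if lag == 0 else f"{name}[i-{lag}]"

    feats = {}
    # The mirrored pair is just the negated feature, so unordered pairs suffice.
    for (a, la), (b, lb) in combinations(terms, 2):
        key = f"{label(a, la)} - {label(b, lb)}"
        feats[key] = [series[a][i - la] - series[b][i - lb]
                      for i in range(max_lag, n)]
    return feats

# Toy data (invented numbers).
toy = {"O": [1, 2, 3], "H": [2, 4, 6]}
toy_feats = lagged_difference_features(toy, max_lag=1)
```

With k series and lags 0..max_lag there are m = k * (max_lag + 1) terms and m * (m - 1) / 2 features, so the count grows quadratically. Five series (O, H, L, C, indicator) with lags up to 27 already give about 10,000 columns.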

I got about 10,000 features.

300 of them turned out to be useful.

The model gave 0.83 on the new data.

===========

I don't quite understand what you mean by an automatic feature builder?

I want to automate what is described above, so that the computer constructs the features itself. Then there would be not 10k features to choose from but, say, a billion...

Auto search for the features themselves, or auto search for dependencies between the available features?

automatic creation/construction of features ---> testing for suitability ---> selection of the best ones ---> possibly mutation of the best ones in search of even better ones ....
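The chain above could look roughly like this. It is a toy sketch under my own assumptions: a candidate is a lagged difference `A[i-a] - B[i-b]`, "suitability" is the absolute Pearson correlation with the target (a stand-in; any scoring function would do), and mutation changes one series or one lag at random. None of the names come from a library.

```python
import random
from statistics import mean

def feature_values(series, cand, start):
    """Values of the candidate feature A[i-la] - B[i-lb] for rows i >= start."""
    a, la, b, lb = cand
    n = len(series[a])
    return [series[a][i - la] - series[b][i - lb] for i in range(start, n)]

def abs_corr(xs, ys):
    """Absolute Pearson correlation; 0.0 if either input is constant."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return abs(sxy) / (sxx * syy) ** 0.5

def random_candidate(rng, names, max_lag):
    return (rng.choice(names), rng.randint(0, max_lag),
            rng.choice(names), rng.randint(0, max_lag))

def mutate(rng, cand, names, max_lag):
    """Change one randomly chosen component: a series name or a lag."""
    parts = list(cand)
    k = rng.randrange(4)
    parts[k] = rng.choice(names) if k in (0, 2) else rng.randint(0, max_lag)
    return tuple(parts)

def evolve_features(series, target, max_lag=3, pop=20, keep=5,
                    generations=10, seed=0):
    """Create -> score -> keep the best -> mutate the best, repeated."""
    rng = random.Random(seed)
    names = sorted(series)
    y = target[max_lag:]

    def score(cand):
        return abs_corr(feature_values(series, cand, max_lag), y)

    def best_of(cands):
        # Inner sorted() fixes the order of ties, so runs are reproducible.
        return sorted(sorted(set(cands)), key=score, reverse=True)[:keep]

    population = [random_candidate(rng, names, max_lag) for _ in range(pop)]
    for _ in range(generations):
        best = best_of(population)
        children = [mutate(rng, rng.choice(best), names, max_lag)
                    for _ in range(pop - len(best))]
        population = best + children
    return [(c, score(c)) for c in best_of(population)]

# Toy data (invented): the target is exactly C[i] - O[i-1].
toy_series = {"O": [1, 3, 2, 5, 4, 6, 8, 7, 9, 12],
              "C": [2, 2, 4, 4, 7, 5, 9, 9, 8, 13]}
toy_target = [0] + [toy_series["C"][i] - toy_series["O"][i - 1]
                    for i in range(1, 10)]
found = evolve_features(toy_series, toy_target, max_lag=2)
```

One caveat: with a billion candidates scored on the same data, some will look good purely by chance, so the selection step probably needs a separate validation sample.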

And it's all automatic.

It is based on GMDH (MGUA, the group method of data handling), if you've read about it... but only based on it....

There are a lot of different settings in the program; I just don't fully understand how to work with them yet.

I have only been studying its functionality since yesterday ))

Your dataset just happened to come along at the right time for that, and maybe something can be squeezed out of your data too.

What is this programme?

read geometric probability

He's a real stump, clinging to every word.

You have total cognitive dystrophy, how can you even argue about anything?