1. ONNX Runtime

The video discusses the Open Neural Network Exchange (ONNX) project which is an open format for representing traditional and deep learning models. It also describes ONNX Runtime, the high-performance engine for running the models.

ONNX Runtime provides comprehensive coverage and support of all operators defined in ONNX and works with both CPU and GPU on many platforms including Linux, Windows and Mac.

The video also provides a step-by-step guide to converting, loading, and running a model using ONNX Runtime in Azure ML and demonstrates its potential benefits, including improved performance and prediction efficiency for various models.

The video encourages developers to try ONNX and contribute to the growing ONNX community.

- 2018.12.04

- www.youtube.com

2. Converting Models to #ONNX Format

The video discusses the process of converting machine learning models to the ONNX format, focusing on popular libraries like PyTorch, TensorFlow, and Scikit-learn. The example given is for PyTorch, where a pre-trained model is converted to ONNX format using torch.onnx.export. The video covers the different parameters that can be passed in this function as well as the conversion of TensorFlow and transformer models to ONNX format. Once the model is in ONNX format, it can be leveraged for cross-platform deployments and performance improvements through the ONNX runtime package. Further videos on innovative models using ONNX are also teased.

- 00:00:00 This section covers converting models from popular machine learning libraries to the ONNX format, focusing on PyTorch, TensorFlow, and Scikit-learn. The first step is to get the converter library for the specific training framework in use. The example given is for PyTorch: load a pre-trained model, create a dummy input, and then call torch.onnx.export to convert the model to ONNX format. The input and output names assigned during the conversion are used later for inference with the ONNX Runtime package. The exported ONNX model is then ready to use. The section also covers the different parameters that can be passed to torch.onnx.export and how to convert TensorFlow and transformer models to ONNX format.

- 00:05:00 In this section, the speaker explains the process of converting a Scikit-learn model into the ONNX format. The example imports the Scikit-learn converter and defines an initial type that describes the shape of the input. The convert_sklearn method receives the model and the initial type, after which the model is named and written to a file. Once the model is in ONNX format, it can be used for cross-platform deployments and performance improvements through ONNX Runtime. The speaker hints at more upcoming videos about innovative models that use ONNX in a "March Model Madness" theme.

3. ONNX – open format for machine learning models

The video discusses the benefits of using ONNX for inference, including hardware optimization and interoperability with various formats. The speaker emphasizes the importance of appropriate hardware to leverage ONNX benefits, especially for deployment on edge devices. The section also covers the optimization of deep learning models for iOS devices using Core ML, which is built into the operating system, making it easy to deploy ONNX models on iOS devices without the need to ship ML libraries. The next tutorial will focus on image classification and segmentation, encouraging college students to consider a project in iOS app development.

- 00:00:00 The speaker discusses ONNX, an open format for machine learning models. ONNX offers interoperability and hardware access, allowing users to convert any model into ONNX or vice versa. It also supports a wide range of formats, including TensorFlow and PyTorch. By converting models to ONNX, users can optimize hardware and speed up runtime.

- 00:05:00 In this section, the speaker discusses the advantages of using ONNX for inference, with optimization that can accelerate the process. Different GPUs and CPUs can be used, making batch sizes irrelevant to the execution speed of ONNX models. The speaker emphasizes that the hardware used must be appropriate to leverage ONNX benefits. Deployment on edge devices can demonstrate maximum benefit, which the next tutorial will cover. Finally, the speaker explains a hypothetical scenario of deploying a trained model via an iOS app, where inference happens on the device itself for optimal performance. Apple devices that come with an Apple Neural Engine can optimize inference to be fast and reliable.

- 00:10:00 The speaker discusses the optimization of deep learning models for iOS devices using Core ML, which is a framework for deep learning inference. By saving a trained model from Keras or PyTorch as ONNX, it can be easily converted into a Core ML model and deployed on iOS devices. Core ML is built into the operating system, eliminating the need to compile, link, or ship binaries for ML libraries. In the next tutorial, the speaker will focus on image classification and segmentation and encourages college students to consider a project in iOS app development.

- 2023.01.04

- www.youtube.com

4. (Deep) Machine Learned Model Deployment with ONNX

PyParis 2018 - (Deep) Machine Learned Model Deployment with ONNX

The speaker in the PyParis 2018 video discusses the challenges of deploying machine learning models, including varying prediction times in common libraries such as scikit-learn and XGBoost. They introduce ML.NET, an open-source machine learning library developed by Microsoft and recently released with ONNX Runtime, which can offer better accuracy in certain cases but is slower than other libraries. The speaker also demonstrates ONNX Runtime and explains that it cuts prediction time in half for MobileNet and SqueezeNet models. ONNX is designed not only to push models into production but also to store metadata for tracking model performance, and a model can be run anywhere because the format is platform-independent.

- 00:00:00 In this section, the speaker discusses the challenges in deploying machine learning models, specifically when using them in different conditions than during training. He demonstrates how common libraries such as scikit-learn and XGBoost have varying prediction times and introduces ML.NET, an open-source machine learning library developed by Microsoft and recently released with ONNX runtime. The speaker measures the prediction time for ML.NET and shows that it is slower than other libraries but can offer better accuracy in certain cases.

- 00:05:00 In this section, the speaker presents performance measurements obtained in a notebook that combines C# and Python. The notebook compares the prediction times of different libraries such as scikit-learn, LightGBM, and ML.NET on tree-based models. The speaker also demonstrates how batch prediction reduces the average time spent per prediction. The discussion then turns to the pipeline of a machine learning model and how ONNX describes that pipeline using a minimal language. ONNX provides a common format for saving the model, based on Google's protobuf, and it is supported by Microsoft and Facebook. The speaker concludes by contrasting the two settings: training involves batch prediction, plenty of memory, and huge data, whereas predicting a single observation calls for a small memory footprint and good one-off prediction performance.

- 00:10:00 In this section, the speaker demonstrates the runtime for ONNX, which stands for Open Neural Network Exchange. Using an XML file to convert models, ONNX Runtime was able to cut prediction time in half for both MobileNet and SqueezeNet models. The Model Zoo is still unstable at this point but is expected to stabilize within a few months. In the meantime, standard machine learning models and transformations that are available in Python and Keras are covered, and because ONNX Runtime is available from Python, no other dependencies are needed to run the model.

- 00:15:00 In this section, the speaker explains that ONNX was designed not only to push models into production but also to store metadata that can be used to track model performance. The speaker also mentions that an ONNX model can be run anywhere because the format is platform-independent. The ONNX model is optimized and made smaller by using Google's protobuf for serialization, and it only contains coefficients. In response to questions, the speaker explains that ONNX is being adopted by Microsoft and is being used to improve prediction time, and the speaker believes that it will continue to be widely used.

- 2018.11.20

- www.youtube.com
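The batch-prediction effect described in the PyParis talk can be illustrated with a minimal sketch. It does not use the libraries from the talk: the linear model, its random weights, and the `predict` function are stand-ins invented here, and NumPy is assumed to be installed. The point is only that one call on many rows amortizes the per-call overhead that dominates one-row-at-a-time prediction.

```python
import time

import numpy as np

# Hypothetical stand-in model: a linear predictor with random weights.
rng = np.random.default_rng(0)
weights = rng.normal(size=20)
bias = 0.5
X = rng.normal(size=(5000, 20))


def predict(batch):
    """Return one prediction per row of `batch` (shape: rows x 20)."""
    return batch @ weights + bias


# One observation per call: the call overhead is paid 5000 times.
start = time.perf_counter()
one_by_one = np.array([predict(row.reshape(1, -1))[0] for row in X])
t_single = time.perf_counter() - start

# All observations in a single call: the overhead is paid once.
start = time.perf_counter()
batched = predict(X)
t_batch = time.perf_counter() - start

print(f"one-by-one: {t_single / len(X) * 1e6:.2f} us per prediction")
print(f"batched:    {t_batch / len(X) * 1e6:.2f} us per prediction")
```

Both paths return the same values; only the average time per prediction differs, and the exact ratio depends on the machine and the model.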

5. Recurrent Neural Networks | LSTM Price Movement Predictions For Trading Algorithms

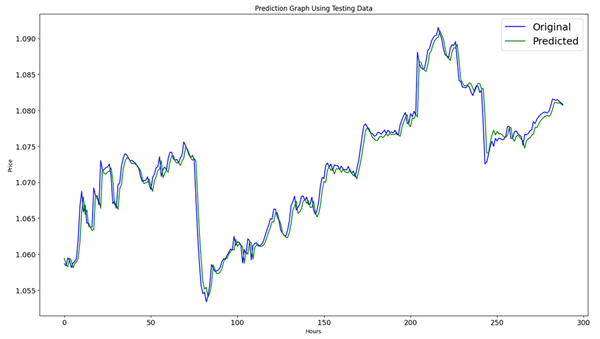

The video explains the use of recurrent neural networks and long short-term memory (LSTM) networks to predict price movement trends in trading algorithms. The video walks through the steps to preprocess the data set before feeding it into the LSTM model. Unnecessary columns are discarded, and min-max scaling is applied to scale feature data between zero and one. The input data is then put into 'x,' while the data to be predicted goes into 'y.' The video also covers the training and testing of the LSTM model, with 80% of the data used for training and 20% for testing. The model is compiled and fitted with the training data before predicting the testing data, with the results presented in the form of a graph. The video shows some promising predictions, but the speaker notes that there is still room for improvement in future videos.

- 00:00:00 In this section, the author explains how to use recurrent neural networks and long short-term memory (LSTM) networks for price movement predictions. The input parameters can be price values, technical indicators, and custom indicators included in the dataframe to predict the output, which is the price movement trend. The LSTM model takes in past data and tries to predict the closing price of the next day. Each sample fed to the model is two-dimensional (past days by features), so the full training dataset is three-dimensional. The YouTuber imports numpy, pandas, matplotlib, and pandas_ta (technical analysis), and downloads data using yfinance to create a dataframe. The technical indicators are added to the dataframe using pandas_ta. Finally, the next closing price is set as the target column, obtained by shifting the adjusted close column by minus one; the resulting empty rows are dropped and the dataframe index is reset.

- 00:05:00 In this section, the speaker explains the steps to preprocess the data set before feeding it into the LSTM model. First, they discard unnecessary columns and use the MinMaxScaler from the Scikit-learn package to scale the feature data between zero and one. The input data consists of eight columns, including three moving averages, the RSI, open, high, and low prices, and is processed into a two-dimensional Numpy array. They then choose the target 'next close' column to be predicted and set the number of back candles, which represents the number of past days to be considered. The input data is put into 'x,' while the predicted data, which is the closing price of the next day, goes into 'y.' The code is written in Python, and the LSTM model's correct dimensions must be considered to avoid errors. Finally, the data is split for training and testing purposes.

- 00:10:00 In this section, the speaker trains and tests the LSTM model with 80% of the data used for training and 20% for testing. The input shape is the number of back candles (the number of rows fed into the model) by the number of columns, which gives a two-dimensional input matrix for each sample. After creating an intermediate layer and a dense layer with specific numbers of nodes, the model is compiled and then fitted with the training data. The model is then used to predict the testing data, with the y-variable representing the following day's closing price. The results come in the form of a graph, which shows some promising predictions, although the speaker notes that the model fails in some areas and that improvements should be made in future videos.
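The preprocessing steps summarized above (next-close target, min-max scaling, windows of back candles, 80/20 split) can be sketched with NumPy alone. This is not the code from the video: the synthetic random-walk prices, the three feature columns, and the helper names `min_max_scale` and `make_windows` are assumptions made for illustration, and the window here is aligned so that each label is the close of the day right after the window.

```python
import numpy as np


def min_max_scale(data):
    """Scale every column to [0, 1], the same idea as scikit-learn's MinMaxScaler."""
    col_min = data.min(axis=0)
    col_max = data.max(axis=0)
    span = np.where(col_max > col_min, col_max - col_min, 1.0)  # avoid division by zero
    return (data - col_min) / span


def make_windows(features, target, backcandles):
    """Return X with shape (samples, backcandles, n_features) and y with shape (samples,)."""
    X, y = [], []
    for end in range(backcandles, len(features) + 1):
        X.append(features[end - backcandles:end])  # the past `backcandles` rows
        y.append(target[end - 1])                  # next close after the last row
    return np.array(X), np.array(y)


# Synthetic prices standing in for the downloaded data (assumption).
rng = np.random.default_rng(0)
close = 100.0 + np.cumsum(rng.normal(0.0, 1.0, size=100))
high = close + 1.0
low = close - 1.0

# Target = close shifted by -1; the last row has no target and is dropped.
next_close = close[1:]
table = np.column_stack([high[:-1], low[:-1], close[:-1], next_close])

scaled = min_max_scale(table)
features, target = scaled[:, :-1], scaled[:, -1]

backcandles = 10
X, y = make_windows(features, target, backcandles)

# Chronological 80/20 split, without shuffling.
split = int(len(X) * 0.8)
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]
print(X_train.shape, X_test.shape)
```

The three-dimensional `X_train` is what an LSTM layer with an input shape of `(backcandles, n_features)` would consume; the split is kept chronological so that the test windows come after the training windows in time.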

CodeBase

- Information about the ONNX model's inputs and outputs - script for MetaTrader 5

- Get info about inputs and outputs of onnx-model - script for MetaTrader 5

- www.mql5.com

How to use ONNX models in MQL5 - Metatrader 5

In this article, we will consider how to create such a model to forecast financial timeseries and how to use the created ONNX model in an MQL5 Expert Advisor.

- www.mql5.com

Everything You Want to Know About ONNX

The ONNX format aims to address the challenges presented by the fragmented set of tools and frameworks in the deep learning environment by providing a portable file format that allows models to be exported from one framework to another. It is highly transparent and well-documented, standardizing syntaxes, semantics, and conventions used in the deep learning domain, and supporting both deep learning and traditional machine learning models. ONNX enables interoperability, efficiency, and optimization across diverse hardware environments without manual optimization or conversion. The video showcases a basic demo of converting a trained model from scikit-learn into ONNX format using the ONNX-specific converter and demonstrates how to perform inference in a use case where training is done in PyTorch and the inference is performed in TensorFlow.

The webinar concludes with a demonstration of how to convert an ONNX model into a TensorFlow format using the "prepare" function from the "ONNX TF backend" and saving the output as a ".pb" file. The speaker notes that the TensorFlow model can then be used for inference without making any reference to ONNX. The audience is thanked, and the speaker announces upcoming articles on ONNX on The New Stack, along with another upcoming webinar on Microsoft's distributed application runtime. Feedback from attendees was positive.

- 00:00:00 If you're struggling with the complexities of machine learning and deep learning due to the fragmentation and distributed set of tools and frameworks, ONNX might just be the solution you need. In a section of a webinar, the speaker explains the current challenges faced in the deep learning environment and how ONNX can address them. Assuming a general familiarity with machine learning and deep learning, the discussion provides a detailed overview of the promises offered by both ONNX and the ONNX runtime. By the end of this section, the audience is introduced to the idea of the convergence of machine intelligence and modern infrastructure, which is a recurring theme throughout the webinar series.

- 00:05:00 In this section, the speaker discusses the challenges of deep learning, especially in terms of training and production. When it comes to training, using a fragmented set of frameworks and toolkits is not easy, and infrastructure is essential when working with GPUs to accelerate training. Moreover, it's not only about hardware, but also about software, such as CUDA and cuDNN for NVIDIA GPUs and oneDNN for Intel's FPGAs. On the production side, the trained model needs to be optimized for the target environment to deliver high accuracy and low latency, which requires converting data types and using tools like TensorRT. Thus, deep learning requires understanding the hardware and software layers and optimizing the model to deliver the desired performance.

- 00:10:00 In this section, the speaker discusses the various tools, frameworks, and hardware environments that are required to optimize and deploy AI models effectively. The speaker highlights the need for optimization and quantization of the model, especially for CPUs, and mentions popular toolkits such as Nvidia TensorRT and Intel's OpenVINO toolkit. The speaker further explains that different hardware environments require different toolkits, such as Nvidia's Jetson family for industrial automation and IoT use cases, Intel's vision processing unit for vision computing, and Google's Edge TPU for the edge. Optimizing and deploying AI models is a complex and continuous process that involves detecting drift, retraining, optimizing, deploying, and scaling the model. The speaker stresses the importance of having a pipeline that handles this entire closed loop effectively.

- 00:15:00 In this section, the speaker introduces ONNX as the Open Neural Network Exchange that acts as an intermediary between different frameworks and target environments promoting efficiency, optimization, and interoperability. ONNX supports deep learning and traditional machine learning models and enables the exporting and importing of models from one framework to another. In addition, ONNX supports diverse hardware environments without manual optimization or conversion. The speaker notes that since its inception in 2017 with founding members AWS, Microsoft, and Facebook, ONNX has grown significantly with contributions from Nvidia, Intel, IBM, Qualcomm, Huawei, Baidu, and more, actively promoting this ecosystem within public cloud platforms, toolkits, and runtimes. ONNX has gone through a significant shift in response to advancements in other frameworks and toolkits, making it compact, cross-platform, and keeping up with the latest developments.

- 00:20:00 In this section, the speaker discusses ONNX, a portable file format that allows for the exporting of models trained in one framework or toolkit into another. ONNX is highly inspired by portable runtimes such as JVM and CLR and provides a level of abstraction and decoupling from higher-level toolkits. It is a highly transparent and well-documented format that standardizes syntaxes, semantics, and conventions used in the deep learning domain. ONNX ML is also an extension that supports traditional machine learning. When a model is exported as ONNX, it becomes a graph of multiple computation nodes that represent the entire model.

- 00:25:00 In this section, the speaker explains the structure of computation nodes within a graph in ONNX. Each node is self-contained and contains an operator that performs the actual computation, along with input data and parameters. The input data can be a tensor composed of various data types, which is processed by the operator to emit output that is sent to the next computation node. The speaker also notes that operators are essential in ONNX as they map the ONNX operator type with the higher-level framework or toolkit, making it easy to interpret the model and map the high-level graph structure to the actual operator available within ONNX.

- 00:30:00 In this section, the video discusses custom operators and how they can be defined within ONNX. Custom operators are useful for advanced users who are creating their own activation functions or techniques that aren't included in the available ONNX operators. Custom operators can then be used in exporting a model from a framework level into ONNX. The video then showcases a basic demo of using ONNX to convert a trained model from scikit-learn into ONNX format using the ONNX-specific converter.

- 00:35:00 In this section, the speaker highlights the transparency and interpretability of the ONNX format, which allows for easy parsing and exploration of the model's structure. The ONNX model can even be exported to protobuf, which makes it possible to iterate programmatically over the nodes in the model. The video demonstrates inference using an existing model, and the code shows how to send input data for inference, which returns a numpy array with the classification for each element in the input data. One of the benefits of ONNX is that the model is decoupled from scikit-learn, so you don't need to worry about where the model comes from, making it easy to consume. The ONNX format is similar to DLL or jar files in that you don't need to know which programming language the model was written in before consuming it, which makes it flexible and versatile.

- 00:40:00 In this section, the speaker talks about the ONNX file format and ONNX Runtime. ONNX is a portable and independent format that defines a model's graph and operators in a well-defined specification. ONNX Runtime, on the other hand, is a project driven and advocated by Microsoft. It is a different project from ONNX and takes ONNX's promise to the next level by creating the actual runtime. Microsoft is betting big on ONNX Runtime as it is becoming the de facto layer for WinML in Windows 10. The speaker explains the relationship between ONNX and ONNX Runtime through a visual representation of their layers.

- 00:45:00 In this section, the speaker discusses the promise of ONNX Runtime, which abstracts away the underlying hardware, making it easy to use with different hardware and optimizers. ONNX Runtime uses execution providers through a plug-in model; hardware vendors can build providers that interface with their existing drivers and libraries, which makes the runtime extremely portable. Microsoft has published numerous benchmarks showing that ONNX Runtime is highly performant, and many product teams within Microsoft are now adopting it as their preferred deep learning runtime, from Bing to Office 365 to Cognitive Services. The speaker then describes the four ways to get ONNX models that can run on top of ONNX Runtime, starting with the Model Zoo and ending with using Azure ML to train and deploy the model in the cloud or at the edge.

- 00:50:00 In this section, the presenter discusses how to download a pre-trained ONNX model from the model zoo and use it for inference. The demo involves a pre-trained MNIST ONNX model downloaded from the model zoo and test images of handwritten digits used for inference. The presenter walks through the code and explains the pre-processing steps needed before sending the input data to the ONNX model. The code uses the ONNX Runtime, NumPy, and OpenCV libraries, and the final output is the predicted digit values. The presenter demonstrates that the model is accurate and can correctly identify handwritten digits.

- 00:55:00 In this section, the speaker demonstrates how to perform inference in a use case where training is done in PyTorch and the inference is performed in TensorFlow. The speaker shows how to convert a PyTorch model into an ONNX model and then further into a protobuf file that can be used directly in TensorFlow. The code is straightforward, and the conversion is done through a built-in export tool available in PyTorch.

- 01:00:00 In this section, the speaker explains how to convert an ONNX model into a TensorFlow format. This is done by simply importing ONNX and using the "prepare" function from the "ONNX TF backend" to load the model, then writing the output into a ".pb" file. The speaker demonstrates that the TensorFlow model can then be used for inference, without making any reference to ONNX. The session ends with a note on upcoming articles on ONNX by the speaker on The New Stack, where tutorials and all the source code will be available.

- 01:05:00 This section does not contain any information about ONNX, but rather serves as a conclusion to a webinar on the topic. The speaker thanks the audience for their attendance and support, and announces that the recorded video of the webinar will be uploaded soon. The audience is asked to rate the relevance and quality of the webinar, with most respondents giving positive feedback. The speaker concludes by announcing another upcoming webinar on Microsoft's distributed application runtime, which will take place next month.

- 2020.07.12

- www.youtube.com
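The webinar's description of an ONNX model as a graph of computation nodes, each holding an operator with named inputs and outputs, can be made concrete with a toy interpreter. This sketch does not use the `onnx` package or its file format: the `Node` class, the `run_graph` function, and the three-entry operator table are invented for illustration, although `MatMul`, `Add`, and `Relu` are names of real ONNX operators. NumPy is assumed to be installed.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Node:
    """One computation node: an operator type plus named inputs and outputs."""
    op_type: str
    inputs: list
    outputs: list


# Maps an operator type to the function that performs the computation.
OPERATORS = {
    "MatMul": lambda a, b: a @ b,
    "Add": lambda a, b: a + b,
    "Relu": lambda a: np.maximum(a, 0.0),
}


def run_graph(nodes, initializers, feeds, output_names):
    """Evaluate nodes in order; assumes they are already topologically sorted."""
    values = {**initializers, **feeds}
    for node in nodes:
        args = [values[name] for name in node.inputs]
        values[node.outputs[0]] = OPERATORS[node.op_type](*args)
    return [values[name] for name in output_names]


# y = Relu(x @ W + b), expressed as three nodes connected by tensor names.
nodes = [
    Node("MatMul", ["x", "W"], ["xw"]),
    Node("Add", ["xw", "b"], ["z"]),
    Node("Relu", ["z"], ["y"]),
]
initializers = {
    "W": np.array([[1.0, -1.0], [2.0, 0.5]]),
    "b": np.array([0.0, -4.0]),
}

x = np.array([[1.0, 2.0]])
(y,) = run_graph(nodes, initializers, {"x": x}, ["y"])

# Because the model is plain data, it can be inspected node by node.
for node in nodes:
    print(node.op_type, node.inputs, "->", node.outputs)
print(y)
```

The loop over `nodes` mirrors the idea of iterating programmatically over a model's structure, and the name-based wiring is why input and output names matter at inference time.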

ONNX and ONNX Runtime

The ONNX ecosystem is an interoperable standard format that converts models from any framework into a uniform model representation format optimized for each deployment target. The ONNX Runtime implementation of the ONNX standard is cross-platform and modular, with hardware accelerator support. The different levels of optimization that the transformers work on include graph transformation, graph partitioning, and assignment. The speakers also discuss various advantages of using ONNX such as C API, API stability to take advantage of new performance benefits without worrying about breaking binary compatibility, and the ONNX Go Live tool which can be used to determine the optimal configuration for running an ONNX model on different hardware. They also compare ONNX with frameworks and touch upon cross-platform compatibility.

- 00:00:00 In this section, Ton of Sharma, from the ONNX Runtime team, gives an introduction and survey of the ONNX ecosystem, explaining its motivation and widespread adoption in Microsoft, along with the technical design for both ONNX and ONNX Runtime. He highlights the problem that different teams use different frameworks to train their models, yet all of them need to deploy those models in production and use the hardware to its fullest. ONNX sits in the middle: it converts models from any framework into a uniform model representation format that can be optimized for each deployment target, which makes it an interoperable standard format. The open-source project was started in December 2017, with Facebook, Microsoft, and Amazon among the first to join the consortium, which now has more than 40-50 companies actively participating and investing in ONNX.

- 00:05:00 In this section, the speaker discusses the different ways to obtain an ONNX model once a model has been trained in the desired framework, such as using the ONNX Model Zoo, the Azure Custom Vision AutoML tool, or open source conversion tools that convert a model from a specific format to ONNX. ONNX Runtime, which is the implementation of the ONNX standard, is introduced as a way to run the ONNX model as fast as possible on the desired device or deployment target. It is extensible, modular, and cross-platform, with built-in hardware accelerator support from various vendors, such as TensorRT and Intel MKL-DNN. The latest version, ONNX Runtime 1.0, has full support for the ONNX specification and is the core library running in the Windows Machine Learning framework.

- 00:10:00 In this section, the speaker talks about deploying ONNX models after compiling them with a JIT approach that uses LLVM and TVM as the underlying frameworks. The team has published reference points and notebooks for deploying ONNX to various types of targets, including Azure Machine Learning service, edge clouds, and edge IoT devices, which is part of their planning for the MNIST platform. Microsoft's WinML and ML.NET already support ONNX, and there are about 60+ ONNX models in production so far with an average 3x performance improvement. The design principles for ONNX were to be interoperable, compact, cross-platform, backwards compatible, and to support both deep learning and traditional machine learning models.

- 00:15:00 In this section, the speaker discusses the three parts of the ONNX specification, starting with the representation of the data flow graph itself. He explains that the representation can be extended and new operators and types can be added to it. The second part is the definition of standard types and attributes stored in the graph, while the third part is the schema for every operator in the ONNX model. The model file format is based on protobuf, which is easily inspectable, and includes the version, metadata, and acyclic computation graph, which consists of inputs, outputs, computation nodes, and graph name. Tensor types, complex, and non-tensor types such as sequences and maps are supported. The ONNX operator set includes around 140 operators, and anyone can add more operators by submitting a pull request to the ONNX open-source GitHub repository.

- 00:20:00 In this section, the speaker discusses the capabilities of ONNX, including the ability to add custom operations that may not be supported by ONNX's existing operations. The speaker also talks about the importance of versioning and how ONNX incorporates versioning at three different levels, including the IR version, OP set version, and operator version. The ONNX runtime is also discussed, with its primary goals being performance, backwards and forwards compatibility, cross-platform compatibility, and hybrid execution of models. The runtime includes a pluggable architecture for adding external hardware accelerators.

- 00:25:00 In this section, the speaker explains the two phases of running a model inside ONNX Runtime: creating a session and loading the model, followed by calling the Run APIs. Upon loading the model, an in-memory graph representation of the protobuf is created, and then the graph goes through different levels of graph transformations, similar to compiler optimizations. ONNX Runtime provides different levels of graph transformation rules and fusions that can be customized, and the user can add their own rules through the API. Once the graph has been optimized, it is partitioned across the available hardware accelerators, and each node is assigned to a specific accelerator. The speaker also touches on the execution part and mentions the two modes of operation, the sequential mode and the parallel execution mode. Finally, the speaker talks about ONNX Runtime's future work on telling the user which providers to run on.

- 00:30:00 In this section, the speaker explains the details about optimization in the ONNX Runtime. The transformation of the graph, graph partitioning, and assignment are the different levels of optimization that the transformers work on to rewrite the graph. The session and run are the two-fold API of the ONNX Runtime that loads and optimizes the model to make it ready to run with a simple call to the run function. The speaker also talks about the execution provider, which is the hardware accelerator interface of the ONNX Runtime and how vendors can add a new hardware accelerator by implementing an execution provider API. Finally, the speaker mentions the multiple extension points of the ONNX Runtime, such as adding new execution providers or custom ops and extending the level of fusions.

- 00:35:00 In this section, the speaker discusses the advantages of using the C API in ONNX Runtime, including API stability that allows users to take advantage of new performance benefits without worrying about breaking binary compatibility. He also explains the ONNX Go Live tool, which can be used to determine the optimal configuration for running an ONNX model on different hardware. The speaker then touches on upcoming features, including support for NNAPI on Android devices, Qualcomm hardware, and training optimization, in addition to continued performance optimizations to make ONNX Runtime run as fast as possible.

- 00:40:00 In this section of the video, the speakers focus on the importance of optimizing the operations within a given operator (op) to ensure that it runs as fast as possible. They compare ONNX with frameworks, attempting to identify whether or not it performs better than its predecessors. The speakers also discuss how training works, noting that although they are working on it, training support is not yet complete. They also answer questions about what the ONNX format produces and what kinds of data it can handle, such as strings. There is some discussion of Microsoft's influence on the design of ONNX Runtime as a marquee product, which currently lacks an open governance model and is ultimately controlled by Microsoft, although the team welcomes external contributions. The speaker also touches upon cross-platform compatibility, noting that the runtime is designed for Linux, Windows, and Mac.

- 2019.12.06

- www.youtube.com
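The partitioning and assignment step described in the talk, where each node of the optimized graph ends up on a specific hardware accelerator, can be sketched in plain Python. This is a simplified illustration, not ONNX Runtime's actual algorithm or API: the provider names, the `PROVIDER_SUPPORT` capability table, and both functions are hypothetical. The sketch assumes a priority-ordered provider list with a CPU provider last as the fallback.

```python
# Hypothetical capability table: which operator types each provider can run.
PROVIDER_SUPPORT = {
    "AcceleratorProvider": {"Conv", "Relu", "MatMul"},
    "CPUProvider": {"Conv", "Relu", "MatMul", "Softmax", "Reshape"},
}


def assign_nodes(op_types, provider_priority):
    """Assign each node to the first provider in priority order that supports it."""
    assignment = []
    for op in op_types:
        for provider in provider_priority:
            if op in PROVIDER_SUPPORT[provider]:
                assignment.append((op, provider))
                break
        else:
            raise ValueError(f"no provider supports operator {op}")
    return assignment


def partition(assignment):
    """Group consecutive nodes that share a provider into one subgraph."""
    parts = []
    for op, provider in assignment:
        if parts and parts[-1][0] == provider:
            parts[-1][1].append(op)
        else:
            parts.append((provider, [op]))
    return parts


# A linear chain of operators standing in for an optimized graph.
model_ops = ["Conv", "Relu", "Reshape", "MatMul", "Softmax"]
assignment = assign_nodes(model_ops, ["AcceleratorProvider", "CPUProvider"])
parts = partition(assignment)

for provider, ops in parts:
    print(provider, ops)
```

Operators the accelerator cannot handle fall back to the CPU provider, which is the kind of hybrid execution the speaker lists among the runtime's goals.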

ONNX - ONNX Runtime, простой пример (ONNX - ONNX Runtime, a simple example)

ONNX - ONNX Runtime, простой пример

In this YouTube video, the speaker introduces ONNX, an open format for machine learning models that can optimize performance across multiple frameworks. The video provides examples of using ONNX in different scenarios, such as sentiment analysis of news articles and converting numerical vector classifiers to neural networks. The speaker emphasizes the importance of using established libraries for functions and measuring execution time accurately for code. Additionally, the speaker discusses the challenges of developing on different operating systems and recommends loading only necessary modules to improve runtime performance. Overall, this video provides valuable insights into the benefits and challenges of using ONNX for machine learning models.

- 00:00:00 In this section, the speaker describes ONNX, an open format for machine learning models that can be used across various frameworks to optimize model performance. ONNX is based on Protocol Buffers, a binary serialization format that plays a role similar to XML but is faster and better suited to small messages. ONNX models can be developed on one operating system and processor and then used anywhere, including mobile devices and graphics cards. ONNX Runtime is a library for cross-platform acceleration of model training and inference. ONNX also allows a model to be quantized to reduce its size without using hardware acceleration, and pruned to reduce its size by replacing some of its weight values with zeros. The speaker includes links to websites where more information about ONNX can be found.

- 00:05:00 In this section, the speaker discusses the steps required to work with a pre-trained model in ONNX format using ONNX Runtime. The model can be created in any framework, saved in ONNX format, and then used in a variety of ways, for example on a less powerful server or to handle heavy server loads. The speaker then gives an example that uses the Hugging Face transformers library to determine the sentiment of news articles about cryptocurrency. Lastly, the speaker demonstrates the model in use by loading the necessary libraries and showing the result of running the model on a sample news article.

- 00:10:00 In this section, the speaker discusses converting a classifier that works on numerical vectors so that it produces its results from a neural network, using ONNX. They explain that the SoftMax function was not used because it slowed the process down significantly, and that manually entered values were used instead. The model was then loaded and tested for accuracy, with promising results. However, installation proved difficult on different operating systems and frameworks, which highlights the need for thorough testing and flexibility when deploying models.

- 00:15:00 In this section, the speaker discusses the problems that can arise when developing software on different platforms and the importance of using established libraries rather than implementing everything manually. The speaker mentions issues with different floating-point formats and how these can lead to errors when working with very large or very small numbers. The speaker also suggests using existing implementations of functions like SoftMax rather than writing custom ones, because the developers of established libraries have already dealt with the problems that may arise.

- 00:20:00 In this section, the speaker discusses the issues encountered while developing on macOS and the differences between macOS and Linux. The speaker also mentions the need to load only the necessary modules and disable the rest, because loading too many modules can significantly increase execution time. The speaker recommends using the "timeit" utility to measure runtime accurately, taking into account the various factors that can affect performance.

- 00:25:00 In this section of the video, the speaker discusses the importance of measuring execution time for code, particularly for comparing the performance of different pieces of code. They emphasize the need to measure execution time multiple times and take the average for a more accurate assessment. The speaker then uses this approach to compare the performance of two pieces of code with different ways of handling data, which showed that one method was significantly faster than the other. Finally, the speaker provides a link to their presentation and thanks the audience for their participation.

- 2023.01.25

- www.youtube.com
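
The floating-point pitfall from the 00:15:00 segment and the repeated timing advice from the 00:20:00 and 00:25:00 segments can be illustrated together using only the Python standard library. This is a sketch written for this summary, not the speaker's code: the function names `naive_softmax`, `stable_softmax` and `average_seconds` are hypothetical. In practice an established implementation from a numerical library would be preferred, as the speaker recommends; the hand-written version only shows the kind of problem such libraries already handle.

```python
import math
import timeit


def naive_softmax(values):
    """Textbook formula; math.exp raises OverflowError for large inputs."""
    exps = [math.exp(v) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(values):
    """Subtract the maximum first so that every exponent is <= 0 and cannot overflow."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def average_seconds(func, data, repeats=5, calls=1000):
    """Time `calls` invocations, `repeats` times, and return the mean seconds per call."""
    timings = timeit.repeat(lambda: func(data), repeat=repeats, number=calls)
    return sum(timings) / (repeats * calls)


if __name__ == "__main__":
    logits = [1000.0, 1001.0, 1002.0]
    try:
        naive_softmax(logits)
    except OverflowError:
        print("naive softmax overflowed on large inputs")
    print(stable_softmax(logits))
    print(f"{average_seconds(stable_softmax, [0.1, 0.2, 0.7]):.2e} s per call")
```

Averaging over several repeats smooths out one-off delays such as other processes competing for the CPU, which is why a single measurement is a poor basis for comparing two pieces of code.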

We have added support for ONNX models in MQL5 since we believe this is the future. We have created this topic to discuss and study this promising field which can assist in raising the use of machine learning to a new level. By using the new capabilities, you can train models in your preferred environment and then run them in trading with minimal effort.

A brief technology overview

ONNX (Open Neural Network Exchange) is an open-source format for exchanging machine learning models between various frameworks. Developed by Microsoft, Facebook and Amazon Web Services (AWS), it aims at facilitating the development and deployment of ML models.

Key benefits of ONNX:

To use ONNX, developers can export their models from various frameworks such as TensorFlow or PyTorch to the ONNX format. Further, the models can be included in MQL5 applications and run in the MetaTrader 5 trading terminal. This is a unique opportunity not offered by anyone else.
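
As an illustration of the export step, the sketch below uses `torch.onnx.export` from PyTorch. It is a minimal example under stated assumptions rather than an official recipe: it assumes the `torch` package is installed, and the helper name `export_to_onnx`, the tensor names in `INPUT_NAME` and `OUTPUT_NAME`, and any file name passed to it are hypothetical choices made for this example.

```python
INPUT_NAME = "input"    # hypothetical name given to the model's input tensor
OUTPUT_NAME = "output"  # hypothetical name given to the model's output tensor


def export_to_onnx(model, example_input, onnx_path):
    """Trace a trained PyTorch model with an example input and save it as ONNX."""
    import torch  # imported lazily so this module can be loaded without PyTorch

    model.eval()  # switch layers such as dropout to inference behavior
    torch.onnx.export(
        model,
        example_input,  # a tensor with the shape the model expects
        onnx_path,
        input_names=[INPUT_NAME],
        output_names=[OUTPUT_NAME],
    )
    return onnx_path
```

The names assigned at export time are the ones a consumer of the file uses later to feed inputs and read outputs, so it helps to choose them deliberately and keep them stable.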

One of the most popular tools for converting models to the ONNX format is Microsoft's ONNXMLTools. ONNXMLTools can be easily installed. For installation details and model conversion examples, please see the project page at https://github.com/onnx/onnxmltools#install.

To run a trained model, you should use ONNX Runtime. ONNX Runtime is a high-performance cross-platform engine designed to run ML models exported in the ONNX format. With ONNX, developers can build models in one framework and then easily deploy them in other environments. This provides flexibility while simplifying the development process.

ONNX is a powerful tool for ML developers and researchers. Use these capabilities to efficiently develop and implement models in trading using MQL5.

Explore available information and new approaches to working with ML models and share them with MQL5.community members. We have found some useful publications concerning this topic, which can assist you in exploring and getting started with this new promising technology.