- 4.2.4.1 Building a test recurrent model in Python


To build the test recurrent models in Python, we reuse the previously developed template. Specifically, we take the script convolution.py, which we used to test the convolutional models, and save a copy of it as lstm.py. In this copy, we keep the perceptron model and the best-performing convolutional model and delete the rest. This lets us compare the new models against the architectures discussed earlier.

```python
# Creating a perceptron model with three hidden layers and regularization
```

```python
# Model with 2-dimensional convolutional layer
```

After that, we create three new models based on the recurrent LSTM block. We start with the convolutional neural network model and replace its convolutional and pooling layers with a single recurrent layer that has 40 output neurons. Note that the LSTM layer expects a three-dimensional input tensor in the format [batch, timesteps, feature]. As with the convolutional layer, we do not specify the batch dimension when defining the layer's shape, because its value is determined by the batch size of the input data.

```python
# Add an LSTM block to the model
```
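The [batch, timesteps, feature] layout can be illustrated with plain NumPy. The sketch makes one hypothetical assumption: each training sample arrives as a flat vector holding 40 time steps of 4 features each, stored step by step. Under that assumption, a simple reshape produces the three-dimensional tensor that the LSTM layer expects. Inside a Keras model, `keras.layers.Reshape((-1, 4))` performs the same regrouping and leaves the batch dimension untouched.

```python
import numpy as np

# Hypothetical sizes: 3 samples, 40 time steps, 4 features per time step
BATCH, TIMESTEPS, FEATURES = 3, 40, 4

# Flat input rows as a fully connected model would see them: [batch, timesteps * feature]
flat = np.arange(BATCH * TIMESTEPS * FEATURES, dtype=np.float32).reshape(BATCH, TIMESTEPS * FEATURES)

# Regroup every 4 consecutive values into one time step: [batch, timesteps, feature]
# (-1 lets NumPy infer the number of time steps, as the Keras Reshape layer does)
seq = flat.reshape(BATCH, -1, FEATURES)

print(seq.shape)   # (3, 40, 4)
print(seq[0, 1])   # second time step of the first sample: [4. 5. 6. 7.]
```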

In this model, we set the parameter return_sequences=False. This instructs the recurrent layer to return a result only after it has processed the entire input sequence, that is, the output for the last time step. In this mode, the LSTM layer returns a two-dimensional tensor in the format [batch, feature], and the size of the feature dimension equals the number of neurons specified when creating the recurrent layer. This is exactly the input format a fully connected layer expects. Therefore, no additional reshaping is required, and the recurrent layer can be followed directly by fully connected layers.

```python
keras.layers.Dense(40, activation=tf.nn.swish,
```
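The shapes described above can be checked by calling the layers directly on a random batch. This is a minimal sketch under assumed sizes of 8 samples, 40 time steps and 4 features, which are not taken from the original script. It shows that an LSTM with return_sequences=False returns [batch, units] and that a Dense layer accepts this tensor as is.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# Hypothetical batch: 8 samples, 40 time steps, 4 features per step
x = np.random.rand(8, 40, 4).astype("float32")

lstm = keras.layers.LSTM(40, return_sequences=False)
h = lstm(x)                      # output after the last time step only: [batch, 40]

dense = keras.layers.Dense(40, activation=tf.nn.swish)
y = dense(h)                     # no reshaping needed between the two layers

print(tuple(h.shape), tuple(y.shape))  # (8, 40) (8, 40)
```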

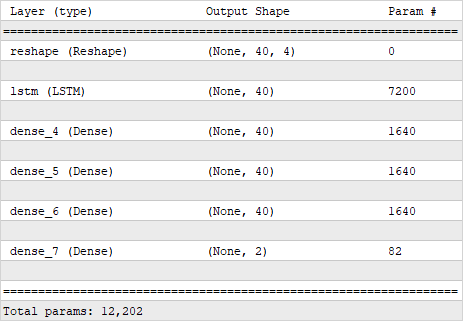

Structure of a recurrent model with four fully connected layers

In this implementation, the recurrent layer performs preliminary data processing, while decisions are made by the fully connected perceptron layers that follow it. The resulting model has 12,202 parameters.
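The first recurrent model can be sketched in Keras as follows. The original listing is not reproduced here, so several sizes are assumptions: 40 time steps of 4 features at the input, 2 outputs and a tanh output activation. The first three were chosen because they make the parameter count agree with the 12,202 reported above. Kernel regularizers, if the original layers use them, would not change the count.

```python
import tensorflow as tf
from tensorflow import keras

# Assumed sizes (hypothetical, chosen to match the reported parameter count)
HISTORY_BARS = 40   # time steps per sample
FEATURES = 4        # features per time step
TARGETS = 2         # size of the network output

model2 = keras.Sequential([
    keras.Input(shape=(HISTORY_BARS * FEATURES,)),
    keras.layers.Reshape((-1, FEATURES)),                # -> [batch, 40, 4]
    keras.layers.LSTM(40, return_sequences=False),       # -> [batch, 40], 7,200 params
    keras.layers.Dense(40, activation=tf.nn.swish),      # 1,640 params
    keras.layers.Dense(40, activation=tf.nn.swish),      # 1,640 params
    keras.layers.Dense(40, activation=tf.nn.swish),      # 1,640 params
    keras.layers.Dense(TARGETS, activation=tf.nn.tanh),  # 82 params
])

model2.summary()
print(model2.count_params())  # 12202
```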

We compile all neural models with the same parameters: the Adam optimization method, the mean squared error (MSE) as the loss function, and accuracy as an additional metric.

```python
model2.compile(optimizer='Adam',
               loss='mean_squared_error',
               metrics=['accuracy'])
```
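For reference, the mean squared error minimized during training is the average of the squared differences between targets and predictions. The minimal NumPy sketch below uses made-up numbers. For equally sized samples, this overall mean equals the value that Keras reports for `loss='mean_squared_error'`.

```python
import numpy as np

def mse(y_true, y_pred):
    # Mean of the squared element-wise differences
    diff = np.asarray(y_true, dtype=np.float64) - np.asarray(y_pred, dtype=np.float64)
    return float(np.mean(diff ** 2))

# Made-up targets and predictions for 2 samples with 2 outputs each
y_true = np.array([[0.5, -0.2], [0.1, 0.3]])
y_pred = np.array([[0.4, -0.2], [0.3, 0.3]])

print(mse(y_true, y_pred))  # approximately 0.0125
```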

The earlier neural network models were compiled with the same parameters.

One more point should be noted. Recurrent models are sensitive to the order in which the input signals are fed. Therefore, unlike with the previously discussed models, we do not shuffle the input data during training: when starting the training, we set the shuffle parameter to False. The remaining training parameters stay unchanged.

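```python
history2 = model2.fit(train_data, train_target,
                      # the remaining training parameters are the same as for the earlier models
                      shuffle=False)
```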
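The compile and fit settings can be tried end to end on synthetic data. This is a minimal sketch rather than the original script. The random arrays, the small LSTM(8) model and the epochs, batch_size and validation_split values are all placeholders. Note that Keras takes the validation_split fraction from the last samples of the arrays before any shuffling. With chronologically ordered data, the validation set is therefore the most recent part of the history.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# Synthetic stand-ins for train_data / train_target (hypothetical shapes)
rng = np.random.default_rng(0)
train_data = rng.normal(size=(64, 160)).astype("float32")
train_target = rng.uniform(-1.0, 1.0, size=(64, 2)).astype("float32")

model = keras.Sequential([
    keras.Input(shape=(160,)),
    keras.layers.Reshape((-1, 4)),                 # -> [batch, 40, 4]
    keras.layers.LSTM(8),                          # small layer to keep the sketch fast
    keras.layers.Dense(2, activation=tf.nn.tanh),
])
model.compile(optimizer='Adam', loss='mean_squared_error', metrics=['accuracy'])

history = model.fit(train_data, train_target,
                    epochs=2, batch_size=16,
                    validation_split=0.25,   # last 25% of samples, taken before shuffling
                    shuffle=False,           # batches are fed in their original order
                    verbose=0)
print(sorted(history.history.keys()))
```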

In the first model, the recurrent layer pre-processes the data and a fully connected perceptron makes the decision. However, recurrent layers can also be used on their own, without any subsequent fully connected layers, and this is the implementation I propose to consider as the second model. Here we simply replace all the fully connected layers with a single recurrent layer whose size matches the desired output size of the neural network.

Keep in mind that a recurrent layer requires a three-dimensional input tensor, whereas the first recurrent layer (with return_sequences=False) outputs a two-dimensional one. Therefore, we need to reshape the data before passing it to the next recurrent layer. In this implementation, we set the last dimension to two and let the model calculate the size of the time-step dimension. The reshaping does not change any values. It only regroups the elements of the output vector into consecutive pairs, and this grouping is identical for every sample. Strictly speaking, these elements are features of the last hidden state rather than true time steps, so the second layer receives an artificial sequence whose order is nevertheless fixed.

```python
# LSTM block model without fully connected layers
```
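The NumPy sketch below shows what this reshaping does to the values. It assumes a hypothetical batch of 2 samples and uses consecutive numbers as stand-ins for the 40 outputs of the first LSTM layer. Inside the model, `keras.layers.Reshape((-1, 2))` performs the same regrouping.

```python
import numpy as np

# Stand-in for the first LSTM layer's output: [batch, 40] (hypothetical values)
lstm_out = np.arange(2 * 40, dtype=np.float32).reshape(2, 40)

# Last dimension fixed at 2, number of steps inferred: [batch, 20, 2]
regrouped = lstm_out.reshape(2, -1, 2)

print(regrouped.shape)   # (2, 20, 2)
print(regrouped[0, 0])   # first pair of the first sample: [0. 1.]

# Flattening back recovers the original tensor exactly: no values are changed or lost
assert np.array_equal(regrouped.reshape(2, 40), lstm_out)
```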

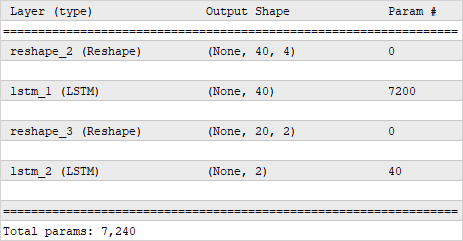

Now we have a neural network where the first recurrent layer performs preliminary data processing, and the second recurrent layer generates the output of the neural network. By eliminating the use of a perceptron, we've reduced the number of neural layers in the network and, consequently, the total number of parameters, which in the new model amounts to 7,240 parameters.

The structure of a recurrent neural network without the use of fully connected layers
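A Keras sketch of the second recurrent model follows. It uses the same hypothetical sizes as before: 40 time steps of 4 features and 2 outputs. These are assumptions that happen to reproduce the 7,240 parameters. The second LSTM layer gets as many units as there are network outputs.

```python
import numpy as np
from tensorflow import keras

# Assumed sizes (hypothetical): 40 time steps x 4 features in, 2 outputs
HISTORY_BARS, FEATURES, TARGETS = 40, 4, 2

model4 = keras.Sequential([
    keras.Input(shape=(HISTORY_BARS * FEATURES,)),
    keras.layers.Reshape((-1, FEATURES)),           # -> [batch, 40, 4]
    keras.layers.LSTM(40, return_sequences=False),  # -> [batch, 40], 7,200 params
    keras.layers.Reshape((-1, 2)),                  # -> [batch, 20, 2], last dimension fixed at 2
    keras.layers.LSTM(TARGETS),                     # -> [batch, 2], 40 params
])

print(model4.count_params())  # 7240
pred = model4.predict(np.zeros((3, HISTORY_BARS * FEATURES), dtype="float32"), verbose=0)
print(pred.shape)             # (3, 2)
```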

We compile and train the model with the same parameters as all previous models.

```python
model4.compile(optimizer='Adam',
               loss='mean_squared_error',
               metrics=['accuracy'])
```

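```python
history4 = model4.fit(train_data, train_target,
                      # the remaining training parameters are the same as for the earlier models
                      shuffle=False)
```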

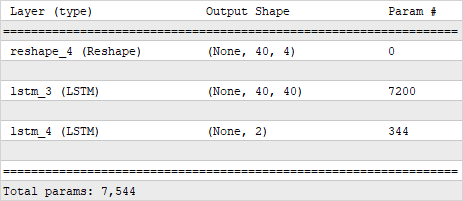

In the second recurrent model, we reshaped the output tensor of the first layer to create the input tensor for the second LSTM layer. Keras offers another option. Setting return_sequences=True in the first LSTM layer switches it to a mode in which it outputs a result at every time step. The recurrent layer then directly returns a three-dimensional tensor in the format [batch, timesteps, feature], so no reshaping is needed before the second recurrent layer.

```python
# LSTM model block without fully connected layers
```

The structure of a recurrent neural network without the use of fully connected layers
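The third recurrent model can be sketched the same way. As before, the sizes are hypothetical, and `model5` is a placeholder name for this model. The first part of the sketch shows that an LSTM with return_sequences=True emits one 40-dimensional vector per time step. The second part stacks it directly onto an LSTM whose number of units equals the number of outputs.

```python
import numpy as np
from tensorflow import keras

HISTORY_BARS, FEATURES, TARGETS = 40, 4, 2   # assumed sizes (hypothetical)

# With return_sequences=True the output keeps the time axis: [batch, timesteps, units]
seq_out = keras.layers.LSTM(40, return_sequences=True)(
    np.zeros((3, HISTORY_BARS, FEATURES), dtype="float32"))
print(tuple(seq_out.shape))   # (3, 40, 40)

model5 = keras.Sequential([   # hypothetical name for the third recurrent model
    keras.Input(shape=(HISTORY_BARS * FEATURES,)),
    keras.layers.Reshape((-1, FEATURES)),           # -> [batch, 40, 4]
    keras.layers.LSTM(40, return_sequences=True),   # -> [batch, 40, 40], 7,200 params
    keras.layers.LSTM(TARGETS),                     # -> [batch, 2], 344 params
])
print(model5.count_params())  # 7544
```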

With this construction, the shape of the tensor at the output of the first recurrent layer changes. Each time step fed to the second recurrent layer now carries 40 features instead of 2, so the second layer has slightly more parameters. This brings the model total to 7,544 parameters. That is still fewer than in the first recurrent model, which used a perceptron for decision-making.
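The parameter counts can be checked by hand. A Keras LSTM layer has four weight blocks, one per gate plus the candidate cell state. Each block has an input kernel, a recurrent kernel and a bias, so the layer holds 4 · (units · (input_dim + units) + units) parameters. The sketch assumes 4 features per time step at the input and 2 network outputs. These sizes are not stated in the text, but they are consistent with the reported totals.

```python
def lstm_params(input_dim: int, units: int) -> int:
    # 4 weight blocks, each with an input kernel, a recurrent kernel and a bias vector
    return 4 * (units * (input_dim + units) + units)

first = lstm_params(4, 40)          # shared first layer: 7,200
second_reshaped = lstm_params(2, 2) # model 4: input regrouped into pairs -> 40
second_seq = lstm_params(40, 2)     # model 5: a 40-dim vector per time step -> 344

print(first + second_reshaped)      # 7240
print(first + second_seq)           # 7544
print(second_seq - second_reshaped) # 304 extra parameters
```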

Next, we add the new models to the plotting block.

```python
# Rendering model training results
```

```python
plt.figure()
```
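The plotting block can be organized as a loop over the training histories. This is a minimal sketch, not the original code: `plot_histories` is a hypothetical helper. It takes a dictionary that maps a model label to a Keras `History.history` dictionary, such as `history2.history`, and draws the training curve plus, when present, the validation curve for one metric.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering; remove this line to show windows interactively
import matplotlib.pyplot as plt

def plot_histories(histories, metric="loss"):
    """Plot one metric for several models (hypothetical helper).

    histories: dict mapping a model label to a Keras History.history dict.
    """
    fig = plt.figure()
    for label, hist in histories.items():
        plt.plot(hist[metric], label=f"{label} train")
        val_key = "val_" + metric
        if val_key in hist:
            plt.plot(hist[val_key], "--", label=f"{label} validation")
    plt.title(f"Training history: {metric}")
    plt.xlabel("Epoch")
    plt.ylabel(metric)
    plt.legend()
    return fig
```

Usage might look like `plot_histories({'LSTM model': history2.history, 'LSTM only model': history4.history})`, followed by `plt.show()` in an interactive session.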

Additionally, let's add the new models to the testing block to evaluate their performance on the test dataset and display the results.

```python
# Check the results of models on a test sample
```

```python
print('LSTM model')
```

```python
print('LSTM only model')
```

```python
print('LSTM sequences model')
```
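The testing block repeats the same steps for every model, so it can be expressed as a loop. In this minimal sketch, `report_test_results` is a hypothetical helper, and `test_data` and `test_target` are placeholder names for the test sample. The helper relies on the fact that `evaluate` on a model compiled with one metric returns the loss followed by that metric.

```python
def report_test_results(models, test_data, test_target):
    """Evaluate each compiled model on the same test set and print the results (hypothetical helper).

    models: dict mapping a display name to a compiled Keras model.
    """
    results = {}
    for name, model in models.items():
        loss, acc = model.evaluate(test_data, test_target, verbose=0)
        print(name)
        print(f"  Test loss: {loss:.6f}, test accuracy: {acc:.4f}")
        results[name] = (loss, acc)
    return results
```

A possible call is `report_test_results({'LSTM model': model2, 'LSTM only model': model4}, test_data, test_target)`, with the third recurrent model added under its own name.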

In this section, we have prepared a Python script that creates a total of 5 neural network models:

- Fully connected perceptron

- Convolutional model

- 3 models of recurrent neural networks

When the script runs, all five models are briefly trained on the same dataset, and their performance is then compared on a shared test set. This lets us compare the different architectural solutions on real data. The test results are presented in the next chapter.