- Program types and their construction features

- Statistical analysis and fuzzy logic tools

- OpenCL: Parallel computations in MQL5

- Integration with Python

OpenCL: Parallel computations in MQL5

A large huge number of calculations are carried out while training and running neural networks. This is a rather resource-intensive process. Solving more complex problems also requires more complex neural networks with a large number of neurons. With an increase in the number of neurons in the network, the amount of computations performed increases, and consequently, the consumption of resources and time also increases. While humanity has learned to create new and more advanced computational machines, managing time is still beyond our capabilities.

It's natural that the next wave of neural network development has come with the advancement of computational power. As a rule, neurons perform fairly simple operations, but in large numbers. At the same time, there are a lot of similar neurons in the neural network. This allows for parallelizing individual blocks of computations on different computational resources and then consolidating the obtained data. As a result, the time for performing operations is significantly reduced.

The development of computing technologies has led to the emergence of video cards (GPU) with a large number of computing cores capable of performing simple mathematical operations. Next, it became possible to transfer part of the calculations from the CPU to the GPU, which made it possible to parallelize the calculation process both between the microprocessor and the video card, and at the video card level between different computing units.

OpenCL (Open Computing Language) is an open free standard for cross-platform parallel programming of various accelerators used in supercomputers, cloud servers, personal computers, mobile devices, and embedded platforms.

OpenCL is a C-like programming language that allows GPU computing. The support of this language in MQL5 allows us to organize multi-threaded calculations of our neural networks on the GPU directly from the MQL5 program.

To understand the organization of GPU computing, it is necessary to make a short digression into the architecture of video cards and the OpenCL API.

In OpenCL terminology, a computer's microprocessor (CPU) is a Host. It manages all the processes of the program that is being executed. All microprocessors with support for OpenCL technology in the CPU and GPU are Devices. Each device has its own unique number within the platform.

One Device can have multiple Computer Units. Their number is determined by the number of physical and virtual microprocessor cores. For video cards, these will be SIMD cores. Each SIMD core contains several Stream Cores. Each thread processor has several processing elements Processing Elements (or ALU).

The specific number of Computer Units, SIMD cores, Stream Cores, and Processing Elements depends on the architecture of a particular device.

An important feature of the GPU is vector computing. Each microprocessor consists of several computing modules. They can all execute the same instruction. At the same time, different executable threads may have different initial data. This allows all threads of the GPU program to process data in parallel. Thus, all computing modules are loaded evenly. A big advantage is that vectorization of computations is done automatically at the hardware level, without the need for additional processing in the program code.

OpenCL was developed as a cross-platform environment for creating programs using mass parallel computing technology. The applications created in it have their own hierarchy and structure. Organization of the program, preparation, and consolidation of data are carried out in the Host program.

The Host, like a regular application, starts and runs on the CPU. To organize multi-threaded computations, a context is allocated, which is an environment for executing specialized objects of the OpenCL program. A context combines a set of OpenCL devices for running a program, the program objects themselves with their source codes, and a set of memory objects visible to the host and to OpenCL devices. The function responsible for creating a context in MQL5 is CLContextCreate, in which the device for executing the program is specified as a parameter. The function returns a context handle.

int CLContextCreate( |

Inside the context, an OpenCL program is created using the CLProgramCreate function. The parameters of this function include the context handle and the source code of the program itself. As a result of the function execution, we obtain a program handle.

int CLProgramCreate( |

An OpenCL program is divided into separate kernels which are executable functions. The CLKernelCreate function is provided for declaring a kernel. In the parameters of the function, you specify the handle of the previously created program and the name of the kernel within it. At the output, we get the handle of the kernel.

int CLKernelCreate( |

Please note that later, when the kernel is called from the main program on the GPU, several of its instances are launched in different parallel threads. This defines the index space, NDRange, which can be one-dimensional, two-dimensional, or three-dimensional. NDRange is an array of integers. The size of the array indicates the dimension of the space, and its elements indicate the dimension in each of the directions.

Each copy of the kernel is executed for each index from this space and is called a Work-Item. Each work item is provided with a global index ID. In addition, each such unit executes the same code, but the data for execution may be different.

Work items are organized into Work-Groups. Work groups represent a larger partition in the index space. Each group is assigned a group index ID. The dimensionality of work groups corresponds to the dimensionality used for addressing individual elements. Each element is assigned a unique local index ID within the group. Thus, work units can be addressed either by the global index ID or by a combination of group and local indices.

This approach allows for reducing the computation time, but at the same time complicates the process of data exchange between different kernel instances. This needs to be taken into account when creating programs.

As mentioned above, the OpenCL program operates within its own context, isolated from the calling program. As a consequence, it does not have access to the variables and arrays of the main program. Therefore, before starting the program, you need to copy all the data necessary for executing the program from RAM to GPU memory. After the kernel has finished its execution, the obtained results need to be loaded back from the GPU memory. At this point, you need to understand that the time spent on copying data from RAM to GPU memory and back is an overhead time when performing calculations on video cards. Therefore, in order to reduce the overall execution time of the entire program, transferring calculations to the GPU is advisable only when the time saved from GPU computations significantly outweighs the costs of data transfer.

Inside the GPU, there is also a ranking of memory into global, local, and private. The fastest access is achieved for the kernel to private memory, but access to it is only possible from the current instance of the kernel. The most time-consuming access is required for the global memory, but its capacity is the largest among the three mentioned. All running instances of the kernel have access to it. Global memory is used to exchange information with the main program.

Global memory provides read and write access to elements for all work groups. Each Work-Item can write to and read from any part of the global memory.

Local memory is a group-local memory area, in which you can create variables shared by the entire group. It can be implemented as dedicated memory on the OpenCL device or allocated as a region within the global memory.

Private memory is an area visible only to the Work-Item. Variables defined in the private memory of one work item are not visible to others.

Sometimes constant memory is also allocated. This is an area of global memory that remains constant during the execution of the kernel. The host allocates and initializes memory objects located in constant memory.

Let's consider two implementations of a single task: one using the OpenCL technology and the other without. As you will see later, one of the main operations we will be using is matrix multiplication. We will be performing matrix multiplication of matrix by matrix and matrix by vector. For the experiment, I propose comparing the multiplication of a matrix by a vector.

For the matrix-vector multiplication function in the classical implementation, we will use a system of two nested loops. Below is an example of such an implementation. The function parameters include a matrix and two vectors: the matrix and one vector of input data, as well as one vector for storing the results. According to the rules of vector mathematics, multiplication is only possible when the number of columns in the matrix is equal to the size of the vector. The result of such an operation will be a vector with a size equal to the number of rows in the matrix.

//+------------------------------------------------------------------+ |

In the body of the function, we will first determine the dimensions of the matrix, check for compatibility with the vector size, and set the size of the output vector to be equal to the number of rows in the input matrix. After that, we will construct a system of loops. The outer loop will iterate over the rows of the matrix and, accordingly, the elements of the result vector. In the body of this loop, we will start by setting the corresponding element of the result vector to zero. Then, we will create a nested loop and calculate the sum of the products of corresponding elements of the current matrix row and the vector.

The function is not complicated. However, the weak point of such an implementation is the increase in execution time proportional to the growth of the number of elements in the matrix and the vector.

This issue can be solved by using OpenCL. Of course, such an implementation will be a little more complicated. First, let's write an OpenCL program and save it in the mult_vect_ocl.cl file. The *.cl extension is generally accepted for OpenCL programs, but not necessary for implementation in the MQL5 environment. In this case, we will use the file only to store the program text, while the program will be loaded as text.

We will enable support for the double data type in the program code. Please note that not all GPUs support the double type. And even if they do, in most cases this functionality is disabled by default.

//--- By default some GPUs don't support doubles |

And another aspect to consider. MetaTrader 5 allows the use of OpenCL devices for calculations with both double precision support and without. Therefore, when using the double data type in your OpenCL program, it's important to check the compatibility of the used device. Otherwise, we can get an error during the execution of the OpenCL program and while terminating its operation.

At the same time, MetaTrader 5 does not limit the ability to use all available data types. The OpenCL language allows for the use of various scalar data types:

- Boolean: bool

- Integers: char, uchar, short, ushort, int, uint, long, ulong

- Floating-point: float, double

Similar data types are also supported in MQL5. It's important to remember that each data type has its own limitations on the possible range of values, as well as the amount of memory used to store the data. Therefore, if your program doesn't require high precision or the range of possible values isn't too large, it's recommended to use less resource-intensive data types. This will allow more efficient use of device memory and reduce the cost of copying data between the main memory and OpenCL context memory. In particular, the type double can be replaced with float. It provides lower precision, but it occupies half the memory and is supported by all modern OpenCL devices. This helps reduce the costs of data transfer between devices and expand the application's usability.

OpenCL also allows you to use vector data types. Vectorization allows parallelizing computations at the microprocessor level rather than at the software level. Using a vector of four elements of the type double allows you to completely fill the 256-bit vector of SIMD instructions and perform calculations on the entire vector in one cycle. In this way, during one clock cycle of the microprocessor, we perform operations on four elements of our data array.

OpenCL supports vector variables of all integer and floating point types of 2, 3, 4, 8, and 16 elements. However, the possibility of using them depends on the specific device. Therefore, before choosing a vector dimension, check the technical characteristics of your equipment.

Now back to our program. Please provide the kernel code for calculating the vector product of a matrix row with a vector. In the kernel parameters, we will specify pointers to the arrays of input data and results. We will also pass the number of columns in the matrix as a parameter. The number of rows in the matrix does not matter for operations in the kernel, since it only performs operations of multiplying one row by a vector. Essentially, it is the multiplication of two vectors.

Note here that instead of the type of data buffers, we specified the abstract type TYPE. You will not find such a data type in any documentation. In fact, as mentioned above, not all OpenCL devices support the type double. To make our program more versatile, it was decided to replace the data type using a macro substitution. We will specify the actual data type in the main program. This approach allows us to literally change the data type in one place in the main program. After that, the entire program will switch to working with the specified data type without the risk of losing information due to type mismatch.

In the kernel body, the get_global_id function will specify the global ID index of the running Work-Item unit. In this case, the index serves as an equivalent to the iteration counter of the outer loop in the classical implementation. It specifies the sequence number of the matrix array and the element of the result vector. Next, we will calculate the sum of values for the corresponding thread in a similar manner to the calculation inside the nested loop of the classical implementation. But there is a nuance here. For the calculations, we will utilize vector operations with four elements. In turn, to use vector operations, we need to prepare the data. We get an array of scalar elements from the Host program, so we will transfer the necessary elements to our private vector variables using the ToVect function (we will consider its code below). Then, using the vector operation dot, we obtain the value of the multiplication of two vectors of four elements. In other words, with one operation, we obtain the sum of the products of four pairs of values. The obtained value is added to a local variable where the product of the matrix row and the vector accumulates.

After exiting the loop, we will save the accumulated sum into the corresponding element of the result vector.

//+------------------------------------------------------------------+ |

As mentioned earlier, to transfer data from the scalar value buffer to a vector variable, we have created the ToVect function. In the function parameters, we pass a pointer to the data buffer, the starting element, the total number of elements in the vector (matrix row), and the offset in the buffer before the beginning of the vector. The last parameter, offset, is needed to accurately determine the start of a row in the matrix buffer since OpenCL uses one-dimensional data buffers.

Next, we check the number of elements until the end of the vector to avoid going beyond its bounds and transfer the data from the buffer to the private vector variable. We fill the missing elements with zero values.

TYPE4 ToVect(__global TYPE *array, int start, int size, int shift) |

As a result, the function returns the created vector variable with the corresponding values.

This completes the OpenCL program. Next, we will continue working on the side of the main program (Host). MQL5 provides the COpenCL class in the standard library OpenCL.mqh for operations with OpenCL.

First, let's perform the preparatory work: we will include the standard library, load the previously created OpenCL program as a resource, and declare constants for the kernel, buffer, and program parameters indices. We will also specify the data type used in the program. I specified the float type because my laptop's integrated GPU does not support double.

#include <OpenCL/OpenCL.mqh> |

Let's declare an instance of the class for working with OpenCL and variables for storing data buffer handles.

COpenCL* cOpenCL; |

In the next step, we will initialize an instance of the class. To do this, we will create the OpenCL_Init function. In the function parameters, we will pass the matrix and the vector of input data.

In the function body, we will create an instance of the class for working with OpenCL, initialize the program, specify the number of kernels, and create pointers to the kernel and data buffers. We will also copy the input data into the context memory. At each step, we check the results of the operations, and in case of an error, we exit the method with a result of false. The function code is provided below.

bool OpenCL_Init(matrix<TYPE> &source1, vector<TYPE> &source2) |

buffer_Result = CLBufferCreate(cOpenCL.GetContext(), |

The actual calculations will be carried out in the kernel. To run it, let's write the MultOCL function. In the function parameters, we will pass a pointer to the result vector and the dimensions of the input data matrix.

First, we will pass pointers to data buffers and parameters of buffer sizes to the kernel. These operations are performed by the CLSetKernelArgMem and SetArgument methods. We define the index space in the NDRange array according to the number of rows in the source data matrix. The kernel is launched for execution using the Execute method. After executing the entire array of kernel instances, we read the computation results from the device memory using the CLBufferRead method.

bool MultOCL(int rows, int cols, vector<TYPE> &result) |

After the program has finished running, it's necessary to release resources and delete the instance of the class for working with OpenCL. This functionality is performed in the OpenCL_Deinit function. In it, we will first check the validity of the pointer to the object, then call the Shutdown method to release resources, and finally delete the object.

void OpenCL_Deinit() |

Obviously, when using OpenCL, the amount of work for the programmer increases. What do we get in return?

To evaluate the performance, let's create a small script opencl_test.mq5. In the external parameters of the script, we specify the size of the input data matrix.

//+------------------------------------------------------------------+ |

In the body of the script, let's declare the matrix and data vectors We will fill the input data with random values.

//+------------------------------------------------------------------+ |

In the next step, we will initialize the OpenCL context by calling the previously discussed OpenCL_Init function. At the same time, do not forget to check the results of the operations.

if(!OpenCL_Init(X, Y)) |

Now we can measure the speed of operations in the OpenCL context. Using the GetTickCount function, we get the number of milliseconds from the system start before and after the calculations. Calculations are feasible in the previously considered MultOCL function.

uint start = GetTickCount(); |

After performing the operations, we clear the OpenCL context.

In a similar manner, we will measure the execution time of operations using the classical method on the CPU.

start = GetTickCount(); |

In conclusion of the script, we will once again add a timing measurement for the matrix-vector multiplication using matrix operations in MQL5.

start = GetTickCount(); |

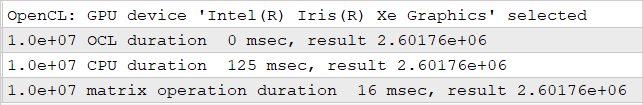

The described script was tested on a laptop with an Intel Core i7-1165G7 CPU and an integrated Intel(R) Iris(R) Xe GPU. Based on the measured execution times, the OpenCL technology emerged as the winner. The slowest was the classical implementation using the nested loops system. Furthermore, the computation results were identical in all three variants.

The results of the comparative testing of computations using OpenCL and without it are as follows:

It's important to note that when measuring the computation speed using OpenCL technology, we excluded overhead costs such as the initialization and deinitialization of the OpenCL context, program, buffers, and data transfer. Therefore, when performing individual operations, its usage might not be as efficient. However, as will be shown further, during the training and operation of neural networks, there will be many such operations, and the process of initializing the OpenCL context and program will only occur once, during the program launch. At the same time, we will try to minimize the process of data exchange between devices. Therefore, utilizing this technology will be highly beneficial.