Sometimes I want the fast genetic optimization to test many more possibilities than it does. I have a small neural network that seemingly has lots of local minima. For each weight, the optimizer may converge toward a good value while a much better negative value is never tested. I'd like it to first try far more starting points, to make sure it finds the best one, without running all 1 trillion combinations of the slow complete algorithm.

You can see that it gets stuck in local minima by running the test with the same inputs a couple of times. Depending on the random values it tries at the start, the best result of each run is different, which indicates that not all runs found the very best result (the global minimum). Sometimes it looks like it is testing a lot of options, but it got a pretty good pass by chance at the start. Each generation then improves slowly, but it doesn't reach the level of that early good pass in time, so it stops automatically, assuming it can't improve any further.

So, is there a way to change how many possibilities it tries each generation (I think it's called population size)? And how many generations it runs without improvement before it decides it can't improve anymore and stops? If these can't be changed, how could I make a feature request to add them?

Another feature I would request is a stop-optimization button that stops only the backtesting and then still proceeds with the forward testing. Currently the stop button stops the whole thing.

Also, if anyone could look at the bug in one of my other posts, I would appreciate it. (https://www.mql5.com/en/forum/454524)

No! It is a "black box" and we have no direct control over it.

You can try the Service Desk, but I very much doubt you will get any result.

It will be easier for you to build your own optimisation methods using the following functionality: Documentation on MQL5: Working with Optimization Results
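If you build your own optimiser, the two settings asked about above (population size, and how many generations without improvement before stopping) become ordinary parameters you control. Below is a minimal genetic-algorithm sketch in Python with both knobs exposed. The names `population_size` and `patience`, the toy `fitness` function, and the tournament/blend/Gaussian operators are assumptions for illustration; this is not how the MetaTrader 5 tester's genetic optimizer works internally.

```python
import math
import random


def fitness(x):
    # Hypothetical toy objective with many local maxima; stands in for a backtest score.
    return math.sin(3 * x) + 0.5 * math.sin(7 * x) - 0.01 * x * x


def genetic_optimize(fitness, low, high, population_size=50, patience=10,
                     max_generations=500, mutation_scale=0.5, seed=None):
    """Maximize fitness(x) on [low, high]; stop after `patience` generations without improvement."""
    rng = random.Random(seed)
    population = [rng.uniform(low, high) for _ in range(population_size)]
    best_x = max(population, key=fitness)
    best_f = fitness(best_x)
    stale = 0        # generations since the best score last improved
    generation = 0

    def select(pop):
        # Tournament selection: the fitter of two random individuals.
        # (fitness is re-evaluated for brevity; cache scores if each call is a backtest.)
        a, b = rng.choice(pop), rng.choice(pop)
        return a if fitness(a) >= fitness(b) else b

    for generation in range(1, max_generations + 1):
        children = [best_x]  # elitism: the best individual always survives
        while len(children) < population_size:
            p1, p2 = select(population), select(population)
            w = rng.random()
            child = w * p1 + (1 - w) * p2                 # blend crossover
            child += rng.gauss(0, mutation_scale)         # Gaussian mutation
            children.append(min(max(child, low), high))   # clamp to the search range
        population = children
        gen_best = max(population, key=fitness)
        if fitness(gen_best) > best_f:
            best_x, best_f = gen_best, fitness(gen_best)
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best_x, best_f, generation


for seed in range(3):
    x, f, gens = genetic_optimize(fitness, -10, 10, population_size=20, patience=5, seed=seed)
    print(f"seed={seed}: x={x:.3f} fitness={f:.3f} after {gens} generations")
```

Running it with several seeds and comparing the results reproduces the kind of run-to-run spread described in the question; raising `population_size` or `patience` buys a better chance of escaping a local optimum at the cost of more fitness evaluations (that is, more backtest passes).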

The following article may be of use ...

Parallel Particle Swarm Optimization

Stanislav Korotky, 2020.12.14 08:43

The article describes a method of fast optimization using the particle swarm algorithm. It also presents the method implementation in MQL, which is ready for use both in single-threaded mode inside an Expert Advisor and in a parallel multi-threaded mode as an add-on that runs on local tester agents.
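To get a feel for the idea in the article, here is a minimal single-threaded particle swarm sketch in Python. It is not the article's MQL implementation; the function name `pso_maximize`, the inertia and acceleration constants (common choices, assumed here), and the quadratic test objective are all illustrative.

```python
import random


def pso_maximize(fitness, low, high, dims, swarm_size=30, iterations=200,
                 inertia=0.7, c_personal=1.5, c_global=1.5, seed=None):
    """Maximize fitness(list_of_floats) inside the box [low, high]^dims."""
    rng = random.Random(seed)
    span = high - low
    pos = [[rng.uniform(low, high) for _ in range(dims)] for _ in range(swarm_size)]
    vel = [[rng.uniform(-span, span) * 0.1 for _ in range(dims)] for _ in range(swarm_size)]
    best_pos = [p[:] for p in pos]              # each particle's personal best position
    best_val = [fitness(p) for p in pos]
    g = max(range(swarm_size), key=lambda i: best_val[i])
    global_pos, global_val = best_pos[g][:], best_val[g]   # best position seen by the swarm

    for _ in range(iterations):
        for i in range(swarm_size):
            for d in range(dims):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull toward personal best + pull toward swarm best.
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (global_pos[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], low), high)  # stay in bounds
            val = fitness(pos[i])
            if val > best_val[i]:
                best_pos[i], best_val[i] = pos[i][:], val
                if val > global_val:
                    global_pos, global_val = pos[i][:], val
    return global_pos, global_val


def sphere(p):
    # Illustrative objective with a single peak at (1, 1).
    return -sum((x - 1.0) ** 2 for x in p)


best, value = pso_maximize(sphere, -5.0, 5.0, dims=2, seed=42)
print(best, value)
```

Because the swarm's fitness evaluations are largely independent of each other, PSO lends itself to running them in parallel, which is the idea behind the article's multi-threaded mode on local tester agents.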


Are you optimizing the weights of the neural network with the tester optimization?

(The way the genetic algorithm is tuned in the tester, it will keep extending the test if it finds improvement. I was using custom max, as Fernando mentioned.)


Yes, I am optimizing the weights using the tester. I know it extends the run if it finds improvement; my problem is that it sometimes finds a pretty good pass at the start, and then, while the optimization keeps slowly improving overall, it doesn't get high enough in time to extend the test.

Detailed visual depiction:

[ASCII sketch, layout lost: a single early pass (marked *) scores well above the rest; the fitness curve then rises slowly in steps, but the test stops before it gets high enough.]

Yeah, usually it does this:

Try the custom max method and, if you have NVIDIA hardware, try PyTorch. You will not be limited in how many parameters or layers you can deploy.

So, my suggestion: move the test data to Python, optimize the signal with a neural net, then bring it back into the tester and optimize the money management of that signal.


Unfortunately I have an AMD GPU, but maybe I could get it working.

You missed the asterisk in my post above. Here is a better image:


Maybe it can't "walk around" that solution with your parameters.

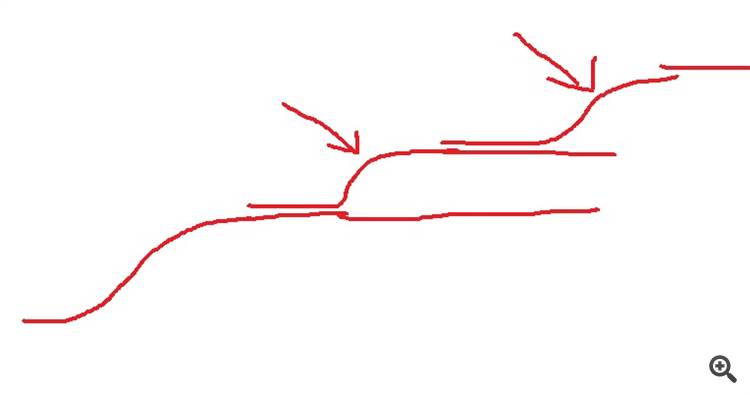

With custom max you may see something like this if you hit the sweet spot where it "appears" to be finding a new "region" for improvement.

Did you run the parameters of that asterisk pass? Was it an outlier (i.e. too few trades, too few trades that all won by chance, too few trades that all won by luck on news, etc.)? There has to be a reason it can't walk toward it.
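If that pass does turn out to be an outlier, the custom criterion itself can discount such passes. With the "Custom max" criterion the optimizer maximizes the value the EA returns from OnTester(), where net profit and trade count can be read with TesterStatistics(STAT_PROFIT) and TesterStatistics(STAT_TRADES). The scoring logic is sketched below in Python for readability; the 30-trade minimum, the sentinel value, and the 1 - 1/sqrt(trades) weighting are hypothetical choices, not a recommended formula.

```python
import math

REJECTED = -1e9  # hypothetical sentinel that ranks below any realistic pass


def custom_score(net_profit, trades, min_trades=30):
    """Hypothetical 'Custom max' score that discounts low-trade-count outliers."""
    if trades < min_trades:
        return REJECTED  # too few trades to trust the result
    if net_profit <= 0:
        return net_profit  # losses are passed through undiscounted
    # Shrink profits from small samples: the weight is ~0.82 at 30 trades, ~0.97 at 1000.
    return net_profit * (1.0 - 1.0 / math.sqrt(trades))


print(custom_score(5000.0, 8))    # lucky pass with only 8 trades: rejected
print(custom_score(1200.0, 150))
```

The point of the design is that a handful of lucky wins can no longer outrank a pass with a large, steadier sample, so the optimizer is less likely to anchor on an early outlier.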

They've added support for some AMD GPUs recently. TensorFlow supports some GPUs too, if I recall correctly.

https://www.hpcwire.com/off-the-wire/amd-extends-support-for-pytorch-machine-learning-development-on-select-rdna-3-gpus-with-rocm-5-7/#:~:text=with%20ROCm%205.7-,AMD%20Extends%20Support%20for%20PyTorch%20Machine%20Learning%20Development%20on,3%20GPUs%20with%20ROCm%205.7&text=Oct.%2016%2C%202023%20%E2%80%94%20AMD,via%20the%20ROCm%205.7%20platform.
