I set the same date and the same settings, but the results came out differently. Does anyone know why?

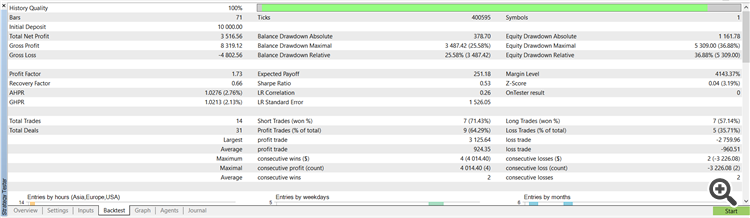

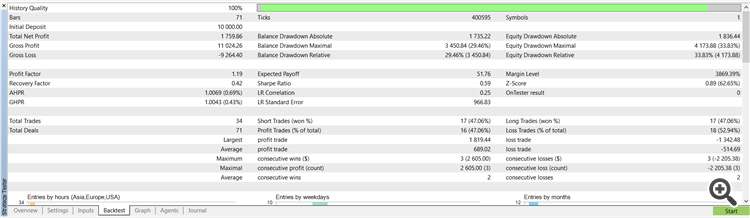

First model testing results

Second model testing results

Firstly, thank you so much for putting this together; it is nice to look in different directions. It is easy to follow and well put together.

With the MetaTrader demo account I get similar success rates and a slightly lower number of trades. With my own trading account, however, it only trades once. I assume the cause is the broker's time zone: my broker is in Australia (GMT+10). The first transaction from the demo account is: Core 1 2023.01.02 07:02:00 deal #2 sell 1 EURUSD at 1.07016 done (based on order #2)

The first transaction from my broker in Australia (GMT+10) is: Core 1 2023.01.03 00:00:00 failed market sell 1 EURUSD [Market closed], and I am not certain how to resolve this. Possibly the whole model is time zone dependent. But if that were the case, shouldn't the times differ by whole hours? How does the first transaction at 2023.01.02 07:02:00 become 2023.01.03 00:00:00?
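To check the "whole hours" reasoning, here is a minimal Python sketch that measures the gap between the two log timestamps quoted above. It assumes each timestamp is in the local time of its own trade server; the helper names `gap_between` and `is_whole_hours` are made up for this illustration.

```python
from datetime import datetime, timedelta

# Timestamp layout used in the strategy tester log lines quoted above.
FMT = "%Y.%m.%d %H:%M:%S"


def gap_between(first: str, second: str) -> timedelta:
    """Return the time elapsed from `first` to `second` (hypothetical helper)."""
    return datetime.strptime(second, FMT) - datetime.strptime(first, FMT)


def is_whole_hours(gap: timedelta) -> bool:
    """True if the gap is an exact multiple of one hour (hypothetical helper)."""
    return gap % timedelta(hours=1) == timedelta(0)


demo_first = "2023.01.02 07:02:00"    # first deal on the demo account
broker_first = "2023.01.03 00:00:00"  # first (failed) order on the GMT+10 broker

gap = gap_between(demo_first, broker_first)
print(gap, is_whole_hours(gap))
```

The gap comes out at 16 hours 58 minutes, which is not a whole number of hours. So a server time zone offset alone would not seem to explain the difference, and something else (for example, trading session times on that day) may be involved.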

Would appreciate any suggestions on the cause of this.


Same here. I managed to reproduce very similar results with the original ONNX files on my MetaQuotes-Demo account.

Then I managed to re-train the Python ML models to completion, though with the following warnings/errors, which can be ignored:

    D:\MT5 Demo1\MQL5\Experts\article_12433\Python>python ONNX.eurusd.D1.10.Training.py
    2023-11-19 18:07:38.169418: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found
    2023-11-19 18:07:38.169664: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
    data path to save onnx model
    100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 5187/5187 [00:00<00:00, 6068.93it/s]
    2023-11-19 18:07:40.434910: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'nvcuda.dll'; dlerror: nvcuda.dll not found
    2023-11-19 18:07:40.435070: W tensorflow/stream_executor/cuda/cuda_driver.cc:263] failed call to cuInit: UNKNOWN ERROR (303)
    2023-11-19 18:07:40.437138: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: WIN-SSPXX7BO0B0
    2023-11-19 18:07:40.437323: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: WIN-SSPXX7BO0B0
    2023-11-19 18:07:40.437676: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX AVX2
    To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
    Epoch 1/50
    111/111 - 1s - loss: 1.6160 - mae: 0.9378 - val_loss: 2.7602 - val_mae: 1.3423 - lr: 0.0010 - 1s/epoch - 12ms/step
    Epoch 2/50
    111/111 - 0s - loss: 1.4932 - mae: 0.8952 - val_loss: 2.4339 - val_mae: 1.2412 - lr: 0.0010 - 287ms/epoch - 3ms/step
    ...

Both ML scripts finish with:

    111/111 - 0s - loss: 1.2812 - mae: 0.8145 - val_loss: 1.2598 - val_mae: 0.8142 - lr: 1.0000e-06 - 366ms/epoch - 3ms/step
    Epoch 50/50
    111/111 - 0s - loss: 1.3030 - mae: 0.8203 - val_loss: 1.2604 - val_mae: 0.8143 - lr: 1.0000e-06 - 365ms/epoch - 3ms/step
    33/33 [==============================] - 0s 1ms/step - loss: 1.1542 - mae: 0.7584
    test_loss=1.154
    test_mae=0.758
    2023-11-19 18:07:57.480814: I tensorflow/core/grappler/devices.cc:66] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
    2023-11-19 18:07:57.481315: I tensorflow/core/grappler/clusters/single_machine.cc:358] Starting new session
    2023-11-19 18:07:57.560110: I tensorflow/core/grappler/devices.cc:66] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
    2023-11-19 18:07:57.560380: I tensorflow/core/grappler/clusters/single_machine.cc:358] Starting new session
    2023-11-19 18:07:57.611678: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:354] MLIR V1 optimization pass is not enabled
    saved model to model.eurusd.D1.10.onnx

    24/24 - 0s - loss: 0.6618 - accuracy: 0.6736 - val_loss: 0.8993 - val_accuracy: 0.4759 - lr: 4.1746e-05 - 37ms/epoch - 2ms/step
    Epoch 300/300
    24/24 - 0s - loss: 0.6531 - accuracy: 0.6770 - val_loss: 0.8997 - val_accuracy: 0.4789 - lr: 4.1746e-05 - 39ms/epoch - 2ms/step
    11/11 [==============================] - 0s 682us/step - loss: 0.8997 - accuracy: 0.4789
    test_loss=0.900
    test_accuracy=0.479
    2023-11-19 18:07:19.838160: I tensorflow/core/grappler/devices.cc:66] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
    2023-11-19 18:07:19.838516: I tensorflow/core/grappler/clusters/single_machine.cc:358] Starting new session
    2023-11-19 18:07:19.872285: I tensorflow/core/grappler/devices.cc:66] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
    2023-11-19 18:07:19.872584: I tensorflow/core/grappler/clusters/single_machine.cc:358] Starting new session
    saved model to model.eurusd.D1.63.onnx

Next, I recompiled the original ONNX.Price.Prediction.2M.D1.mq5 to use the new models I had trained.

The backtest results with the same MetaQuotes-Demo account were very different from the original ones, and they don't look good.

I would really appreciate knowing what has gone wrong.

Many thanks.


New article An example of how to ensemble ONNX models in MQL5 has been published:

ONNX (Open Neural Network eXchange) is an open format built to represent neural networks. In this article, we will show how to use two ONNX models in one Expert Advisor simultaneously.

For stable trading, it is usually recommended to diversify both the traded instruments and the trading strategies. The same applies to machine learning models: it is easier to create several simpler models than one complex one. But it can be difficult to assemble these models into one ONNX model.

However, it is possible to combine several trained ONNX models in one MQL5 program. In this article, we will consider one of the ensembles called the voting classifier. We will show you how easy it is to implement such an ensemble.
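As a rough illustration of the voting idea, here is a minimal Python sketch of hard (majority) voting over the class labels predicted by several models. It is not the article's MQL5 implementation: the function name `hard_vote`, the integer labels, and the tie rule (return no decision) are all assumptions made for this example.

```python
from collections import Counter
from typing import Optional, Sequence


def hard_vote(predictions: Sequence[int]) -> Optional[int]:
    """Return the class predicted by most models, or None on a tie.

    `predictions` holds one class label per model. The labels are
    hypothetical, e.g. 0 = price down, 1 = no change, 2 = price up.
    """
    if not predictions:
        return None
    counts = Counter(predictions).most_common()
    # A tie for first place means the ensemble has no clear decision.
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


# Two models that agree produce a signal; two that disagree produce none.
print(hard_vote([2, 2]))
print(hard_vote([0, 2]))
```

With only two models, this rule means a trade signal appears only when both models predict the same class. That is one possible design choice; other ensembles weight the votes or average the predicted probabilities instead.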

First model testing results

Now let us test the second model. Here are the second model testing results.

The second model turned out to be much stronger than the first one. The results confirm the theory that weak models need to be ensembled. However, this article was not about the theory of ensembling, but about the practical application.

Author: MetaQuotes