Discussion of article "Neural networks made easy (Part 22): Unsupervised learning of recurrent models"

Hello, could you please help me with the error that says " invalid pointer access in 'NeuroNetPR.mqh' (2512,18)"?

Hello, I have tried that and it still didn't work. The same error has been there since article 8, and I haven't found a way to solve it.

The error points to the `CNet::Save` function, at the line `bool result=layers.Save(handle);`, and highlights the `layers` variable.


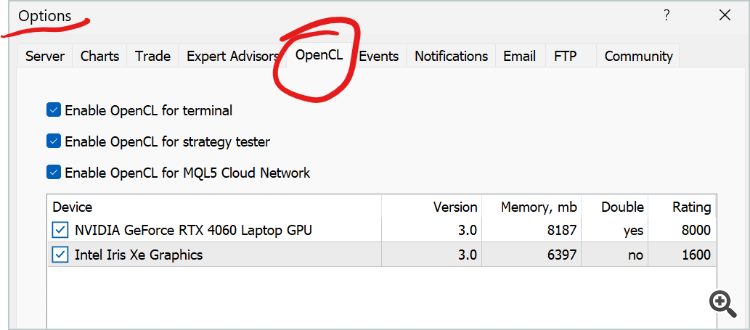



New article Neural networks made easy (Part 22): Unsupervised learning of recurrent models has been published:

We continue to study unsupervised learning algorithms. This time I suggest that we discuss the features of autoencoders when applied to recurrent model training.

The model testing parameters were the same as before: EURUSD, H1, the last 15 years, with default indicator settings. The encoder receives data on the last 10 candles, and the decoder is trained to decode the last 40 candles. Data is fed into the encoder after each new candle has fully formed. The testing results are shown in the chart below.

As you can see in the chart, the test results confirm the viability of this approach for unsupervised pre-training of recurrent models. During test training, the model error nearly stabilized after 20 training epochs, with a loss rate below 9%. Since the decoder restores 40 candles from an input of only 10, the latent state of the model also retains information about at least 30 previous iterations.

Author: Dmitriy Gizlyk