Machine learning in trading: theory, models, practice and algo-trading - page 626


Why? No, I took a surplus of trees... it worked fine with 10 answers (I don't remember exactly how many I set).

500 trees is a lot; that's enough for any dataset.

Why were there such errors on fractional numbers? ... Strange, I expected the machine to be able to learn it exactly... It turns out that forex patterns have no unambiguous form (unlike the multiplication table), so it's already good if you get a correct prediction in 60% of cases.

The errors were because the r parameter was low: only half of the examples were used to train each tree, so each example ended up in only about half of the trees :) and there are few examples to begin with.

To get exact results you have to set r ≈ 1. The parameter is used for pseudo-regularization and for testing on out-of-bag samples.

It's just that this mechanism needs to be tuned; the forest has only 2 settings anyway.
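To make the effect of r concrete, here is a minimal stdlib-only sketch of bagging in which each ensemble member is built on round(r·N) randomly chosen examples. Two loud assumptions: sampling is done without replacement (the forest library's exact scheme may differ), and the base learner is a 1-nearest-neighbour lookup instead of a real decision tree, to keep the code short. The dataset is a toy 9x9 multiplication table, and all function names are hypothetical.

```python
import random

def make_table(n=9):
    # Toy dataset: features (a, b), target a * b.
    return [((a, b), a * b) for a in range(1, n + 1) for b in range(1, n + 1)]

def fit_bags(n_samples, n_trees, r, seed=0):
    # Assumption: each member gets round(r * N) distinct indices (sampling without replacement).
    rng = random.Random(seed)
    k = max(1, round(r * n_samples))
    return [set(rng.sample(range(n_samples), k)) for _ in range(n_trees)]

def member_predict(data, bag, x):
    # Stand-in base learner (NOT a decision tree): 1-nearest neighbour over the member's own samples.
    j = min(bag, key=lambda i: (data[i][0][0] - x[0]) ** 2 + (data[i][0][1] - x[1]) ** 2)
    return data[j][1]

def ensemble_predict(data, bags, x):
    # Regression ensemble output: the average of the members' predictions.
    return sum(member_predict(data, b, x) for b in bags) / len(bags)

def train_mae(data, bags):
    # Mean absolute error of the whole ensemble on the training examples.
    return sum(abs(ensemble_predict(data, bags, x) - y) for x, y in data) / len(data)

def oob_mae(data, bags):
    # Out-of-bag estimate: score each sample only with members that never saw it.
    errors = []
    for i, (x, y) in enumerate(data):
        unseen = [b for b in bags if i not in b]
        if unseen:
            p = sum(member_predict(data, b, x) for b in unseen) / len(unseen)
            errors.append(abs(p - y))
    return sum(errors) / len(errors) if errors else None  # None: no OOB samples (r = 1)

data = make_table()
for r in (0.5, 1.0):
    bags = fit_bags(len(data), n_trees=50, r=r)
    print(r, train_mae(data, bags), oob_mae(data, bags))
```

With r = 1 every member has seen every example, so the training error is exactly zero and nothing is left over for an out-of-bag estimate; with r = 0.5 the training error is no longer zero, which is the behaviour described in the reply.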

You should also understand that a neural network (or RF) is not a calculator; it approximates a function, and for many tasks too high an accuracy does more harm than good.

I'm afraid that regression/prediction with the network will give about the same result as searching history for similar segments/patterns (which I did 3 months ago).


So if you set r to 1, it will be trained on all samples?

Still not on everything: not all features will be used. There is a modification of the model where you can make it use all of them, but that's not really recommended, because the forest will then simply memorize all the variants.

And choosing the settings is often a subjective matter; you have to experiment.
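The point about features can be illustrated with the random-subspace idea: at each split a tree only looks at m randomly chosen features out of n. The helper below is a hypothetical sketch, not the forest library's own code; m = n corresponds to the "use all features" variant, where every split considers the same candidates and the trees become more alike.

```python
import random

def candidate_features(n_features, m, rng):
    # Random-subspace step: pick m of n feature indices to consider at one split.
    if not 1 <= m <= n_features:
        raise ValueError("m must be between 1 and n_features")
    return sorted(rng.sample(range(n_features), m))

rng = random.Random(42)
n = 10
for m in (3, n):
    splits = [candidate_features(n, m, rng) for _ in range(5)]
    distinct = len({tuple(s) for s in splits})
    print(f"m={m}: {distinct} distinct candidate sets over 5 splits")
```

Smaller m makes the individual trees differ more from each other; like r, the value of m is something to tune experimentally.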

If r is less than 1, the model is validated on the remaining samples (it is evaluated on data that did not get into the training sample). The classic choice is r = 0.67, with validation on the remaining 33%. Of course, this only matters for large samples; for small ones it makes no sense, and it's better to set r = 1.
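A quick worked estimate shows why r < 1 gives a built-in validation pool and why it matters little on small samples. As a simplifying assumption, suppose each of T trees draws rN of the N examples without replacement; the values T = 500 and N = 30 below are only illustrative.

$$
\begin{aligned}
P(i \notin \text{bag}_t) &= 1 - \frac{rN}{N} = 1 - r \\
\mathbb{E}[\text{number of trees for which } i \text{ is out-of-bag}] &= T\,(1 - r) = 500 \times 0.33 = 165 \quad (r = 0.67,\ T = 500) \\
\text{out-of-bag examples per tree} &= (1 - r)\,N = 0.33 \times 30 \approx 10 \quad (N = 30)
\end{aligned}
$$

With r = 1 both quantities are zero, so there is no out-of-bag validation at all; with only about ten held-out examples per tree on a small sample, the estimate is too noisy to be worth the lost training data.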


Feature selection

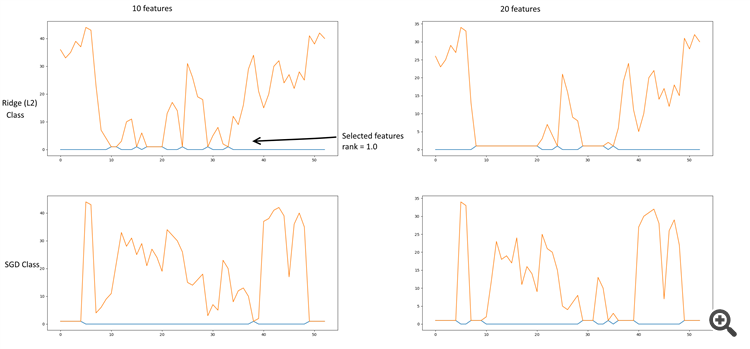

A little bit of data mining. I did feature selection using Chi^2 + KBest, RFE (recursive feature elimination) with SGDClassifier and RidgeClassifier, L2 regularization (Ridge, RidgeClassifier), and L1 (Lasso). Ridge regularization gives the most sensible results.
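For readers unfamiliar with Chi^2 + KBest: each non-negative feature gets a chi-squared score comparing its per-class sums with what the class frequencies alone would predict, and the K highest-scoring features are kept. Below is a dependency-free sketch of that scoring, written to follow the formula scikit-learn's `chi2` uses as I understand it; it is not the script from the post, the function names are hypothetical, and the toy data is purely illustrative.

```python
def chi2_scores(X, y):
    # X: rows of non-negative feature values, y: class labels.
    classes = sorted(set(y))
    n, n_feat = len(X), len(X[0])
    feature_total = [sum(row[j] for row in X) for j in range(n_feat)]
    scores = []
    for j in range(n_feat):
        score = 0.0
        for c in classes:
            # Observed: sum of feature j over class c; expected: class share of the feature total.
            observed = sum(row[j] for row, label in zip(X, y) if label == c)
            expected = (sum(1 for label in y if label == c) / n) * feature_total[j]
            if expected > 0:  # skip empty cells instead of dividing by zero
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores

def select_k_best(scores, k):
    # Indices of the k highest scores, best first.
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]

# Illustrative toy data: feature 0 tracks the class, feature 1 is constant.
X = [[1, 5], [2, 5], [9, 5], [8, 5]]
y = [0, 0, 1, 1]
scores = chi2_scores(X, y)
print(scores, select_k_best(scores, 1))
```

Chi-squared scoring needs non-negative inputs (scikit-learn's `chi2` rejects negative values), so features such as derivatives would have to be rescaled, e.g. min-max, before this step.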

Some graphs:

- RFE + Ridge & SGD

- Ridge regressor (L2)

- Ridge classifier (L2)
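The RFE + Ridge combination can be sketched in a few lines of NumPy: fit a ridge regression on standardized features, drop the feature with the smallest absolute coefficient, and repeat until the requested number remain. This is a simplified sketch (closed-form ridge, one feature removed per step, hypothetical function names, synthetic data), not scikit-learn's `RFE` class and not the script from the post.

```python
import numpy as np

def ridge_coef(X, y, alpha=1.0):
    # Closed-form ridge on standardized X and centred y: (X^T X + alpha*I)^-1 X^T y.
    # Assumes no column has zero variance.
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    yc = y - y.mean()
    n_features = X.shape[1]
    return np.linalg.solve(Xs.T @ Xs + alpha * np.eye(n_features), Xs.T @ yc)

def rfe_ridge(X, y, n_keep, alpha=1.0):
    # Recursive feature elimination: repeatedly drop the weakest remaining feature.
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        coef = ridge_coef(X[:, remaining], y, alpha)
        weakest = int(np.argmin(np.abs(coef)))
        del remaining[weakest]
    return remaining

# Illustrative synthetic data: only columns 0 and 2 drive the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.1 * rng.normal(size=200)
print(rfe_ridge(X, y, n_keep=2))
```

scikit-learn's `RFE` (with `n_features_to_select` and `step`) runs the same loop with any estimator that exposes `coef_` or `feature_importances_`.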

The file contains a table of the parameter values and which of them each feature-selection method selected.

The most significant coefficients turned out to be:

- 10, 11 - Close, Delta (Open-Close)

- 18-20 - Derivative High, Low, Close

- 24 - Log derivative Close

- 29, 30 - Lowess

- 33 - Detrending: Close - Lowess

- 35 - EMA 26 (13 as an option)

- 40 - Derivative EMA 13
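Several features in this list are simple transforms of the price series. The sketch below shows one plausible way to compute the Open-Close delta, first-difference "derivatives", the log derivative of Close, and an EMA. The exact definitions in the original script are not given, so these formulas (for example, an EMA seeded with the first value and alpha = 2/(period + 1)) are assumptions, and the prices are illustrative. Lowess detrending is left out because it needs a smoothing library.

```python
import math

def delta_open_close(opens, closes):
    # Delta (Open - Close) per bar.
    return [o - c for o, c in zip(opens, closes)]

def derivative(series):
    # Discrete derivative as the first difference x[t] - x[t-1].
    return [b - a for a, b in zip(series, series[1:])]

def log_derivative(series):
    # Log derivative: ln(x[t]) - ln(x[t-1]), i.e. the log return.
    return [math.log(b) - math.log(a) for a, b in zip(series, series[1:])]

def ema(series, period):
    # Assumed EMA form: alpha = 2 / (period + 1), seeded with the first value.
    alpha = 2.0 / (period + 1)
    out = [series[0]]
    for x in series[1:]:
        out.append(alpha * x + (1 - alpha) * out[-1])
    return out

# Illustrative prices only.
opens = [1.0995, 1.1000, 1.1010, 1.1005]
closes = [1.1000, 1.1010, 1.1005, 1.1020]
print(delta_open_close(opens, closes))
print(log_derivative(closes))
print(derivative(ema(closes, 13)))  # "Derivative EMA 13"
```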

PS. The Ridge Classifier row in the table is based on a single class; it does not reflect how the parameters depend on the other classes. Link to the script.

Sketched a new network diagram; this is the first description. There will be more later (hopefully).

https://rationatrix.blogspot.ru/2018/01/blog-post.html


And I wonder why the first post was deleted. He just posted the diagram on his blog. =)