Machine learning in trading: theory, models, practice and algo-trading - page 192


The whole world had been waiting for this moment with bated breath.

And now it has finally happened!

Release 12 of jPrediction, which generates the code of trained ternary classifiers in MQL, is out. MetaTrader users no longer need to port ternary classifier code from Java to MQL: all the generated MQL code is now saved in files with the .mqh extension.

(Loud applause and shouts of "Hurray!")

But that's not all. jPrediction 12 is now about 12% faster than the previous version!

(Loud applause while tossing caps in the air)

jPrediction's numerous users can download version 12 from my website and use it for free (the link is in my profile, in the first post on my home page).

(Clattering of keys and download progress bars moving on monitors)

Congratulations are accepted in verbal and written form, as well as gifts and money transfers via WebMoney.

I'm embarrassed to ask: for which version of MQL, 4 or 5???

I tested it on MQL5. However, the code uses no OOP or other features specific to MQL5, so it should presumably be compatible with MQL4 as well. I can't say for sure, though, because I haven't tested it on MQL4.

Well, great: I just compared the code that jPrediction generates with the code I wrote by hand, and the result is the same. I was worried that I had made a mistake somewhere, as happened with 1d, if you remember; now I've checked everything and the results are identical. Today's buy-signal selection was so bad that I decided to keep yesterday's, and I didn't lose. I also optimized with release 12 and got better results, but fewer entries... only three. So overall it's OK, but I'll need to look at it in more detail tomorrow and will report more specifically then. So, here is today's oil chart... Judge for yourself... Can't complain. And let me explain once more about the signals the network classified as "I don't know" (points without arrows): we check them against what actually happened. For today they are correct. That is, when the network says "I don't know", we take it that this is true...

But, my God, what a bore

To sit with a sick man day and night,

Never taking a single step away!

What base deceit

To amuse a half-dead man,

To adjust his pillows,

To sadly bring him his medicine,

To sigh and think to oneself:

When will the devil take you!)))

I found a package that lets you take a deeper look at ML algorithms. I don't understand it at all myself, but something tells me the package is good; maybe someone will be interested...

partykit package

The 14th version of jPrediction is out.

The new version has an improved algorithm for identifying and removing insignificant predictors from models.

You can download jPrediction 14 from my website and use it for classification tasks for free (the link is in my profile, in the topmost post on my home page).

The examples (indicators) are taken purely for illustration; I strongly recommend not using indicators.

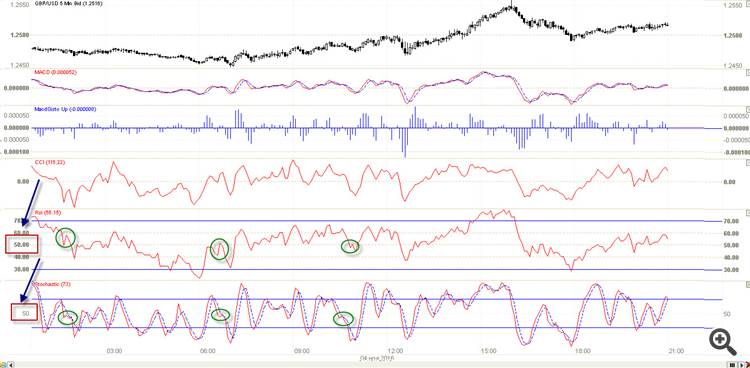

Let's imagine the following situation: we have 5 predictors and the price, and we need to predict the price movement with high probability (say, over 70%). We know beforehand that there is only one pattern in these predictors that can predict the market with such accuracy: when RSI and stochastic make a mini zigzag down in the ~50 area.


By the way, notice that the pattern lies, so to speak, in two planes of perception: numeric (the pattern is in the ~50 area) and figurative (the zigzag, i.e. an image). So when searching for patterns, it makes sense to consider both planes...

And that's all: there are no other working patterns in these predictors, and everything else is just noise. The first three indicators are pure noise from the start, and RSI and stochastic contain only one pattern; everything else in RSI and stochastic is also total noise...

Now let's think about how we can look for such patterns in the data... Can regular ML models do it?

The answer is no. Why?

Because the ML target is aimed at predicting all movements, whether it is a zigzag, a trace color, a candlestick, a direction, or something else. Every such target forces the model to explain all price movements, and that is impossible when the predictors are about 99% noise...

I'll tell a little story with a moral. I created a synthetic sample of 20 predictors: 4 predictors, interacting together, completely explained the target, and the remaining 16 were just random noise. After training, on new "OOS" data the model guessed all the new values; it showed 0% error... The moral of this fable: if the data contain predictors that can completely explain the target, then the ML model will learn it and OOS will behave normally... Our results show the opposite: the samples we feed into ML contain no more than 5% useful information, which can explain about 5% of the target, yet we want to explain 100% of it. Do you see how utopian this approach is? That's why ML models overfit: we make them overfit ourselves by wanting to predict all 100%.
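A minimal Python sketch of the experiment's setup, under stated assumptions: the post does not say how the four predictors interact, so the XOR (parity) of their signs is assumed here, and the lookup table built by the hypothetical `fit_lookup` is only a stand-in for a trained model that is simply told which four columns matter. It illustrates the moral, not the author's actual code: when the predictors fully determine the target, out-of-sample error can drop to zero.

```python
import random

N_PREDICTORS = 20   # 4 informative + 16 noise, as in the post
N_INFORMATIVE = 4

def make_sample(n_rows, seed):
    """Build a synthetic sample whose target depends only on predictors 0..3.

    Assumption (not from the post): the target is the XOR (parity) of the
    signs of the first four predictors; the other 16 columns are uniform noise.
    """
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n_rows):
        row = [rng.uniform(-1.0, 1.0) for _ in range(N_PREDICTORS)]
        X.append(row)
        y.append(sum(row[i] > 0 for i in range(N_INFORMATIVE)) % 2)
    return X, y

def sign_key(row):
    """Sign pattern of the informative predictors (16 possible patterns)."""
    return tuple(row[i] > 0 for i in range(N_INFORMATIVE))

def fit_lookup(X, y):
    """Hypothetical stand-in 'model': majority label per sign pattern seen in training."""
    votes = {}
    for row, label in zip(X, y):
        counts = votes.setdefault(sign_key(row), [0, 0])
        counts[label] += 1
    return {key: int(c[1] > c[0]) for key, c in votes.items()}

def error_rate(model, X, y):
    """Fraction of rows whose predicted label differs from the true one."""
    wrong = sum(model.get(sign_key(row), 0) != label for row, label in zip(X, y))
    return wrong / len(y)

X_train, y_train = make_sample(2000, seed=1)
X_oos, y_oos = make_sample(1000, seed=2)  # fresh, unseen "OOS" rows
model = fit_lookup(X_train, y_train)
print("OOS error:", error_rate(model, X_oos, y_oos))
```

A real learner would also have to discover which 4 of the 20 columns matter; the sketch skips that step on purpose and only shows that the target itself is fully explainable.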

So, back to the main point: how do we look for these working patterns? How do we find this "needle" of robustness in the "haystack" of data?

All we need is to break each predictor into small pieces of similar situations (patterns), try all possible combinations of them, and compare each combination with the target; that way we'll find what we're looking for... How do we break up the predictors? With what?

The answer is simple, even though I didn't realize it right away: we just need to cluster each predictor into, say, 30 clusters.
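One way to cluster a single predictor is one-dimensional k-means. The sketch below is a minimal Python version of Lloyd's algorithm under my own assumptions (the post does not say which clustering method is used): `kmeans_1d` is a hypothetical name, centers start at evenly spaced quantiles, and clusters are renumbered so that label 0 has the lowest center.

```python
def kmeans_1d(values, k=30, iters=50):
    """Cluster one predictor's values into at most k groups (Lloyd's algorithm in 1-D).

    Returns (labels, centers): labels[i] is the cluster index of values[i],
    clusters are numbered in increasing order of their center.
    """
    xs = sorted(values)
    n = len(xs)
    k = min(k, len(set(xs)))  # cannot have more clusters than distinct values
    # Initialise centers at evenly spaced quantiles of the data.
    centers = [xs[min(n - 1, int((j + 0.5) * n / k))] for j in range(k)]
    for _ in range(iters):
        # Assignment step: each value goes to its nearest center.
        groups = [[] for _ in range(k)]
        for v in values:
            j = min(range(k), key=lambda c: abs(v - centers[c]))
            groups[j].append(v)
        # Update step: move each center to the mean of its members (keep it if empty).
        new_centers = [sum(g) / len(g) if g else centers[j] for j, g in enumerate(groups)]
        if new_centers == centers:
            break
        centers = new_centers
    # Renumber clusters so that label 0 is the cluster with the lowest center.
    order = sorted(range(k), key=lambda c: centers[c])
    rank = {c: r for r, c in enumerate(order)}
    labels = [rank[min(range(k), key=lambda c: abs(v - centers[c]))] for v in values]
    return labels, [centers[c] for c in order]
```

Applied to each predictor column separately, this turns every row into a tuple of cluster numbers, for example `kmeans_1d(rsi_values, k=30)` for a hypothetical list `rsi_values`. Renumbering by center keeps neighbouring clusters adjacent in value, which makes the labels easier to read.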

Now the essence of how to search for working patterns

This is our hypothetical sample; target.label is the target, which, let's say, means growth/decline.

An example of how to look for growth

We look for rows of clusters that are repeated at least 10 times in the whole sample, and in each group of identical rows found, the share of "1" in target.label should exceed 70%.
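A minimal Python sketch of this search, assuming the predictors have already been clustered (one integer cluster label per predictor per row) and reading "trying all possible combinations" as checking every subset of `subset_size` predictors. The function `find_patterns` and its parameters are hypothetical; the thresholds default to the post's 10 repetitions and 70%.

```python
from collections import defaultdict
from itertools import combinations

def find_patterns(cluster_rows, target, subset_size=2, min_count=10, min_share=0.7):
    """Search for cluster combinations after which target == 1 dominates.

    cluster_rows: list of tuples, one cluster label per predictor.
    target: list of 0/1 labels aligned with cluster_rows (1 = growth, say).
    Returns (predictor_indices, cluster_labels, count, share_of_ones) tuples.
    """
    n_pred = len(cluster_rows[0])
    found = []
    for preds in combinations(range(n_pred), subset_size):
        # key = cluster labels on this predictor subset -> [rows seen, ones seen]
        stats = defaultdict(lambda: [0, 0])
        for row, y in zip(cluster_rows, target):
            key = tuple(row[p] for p in preds)
            stats[key][0] += 1
            stats[key][1] += y
        for key, (n, ones) in stats.items():
            share = ones / n
            # Keep only groups that repeat often enough and are mostly "1".
            if n >= min_count and share > min_share:
                found.append((preds, key, n, share))
    return found
```

A hit such as `((0, 1), (1, 2), 12, 1.0)` reads: whenever predictor 0 was in cluster 1 and predictor 1 was in cluster 2 (12 rows), the target was 1 every time. Because many combinations are tested, some groups can pass the thresholds by chance, so hits are worth re-checking on data not used in the search.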

I'm not quite sure what you mean by "clustering". Usually one doesn't cluster a single predictor; on the contrary, one takes a dozen of them and finds regions in space where the points group together. For example, in the picture below, with two predictors, clustering into two clusters would give exactly the blue and red clusters.

Maybe you are talking about patterns? The green pattern: the price goes down and then up. Yellow: the price goes up from the bottom. Red: up, then down. Got it?
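For contrast with clustering each predictor on its own, here is a minimal Python sketch of the usual multivariate clustering described in this reply: plain k-means (Lloyd's algorithm) on points whose coordinates are several predictors at once. The function name `kmeans` is hypothetical, and initialising with the first k points is a simplification chosen to keep the example deterministic.

```python
import math

def kmeans(points, k, iters=100):
    """Plain k-means on points given as equal-length tuples (one coordinate per predictor).

    Initialisation uses the first k points, a simplistic choice: if those points
    fall in the same group the result can be poor (k-means++ is the usual fix).
    Returns (labels, centers).
    """
    centers = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center by Euclidean distance.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(centers[c])  # keep an empty cluster where it was
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers
```

With two predictors per point and `k=2`, two well-separated groups of points come out as two clusters, which is the picture the reply describes.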