This article is interesting. I had never even tried to look into neural networks before, believing them to be a "dark forest"; everything I had read on the topic put me off with its abundance of incomprehensible words. In this article, though, the theory really is quite simple and understandable. Thank you to the author for that!

I would also like to clarify this phrase: "Both EAs showed similar results with a hit rate of just over 6%." Do I understand correctly that after the first training pass the neural network's forecast was correct only about 6% of the time?

And after 35 epochs of training, only 12%?

Such a low result does not motivate one to study the topic further.

What are the methods to improve the accuracy of prediction?

Good afternoon, Dimitri.

The topic is very interesting and necessary. Thank you for these articles).

1. I have a question about the code of the Traine(... ) method:

    TempData.Clear();
    bool sell=(High.GetData(i+2)<High.GetData(i+1) && High.GetData(i)<High.GetData(i+1));
    // the line below most likely detects the Low fractal incorrectly
    bool buy=(Low.GetData(i+2)<Low.GetData(i+1) && Low.GetData(i)<Low.GetData(i+1));
    // the comparison signs need to be reversed
    buy=(Low.GetData(i+2)>Low.GetData(i+1) && Low.GetData(i)>Low.GetData(i+1));

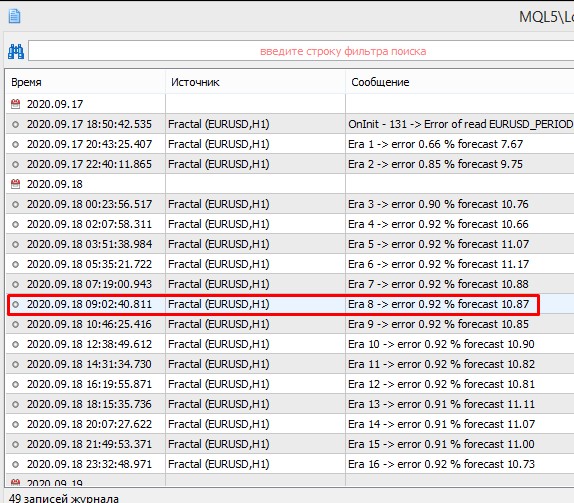

When I ran the modified version on training the results improved:

Already at epoch 8 the accuracy was 16.98%, against 10.87% for the old variant at the same epoch.

2. Why does the multilayer neural network have such a low accuracy, not even reaching 50%?
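
The fix from point 1 can be illustrated with a minimal Python sketch (not the article's MQL5 code). It assumes plain lists of prices indexed the same way as in the snippet above, with bar `i+1` as the candidate fractal; the function names and the sample prices are hypothetical.

```python
def is_upper_fractal(high, i):
    """Bar i+1 is a local maximum among bars i, i+1, i+2 (the 'sell' condition)."""
    return high[i + 2] < high[i + 1] and high[i] < high[i + 1]


def is_lower_fractal(low, i):
    """Bar i+1 is a local minimum among bars i, i+1, i+2 (the corrected 'buy' condition)."""
    return low[i + 2] > low[i + 1] and low[i] > low[i + 1]


def is_lower_fractal_buggy(low, i):
    """Copy-pasted variant: keeps the '<' signs of the High check, so it looks for a peak in Low."""
    return low[i + 2] < low[i + 1] and low[i] < low[i + 1]


# Hypothetical three-bar window with a clear trough in the middle bar.
lows = [1.1010, 1.1000, 1.1020]
corrected = is_lower_fractal(lows, 0)       # trough is detected
buggy = is_lower_fractal_buggy(lows, 0)     # trough is missed
```

With the reversed signs the trough is labeled as a buy target; with the copy-pasted signs it is not, so the network would be trained on wrong buy labels.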

Thanks for the comment, Alexander. That was an unfortunate copy-paste error.

As I wrote in the first article, I took random indicators from the standard set with standard parameters. A neural network is a good tool, but not something supernatural. It finds patterns where they exist, but if there are no patterns in the raw data, it won't invent them on its own. Hitting a fractal exactly is quite a difficult task and, to be honest, I didn't expect to get an exact hit. But what I got gives grounds for further work.

It happens)

Makes sense).

Thanks, your work is great in any case )

Dmitry, good afternoon!

A very interesting series of articles about neural networks. At the moment I am experimenting with different sets of indicators and tasks for the network. I decided to have the network estimate the probability that the next bar will have either a High at least 100 points above the Open of the current bar, or a Low at least 100 points below the Open of the current bar.

    if(add_loop && i<(int)(bars-MathMax(HistoryBars,0)-1) && i>1 && Time.GetData(i)>dtStudied && dPrevSignal!=-2)
      {
       TempData.Clear();
       double DiffMin=100;
       double DiffLow=Open.GetData(i+1)-Low.GetData(i);
       double DiffHigh=High.GetData(i)-Open.GetData(i+1);
       bool sell=(DiffLow>=DiffMin);
       bool buy=(DiffHigh>=DiffMin);
       TempData.Add((double)buy);
       TempData.Add((double)sell);
       TempData.Add((double)(!buy && !sell));
       Net.backProp(TempData);
       ...
      }

When testing the Expert Advisor, the labels of predicted fractals are displayed on the chart, but the statistics on correctly predicted and missed fractals are not updated and always stay at 0.00%. Can you point me to the error I made?

    if(DoubleToSignal(dPrevSignal)!=Undefine)
      {
       if(DoubleToSignal(dPrevSignal)==DoubleToSignal(TempData.At(0)))
          dForecast+=(100-dForecast)/Net.recentAverageSmoothingFactor;
       else
          dForecast-=dForecast/Net.recentAverageSmoothingFactor;
       dUndefine-=dUndefine/Net.recentAverageSmoothingFactor;
      }
    else
      {
       if(sell || buy)
          dUndefine+=(100-dUndefine)/Net.recentAverageSmoothingFactor;
      }
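
To see why both statistics can stay at 0.00%, the update logic above can be mirrored in a small Python sketch. This is only an approximation under stated assumptions: signals are represented as the strings `"buy"`, `"sell"` and `"undefine"` instead of going through `DoubleToSignal`, and `smoothing` stands in for `Net.recentAverageSmoothingFactor`, whose actual value is not given here.

```python
def update_stats(forecast, undefine, predicted, actual, smoothing):
    """Return the updated (forecast %, undefine %) pair after one bar.

    predicted: signal the network gave on the previous step
    actual:    signal derived from the training targets for this bar
    smoothing: assumed stand-in for Net.recentAverageSmoothingFactor
    """
    if predicted != "undefine":
        if predicted == actual:
            # Correct forecast: move the hit rate towards 100%.
            forecast += (100.0 - forecast) / smoothing
        else:
            # Wrong forecast: decay the hit rate towards 0%.
            forecast -= forecast / smoothing
        undefine -= undefine / smoothing
    elif actual in ("buy", "sell"):
        # The network gave no signal, but a target was present: count a miss.
        undefine += (100.0 - undefine) / smoothing
    return forecast, undefine


# If the targets never contain "buy" or "sell", nothing can ever increase.
stuck = (0.0, 0.0)
for predicted in ("buy", "sell", "undefine"):
    stuck = update_stats(stuck[0], stuck[1], predicted, "undefine", smoothing=10.0)
```

Both counters only grow when a `buy` or `sell` target actually occurs, so targets that are always false keep them at zero regardless of what the network predicts.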

Good afternoon,

you specified DiffMin=100 which, as far as I understand, is in points, while the difference is calculated in price terms. For example, for EURUSD it would be calculated as 1.16715-1.15615=0.011. As a result, the values are not comparable, and sell and buy will always be false.
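
The unit mismatch can be shown with a short Python sketch. The point size of 0.00001 is an assumption (a 5-digit EURUSD quote); in MQL5 the predefined `_Point` variable holds this value for the current symbol. The helper name `points_to_price` is hypothetical.

```python
def points_to_price(points, point_size):
    """Convert a distance in points into a price difference."""
    return points * point_size


POINT_SIZE = 0.00001                 # assumed point size for a 5-digit EURUSD quote
open_price, high_price = 1.15615, 1.16715
diff_high = high_price - open_price  # about 0.011, a price difference

# Comparing a price difference against a raw point count never succeeds.
buy_unscaled = diff_high >= 100

# Converting the threshold into price units makes the comparison meaningful.
diff_min = points_to_price(100, POINT_SIZE)   # about 0.001
buy_scaled = diff_high >= diff_min
```

The same fix in the EA would be to multiply the threshold by the symbol's point size (or divide the price difference by it) before comparing.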

I have a question: why the hell study a whole series of mega-abstruse articles if this neural network has negligible accuracy? I think the topic should either be discontinued or the EA should be improved.

I would like to add that my neural network is much more "complex" than yours, but it is guaranteed to give 70-80% correct entries, and at the same time it is much simpler in structure ...

and I would add that there are a lot of other neural networks with much better accuracy than yours.

In general, I have the impression that you are paid money for your articles, but they are of no use ... I'm sorry.

I also do not agree with the title "Neural networks are easy", those who are engaged in machine learning on big data know - it is not easy ... :-)

>To evaluate the performance of the network you can use the mean square error of prediction, the percentage of fractals correctly predicted, the percentage of missed fractals.

I don't agree with this at all. The only result that matters is the final balance and net profit, and nothing else at all. It's not science for science's sake, it's science for profit.

I'll even tell you why: there are Expert Advisors with 60% accuracy ... but thanks to a clever system they give more profit than advisors with 80% accuracy ...
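
The claim that a less accurate system can earn more comes down to expectancy per trade. A minimal Python sketch with purely hypothetical win and loss sizes (not taken from any EA in this thread):

```python
def expectancy(win_rate, avg_win, avg_loss):
    """Average result per trade: wins weighted by win_rate, losses by (1 - win_rate)."""
    return win_rate * avg_win - (1.0 - win_rate) * avg_loss


# Hypothetical system A: 60% accuracy, wins twice the size of losses.
system_a = expectancy(0.60, avg_win=2.0, avg_loss=1.0)

# Hypothetical system B: 80% accuracy, small wins and larger losses.
system_b = expectancy(0.80, avg_win=0.5, avg_loss=1.5)
```

With these made-up numbers system A averages about 0.8 units per trade and system B about 0.1, so accuracy alone does not determine profit.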

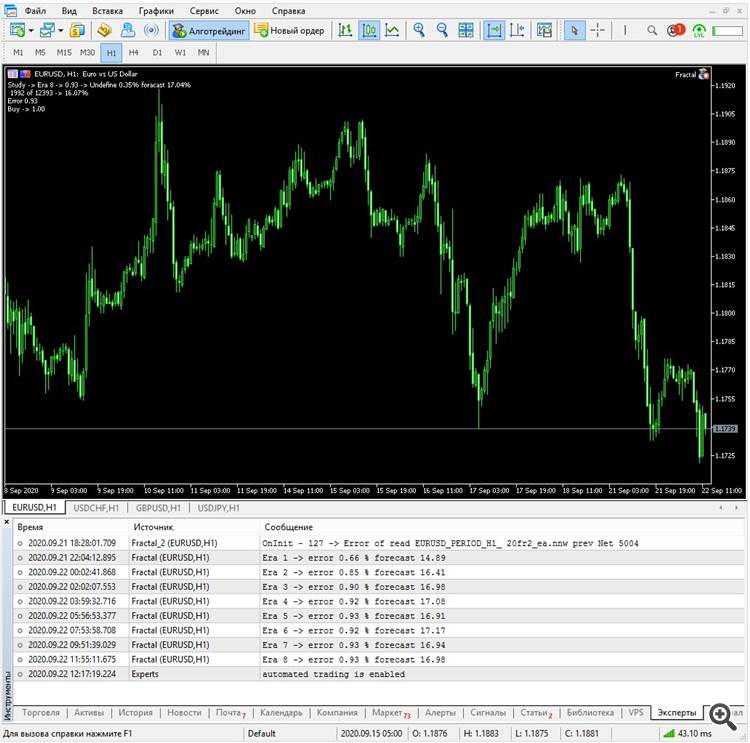

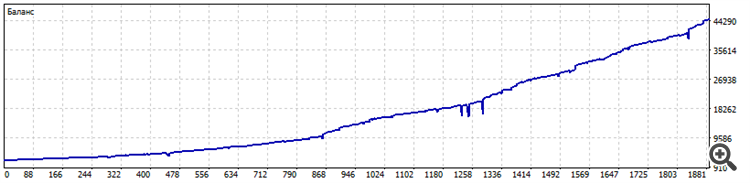

And you should start with the chart of your EA's final trading statement, otherwise there is no point in reading. I stop reading immediately if there is no statement or if it does not meet my requirements. Here is the test of my not very smart neural network.

Then you don't need the Articles section.

For your requirements (a profitable statement), the CodeBase and Market sections are suitable.

yes, by the way, even your message does not fit your requirements, so it can be ignored too? )))

You do need articles: you need them to learn how to properly test an EA based on a neural network. There is a high probability that your statement comes from the tester, and not only from MT4; you may also not have divided the sample into train/test/validation sets.

You also need articles to learn how to write well-structured, readable code. I think the author's code in the article meets these requirements perfectly.
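
The train/test/validation remark above can be sketched in Python as a chronological split, which is one common way to divide price history so that later bars never leak into training. The function name and the 60/20/20 proportions are assumptions for illustration only.

```python
def chronological_split(bars, train_frac=0.6, val_frac=0.2):
    """Split a time-ordered sequence into train, validation and test parts.

    The order is preserved (no shuffling), so the validation and test
    parts always lie in the future relative to the training part.
    """
    n = len(bars)
    train_end = round(n * train_frac)
    val_end = train_end + round(n * val_frac)
    return bars[:train_end], bars[train_end:val_end], bars[val_end:]


# Hypothetical history of 10 bars, oldest first.
history = list(range(10))
train, validation, test = chronological_split(history)
```

A statement produced only on the bars used for training says little about how the EA behaves on unseen data, which is the point being made above.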

New article Neural networks made easy (Part 2): Network training and testing has been published:

In this second article, we will continue to study neural networks and will consider an example of using our created CNet class in Expert Advisors. We will work with two neural network models, which show similar results both in terms of training time and prediction accuracy.

The first epoch is strongly dependent on the weights of the neural network that were randomly selected at the initial stage.

After 35 epochs of training, the difference in statistics increased slightly - the regression neural network model performed better:

Testing results show that both neural network organization variants generate similar results in terms of training time and prediction accuracy. At the same time, the obtained results show that the neural network needs additional time and resources for training. If you wish to analyze the neural network learning dynamics, please check out the screenshots of each learning epoch in the attachment.

Author: Dmitriy Gizlyk