1 - Thank you for the article. It caught my interest, and as soon as I have a free day I will dig deeper into the topic.

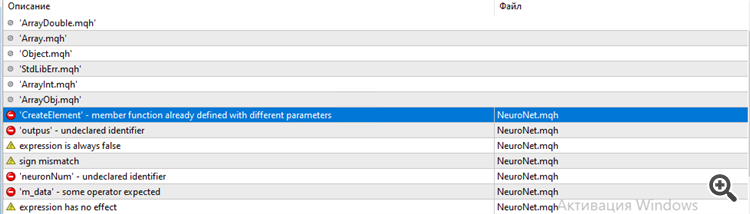

2 - A remark: the code does not compile. Apparently, you wrote the examples by simplifying your own code but did not check the resulting examples in practice. I think the errors come down to typos, at least the ones I noticed. I haven't finished reading the article yet. Also, from a programming point of view, in the methods that create new neurons and other objects you do not delete the old ones when the index does not exceed the previous value, so you end up with potential zombie objects...
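The leak described above happens when a layer is rebuilt and the old neurons are simply overwritten. In MQL5, every object created with `new` must eventually be released with `delete`. Below is a minimal sketch in C++ (whose syntax MQL5 closely follows) of one way to avoid the problem: the layer owns its neurons through `std::unique_ptr`, so rebuilding it destroys the old ones automatically. The `Neuron` and `Layer` types are hypothetical, not the article's classes, and `live_count` exists only to make leaks visible.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical neuron type. live_count tracks how many instances exist,
// which makes leaked ("zombie") objects easy to detect.
struct Neuron {
    static inline int live_count = 0;  // C++17 inline static member
    double output = 0.0;
    Neuron() { ++live_count; }
    ~Neuron() { --live_count; }
    Neuron(const Neuron&) = delete;
    Neuron& operator=(const Neuron&) = delete;
};

// Hypothetical layer that owns its neurons through unique_ptr, so every
// neuron it drops is destroyed instead of being left behind in memory.
class Layer {
public:
    // Rebuild the layer with n fresh neurons. clear() destroys all old
    // neurons first, whether n is larger or smaller than the previous size.
    void create(std::size_t n) {
        neurons_.clear();
        neurons_.reserve(n);
        for (std::size_t i = 0; i < n; ++i)
            neurons_.push_back(std::make_unique<Neuron>());
    }

    Neuron& at(std::size_t i) {
        assert(i < neurons_.size());  // guard against out-of-range access
        return *neurons_[i];
    }

    std::size_t size() const { return neurons_.size(); }

private:
    std::vector<std::unique_ptr<Neuron>> neurons_;
};
```

Calling `create(10)` and then `create(4)` leaves exactly four live neurons. In MQL5 the equivalent is an explicit `delete` of the old objects (or a container that owns and frees them) before reallocating.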

3 - A question: the network weights are not supposed to sum to one, right?

2 - The implemented network will work very slowly, because everything is implemented through objects... At the very least, the weights should be stored in simple arrays. You can also see that the network design has flaws from the OOP point of view; the problem is in the design itself, and that is why there are so many objects.
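A minimal sketch of the array-based layout suggested here, again in C++ and under stated assumptions: a hypothetical fully connected `DenseLayer` keeps all of its weights (plus one bias per neuron) in a single contiguous `std::vector<double>` instead of one object per connection, and uses tanh as the activation. It is not the article's implementation, and whether it is actually faster in MQL5 is disputed further down in the thread.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Fully connected layer whose weights live in one contiguous array.
// Row j holds the n_in weights of output neuron j followed by its bias,
// so the layout is weights[j * (n_in + 1) + i].
struct DenseLayer {
    std::size_t n_in, n_out;
    std::vector<double> weights;  // n_out * (n_in + 1) values

    DenseLayer(std::size_t in, std::size_t out)
        : n_in(in), n_out(out), weights(out * (in + 1), 0.0) {}

    double& w(std::size_t j, std::size_t i) { return weights[j * (n_in + 1) + i]; }
    double& bias(std::size_t j) { return weights[j * (n_in + 1) + n_in]; }

    // Forward pass: y_j = tanh(bias_j + sum_i w_ji * x_i).
    std::vector<double> forward(const std::vector<double>& x) const {
        assert(x.size() == n_in);
        std::vector<double> y(n_out);
        for (std::size_t j = 0; j < n_out; ++j) {
            const double* row = &weights[j * (n_in + 1)];
            double sum = row[n_in];  // start from the bias
            for (std::size_t i = 0; i < n_in; ++i)
                sum += row[i] * x[i];
            y[j] = std::tanh(sum);
        }
        return y;
    }
};
```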

3 - The network weights can take any values and are not constrained by anything; weights close to zero reduce the influence of their inputs...
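To illustrate the point about weights, here is a hypothetical single-neuron weighted sum in C++. The weights are arbitrary real numbers that need not sum to one, and a weight of exactly zero removes its input's influence entirely (a weight near zero merely shrinks it).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Weighted input of a single neuron: bias + sum_i w_i * x_i.
// Nothing requires the weights to sum to one; any real values are allowed.
double weighted_sum(const std::vector<double>& x,
                    const std::vector<double>& w, double bias) {
    assert(x.size() == w.size());
    double s = bias;
    for (std::size_t i = 0; i < x.size(); ++i)
        s += w[i] * x[i];
    return s;
}
```

For instance, weights {2.0, -3.0} sum to -1, which is perfectly valid, and the result is then passed through the activation function.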

You must have confused this with the output value of a neuron after the sigmoid function. In that case, yes, the output of every neuron in the network lies between 0 and 1, but the article uses the hyperbolic tangent (tanh) as the neuron's activation function, and its output lies between -1 and 1.
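The two activation functions can be compared directly. A short C++ sketch (the function names are illustrative): the logistic sigmoid maps any input into (0, 1), while tanh maps it into (-1, 1). In fact tanh is a rescaled sigmoid: tanh(x) = 2*sigmoid(2x) - 1.

```cpp
#include <cassert>
#include <cmath>

// Logistic sigmoid: maps any real number into the open interval (0, 1).
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Hyperbolic tangent: maps any real number into (-1, 1).
// Identity: tanh(x) == 2 * sigmoid(2 * x) - 1.
double tanh_activation(double x) { return std::tanh(x); }
```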

Another big disadvantage of this article is that not a word is said about data preparation, normalisation, etc. This is very important, because a neural network does not work properly with raw, unprocessed data.
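As an example of the kind of preparation meant here, a minimal min-max scaling sketch in C++, assuming the network uses tanh so inputs are mapped into [-1, 1]. `MinMaxScaler` is a hypothetical helper, and z-score standardisation is a common alternative. The min and max are fitted on training data and then reused unchanged, so new values outside the training range will land outside [-1, 1].

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Min-max scaling of one input feature into [-1, 1] (the output range of tanh).
struct MinMaxScaler {
    double lo = 0.0, hi = 0.0;

    // Learn the range from the training window only.
    void fit(const std::vector<double>& data) {
        assert(!data.empty());
        auto [mn, mx] = std::minmax_element(data.begin(), data.end());
        lo = *mn;
        hi = *mx;
    }

    // Map v linearly so that lo -> -1 and hi -> 1.
    double transform(double v) const {
        if (hi == lo) return 0.0;  // constant feature: map to the midpoint
        return 2.0 * (v - lo) / (hi - lo) - 1.0;
    }
};
```

For example, fitting on the prices {1.10, 1.20, 1.30} maps 1.10 to -1, 1.20 to approximately 0 and 1.30 to 1.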

Daniil Kurmyshev:

2 - The implemented network will work very slowly, because everything is implemented through objects... At the very least, the weights should be stored in simple arrays. You can also see that the network design has flaws from the OOP point of view; the problem is in the design itself, and that is why there are so many objects.

There will be no loss of performance. I have tested it, and you can also look at the posts by the administrator Renat, who showed that OOP in MQL5 works as fast as the procedural style.

As for the article, the presentation is good, but the material has already been covered many times in articles on this resource; still, it may be useful.

"If we want to feed the input of a neural network with some data array of 10 elements, then the input layer of the network should contain 10 neurons. This will allow the entire data set to be accepted. The redundant input neurons will just be ballast."

No, it shouldn't; one may be enough.

"The number of hidden layers is determined by the causal relationship between the input data and the expected output. For example, if we are building our model in relation to the "5 why" technique, it is logical to use 4 hidden layers, which in sum with the output layer will give us the opportunity to put 5 questions to the original data."

What is the "5 whys" technique, and what do 4 layers have to do with it? I looked it up: a simple decision tree answers this question. The universal approximator is a 2-layer NN, which will answer any number of "whys" :) Additional layers are mainly used for data preprocessing in complex architectures, for example, compressing an image from a large number of pixels and then recognising it.
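A small illustration of what a single hidden layer can represent: XOR cannot be computed by one neuron, but a network with one hidden layer of two neurons computes it. The C++ sketch below uses hand-picked weights and a step activation (instead of tanh) so the outputs are exact; it is not trained, and it only illustrates the idea behind the universal approximation result rather than proving it.

```cpp
#include <cassert>

// Threshold (step) activation, used here instead of tanh so outputs are exactly 0 or 1.
int step(double x) { return x > 0.0 ? 1 : 0; }

// 2 inputs -> hidden layer of 2 neurons -> 1 output neuron.
// Hand-picked weights (not trained):
//   h1 fires when at least one input is 1 (OR),
//   h2 fires only when both inputs are 1 (AND),
//   the output fires when h1 is on and h2 is off, which is XOR.
int xor_net(int a, int b) {
    assert((a == 0 || a == 1) && (b == 0 || b == 1));
    int h1 = step(1.0 * a + 1.0 * b - 0.5);
    int h2 = step(1.0 * a + 1.0 * b - 1.5);
    return step(1.0 * h1 - 2.0 * h2 - 0.5);
}
```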

"Since the output of a neuron is a logical result, the questions posed to the neural network should assume an unambiguous answer."

If the output is not a simple adder, the answer is assumed to be probabilistic.

I was afraid to look at the code, since there are plenty of high-quality implementations on the Internet :)

New article Neural Networks Made Easy has been published:

Artificial intelligence is often associated with something fantastically complex and incomprehensible. At the same time, artificial intelligence is increasingly mentioned in everyday life. News about achievements related to the use of neural networks often appears in various media. The purpose of this article is to show that anyone can easily create a neural network and use AI achievements in trading.

The following neural network definition is provided in Wikipedia:

Artificial neural networks (ANN) are computing systems vaguely inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain.

That is, a neural network is an entity consisting of artificial neurons with organized connections between them. These connections are similar to those in a biological brain.

The figure below shows a simple neural network diagram. Here, circles indicate neurons and lines visualize connections between neurons. Neurons are arranged in layers, which fall into three groups. Blue indicates the layer of input neurons, which receive the source information. Green and blue are output neurons, which output the result of the neural network's operation. Between them are gray neurons forming a hidden layer.
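The lines in such a diagram correspond to the network's weights. Assuming every neuron is connected to every neuron of the next layer (a fully connected network), their number follows directly from the layer sizes. A hypothetical C++ helper:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of connections (weights) in a fully connected feed-forward network
// whose layer sizes are listed in order: input, hidden..., output.
// with_bias adds one extra weight per hidden and output neuron.
std::size_t count_connections(const std::vector<std::size_t>& layers,
                              bool with_bias) {
    assert(layers.size() >= 2);
    std::size_t total = 0;
    for (std::size_t k = 0; k + 1 < layers.size(); ++k)
        total += (layers[k] + (with_bias ? 1 : 0)) * layers[k + 1];
    return total;
}
```

For a hypothetical 3-4-2 network (3 inputs, 4 hidden neurons, 2 outputs) this gives 3*4 + 4*2 = 20 connections, or 26 if each hidden and output neuron also has a bias weight.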

Author: Dmitriy Gizlyk