All,

The question here is how to get the time stamp of the tick itself, not the time when the tick arrives at the terminal as measured with GetTickCount() (i.e. whenever the start() function is called), as suggested.

MarketInfo(Symbol(), MODE_TIME) returns the time stamp of the tick as sent from the broker's server in the data feed.

Regards

VS

1) GetTickCount(), should work Live. Pointless on historical data.

2) Even mt5_data isn't saved in milliseconds. However, no_problem Live.

3) I don't see where you're going with that. If it's the same time in milliseconds then having milliseconds wouldn't help. If it's different times in milliseconds then GetTickCount() might help. Help in the sense that your code processes the current tick within less than a millisecond. How important that is all depends upon what you're trying to accomplish... I guess.

Thanks everybody for their responses. There was an indicator that was posted to the codebase, Rogue Tick Detector. But it is not approved yet. You can find it here for now.

The basic idea is that there are times when the current tick (tick0) comes in with a later timestamp than the previous tick (tick-1), but its price is no longer actionable, and the EA or human trader doesn't know this until after the fact. This was causing price-based events to be triggered erroneously. Rogue Tick Detector was able to flag these fake ticks and prevent the EA from acting on them (it waits for the next 'good' tick).

The current method of capturing the timestamps of the ticks, MarketInfo(Symbol(), MODE_TIME), does not return millisecond timestamps. So I came here to ask whether there are alternatives we are overlooking.

The EAs which include the rogue tick detection functions all run on a VPS in New York with SSD drives and Windows 2008, and are usually <2 ms from the broker server. No HFT or hyperscalping (average trade hold time is approx. 1 hour).

Brings me back to one of my original questions: So how is the mt4 (and mt5) platform [itself] supposed to properly distinguish between ticks that come in at the 'same time'?

edit, thanks ankasoftware for clarifying.

You cannot . . . does your Broker actually send you multiple ticks that happen at the same time? Or do they just increment the count by 2 and send the latter tick? How could you know if they did? Are you aware that there is a huge difference in the tick count between Brokers?

Yes, I am aware that different brokers have different feeds and the tick count can differ greatly. But it just so happens that the rogue ticks are being sent during certain times, mainly when trades were closed at a profit. It was affecting triggering of closealls and orders, so we found a way to detect and ignore them the best we can. I even suspected intentional feed manipulation at some point from some of the brokers.

But perhaps we should have a tick count ratio, where we count the total ticks

It may very well not affect many people, but I thought the potential damage was enough to warrant further investigation.

What do you have in mind? How would this be different from mt4 Volume? A ratio of what two numbers [ count and ??? ].

This subject gets very confusing very fast. I myself do not know everything about ticks, nor how metaQuotes processes them, nor why they would be so critical to someone. Allow me to summarize some of my observations.

1) metaQuotes says: you want milli_seconds time_stamp, [ they immediately start thinking tick_data ], who's going to hold this tick_data the broker? you mean telling you there's 200 ticks within that minute isn't good enough for you? You really want us to save 200 entry of data because OHLC+V isn't good enough?

2) trader number1 says: I don't need anyone to save the information I just want milli_seconds time stamp for determining old data.

3) trader number2 says: I don't need you to save the information for me, just give me the ability to import it and I'll get my own data.

4) trader number3 says: I don't see why it's such a big deal saving and providing tick data. Come on, computers have more power and memory these days, surely my broker can provide the data somewhere.

5) broker says: man I have a hard enough time giving you m1 data for more than 3_months what makes you think I'm capable or willing to provide that much data when you connect or for testing.

6) trader number4: we don't need it for testing and just a small portion for the data would be sufficient live, I don't complain about insufficient m1 now so whats the problem.

7) metaQuotes: still no-go, this means we'll have to facilitate functions which returns milli_seconds and indicators and such which distinguishes ticks ... are you trying to crash the terminal or something?

8) trader number5: you mean Volume isn't market_depth but a count of the number of ticks within a given time_frame :) . You mean I can miss tick? You mean ticks can get lost or delay in cyber_space? You mean tick_count differ between brokers? You mean the data the broker stores wouldn't have been the same as what I would have received? Whats all the fuss about tick then?

9) xxxxxxxxxxxx says: tick is top secret, whats provided certainly is good enough, i helped design the tick generator and have very little interest in providing that kind of resolution. not gonna happen.... period.

10) trader number6: there are technological limitations to what can be provided, how tick counts work, what can be received, etc..etc. This is not a metaTrader problem, rather a lot of retail platforms experience this problem. Look toward institutional software and be ready to shell out the big bucks.

Trader#3 to Trader#10: i dis-agree.

Ubzen says: I just don't know what to say anymore.

Ps> nearly forgot trader#7: fine, I'll save my own tick which comes to my terminal and program my own indicators and such to process this data... This is how I interpreted the question and hence why I recommended the GetTickCount().

I'm hoping to stay on topic here :). With that said, imagine a broker who sends millisecond timeStamps with every tick, but then proceeds not to save this information on her_side. Given all the dis-trust about brokers in general, this broker would open_up an onslaught of inquiries. This time people would have proof in milliseconds, but the broker would have no records to counter with. So in some sense, asking for tick_data | milliseconds or whatever, which leads to the same arguments, is basically asking the broker to save tick_data and for the platform to facilitate it.

On a second note, consider the reverse back-tests which most ppl do, where you run a strategy_live for about a week and then perform a back_test upon that week in_order to verify whether you get the same results. This person has millisecond time_stamps live, and also delays and missing packets live. Of course, like the original poster, you ignore the missing data and/or dismiss the delayed ticks. However, when you perform the back-test, all the broker's data with correct timestamps is there. This'll obviously generate different results than what you just received live.

So tell me, were you misled Live or are you being misled during the Back_Test?

I however Agree with your statement above. Imo, all this creates a set of paradoxes which leads me to stay away from inter_minute processes all_together. The platform has limitations, I just accept and move_on.

Timers, Timer Resolution, and Development of Efficient Code

Windows Timer Resolution: Megawatts Wasted

Interesting links, thank you. They led me to Microsecond Resolution Time Services for Windows, and I have conducted some tests based on information from those pages.

My tests on a Win 7 PC and a Windows 2012 VPS indicate that GetTickCount() always has a resolution of 15.6 msecs (64 interrupts per second) regardless of the system timer setting, whereas the resolution when obtaining millisecond time by calling the kernel32.dll functions GetSystemTime() [or GetLocalTime()] or GetSystemTimeAsFileTime() is affected by the system timer settings, and can give down to 0.5 msec resolution on both machines I tested.

GetTickCount() Resolution

Here is code for a script to test GetTickCount() resolution:

This always gives 15 or 16 (i.e. 15.6) on both machines tested regardless of system timer resolution changes mentioned below for the other tests.
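The measurement idea behind such a test is to poll the counter in a tight loop and record the size of each step when its value changes. The following is only a sketch of that idea in Python, not the MT4 script referred to above. The names `measure_resolution` and `make_coarse_clock` are hypothetical, and the simulated clock assumes a counter that advances once per 64 Hz interrupt (1000 / 64 = 15.625 ms) and is reported as a whole number of milliseconds.

```python
import time
from typing import Callable, List


def measure_resolution(clock: Callable[[], int], steps: int = 5) -> List[int]:
    """Poll `clock` in a tight loop and return the sizes of the next
    `steps` changes in its value (the observed resolution)."""
    increments = []
    last = clock()
    while len(increments) < steps:
        now = clock()
        if now != last:
            increments.append(now - last)
            last = now
    return increments


def make_coarse_clock(tick_us: int = 15_625, call_cost_us: int = 100):
    """Hypothetical stand-in for a millisecond counter that only advances
    on a 64 Hz interrupt. Each call pretends 0.1 ms of real time passed."""
    state = {"elapsed_us": 0}

    def clock() -> int:
        state["elapsed_us"] += call_cost_us
        interrupts = state["elapsed_us"] // tick_us   # whole interrupts so far
        return interrupts * tick_us // 1000           # truncated to whole ms

    return clock


if __name__ == "__main__":
    # Simulated 64 Hz counter: every step is 15 or 16 ms.
    print(measure_resolution(make_coarse_clock(), steps=8))
    # The same loop applied to a real clock on the local machine.
    print(measure_resolution(lambda: time.monotonic_ns() // 1_000_000, steps=3))
```

Because 15.625 ms is truncated to whole milliseconds, the simulated counter alternates between steps of 15 and 16, which matches the "15 or 16" pattern described above.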

GetSystemTime() Resolution

Now things start to get interesting. Here is code for a script to test GetSystemTime() resolution:

That gives a resolution of 15/16 msecs on a freshly booted PC with no other software running, but 1 msec if Chrome is running on the PC! As angevoyageur's second link explains, Chrome sets the system timer to 1 msec resolution, as does some other software.

I found two little utilities for setting the system timer resolution, so that 1 msec (or even 0.5 msecs) resolution can be obtained in a controlled way on a cleanly booted machine:

Windows System Timer Tool: http://vvvv.org/contribution/windows-system-timer-tool

Timer-Resolution: http://www.lucashale.com/timer-resolution/

I prefer the first one of the two, Windows System Timer Tool. With that I could reliably get 1 msec resolution via GetSystemTime(). GetLocalTime() could also be used similarly.

Btw the script code above is an example of how much better new MT4 code can be thanks to structs. In the old MT4 accessing GetSystemTime() required use of an integer array plus lots of messy bit manipulation.

GetSystemTimeAsFileTime() Resolution

Finally, I noted that Microsecond Resolution Time Services for Windows mentioned that GetSystemTimeAsFileTime() is a faster function for accessing system time, as well as requiring a smaller and simpler struct. The latter is certainly true for the new MT4 as the "struct" can be reduced to just a ulong.
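To make the struct comparison concrete, here is a sketch in Python's ctypes rather than MT4. The SYSTEMTIME field layout follows the Win32 definition (eight 16-bit fields), and the single 64-bit integer passed to GetSystemTimeAsFileTime() stands in for the "just a ulong" idea. The wrapper names `read_system_time_ms` and `read_file_time` are hypothetical, and both wrappers only work on Windows.

```python
import ctypes


class SYSTEMTIME(ctypes.Structure):
    """Win32 SYSTEMTIME: eight 16-bit fields, 16 bytes in total."""
    _fields_ = [
        ("wYear", ctypes.c_uint16),
        ("wMonth", ctypes.c_uint16),
        ("wDayOfWeek", ctypes.c_uint16),
        ("wDay", ctypes.c_uint16),
        ("wHour", ctypes.c_uint16),
        ("wMinute", ctypes.c_uint16),
        ("wSecond", ctypes.c_uint16),
        ("wMilliseconds", ctypes.c_uint16),
    ]


def read_system_time_ms() -> int:
    """Windows only: millisecond field of the current UTC time."""
    st = SYSTEMTIME()
    ctypes.windll.kernel32.GetSystemTime(ctypes.byref(st))
    return st.wMilliseconds


def read_file_time() -> int:
    """Windows only: current time as a count of 100 ns units.
    The 8-byte FILETIME is read into one 64-bit integer, which
    relies on the little-endian layout of Windows x86/x64."""
    ft = ctypes.c_uint64(0)
    ctypes.windll.kernel32.GetSystemTimeAsFileTime(ctypes.byref(ft))
    return ft.value


if __name__ == "__main__":
    print(ctypes.sizeof(SYSTEMTIME), "bytes vs", ctypes.sizeof(ctypes.c_uint64), "bytes")
```

The size difference is small, but the 64-bit value can be subtracted directly to get elapsed time, whereas SYSTEMTIME has to be recombined field by field.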

Here is code for a script to test GetSystemTimeAsFileTime() resolution:

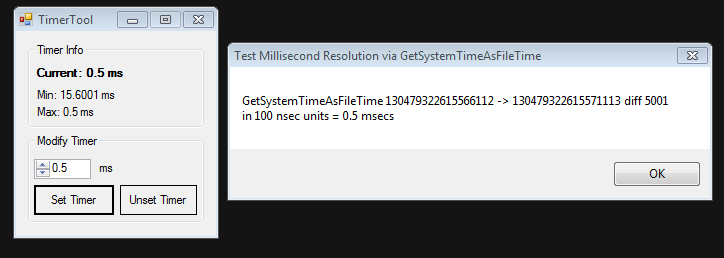

If Windows System Timer Tool is used to set a system timer resolution of 0.5 msecs, that little script reports a resolution of 5000 (or sometimes 5001) units of 100 nsecs, i.e. 0.5 msecs.
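The unit arithmetic is easy to get wrong, so here is a small Python sketch of it. A FILETIME value counts 100 ns units since 1601-01-01 UTC, so 10,000 units make one millisecond. The helper names `filetime_delta_to_ms` and `filetime_to_unix_ms` are hypothetical and are not part of any MT4 or Windows API.

```python
FILETIME_UNITS_PER_MS = 10_000                  # 1 ms = 10,000 units of 100 ns
EPOCH_OFFSET_UNITS = 116_444_736_000_000_000    # 1601-01-01 to 1970-01-01, in 100 ns units


def filetime_delta_to_ms(delta_units: int) -> float:
    """Convert a difference between two FILETIME readings to milliseconds."""
    return delta_units / FILETIME_UNITS_PER_MS


def filetime_to_unix_ms(filetime: int) -> int:
    """Convert an absolute FILETIME value to milliseconds since 1970-01-01 UTC."""
    return (filetime - EPOCH_OFFSET_UNITS) // FILETIME_UNITS_PER_MS


if __name__ == "__main__":
    print(filetime_delta_to_ms(5000))      # 0.5, the 0.5 ms timer setting
    print(filetime_delta_to_ms(156_250))   # 15.625, the default 64 Hz step
```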

Use of GetSystemTimeAsFileTime() is indeed simpler and can show finer resolution.

Here are some pics of this one in use.

After a clean boot:

With Timer Tool used to set the system timer resolution to 1 ms:

And with Timer Tool used to set the system timer resolution to 0.5 ms:

Conclusion

The best function to use for obtaining millisecond timings in MT4 is GetSystemTimeAsFileTime(), called as shown in the test script above, with a utility such as Windows System Timer Tool used to set the desired system timer resolution.