Multi-Head Attention

In the previous section, we got acquainted with the Self-Attention mechanism, introduced in June 2017 in the paper "Attention Is All You Need". Its key feature is the ability to capture dependencies between individual elements of a sequence. We implemented it and tested it on real data, where the model demonstrated its effectiveness.

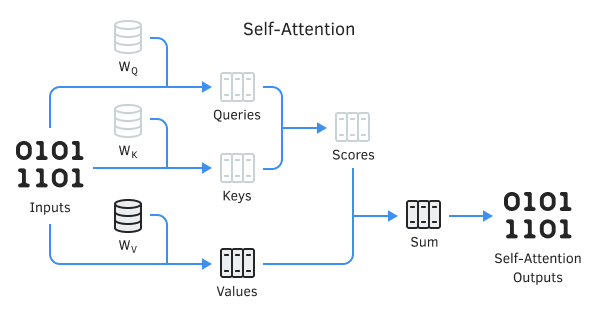

Recall that the Self-Attention algorithm uses three trainable weight matrices (WQ, WK, and WV). These matrices are used to obtain three entities: Query, Key, and Value. The first two determine the pairwise relationships between elements of the sequence, while the third represents the context of the analyzed element.
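To make the roles of the three matrices concrete, here is a minimal pure-Python sketch of one Self-Attention pass. It assumes the scaled dot-product form from "Attention Is All You Need": scores are divided by the square root of the Key size before the softmax. The function names (`matmul`, `softmax`, `self_attention`) are hypothetical helpers for illustration. This is not the implementation from the previous section.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p); matrices are lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    top = max(row)
    exps = [math.exp(s - top) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product Self-Attention for one sequence x (n_tokens x d_model)."""
    q = matmul(x, w_q)  # Query of every element
    k = matmul(x, w_k)  # Key of every element
    v = matmul(x, w_v)  # Value of every element
    scale = math.sqrt(len(k[0]))
    # Pairwise Query-Key scores: row i holds element i's score against every element
    scores = matmul(q, transpose(k))
    weights = [softmax([s / scale for s in row]) for row in scores]
    # Each output row is the weighted sum of all Value rows (the element's context)
    return matmul(weights, v), weights


if __name__ == "__main__":
    rng = random.Random(0)
    n_tokens, d_model, d_k = 4, 6, 3
    x = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_tokens)]
    w_q, w_k, w_v = ([[rng.gauss(0, 0.5) for _ in range(d_k)] for _ in range(d_model)]
                     for _ in range(3))
    out, weights = self_attention(x, w_q, w_k, w_v)
    print(len(out), len(out[0]))  # 4 3: one context vector of size d_k per element
```

Only the Query and Key products decide how much attention each element pays to the others. The Value matrix decides what content is mixed into the result.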

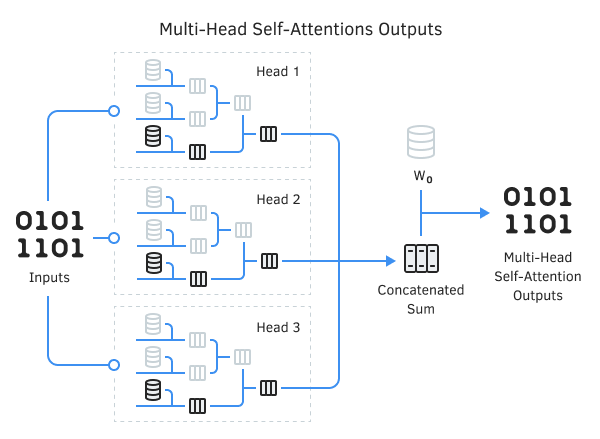

Real situations are rarely straightforward. The same situation can often be interpreted from different perspectives, and different points of view can lead to completely opposite conclusions. In such cases, it is important to consider all possible options and draw a conclusion only after a comprehensive analysis. That is why, in the same paper, the authors proposed Multi-Head Attention. It runs several Self-Attention threads in parallel, each with its own weights. Each "head" forms its own opinion, and the decision is made by a balanced vote. Such a design should identify connections between different elements of the sequence better than a single head.
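The computation inside each head can be written in the scaled dot-product form from "Attention Is All You Need". In this notation, X is the input sequence, h is the number of heads, and d_k is the size of each head's Key vectors. The index i and the per-head matrices are introduced here for illustration. Every head repeats the familiar Self-Attention step, but with its own trainable matrices:

```latex
\begin{aligned}
Q_i &= X W_i^Q, \qquad K_i = X W_i^K, \qquad V_i = X W_i^V, \\
\mathrm{head}_i &= \mathrm{softmax}\!\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) V_i, \qquad i = 1, \dots, h
\end{aligned}
```

Because the heads share the input X but not the weights, each one can learn a different set of dependencies.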

Multi-Head Attention architecture diagram

In the Multi-Head Attention architecture, several Self-Attention threads with different weights run in parallel, which simulates analyzing the situation from several perspectives. The outputs of the threads are concatenated into a single tensor. The final result is obtained by multiplying this tensor by the matrix WO, whose parameters are learned during training of the neural network. This block replaces the single Self-Attention block in both the encoder and the decoder of the Transformer architecture.
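The parallel heads, the concatenation, and the final projection can be sketched in pure Python as follows. This is a minimal illustration, not a production implementation, and it rests on a few assumptions:

- Each head uses scaled dot-product attention.
- Each head's size is `d_model // n_heads`, a common choice also used in the original paper.
- The names `attention_head`, `multi_head_attention` and `random_matrix` are hypothetical.
- `w_o` plays the role of the trainable matrix WO.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p); matrices are lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def softmax(row):
    top = max(row)
    exps = [math.exp(s - top) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(x, w_q, w_k, w_v):
    """One Self-Attention thread ("head") with its own weight matrices."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, transpose(k))
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_attention(x, heads, w_o):
    """heads: list of (w_q, w_k, w_v) tuples, one per head.
    w_o: projection matrix of shape (n_heads * d_v) x d_out."""
    outputs = [attention_head(x, *h) for h in heads]  # heads are independent
    # Concatenate the heads' outputs for each sequence element along the feature axis
    concat = [[value for out in outputs for value in out[i]] for i in range(len(x))]
    # Final projection with the trainable matrix WO
    return matmul(concat, w_o)


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(42)
    n_tokens, d_model, n_heads = 5, 8, 2
    d_k = d_model // n_heads  # assumed per-head size
    x = random_matrix(n_tokens, d_model, rng)
    heads = [tuple(random_matrix(d_model, d_k, rng) for _ in range(3))
             for _ in range(n_heads)]
    w_o = random_matrix(n_heads * d_k, d_model, rng)
    y = multi_head_attention(x, heads, w_o)
    print(len(y), len(y[0]))  # 5 8: same shape as the input sequence
```

Because WO maps the concatenated tensor back to `d_model` columns, the block's output has the same shape as its input. That is what allows it to stand in for a single Self-Attention block inside the encoder and decoder.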

In practice, Multi-Head Attention, rather than a single Self-Attention block, is the variant most often used to solve real problems.