- Description of architecture and implementation principles

- Building Self-Attention with MQL5 tools

- Organizing parallel computing in the attention block

- Testing the attention mechanism

Description of architecture and implementation principles

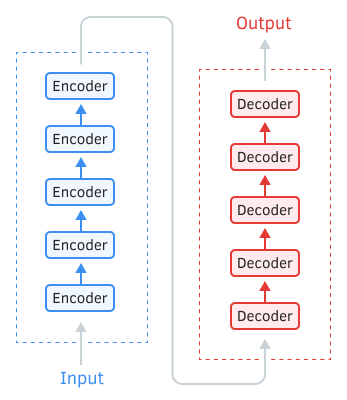

The Transformer architecture is built from consecutive Encoder and Decoder blocks with similar structures. Each block consists of several identical layers, and each layer has its own weight matrices.

Transformer architecture

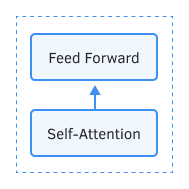

Each Encoder layer contains two internal layers: Self-Attention and Feed Forward. The Feed Forward layer consists of two fully connected layers of neurons, with a ReLU activation function on the hidden layer. Both internal layers apply the same weights to every element of the sequence, which makes it possible to run the calculations for all sequence elements simultaneously in parallel threads.
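The Feed Forward layer can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the article's MQL5 implementation. Sequence elements are plain lists of floats, the weights are random, and the biases are zero. The names `feed_forward` and `linear` and the layer sizes are hypothetical. Each element passes through the same weights on its own, so each loop iteration could run in a separate thread.

```python
import random

# Hypothetical helper names; illustrative sketch only.

def relu(x):
    return x if x > 0.0 else 0.0

def linear(vec, weights, bias):
    # weights is a list of rows: weights[i][j] connects input i to output j
    return [sum(vec[i] * weights[i][j] for i in range(len(vec))) + bias[j]
            for j in range(len(bias))]

def feed_forward(sequence, w1, b1, w2, b2):
    # The same weights are applied to every element, and each element is
    # processed independently of the others.
    output = []
    for element in sequence:
        hidden = [relu(h) for h in linear(element, w1, b1)]  # ReLU on hidden layer
        output.append(linear(hidden, w2, b2))                # second layer, no activation
    return output

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, d_hidden, seq_len = 4, 8, 3  # assumed toy sizes
w1, b1 = random_matrix(d_model, d_hidden, rng), [0.0] * d_hidden
w2, b2 = random_matrix(d_hidden, d_model, rng), [0.0] * d_model
sequence = random_matrix(seq_len, d_model, rng)
result = feed_forward(sequence, w1, b1, w2, b2)
```

Running the layer on a single element gives the same vector as that element's row in the full result, which is what makes per-element parallelism safe here.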

Encoder

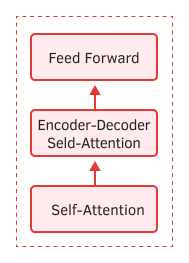

The Decoder layer has a similar structure, with an additional Encoder-Decoder Attention layer that analyzes dependencies between the input and output sequences.
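The Decoder's extra attention layer can be sketched as ordinary scaled dot-product attention. The Queries come from the Decoder sequence, while the Keys and Values come from the Encoder output. The Python below is an illustrative sketch with a hypothetical function name (`cross_attention`) and toy identity weight matrices in place of trained ones. Here the Score matrix is M×N, where M is the Decoder sequence length and N is the Encoder sequence length.

```python
import math

# Hypothetical helper names; illustrative sketch only.

def matmul(a, b):
    # Plain matrix product of lists of rows
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(decoder_seq, encoder_seq, wq, wk, wv):
    q = matmul(decoder_seq, wq)  # Queries from the Decoder sequence
    k = matmul(encoder_seq, wk)  # Keys from the Encoder output
    v = matmul(encoder_seq, wv)  # Values from the Encoder output
    d_k = len(k[0])
    # M x N scores: one row per Decoder element, one column per Encoder element
    scores = [[sum(qi[t] * kj[t] for t in range(d_k)) / math.sqrt(d_k) for kj in k]
              for qi in q]
    weights = [softmax(row) for row in scores]
    return weights, matmul(weights, v)

identity = [[1.0, 0.0], [0.0, 1.0]]        # assumed toy weights
decoder = [[1.0, 0.0], [0.0, 1.0]]         # M = 2
encoder = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # N = 3
weights, context = cross_attention(decoder, encoder, identity, identity, identity)
```

In this toy input, the first Decoder element gives equal weight to the two Encoder elements whose Keys have the same dot product with its Query.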

Decoder

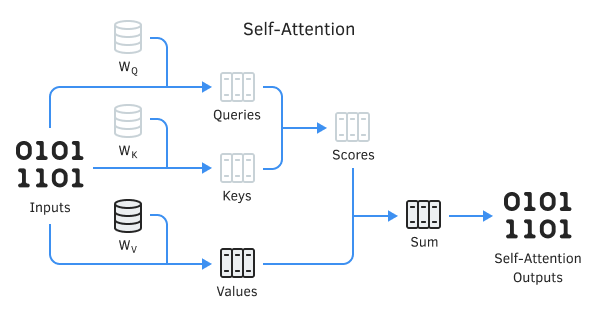

The Self-Attention mechanism consists of several steps applied to each element of the sequence.

- First, we compute the Query, Key, and Value vectors by multiplying each element of the sequence by the corresponding weight matrix: WQ, WK, and WV.

- Next, we determine the pairwise dependencies between elements of the sequence. To do this, we take the dot product of an element's Query vector with the Key vectors of all elements of the sequence, and we repeat this for the Query vector of every element. The result is a matrix called Score of size N×N, where N is the sequence length.

- We then divide the obtained values by the square root of the Key vector dimension and normalize them with the Softmax function separately for each Query (that is, for each row of Score). This gives the coefficients of the pairwise dependencies between the elements of the sequence.

- Multiplying each Value vector by the corresponding attention coefficient gives the adjusted value of that element. The goal of this step is to focus attention on relevant elements and reduce the influence of irrelevant ones.

- Finally, for each element, we sum all the adjusted Value vectors. The result is that element's output vector from the Self-Attention layer.
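The steps above can be collected into a short Python sketch that computes softmax(Q·Kᵀ / √d_k)·V for a whole sequence. It is an illustration only. It uses list-of-rows matrices and toy identity weights in place of trained WQ, WK, and WV. `self_attention` and the other helper names are hypothetical and do not correspond to the MQL5 code in this article.

```python
import math

# Hypothetical helper names; illustrative sketch only.

def matmul(a, b):
    # Plain matrix product of lists of rows
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, wq, wk, wv):
    # Step 1: Query, Key and Value vectors for every element
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    n = len(x)
    # Step 2: Score[i][j] = dot(Query_i, Key_j), an N x N matrix
    score = [[sum(q[i][t] * k[j][t] for t in range(d_k)) for j in range(n)]
             for i in range(n)]
    # Step 3: divide by sqrt(d_k) and apply Softmax row by row (per Query)
    coeffs = [softmax([s / math.sqrt(d_k) for s in row]) for row in score]
    # Steps 4 and 5: weight each Value vector by its coefficient and sum them
    out = [[sum(coeffs[i][j] * v[j][c] for j in range(n)) for c in range(len(v[0]))]
           for i in range(n)]
    return coeffs, out

identity = [[1.0, 0.0], [0.0, 1.0]]  # assumed toy weights
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
coeffs, out = self_attention(x, identity, identity, identity)
```

Each row of `coeffs` sums to 1. The rows are independent of one another, which is what allows the per-Query work to be spread across parallel threads.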

The output of each layer is added to that layer's input sequence, and the sum is normalized.

To normalize the data, we first determine the mean value of the entire sequence. Then, for each element, we divide its deviation from the mean by the standard deviation of the sequence.
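Below is a minimal Python sketch of the residual addition and normalization described above. As the text states, it computes the statistics over all values of the entire sequence. The small `eps` term is a hypothetical addition that guards against division by zero. Using the population standard deviation (dividing by the number of values) is also an assumption. For reference, the Layer Normalization used in the original Transformer computes the mean and standard deviation separately for each element across its features and adds a learnable scale and shift.

```python
import math

# Hypothetical function name; illustrative sketch only.

def add_and_normalize(inputs, layer_output, eps=1e-8):
    # Residual connection: add the layer output to its input, value by value
    summed = [[a + b for a, b in zip(x_row, y_row)]
              for x_row, y_row in zip(inputs, layer_output)]
    # Mean and (population) standard deviation over the whole sequence
    values = [v for row in summed for v in row]
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    # Each value becomes its deviation from the mean divided by the std
    # (eps is an assumed safeguard against a zero standard deviation)
    return [[(v - mean) / (std + eps) for v in row] for row in summed]

normalized = add_and_normalize([[1.0, 2.0], [3.0, 4.0]], [[1.0, 2.0], [3.0, 4.0]])
```

After normalization, the values of the sequence have a mean close to 0 and a standard deviation close to 1.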