Creating a model for testing

In the previous section, we created a template for an Expert Advisor to test the feasibility of using our neural network models for conducting trading operations in financial markets. This is a universal template that can work with any model. However, it has limited parameters for the description of one candlestick and for the configuration of the results layer. As a result of the model operation, it should return a tensor of values that the decision-making block in the template can unambiguously interpret.

For testing purposes, I decided to build a new model that involves multiple types of neural layers. We will create and train the model using a script. The script format is familiar to us from the numerous tests that we examined in this book. We will create a new script in the file gpt_not_norm.mq5. We will save the new script file in the gpt subdirectory of our book in accordance with the file structure.

At the script's global level, we will declare two constants:

- HistoryBars — number of bars describing one pattern in the training dataset

- ModelName — file name to save the trained model

Next, we define the external parameters of the script. First of all, this is the name of the file with the training dataset StudyFileName. Please note that we are using a dataset without prior data normalization. In the previous section, in our Expert Advisor template, we did not configure data preprocessing, so the entire calculation relies on using batch normalization layers. The tests we conducted earlier confirm the possibility of such a replacement.

The external parameter OutputFileName contains the name of the file for writing the dynamics of changes in the model error during the training process.

We plan to use a block with the GPT architecture. For such an architecture, it's common to use a parameter to specify the length of the internal buffer sequence for the pattern. To request this parameter from the user, we will create an external parameter BarsToLine.

Next comes the set of parameters that has become standard for such scripts:

- NeuronsToBar — number of input layer neurons per bar

- UseOpenCL — flag for using OpenCL

- BatchSize — batch size between weight matrix updates

- LearningRate — learning rate

- HiddenLayers — number of hidden layers

- HiddenLayer — number of neurons in the hidden layer

- Epochs — number of iterations for updating the weight matrix before the training process stops.

#define HistoryBars 40

input int BarsToLine = 60;


After declaring external parameters, we add our neural network model library to the script.

//+------------------------------------------------------------------+


This is where the work in the global field ends. Let's continue writing the script code in the body of the OnStart function. In the body of the function, we use a structured approach to call individual functions, each of which performs specific actions.

void OnStart(void)


The first function in our script is the model initialization function NetworkInitialize. In its parameters, this function receives a pointer to the model object that needs to be initialized.

The function body provides two options for model initialization. First, we attempt to load a pre-trained model from the file specified in the external parameters of the script and check the operation result. If the model is successfully loaded, we skip the block that creates a new model and continue working with the loaded model. This capability enables us to stop and resume the learning process if necessary.

bool NetworkInitialize(CNet &net)


If the loading of a pre-trained model fails, we create a new neural network. First, we create a dynamic array to store pointers to objects describing neural layers and then we immediately call the CreateLayersDesc function to create the architecture description of our model.

CArrayObj layers;


As soon as our dynamic array of objects contains the complete description of the model to be created, we call the model generation method, specifying in the parameters a pointer to the dynamic array describing the model, the loss function, and the model optimization parameters.

//--- initialize the network


We make sure to verify the result of the operation.

After creating the model, we set the user-specified flag for using OpenCL technology and the error smoothing range.

net.UseOpenCL(UseOpenCL);


This concludes the model initialization function. Let's now consider the algorithm of the CreateLayersDesc function that creates the architecture description of the model. In the parameters, the function receives a pointer to a dynamic array object describing the model architecture. In the body of the function, we immediately clear the received array.

bool CreateLayersDesc(CArrayObj &layers)


First, we create the initial data layer. The algorithm for creating all neural layers will be the same, so we begin by initiating a new object for describing the neural layer. As always, we verify the result of the operation, that is, check the creation of a new object.

CLayerDescription *descr;


Once the neural layer description object is created, we fill it with data sufficient to unambiguously understand the architecture of the neural layer being created.

descr.type = defNeuronBase;


We will input information about the last three candlesticks into the created model. In fact, this is not enough, both in terms of the amount of information for the neural network to make a decision and from the practical trading perspective. However, we should remember that we will use blocks with the GPT architecture in our model. This architecture involves the accumulation of historical data inside a block, compensating for the lack of information. At the same time, using a small amount of initial data allows for a significant reduction in computational operations at each iteration. Thus, the size of the initial data layer is determined as the product of the number of elements to describe one candlestick and the number of analyzed candlesticks. In our case, the number of description elements for one candlestick is specified in the external parameter NeuronsToBar, and the number of analyzed candlesticks is specified by the GPT_InputBars constant.

The initial data layer does not use either an activation function or parameter optimization. Note that we write the initial data directly to the results buffer.

if(!layers.Add(descr))


Once we have filled the architecture description object of the neural layer with the necessary set of data, we add it to our dynamic array of pointers to objects.

I would like to remind you that we did not pre-process the initial data. Therefore, in the neural network architecture, we have included the creation of a batch data normalization layer immediately after the initial data layer. According to the above algorithm, we instantiate a new object describing the neural layer. It is important to verify the result of the object creation operation, as in the next stage, we will be populating the elements of this object with the necessary description of the architecture of the created neural layer. Attempting to access the object elements with an invalid pointer will result in a critical error.

//--- create a data normalization layer


In the description of the neural layer being created, we specify the type of the neural layer defNeuronBatchNorm, which corresponds to the batch normalization layer. We set the sizes of the neural layer and the window of initial data to be equal to the size of the previous input data neural layer.

We set the normalization batch size equal to the batch size between weight matrix updates, which the user specified in the external parameter BatchSize.

Similar to the previous layer, the batch normalization layer does not employ an activation function. However, it introduces the Adam optimization method for trainable parameters.

descr.type = defNeuronBatchNorm;


After specifying all the necessary parameters for describing the neural layer to be created, we add a pointer to the object to the dynamic array of pointers describing the architecture of our model.

if(!layers.Add(descr))


As we have discussed previously, four parameters in the description of one candlestick might be insufficient, so it would be beneficial to add a few more. For applying machine learning when features are scarce, a number of approaches have been developed and grouped under the name feature engineering. One such approach uses convolutional layers in which the number of filters exceeds the size of the input window.

The logic is as follows. The description vector of one element is treated as the coordinates of a point representing the current state in an N-dimensional space, where N is the length of the description vector. By performing convolution, we project this point onto the convolution vector. We used exactly this property when compressing data and reducing its dimensionality, and the same property can be used to increase dimensionality. There is no contradiction with the previously studied use of convolutional layers: we simply take more filters than there are elements in the description vector and thereby increase the dimension of the space.

Let's use this method and create a convolutional layer in which the number of filters is twice the number of elements in the description of one candlestick. Note that here the convolution is performed within the description of a single candlestick, so both the size of the input window and its step are equal to the size of the description vector of one candlestick.
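
To make the dimensionality expansion concrete, here is a minimal sketch in standard C++ rather than MQL5, so it can be compiled on its own. It is not the library's convolutional layer: the function name `Convolve`, the absence of bias and activation, and the filter-by-filter output layout are assumptions made only for illustration.

```cpp
#include <cstddef>
#include <vector>

// Apply each filter in `weights` to `input`, using a window of `window`
// elements moved by `step` elements. weights[f] holds the `window`
// coefficients of filter f (bias and activation are omitted for brevity).
// Assumed output layout: all windows of filter 0, then all of filter 1, ...
std::vector<double> Convolve(const std::vector<double>& input,
                             const std::vector<std::vector<double>>& weights,
                             std::size_t window, std::size_t step)
{
    std::vector<double> out;
    if (window == 0 || step == 0 || input.size() < window)
        return out;                       // nothing to convolve
    const std::size_t positions = (input.size() - window) / step + 1;
    out.reserve(weights.size() * positions);
    for (const auto& filter : weights) {
        for (std::size_t p = 0; p < positions; ++p) {
            double sum = 0.0;             // projection of one window onto the filter
            for (std::size_t j = 0; j < window; ++j)
                sum += input[p * step + j] * filter[j];
            out.push_back(sum);
        }
    }
    return out;
}
```

With 3 candlesticks of 4 values each, a window and step of 4, and 8 filters, the function returns 24 values: each candlestick is now described by 8 numbers instead of 4.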

The algorithm for creating the description of the neural layer remains the same. First, we create a new instance of the neural layer description object and check the result of the operation.

//--- Convolutional layer


Then we fill in the required information.

descr.type = defNeuronConv;


We pass a pointer to the populated instance of the object into the dynamic array describing the architecture of the model being created.

if(!layers.Add(descr))


After the information passes through the convolutional layer, we expect to obtain a tensor with eight elements describing the state of one candlestick. However, we know that fully connected models do not evaluate the dependence between elements, whereas such dependencies are typically strong when analyzing time series data.

Hence, at the next stage, we aim to analyze such dependencies. We discussed such analysis during our introduction to convolutional networks. Despite seeming peculiar, we employ the same type of neural layers to address two seemingly different tasks. In fact, we are performing a similar task but with different data. In the preceding convolutional layer, we decomposed the description vector of a single candlestick into a larger number of elements. We can look at this task from another perspective. As we discussed during the study of the convolutional layer, the convolution process involves determining the similarity between two functions. That is, in each filter, we identify the similarity of the original data with some reference function. Each filter uses its own reference function. By conducting convolution operations on the scale of a single bar, we sought the similarity of each bar with some reference.

Now we want to analyze the dynamics of changes in candlestick parameters. To do this, we need to perform convolution between identical elements of description vectors for different candles. After convolution, the previous layer returned three values sequentially (the number of analyzed candlesticks) from each filter. So, the next step is to create a convolutional layer with a window of initial data and a step equal to the number of analyzed candlesticks. In this convolutional layer, we will also use eight filters.

Let's create a description for the convolutional neural layer following the algorithm mentioned earlier.

//--- Convolutional layer 2


descr.type = defNeuronConv;


if(!layers.Add(descr))


Thus, after preprocessing the data in one batch normalization layer and two consecutive convolutional layers, we obtained a tensor with 64 elements (8 * 8). Let me remind you that we fed a tensor of 12 elements to the input of the neural network: 3 candlesticks with 4 elements each.
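
The sizes quoted above can be checked with a few lines of arithmetic. The sketch below is standard C++, not part of the script. It assumes convolution without padding, and the constant names are illustrative stand-ins for NeuronsToBar and GPT_InputBars.

```cpp
#include <cstddef>

// Number of outputs of a 1-D convolutional layer, assuming no padding.
constexpr std::size_t ConvOutputs(std::size_t inputs, std::size_t window,
                                  std::size_t step, std::size_t filters)
{
    return ((inputs - window) / step + 1) * filters;
}

constexpr std::size_t kNeuronsToBar = 4;  // elements describing one candlestick
constexpr std::size_t kInputBars    = 3;  // analyzed candlesticks

// Input layer: 3 candlesticks * 4 elements.
constexpr std::size_t kInput = kNeuronsToBar * kInputBars;
// Batch normalization keeps the size unchanged.
constexpr std::size_t kNorm = kInput;
// First convolution: window = step = one candlestick, 2 * 4 = 8 filters.
constexpr std::size_t kConv1 =
    ConvOutputs(kNorm, kNeuronsToBar, kNeuronsToBar, 2 * kNeuronsToBar);
// Second convolution: window = step = number of candlesticks, 8 filters.
constexpr std::size_t kConv2 = ConvOutputs(kConv1, kInputBars, kInputBars, 8);

static_assert(kInput == 12, "input layer size");
static_assert(kConv1 == 24, "3 windows * 8 filters");
static_assert(kConv2 == 64, "8 windows * 8 filters");
```

The second convolution sees 24 values as 8 groups of 3 (one group per filter of the first layer), which is why it produces 8 windows and, with 8 filters, the 64 elements passed to the GPT block.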

Next, we will process the signal in a block with the GPT architecture. In it, we will create four sequential neural layers with eight attention heads in each. We have exposed the size of the depth of analyzed data in the external parameters of the script. This will allow us to conduct training with different depths and choose the optimal parameter based on the trade-off between training costs and model performance. The algorithm for creating a description of the neural layer remains the same.

//--- GPT layer


descr.type = defNeuronGPT;


if(!layers.Add(descr))


After the GPT block, we will create a block of fully connected neural layers. All layers in the block will be identical. We included the number of layers and neurons in each into the external parameters of the script. According to the algorithm proposed above, we create a new instance of the neural layer description object and check the result of the operation.

//--- Hidden fully connected layers


After successfully creating a new object instance, we populate it with the necessary data. As mentioned above, we will take the number of neurons in the layer from the external parameter HiddenLayer. I chose the activation function Swish. Certainly, for greater script flexibility, more parameters can be moved to external settings, and you can conduct multiple training cycles with different parameters to find the best configuration for your model. This approach will require more time and expense for training the model but will allow you to find the most optimal values for the model parameters.

descr.type = defNeuronBase;


Since we plan to create identical neural layers, we then create a loop with a number of iterations equal to the number of neural layers to be created. In the body of the loop, we will add the created neural layer description to the dynamic array of architecture descriptions for the model being created. And, of course, we check the result of the operations at each iteration of the loop.

for(int i = 0; i < HiddenLayers; i++)


To complete the model, we will create a results layer. This is a fully connected layer that contains two neurons with the tanh activation function. The choice of this activation function is based on the aggregate assessment of the target values of the trained model:

- The first element of the target value takes 1 for buy targets and −1 for sell targets, which is best configured by the hyperbolic tangent function tanh.

- We trained the models on the EURUSD pair, therefore, the value of the expected movement to the nearest extremum should be in the range from −0.05 to 0.05. In this range of values, the graph of the hyperbolic tangent function tanh is close to linear.

If you plan to use the model on instruments with an absolute value of the expected movement to the nearest extremum of more than 1, you can scale the target result. Then use reverse scaling when interpreting the model signal. You might also consider using a different activation function.
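
A small sketch of the scaling idea, written in standard C++ for illustration. The helper names and the `scale` factor are hypothetical and do not appear in the script. The last helper measures how far tanh departs from a straight line at a given point.

```cpp
#include <cmath>

// Hypothetical helper: bring a large target movement into the range
// where tanh is close to linear. `scale` is chosen by the user.
inline double ScaleTarget(double movement, double scale)
{
    return movement / scale;
}

// Hypothetical helper: convert a model output back to the instrument's units.
inline double UnscaleOutput(double output, double scale)
{
    return output * scale;
}

// Absolute difference between tanh(x) and x, i.e. the linearity error at x.
inline double TanhLinearityError(double x)
{
    return std::fabs(std::tanh(x) - x);
}
```

At x = 0.05 the linearity error is below 0.0001, while at x = 1 it exceeds 0.2, which illustrates why targets with an absolute value above 1 benefit from scaling.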

We use the same algorithm to create a description of the neural layer in the architecture of the created model. First, we create a new instance of the neural layer description object and check the result of the operation.

//--- Results layer


We then populate the created object with the necessary information: the type of neural layer, the number of neurons, the activation function, and the optimization method for the model parameters.

descr.type = defNeuronBase;


We add a pointer to the populated object to the dynamic array describing the architecture of the model being created and check the operation result.

if(!layers.Add(descr))


This completes the function.

Next in the script's algorithm is the LoadTrainingData function, which loads the training dataset. In the parameters, the function receives a string variable with the name of the file to load and pointers to two dynamic array objects: data for patterns and result for target values.

bool LoadTrainingData(string path, CArrayObj &data, CArrayObj &result)


Let me remind you that we will load the training sample without preliminary normalization of the initial data from the file study_data_not_norm.csv since we plan to use the model in real-time, and we will use a batch normalization layer to prepare the initial data.

The algorithm for loading the source data completely repeats what we previously considered while performing the same task in the GPT architecture testing script. Let's briefly recap the process. To load the training dataset, we declare two new variables to store pointers to data buffers, into which we will read patterns and their target values one by one from the file (pattern and target respectively). We will create the object instances later, because we need new object instances to load each pattern. Therefore, we will create objects in the body of the loop before the actual process of loading data from the file.

CBufferType *pattern;


After completing the preparatory work, we open the file with the training sample to read the data. When opening a file, among other flags, we specify FILE_SHARE_READ. This flag opens shared read access to the file. That is, by adding this flag, we do not prevent other applications from reading the file. This will allow us to run several scripts in parallel with different parameters, and they will not block each other's access to the file. Of course, we can run several scripts in parallel only if the hardware capacity allows it.

//--- open the file with the training sample


Make sure to check the operation result. In case of a file opening error, we inform the user about the error, delete all previously created objects, and exit the program.

After successfully opening the file, we create a loop to read data from the file. The operations in the body of the loop will be repeated until one of the following events occurs:

- The end of the file is reached.

- The user interrupts program execution.

//--- show the progress of loading training data in the chart comment


In the body of the loop, we first create new instances of objects to record the current pattern and its target values. Again, we immediately check the result of the operation. If an error occurs, we inform the user about the error, delete previously created objects, close the file, and exit the program. It is very important to delete all objects and close the file before exiting the program.

if(!(target = new CBufferType()))


After this, we organize a nested loop with the number of iterations equal to the full data pattern. We have created training samples of 40 candlesticks per pattern. Now, we need to sequentially read all the data. However, our model does not require such a large pattern description for training. Therefore, we will skip unnecessary data and will only write the last required data to the buffer.

int skip = (HistoryBars - GPT_InputBars) * NeuronsToBar;
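
The effect of the skip offset can be sketched in standard C++ as follows. This is an illustration only: the real script reads values from a file one at a time, whereas here the stored pattern is assumed to be already available as a vector, and the function name `TakeLastBars` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Keep only the last `inputBars` candlesticks of a stored pattern that
// contains `historyBars` candlesticks of `neuronsToBar` values each.
std::vector<double> TakeLastBars(const std::vector<double>& row,
                                 std::size_t historyBars,
                                 std::size_t inputBars,
                                 std::size_t neuronsToBar)
{
    std::vector<double> pattern;
    if (inputBars > historyBars || row.size() < historyBars * neuronsToBar)
        return pattern;                    // malformed input: empty pattern
    const std::size_t skip = (historyBars - inputBars) * neuronsToBar;
    for (std::size_t i = 0; i < historyBars * neuronsToBar; ++i) {
        if (i < skip)
            continue;                      // value is read but not stored
        pattern.push_back(row[i]);
    }
    return pattern;
}
```

With 40 stored candlesticks, 3 analyzed candlesticks, and 4 values per candlestick, the first (40 - 3) * 4 = 148 values are skipped and the last 12 are kept.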


After loading the current pattern data in full, we organize a similar loop to load target values. This time the number of iterations of the loop will be equal to the number of target values in the training dataset, that is, in our case, two. Before starting the loop, we will check the state of the pattern saving flag. We enter the loop only if the pattern description has been loaded in full.

for(int i = 0; i < 2; i++)


After the data loading loops have been executed, we move on to the block in which pattern information is added to our dynamic arrays. We add pointers to objects to the dynamic array of descriptions of patterns and target results. We also check the results of all operations.

if(!data.Add(pattern))


After successfully adding to the dynamic arrays, we inform the user about the number of loaded patterns and proceed to load the next pattern.

//--- show the loading progress in the chart comment (no more than 1 time every 250 milliseconds)


After successfully loading all the data from the training dataset, we close the file and complete the data loading function.

Next, we move on to the procedure for training our model in the NetworkFit function. In its parameters, the function receives references to four objects:

- trainable model

- dynamic array of system state descriptions

- dynamic array of target results

- vector for recording the dynamics of the model error during training

bool NetworkFit(CNet &net, const CArrayObj &data, const CArrayObj &result, VECTOR &loss_history)


In the body of the function, we first do a little preparatory work. We start by preparing local variables.

int patterns = data.Total();


After completing the preparatory work, we organize nested loops to train our model. The external loop will count the number of updates to the weight matrices, and in the nested loop, we will iterate over the patterns of our training dataset.

Let me remind you that in the GPT architecture, historical data is accumulated in a stack. Therefore, for the model to work correctly, the historical sequence of data input to the model is very important, similar to recurrent models. For this reason, we cannot shuffle the training dataset within a single training batch, and we will feed the model with patterns in chronological order. However, for model training, we can use random batches from the entire training dataset. It is worth noting that when determining the training batch, its size should be increased by the size of the internal accumulation sequence of the GPT block, as maintaining their chronological sequence is necessary for correctly determining dependencies between elements.

Thus, before running the nested loop, we define the boundaries of the current training batch.

In the body of the nested loop, before proceeding with further operations, we check the flag for the forced termination of the program, and if necessary, we interrupt the function execution.

//--- loop through epochs


First, we perform the feed-forward pass through the model by calling the net.FeedForward method. In the parameters of the feed-forward method, we pass a pointer to the object describing the current pattern state and check the result of the operation. If an error occurs during method execution, we inform the user about the error, delete the created objects, and exit the program.

if(!net.FeedForward(data.At(k + i)))


After the successful execution of the feed-forward method, we check the fullness of the buffer of our GPT block. If the buffer is not yet full, move on to the next iteration of the loop.

if(i < BarsToLine)


The backpropagation method net.Backpropagation is called only after the cumulative sequence of the GPT block is filled. This time, in the parameters of the method, we pass a pointer to the object representing the target values. It is very important to check the result of the operation. If an error occurs, we perform the same operations as for an error in the feed-forward method.

if(!net.Backpropagation(result.At(k + i)))
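
The structure of one training window can be sketched as follows. This is standard C++ for illustration, not the script's code: the model calls are replaced by counters, and the uniform random choice of the window start is an assumption about how a random batch might be selected. The names `WindowStats` and `WalkWindow` are hypothetical.

```cpp
#include <cstddef>
#include <random>

struct WindowStats {
    std::size_t start;     // index of the first pattern in the window
    std::size_t forward;   // number of feed-forward passes
    std::size_t backward;  // number of backpropagation passes
};

// Walk one training window in chronological order. The first `barsToLine`
// patterns only fill the GPT buffer (forward pass only); the remaining
// `batchSize` patterns get both a forward and a backward pass.
WindowStats WalkWindow(std::size_t patterns, std::size_t barsToLine,
                       std::size_t batchSize, std::mt19937& rng)
{
    WindowStats s{0, 0, 0};
    const std::size_t window = barsToLine + batchSize;
    if (patterns < window)
        return s;                       // dataset too small for one window
    std::uniform_int_distribution<std::size_t> pick(0, patterns - window);
    s.start = pick(rng);
    for (std::size_t i = 0; i < window; ++i) {
        ++s.forward;                    // stands in for net.FeedForward(data.At(k + i))
        if (i < barsToLine)
            continue;                   // buffer not full yet: no gradient step
        ++s.backward;                   // stands in for net.Backpropagation(result.At(k + i))
    }
    return s;
}
```

With a buffer of 60 bars and a batch of 100 patterns, each window costs 160 forward passes but only 100 backward passes, which is the overhead of keeping the patterns in chronological order.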


Using the feed-forward and backpropagation methods, we have executed the respective algorithms for training our model. At this stage, the error gradient has already been propagated to each trainable parameter. All that remains is to update the weight matrices. However, we perform this operation not at every training iteration but only after accumulating a batch. In this particular case, we will update the weight matrices after completing the iterations of the nested loop.

//--- reconfigure the network weights


As you have seen in the model testing graphs, the model error dynamics almost never follow a smooth line. Saving the model with minimal error will allow us to save the most appropriate parameters for our model. Therefore, we first check the current model error and compare it to the minimum error achieved during training. If the error has dropped, we save the current model and update the minimum error variable.

//--- notify about the past epoch


Additionally, we have introduced the count counter. We will use it to count the number of update iterations from the last minimum error value. If its value exceeds the specified threshold (in the example, it is set to 10 iterations), then we interrupt the training process.

if(count >= 10)
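
The save-on-improvement and early-stop logic can be sketched as below. This is standard C++, not the script itself. It replays a list of already known error values instead of training a model, and the names `StopResult` and `TrainWithPatience` are hypothetical.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

struct StopResult {
    std::size_t stoppedAt;  // index of the iteration at which training stopped
    double bestLoss;        // minimum error seen so far
    std::size_t saves;      // how many times the model would have been saved
};

// Replay a sequence of per-iteration errors. A new minimum triggers a "save"
// and resets the counter; `patience` iterations in a row without a new
// minimum stop the process.
StopResult TrainWithPatience(const std::vector<double>& losses,
                             std::size_t patience)
{
    double best = std::numeric_limits<double>::max();
    std::size_t count = 0, saves = 0, epoch = 0;
    for (; epoch < losses.size(); ++epoch) {
        if (losses[epoch] < best) {
            best = losses[epoch];
            ++saves;        // the script would save the model here
            count = 0;
        } else {
            ++count;
        }
        if (count >= patience)
            break;          // no improvement for `patience` iterations
    }
    return {epoch, best, saves};
}
```

In the script the threshold is 10. The sketch keeps it as a parameter so that the behavior can be tried on short sequences.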


After completing a full training cycle, we will need to save the accumulated dynamics of the model error changes during the training process to a file. To do this, we have created the SaveLossHistory function. In the parameters, the function receives a string variable with the file name for storing the data and a vector of errors during the model training process.

In the function body, we open the file for writing. In this case, we use the file name that the user specified in the parameters. We immediately check the result. If an error occurs when opening the file, we inform the user and exit the function.

void SaveLossHistory(string path, const VECTOR &loss_history)


If the file was opened successfully, we organize a loop in which we write, one by one, all the values of the model error accumulation vector during training. After completing the full data writing loop, we close the file and inform the user about the location of the file.
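
For readers who want to reproduce the same open, write, and close sequence outside the terminal, here is a minimal standard C++ analogue. It is not the script's SaveLossHistory function and does not use the MQL5 file API. The name `WriteLossHistory` and the one-value-per-line format are assumptions.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Write the error values one per line. Returns false if the file
// cannot be opened or a write fails.
bool WriteLossHistory(const std::string& path,
                      const std::vector<double>& lossHistory)
{
    std::ofstream file(path);
    if (!file.is_open())
        return false;                 // report the failure to the caller
    for (double value : lossHistory)
        file << value << '\n';
    return static_cast<bool>(file);   // false if any write failed
}
```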

With this, our script for creating and training the model is complete, and we can begin training the model on the previously created dataset of non-normalized training data from the file study_data_not_norm.csv.

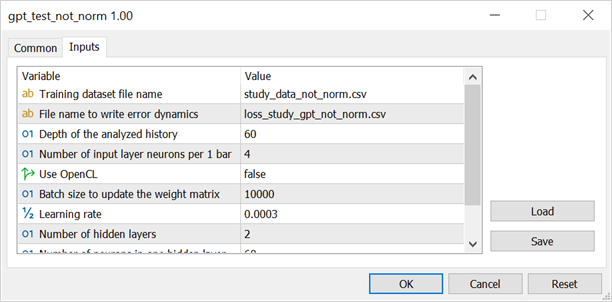

The next step is to start the model training process. Here you need to be patient, as the learning process is quite long. Its duration depends on the hardware used. For example, I started training a model with the parameters shown in the screenshot below.

On my Intel Core i7-1165G7 laptop, it takes 35-36 seconds to compute one batch between weight matrix updates. So, full training of the model with 5000 iterations of weight updates will take approximately 2 days of continuous operation. However, if you notice that training has halted and the minimum error hasn't changed for an extended period, you can manually stop the model training. If the achieved performance doesn't meet the requirements, you can continue training the model with different values for the learning rate and batch size for weight updates. The common approach to selecting parameters is as follows:

- The learning rate: training starts with a larger learning rate, and during training, we gradually decrease the learning rate.

- Weight matrix update batch size: training starts with a small batch and gradually increases.

Model training parameters

The techniques mentioned above allow initial fast and rough training of the model followed by finer tuning. If during the model training process, the error consistently increases, it indicates an excessively high learning rate. Using a large batch for weight matrix updates helps to adjust the weight matrices in the most prioritized direction, but it requires more time to perform operations between weight updates. On the other hand, a small batch leads to faster and more chaotic parameter updates, while still maintaining the overall trend. However, when using small batch sizes, it is recommended to decrease the learning rate to reduce model overfitting to specific parts of the training dataset.