Organizing parallel computing in the LSTM block

In previous chapters, we looked at the implementation of an LSTM block using MQL5. However, neural networks entered a new stage of development precisely with the advent of parallel computing technologies, which matter most for resource-intensive tasks such as recurrent neural networks. Therefore, it is important for us to add support for multi-threaded parallel computing to the LSTM block class.

As mentioned when we built the block algorithm in MQL5, our class already performs multi-threaded calculations for its individual components, because the gates of the LSTM block are objects of the previously discussed base neural layer class. Therefore, in this chapter, we only have to implement the missing parts:

- Consolidating and processing the data from the internal neural layers within the feed-forward pass.

- Propagating the error gradient from the output of the LSTM block to the internal neural layers within the backpropagation pass.

The task is clear, and since we already have an MQL5 implementation of the process, we also know the algorithm and the order of operations.

So we can proceed with the work. Let me remind you of the architecture of a multi-threaded computing process. The computations in parallel threads are carried out in an environment separate from the main program: the OpenCL context. Three main components are required to perform operations:

- The program of operations to be performed.

- The initial data for the operations.

- Process control commands (when to launch the program, how many threads to create, etc.).

Let's look at the implementation of these points.

4. Making additions to the OpenCL program

The first item is the program of operations. This means that we need to add new kernels to our OpenCL program to perform the additional operations we require. All the OpenCL program code is collected in the file opencl_program.cl. Open this file and add two new kernels to it: LSTMFeedForward and LSTMCalcHiddenGradient. The kernel names correspond to the names of our class methods, so it is easy to guess that the first one complements the feed-forward pass method, and the second one complements the error gradient backpropagation method.

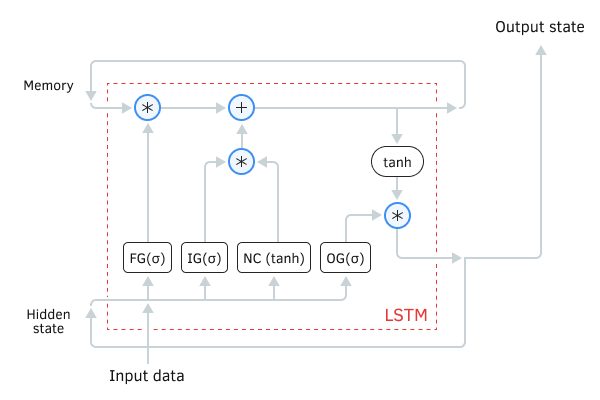

Recurrent LSTM block diagram

We start with the feed-forward kernel LSTMFeedForward. In its parameters, this kernel receives pointers to six data buffers (four source data buffers and two result buffers, one of which, memory, is also read as input) and one constant:

- forgetgate: pointer to the forget gate buffer (source data)

- inputgate: pointer to the input gate buffer (source data)

- outputgate: pointer to the output gate buffer (source data)

- newcontent: pointer to the new content buffer (source data)

- memory: pointer to the memory stream buffer (read as input, then overwritten with the updated state)

- hiddenstate: pointer to the hidden state stream (result buffer)

- outputs_total: number of elements in the data stream (constant)

```c
__kernel void LSTMFeedForward(__global TYPE *forgetgate,
```

At the beginning of the kernel, as before, we get the thread index, which identifies the data to be processed, and immediately determine the offset in the data buffers to the values we need.

```c
{
```

To improve performance, we will use vector arithmetic with variables of type TYPE4. Let me remind you that the TYPE macro substitution lets us quickly switch between double and float, depending on the required calculation accuracy and the OpenCL device used. Before performing the operations, we transfer the data from the global data buffers to local vector variables.

```c
TYPE4 fg = ToVect4(forgetgate, shift, 1, outputs_total, 0);
```
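The ToVect4 helper is not shown in this section. As a rough CPU-side illustration, assuming TYPE is float and that the helper reads four consecutive elements while filling positions past the end of the buffer with zeros, the packing could look like the plain C sketch below. The names vec4, load4 and store4 are hypothetical and are not part of the project; in this sketch, thread n would pass shift = 4 * n.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the OpenCL TYPE4 vector, assuming TYPE is float. */
typedef struct {
    float s[4];
} vec4;

/* Read four consecutive elements starting at 'shift'.
   Positions at or beyond 'total' are filled with zeros, so the last
   thread can safely work on a partial group of one to three elements. */
static vec4 load4(const float *buf, int shift, int total)
{
    assert(buf != NULL && shift >= 0);
    vec4 v;
    for (int k = 0; k < 4; ++k)
        v.s[k] = (shift + k < total) ? buf[shift + k] : 0.0f;
    return v;
}

/* Write a vector back, skipping positions at or beyond 'total'. */
static void store4(float *buf, vec4 v, int shift, int total)
{
    assert(buf != NULL && shift >= 0);
    for (int k = 0; k < 4; ++k)
        if (shift + k < total)
            buf[shift + k] = v.s[k];
}
```

In this sketch, a buffer of six elements is covered by two groups, and the second group reads two real values followed by two zeros without touching memory past the end of the buffer.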

Now, by analogy with the MQL5 code, we perform the arithmetic operations that update the memory stream. According to the LSTM algorithm, we first multiply the incoming memory stream by the forget gate value and then add the new content multiplied by the input gate value. After completing the operations, we write the updated memory stream back to the buffer.

```c
TYPE4 temp = mem * fg;
```

Next, we need to compute the new value of the hidden state stream. It is also passed to the output of the LSTM block for transmission to the next neural layer. Here we first normalize the current memory state with the hyperbolic tangent function and then multiply the result by the output gate value. The result is written to the data buffer.

```c
temp = tanh(temp) * og;
```
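The two steps of the feed-forward kernel correspond to the standard LSTM state update. Writing $f_t$, $i_t$ and $o_t$ for the forget, input and output gate values, $g_t$ for the new content, $c$ for the memory stream and $h_t$ for the hidden state (these symbols are ours, not names from the code), each element is computed as:

$$
\begin{aligned}
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

Here $\odot$ denotes element-wise multiplication, which is what the TYPE4 operations perform on four elements at a time.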

This completes the feed-forward kernel. Using the results of the internal layers of our recurrent LSTM block, we updated the memory stream and obtained the values that are provided at the output of the recurrent block.

In the second kernel, LSTMCalcHiddenGradient, we need to perform the reverse operation, that is, propagate the error gradient in the opposite direction: from the output of the recurrent block to the output of each internal neural layer. Because of the specifics of the backpropagation pass, this kernel uses 10 data buffers plus one constant:

- outputs: pointer to the result vector buffer (source data)

- gradients: pointer to the gradient vector buffer of the current layer (source data)

- inputgate: pointer to the input gate buffer (source data)

- outputgate: pointer to the output gate buffer (source data)

- newcontent: pointer to the new content buffer (source data)

- memory: pointer to the memory stream received from the previous iteration (source data)

- fg_gradients: pointer to the forget gate gradient buffer (result buffer)

- ig_gradients: pointer to the input gate gradient buffer (result buffer)

- og_gradients: pointer to the output gate gradient buffer (result buffer)

- nc_gradients: pointer to the new content gradient buffer (result buffer)

- outputs_total: number of elements in the data stream (constant)

```c
__kernel void LSTMCalcHiddenGradient(__global TYPE *outputs_
```

At the beginning of the kernel, we determine the thread ID and the offset in the data buffers to the values being processed.

```c
{
```

As in the forward pass kernel, we will use operations with vector variables of type TYPE4. Therefore, in the next step, we transfer the original data from global buffers to local vector variables.

```c
TYPE4 out = ToVect4(outputs, shift, 1, outputs_total, 0);
```

After the preparatory operations, we proceed to the mathematical part of the kernel. The formulas and their explanation were given when we built the process in MQL5, so this section presents only the OpenCL implementation.

When implementing this part in MQL5, we decided not to create an additional data buffer for the normalized memory state. In the kernel parameters, the memory pointer refers not to the current memory state but to the state that the recurrent block received at its input from the previous iteration of the forward pass. Therefore, before distributing the error gradient, we need to find the normalized memory state. Since the block output equals the normalized memory state multiplied by the output gate, we obtain it as the ratio of the output buffer value to the output gate value. To avoid division by zero, we add a small constant to the denominator.

```c
TYPE4 m = out / (og + 1.0e-37f);
```

Following the error gradient backpropagation algorithm, we first determine the error gradient at the output of the output gate neural layer. To do this, we multiply the error gradient at the output of our LSTM block by the partial derivative of the product with respect to the output gate, which equals the normalized memory state. We immediately write the result into the corresponding data buffer.

```c
//--- OutputGate gradient
```

Similarly, we determine the error gradient for the other multiplier, the normalized memory state: we multiply the error gradient at the output of our recurrent block by the output gate value.

Before propagating the gradient further to the remaining neural layers, we need to pass it back through the hyperbolic tangent function. In other words, we multiply the value obtained above by the derivative of the hyperbolic tangent, which equals one minus the square of the normalized memory state.

```c
//--- Adjust the memory gradient to the derivative TANH
```

Now we only need to propagate the error gradient to the remaining internal layers. The algorithm is the same for all of them; the only difference is the buffer that serves as the derivative of the product. For the input gate, this is the new content; for the new content, it is the input gate; and for the forget gate, it is the memory state from the previous iteration. After determining each error gradient, we immediately write its value to the appropriate buffer.

```c
//--- InputGate gradient
```
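Taken together, the kernel applies the following chain rule. The symbols are the same as in the standard LSTM update $c_t = f_t \odot c_{t-1} + i_t \odot g_t$, $h_t = o_t \odot \tanh(c_t)$, where $c_{t-1}$ is the memory received from the previous iteration, $E$ is the error, and $\varepsilon$ is the small constant $10^{-37}$. The results are gradients at the outputs of the internal layers, which is what the kernel is meant to deliver:

$$
\begin{aligned}
m &= \tanh(c_t) \approx \frac{h_t}{o_t + \varepsilon} \\
\frac{\partial E}{\partial o_t} &= \frac{\partial E}{\partial h_t} \odot m \\
\delta_c &= \frac{\partial E}{\partial h_t} \odot o_t \odot \left(1 - m^2\right) \\
\frac{\partial E}{\partial i_t} &= \delta_c \odot g_t \\
\frac{\partial E}{\partial g_t} &= \delta_c \odot i_t \\
\frac{\partial E}{\partial f_t} &= \delta_c \odot c_{t-1}
\end{aligned}
$$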

After completing the operations, we exit the kernel.

Thus, we have implemented the missing kernels for the feed-forward and backward passes of the recurrent LSTM block. This completes the changes to the OpenCL program, and we move on to the operations on the side of the main program.

4. Implementing functionality on the side of the main program

After changing the OpenCL program, we need to do the second part of the work and organize the process on the side of the main program. First, we create constants to identify the new kernels and their parameters. We add them to the constants previously created in the file defines.mqh.

```cpp
#define def_k_LSTMFeedForward 26
```

```cpp
//--- LSTM Feed Forward
```

When adding constants, we follow the previously defined naming rules. All kernel constants begin with the prefix def_k_, and parameter constants contain the kernel abbreviation: def_lstmff_ for feed-forward kernel parameters and def_lstmhgr_ for gradient backpropagation kernel parameters.

```cpp
//--- LSTM Hidden Gradients
```
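Assuming, as is usual for OpenCL, that each parameter constant is the zero-based index of an argument in the kernel signature, the values simply follow the order of the parameter lists given earlier. A sketch of the resulting block is shown below in C (the #define syntax is identical in MQL5). Only def_k_LSTMFeedForward = 26 and the def_k_LSTMHiddenGradients name come from the text; the value 27 is an assumption derived from SetKernelsCount(28) with IDs starting at 0, and the parameter constant names after the prefixes are hypothetical.

```c
#include <assert.h>

/* Kernel identifiers: 26 is given in the text; 27 is an assumption. */
#define def_k_LSTMFeedForward          26
#define def_k_LSTMHiddenGradients      27

//--- LSTM Feed Forward (argument indices in signature order)
#define def_lstmff_forgetgate           0
#define def_lstmff_inputgate            1
#define def_lstmff_outputgate           2
#define def_lstmff_newcontent           3
#define def_lstmff_memory               4
#define def_lstmff_hiddenstate          5
#define def_lstmff_outputs_total        6

//--- LSTM Hidden Gradients (argument indices in signature order)
#define def_lstmhgr_outputs             0
#define def_lstmhgr_gradients           1
#define def_lstmhgr_inputgate           2
#define def_lstmhgr_outputgate          3
#define def_lstmhgr_newcontent          4
#define def_lstmhgr_memory              5
#define def_lstmhgr_fg_gradients        6
#define def_lstmhgr_ig_gradients        7
#define def_lstmhgr_og_gradients        8
#define def_lstmhgr_nc_gradients        9
#define def_lstmhgr_outputs_total      10

/* Compile-time check: both kernel IDs must fit the count set by SetKernelsCount(28). */
static_assert(def_k_LSTMHiddenGradients < 28, "kernel ID exceeds kernel count");
```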

We then go to the file neuronnet.mqh, which contains the code of our neural network class. In the CNet::InitOpenCL method, we need to change the number of kernels used and the number of simultaneously open buffers.

```cpp
if(!m_cOpenCL.SetKernelsCount(28))
```

Changing the number of buffers is not critical for us, because our buffer creation method enlarges the array of buffer handles when necessary. The standard OpenCL.mqh library has no such functionality, so with it an insufficient buffer count may result in a runtime error.

Next, we create the kernels for use within our program, checking the result of each operation.
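The difference between the two approaches is easy to picture: a fixed-size array of handles fails when asked for an index it does not have, while a growable one simply enlarges itself. The following C sketch of a growable table is only an analogue of what our buffer creation method does (in MQL5, this would presumably be done with ArrayResize); the HandleTable type and its functions are hypothetical, and -1 marks an empty slot in this sketch.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical table of buffer handles that grows on demand. */
typedef struct {
    int *handles;   /* handle per buffer index, -1 = empty slot */
    int  count;     /* number of allocated slots */
} HandleTable;

/* Resize the table to n slots; new slots are marked empty. Returns 0 on failure. */
static int table_resize(HandleTable *t, int n)
{
    assert(t != NULL && n > 0);
    int *p = realloc(t->handles, (size_t)n * sizeof *p);
    if (p == NULL)
        return 0;
    for (int k = t->count; k < n; ++k)
        p[k] = -1;
    t->handles = p;
    t->count = n;
    return 1;
}

/* Store a handle at 'index', enlarging the table first if it is too small. */
static int table_store(HandleTable *t, int index, int handle)
{
    assert(t != NULL && index >= 0);
    if (index >= t->count && !table_resize(t, index + 1))
        return 0;
    t->handles[index] = handle;
    return 1;
}
```

With this design, an initial size that is too small costs only a reallocation instead of an out-of-range error.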

```cpp
if(!m_cOpenCL.KernelCreate(def_k_LSTMFeedForward, "LSTMFeedForward"))
```

```cpp
if(!m_cOpenCL.KernelCreate(def_k_LSTMHiddenGradients, "LSTMCalcHiddenGradient"))
```

This completes the preparatory work, and we move on to making changes directly to the code of the executable methods of our recurrent LSTM block class.

Following the order in which our neural network algorithm is executed, we start with the feed-forward method. In it, we first check that the data is present in the OpenCL context memory.

```cpp
bool CNeuronLSTM::FeedForward(CNeuronBase *prevLayer)
```

We then pass the handles of the created buffers to the kernel parameters, along with the outputs_total constant required for correct execution of the program code. Again, we check the result of each operation.

```cpp
//--- pass parameters to the kernel
```

This completes the preparatory stage. Let's move on to launching the kernel. First, we determine the number of required threads. Since the kernel body uses vector operations, we need four times fewer threads than there are elements in the buffers.

We write the calculated number of threads into the NDRange array and the zero offset in the data buffers into the off_set array. Then the kernel is added to the execution queue. If an error occurs while queuing the kernel, the m_cOpenCL.Execute function returns false, which we must check and handle.

```cpp
//--- launch the kernel
```
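The thread-count arithmetic described above can be sketched in C as follows. The LaunchGeometry type and lstm_geometry function are hypothetical, and rounding up (so that a buffer whose size is not a multiple of four still gets a thread for its last one to three elements) is an assumption on our part; the off_set and NDRange fields mirror the arrays named in the text.

```c
#include <assert.h>

/* Launch geometry for a one-dimensional kernel (field names mirror the text). */
typedef struct {
    unsigned int off_set[1];   /* offset in the data buffers */
    unsigned int NDRange[1];   /* number of threads */
} LaunchGeometry;

/* Each thread handles four elements, so a quarter of the buffer size is
   needed; rounding up (an assumption) covers a trailing partial group. */
static LaunchGeometry lstm_geometry(int buffer_size)
{
    assert(buffer_size >= 0);
    LaunchGeometry g;
    g.off_set[0] = 0;
    g.NDRange[0] = (unsigned int)((buffer_size + 3) / 4);
    return g;
}
```

For example, a buffer of 10 elements gives 3 threads, the last of which processes only two elements.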

This completes the work on the LSTM feed-forward method. Let's move on to making additions to the backpropagation method.

As with the feed-forward pass, we begin the error gradient distribution method CNeuronLSTM::CalcHiddenGradient by checking that the source data is present in the OpenCL context memory.

```cpp
bool CNeuronLSTM::CalcHiddenGradient(CNeuronBase *prevLayer)
```

```cpp
else // Block for working with OpenCL
```

Next, we repeat the same host-side algorithm for working with OpenCL kernels. After creating the necessary buffers in the OpenCL context memory, we pass the buffer handles and variable values to the kernel parameters. It is very important to check the result of every operation in the process.

```cpp
//--- pass parameters to the kernel
```

This concludes the preparatory work, and we move on to launching the kernel. Thanks to the vector operations in the kernel body, a full cycle of operations requires four times fewer threads than there are elements. So we first calculate this number and write it to the NDRange array, and we write the zero offset to the off_set array.

After this, we send our kernel to the execution queue.

```cpp
//--- launch the kernel
```

I might sound repetitive, but I want to stress the importance of checking the result of each operation. This is crucial, since an error in any operation can distort the entire result of our neural network or even cause a critical error that terminates the whole program.

With this, we have completed the work on the recurrent LSTM block class. We have organized the class to work in two environments:

- Implemented operation on the CPU using standard MQL5 tools.

- Created the ability to implement multi-threaded parallel calculations using OpenCL.

Now, we can evaluate the results of our work by creating and testing a recurrent neural network model.