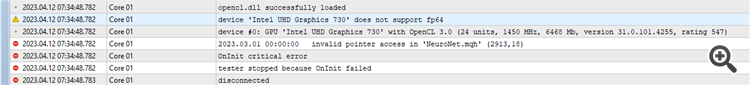

I am getting the following error:

2023.04.12 07:35:20.755 Core 01 2023.03.01 00:00:00 invalid pointer access in 'NeuroNet.mqh' (2913,18)

2023.04.12 07:35:20.755 Core 01 OnInit critical error

2023.04.12 07:35:20.755 Core 01 tester stopped because OnInit failed

Intel UHD 730

MetaTrader build 3661

What could be the cause?

2023.04.13 11:46:35.381 Core 1 2023.01.02 12:00:00 Error of execution kernel bool CNeuronMLMHAttentionOCL::SumAndNormilize(CBufferFloat*,CBufferFloat*,CBufferFloat*) MatrixSum: unknown OpenCL error 132640

What could be the cause?

Try using this library

Check out the new article: Neural networks made easy (Part 37): Sparse Attention.

In the previous article, we discussed relational models which use attention mechanisms in their architecture. One specific feature of these models is their intensive use of computing resources. In this article, we will consider one of the mechanisms for reducing the number of computational operations inside the Self-Attention block. This will increase the overall performance of the model.

We trained the model and tested the EA using EURUSD H1 historical data for March 2023. During training, the EA showed a profit over the testing period. However, the profit came from the average profitable trade being larger than the average losing trade, while the number of winning and losing positions was approximately the same. As a result, the profit factor was 1.12, and the recovery factor was 1.01.

Author: Dmitriy Gizlyk
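
To illustrate the point in the announcement about trade sizes: profit factor is gross profit divided by gross loss, so when the number of winning and losing trades is about equal, it reduces to roughly the ratio of the average win to the average loss. The sketch below uses hypothetical trade values chosen only to reproduce a ratio of 1.12; they are not the article's actual trades, and `profit_factor` is an illustrative helper, not part of the article's library.

```python
def profit_factor(wins, losses):
    """Gross profit divided by gross loss.

    Both arguments are lists of positive amounts; losses are passed
    as magnitudes, not as negative numbers.
    """
    gross_profit = sum(wins)
    gross_loss = sum(losses)
    if gross_loss == 0:
        raise ValueError("profit factor is undefined without losing trades")
    return gross_profit / gross_loss


# Hypothetical data: the same number of winning and losing trades,
# with an average win of 11.2 and an average loss of 10.0.
wins = [11.2] * 50
losses = [10.0] * 50

pf = profit_factor(wins, losses)
print(round(pf, 2))
```

With equal trade counts, the result depends only on the ratio of the average win to the average loss, which is why a strategy that wins about half the time can still end up with a profit factor slightly above 1.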