- Description of architecture and implementation principles

- Construction using MQL5

- Organizing parallel computing in convolutional networks using OpenCL

- Implementing a convolutional model in Python

- Practical testing of convolutional models

Description of architecture and implementation principles

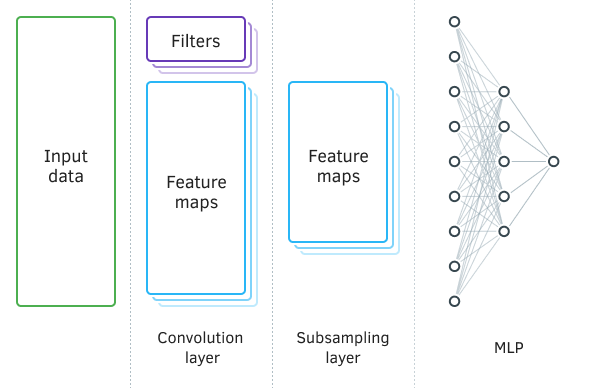

Convolutional networks, in comparison with a fully connected perceptron, have two new types of layers: convolutional (filter) and pooling (subsampling). Alternating, these layers highlight the main components and filter out noise in the original data while simultaneously reducing its dimensionality (volume). The reduced data is then fed into a fully connected perceptron for decision-making. Depending on the task to be solved, several groups of alternating convolutional and pooling layers can be used in sequence.

Convolutional neural network

The convolutional layer is responsible for recognizing objects in the source data set. In this layer, the original data is sequentially convolved with a small template (filter), which acts as the convolution kernel.

Convolution is an operation in functional analysis that, when applied to two functions f and g, returns a third function corresponding to the cross-correlation function of f(x) and g(-x). The operation of convolution can be interpreted as the "similarity" of one function to the mirrored and shifted copy of another.
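The relationship between convolution and cross-correlation can be illustrated on discrete one-dimensional arrays. The following is a minimal sketch in plain Python; the function names `cross_correlate` and `convolve` are illustrative and not taken from any library, and only the "valid" positions (where the kernel fits entirely inside the signal) are computed.

```python
def cross_correlate(f, g):
    """Slide g over f without mirroring it (valid positions only)."""
    positions = len(f) - len(g) + 1
    return [sum(f[i + k] * g[k] for k in range(len(g))) for i in range(positions)]


def convolve(f, g):
    """Discrete convolution: cross-correlation with the mirrored kernel."""
    return cross_correlate(f, g[::-1])


signal = [1, 2, 3, 4, 5]
kernel = [1, 0, -1]

corr = cross_correlate(signal, kernel)  # [-2, -2, -2]
conv = convolve(signal, kernel)         # [2, 2, 2]
```

Because the kernel weights are learned during training, the mirroring makes no practical difference to what a layer can represent, which is why many implementations compute cross-correlation and still call it convolution.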

In other words, the convolutional layer searches for a template element in the entire original sample. At the same time, on each iteration, the template is shifted across the array of original data with a specified step, which can be from 1 to the size of the template. If the magnitude of the shift step is smaller than the size of the template, then such convolution is called overlapping.

As a result of the convolution operation, we obtain a feature array that shows the similarity of the original data to the desired template at each iteration. Activation functions are used for data normalization. The size of the obtained array will be smaller than the array of original data, and the number of such arrays is equal to the number of templates (filters).
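The forward pass described above can be sketched for a one-dimensional input as follows. This is an illustration under stated assumptions rather than the implementation discussed later: the function name `conv_layer_1d` is hypothetical, there is no bias term or padding, and the hyperbolic tangent is chosen as the activation function only for the example.

```python
import math


def conv_layer_1d(inputs, filters, step, activation=math.tanh):
    """Return one feature array per filter (assumed: no bias, no padding)."""
    features = []
    for weights in filters:
        window = len(weights)
        # Number of positions at which the template fits into the input.
        count = (len(inputs) - window) // step + 1
        row = []
        for i in range(count):
            start = i * step
            total = sum(inputs[start + k] * weights[k] for k in range(window))
            row.append(activation(total))
        features.append(row)
    return features


inputs = [0.1, 0.5, 0.9, 0.5, 0.1, 0.5, 0.9]
filters = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]

# The step (2) is smaller than the template size (3): overlapping convolution.
features = conv_layer_1d(inputs, filters, step=2)
```

With 7 input elements, a template of size 3, and a step of 2, each feature array has (7 - 3) // 2 + 1 = 3 elements, and the number of feature arrays equals the number of filters.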

It is also important for us to note that the templates themselves are not specified during the design of the neural network but are selected during the training process.

Next, the pooling (subsampling) layer is used to reduce the size of the feature array and filter out noise. The use of this layer is based on the assumption that the similarity of the original data to the template is primary, while the exact coordinates of the feature in the array of original data are less important. This helps address the scaling issue, as it permits some variability in the distance between the sought-after objects.

At this stage, data is condensed by keeping the maximum or average value within a specified window. This way, only one value per data window is saved. Operations are carried out iteratively, shifting the window by a specified step with each new iteration. Pooling is performed separately for each array of features.

Pooling layers with a window and a step of two are often used, which makes it possible to halve the size of the feature array. However, in practice, it is also possible to use a larger window. Furthermore, pooling iterations can be carried out both with overlapping (when the step size is smaller than the window size) and without.
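A minimal sketch of the pooling operation for one feature array is shown below. The function name `pool_1d` and its parameters are illustrative assumptions, and the input values are made up for the example.

```python
def pool_1d(features, window=2, step=2, mode="max"):
    """Keep one value (maximum or average) per window position."""
    count = (len(features) - window) // step + 1
    pooled = []
    for i in range(count):
        chunk = features[i * step:i * step + window]
        pooled.append(max(chunk) if mode == "max" else sum(chunk) / window)
    return pooled


features = [1.0, 3.0, 2.0, 0.0, 5.0, 4.0]

pooled_max = pool_1d(features)                     # [3.0, 2.0, 5.0]
pooled_avg = pool_1d(features, mode="avg")         # [2.0, 1.0, 4.5]
overlapping = pool_1d(features, window=3, step=1)  # [3.0, 3.0, 5.0, 5.0]
```

With a window and a step of two, six input values are reduced to three. In the overlapping variant the step is smaller than the window, so neighboring windows share elements and the output shrinks less.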

At the output of the pooling layer, we obtain arrays of features of smaller dimensions.

Depending on the complexity of the tasks being solved, after the pooling layer, it is possible to use one or several groups of convolutional and pooling layers. The principles of their construction and functionality comply with the principles described above.

In the general case, after one or several groups of "convolution + pooling", the arrays of features obtained from all filters are gathered into a single vector and fed into a multilayer perceptron, which makes the neural network's decision.
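Gathering the feature arrays into a single vector can be sketched as a simple concatenation. The filter-by-filter ordering used here is an assumption made for the example; any fixed ordering works as long as it stays the same during training and inference.

```python
def flatten(feature_arrays):
    """Concatenate the per-filter feature arrays into one input vector."""
    return [value for array in feature_arrays for value in array]


# Two hypothetical pooled feature arrays, one per filter.
pooled = [[3.0, 2.0, 5.0], [1.0, 4.0, 0.0]]
perceptron_input = flatten(pooled)  # [3.0, 2.0, 5.0, 1.0, 4.0, 0.0]
```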

Convolutional neural networks are trained by the well-known method of error backpropagation. This method belongs to the supervised learning methods and implies propagating the error gradient from the output layer of neurons through the hidden layers to the input layer, adjusting the weights in the direction of the anti-gradient.

In the pooling layer, the error gradient is calculated for each element in the feature array, analogous to the gradients of neurons in a fully connected perceptron. The algorithm for transferring the gradient to the previous layer depends on the pooling operation used. If only the maximum value is taken, then the entire gradient is passed to the neuron with the maximum value. For the other elements within the pooling window, a zero gradient is set, as they did not influence the final result during the forward pass. If averaging is used within the window, then the gradient is evenly distributed among all elements within the window.
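The two gradient-routing rules can be sketched as follows. The function name `pool_backward_1d` is hypothetical, and two details are assumptions of this sketch: when several elements in a window share the maximum, the first one receives the gradient, and contributions from overlapping windows are summed.

```python
def pool_backward_1d(inputs, grad_out, window=2, step=2, mode="max"):
    """Pass the gradients of the pooled values back to the pooling inputs."""
    grad_in = [0.0] * len(inputs)
    for i, grad in enumerate(grad_out):
        start = i * step
        chunk = inputs[start:start + window]
        if mode == "max":
            # Only the element that produced the maximum gets the gradient.
            winner = start + chunk.index(max(chunk))
            grad_in[winner] += grad
        else:
            # Averaging: every element in the window gets an equal share.
            for k in range(window):
                grad_in[start + k] += grad / window
    return grad_in


inputs = [1.0, 3.0, 2.0, 0.0]
grad_out = [0.5, -1.0]

grad_max = pool_backward_1d(inputs, grad_out)              # [0.0, 0.5, -1.0, 0.0]
grad_avg = pool_backward_1d(inputs, grad_out, mode="avg")  # [0.25, 0.25, -0.5, -0.5]
```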

Weights are not used in the pooling operation, so nothing is adjusted in this layer during the training process.

Operations are somewhat more complicated when training the neurons of the convolutional layer. The error gradient is calculated for each element of the feature array and descends to the corresponding neurons of the previous layer. The process of training the convolutional layer is based on convolution and reverse convolution operations.

To propagate the error gradient from the pooling layer to the convolutional layer, first, the edges of the error gradient array obtained from the pooling layer are padded with zero elements, and then the resulting array is convolved with the convolution kernel rotated by 180°. The output is an array of error gradients equal in size to the input data array, in which the index of each gradient corresponds to the index of the matching neuron of the previous layer.
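For a one-dimensional layer, rotating the kernel by 180° reduces to reversing it. The sketch below assumes a step of 1, a forward pass that slides the unmirrored kernel over the input, and gradients that are already expressed with respect to the weighted sums (before the activation function). The function name `conv_backward_input_1d` is illustrative.

```python
def conv_backward_input_1d(grad_out, weights):
    """Gradient for the layer inputs: zero-pad the incoming gradients,
    then slide the reversed kernel over them (assumed step of 1)."""
    k = len(weights)
    padded = [0.0] * (k - 1) + list(grad_out) + [0.0] * (k - 1)
    flipped = weights[::-1]
    input_size = len(grad_out) + k - 1
    return [
        sum(padded[j + m] * flipped[m] for m in range(k))
        for j in range(input_size)
    ]


weights = [1.0, 2.0, 3.0]
grad_out = [1.0, 0.0, -1.0]  # one gradient per element of the feature array

grad_in = conv_backward_input_1d(grad_out, weights)  # [1.0, 2.0, 2.0, -2.0, -3.0]
```

Three output gradients and a kernel of size 3 give 3 + 3 - 1 = 5 input gradients, matching the size of the input that produced a three-element feature array in the forward pass.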

To obtain the weight deltas, the matrix of input values is convolved with the matrix of error gradients of this layer, rotated by 180°. The output is an array of deltas equal in size to the convolution kernel. The resulting deltas should be adjusted for the derivative of the activation function of the convolutional layer and for the learning rate. After that, the weights of the convolution kernel are changed by the value of the corrected deltas.
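A one-dimensional sketch of the weight update is given below. It assumes a step of 1 and that each incoming gradient has already been multiplied by the derivative of the activation function for its own output element, which is the usual order when applying the chain rule. Convolving with the reversed gradient array is the same as sliding the unreversed gradients over the inputs, which is what the loop does. The name `conv_weight_deltas_1d` and the learning rate of 0.5 are chosen only for the example.

```python
def conv_weight_deltas_1d(inputs, grad_out, learning_rate):
    """One delta per kernel weight: the sum of input * gradient products
    over all kernel positions, scaled by the learning rate."""
    kernel_size = len(inputs) - len(grad_out) + 1
    return [
        learning_rate * sum(grad_out[i] * inputs[i + m] for i in range(len(grad_out)))
        for m in range(kernel_size)
    ]


inputs = [1.0, 2.0, 3.0, 4.0, 5.0]
grad_out = [1.0, 0.0, -1.0]  # assumed: activation derivative already applied
weights = [0.5, 0.5, 0.5]

deltas = conv_weight_deltas_1d(inputs, grad_out, learning_rate=0.5)  # [-1.0, -1.0, -1.0]

# Move against the gradient (towards the anti-gradient).
updated = [w - d for w, d in zip(weights, deltas)]  # [1.5, 1.5, 1.5]
```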

It probably sounds rather hard to understand. Let's try to clarify these points while considering the code in detail.