One question remains: why is the absolute value of IN_RADIUS taken when normalising the data?

```mql5
double _dimension=fabs(IN_RADIUS)*((Low(StartIndex()+w+Index)-Low(StartIndex()+w+Index+1))-(High(StartIndex()+w+Index)-High(StartIndex()+w+Index+1)))
                  /fmax(m_symbol.Point(),fmax(High(StartIndex()+w+Index),High(StartIndex()+w+Index+1))-fmin(Low(StartIndex()+w+Index),Low(StartIndex()+w+Index+1)));
```

This seems unnecessary, since the cluster radius is a constant with a positive value.

Could this be an error, and should the absolute value be taken of the whole numerator instead?
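To make the question concrete, here is a small sketch of the two readings, written in Python for brevity rather than MQL5. It assumes IN_RADIUS is a positive constant and replaces the EA's Low()/High() calls with plain numbers; the function names are hypothetical. Note that the normalisation itself comes from dividing by the two-bar range, not from fabs(). With fabs() on the radius only, _dimension keeps the sign of the price term (negative when bar i is wider than bar i+1); with fabs() over the whole numerator, that sign is lost.

```python
# Hypothetical stand-ins: high_i/low_i are High(i)/Low(i) of bar i, and
# high_n/low_n are the values of bar i+1, where i = StartIndex()+w+Index.

def bar_range(high_i, high_n, low_i, low_n, point):
    """Denominator from the quoted code: combined range of both bars, floored at one point."""
    return max(point, max(high_i, high_n) - min(low_i, low_n))

def dimension_as_written(radius, high_i, high_n, low_i, low_n, point):
    """fabs() on the radius only: the result keeps the sign of the price term."""
    diff = (low_i - low_n) - (high_i - high_n)
    return abs(radius) * diff / bar_range(high_i, high_n, low_i, low_n, point)

def dimension_abs_numerator(radius, high_i, high_n, low_i, low_n, point):
    """Alternative from the question: absolute value of the whole numerator."""
    diff = (low_i - low_n) - (high_i - high_n)
    return abs(radius * diff) / bar_range(high_i, high_n, low_i, low_n, point)

# Bar i is wider than bar i+1: its high is 2 higher and its low is 2 lower.
args = dict(high_i=12.0, high_n=10.0, low_i=3.0, low_n=5.0, point=0.00001)
print(dimension_as_written(0.5, **args))     # negative
print(dimension_abs_numerator(0.5, **args))  # positive, same magnitude
```

For a positive radius the two versions differ only in sign, so the real question is whether the direction of the bar-to-bar change should survive into the clustering.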

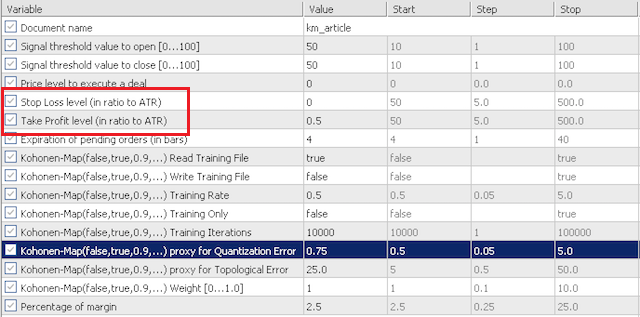

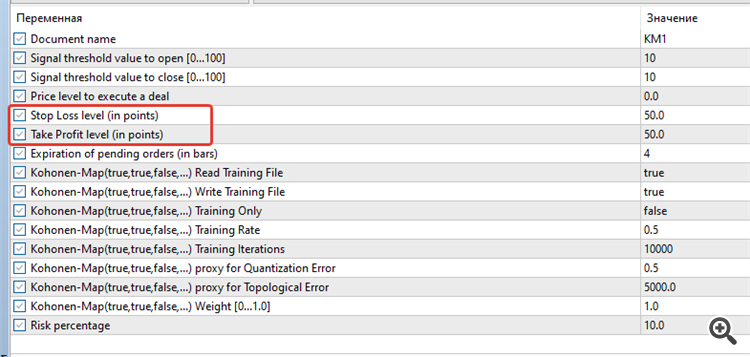

I assembled the Expert Advisor, but two parameters, Stop Loss and Take Profit, differ from those in the screenshot in the article:

As a result, not a single trade is opened.

Am I doing something wrong?

And how can I use another indicator, for example MACD, instead of ATR?


You can do it this way:

```mql5
//--- adapted from the standard CSignalMACD::InitMACD; declared as a CSignalKM member
//--- so it can use the m_MACD object added below (call it from InitIndicators)
bool CSignalKM::InitMACD(CIndicators *indicators)
  {
//--- add object to collection
   if(!indicators.Add(GetPointer(m_MACD)))
     {
      printf(__FUNCTION__+": error adding object");
      return(false);
     }
//--- initialize object
   if(!m_MACD.Create(m_symbol.Name(),m_period,m_period_fast,m_period_slow,m_period_signal,m_applied))
     {
      printf(__FUNCTION__+": error initializing object");
      return(false);
     }
//--- ok
   return(true);
  }
```

Also, in the protected section:

```mql5
protected:
   CiMACD             m_MACD;          // object-oscillator
   //--- adjusted parameters
   int                m_period_fast;   // the "period of fast EMA" parameter of the oscillator
   int                m_period_slow;   // the "period of slow EMA" parameter of the oscillator
   int                m_period_signal; // the "period of averaging of difference" parameter of the oscillator
   ENUM_APPLIED_PRICE m_applied;       // the "price series" parameter of the oscillator
```

and in the public section:

```mql5
   void              PeriodFast(int value)             { m_period_fast=value;   }
   void              PeriodSlow(int value)             { m_period_slow=value;   }
   void              PeriodSignal(int value)           { m_period_signal=value; }
   void              Applied(ENUM_APPLIED_PRICE value) { m_applied=value;       }
```

and again in the protected section:

```mql5
protected:
   //--- method of initialisation of the oscillator
   bool              InitMACD(CIndicators *indicators);
   //--- methods of getting data
   double            Main(int ind)   { return(m_MACD.Main(ind));   }
   double            Signal(int ind) { return(m_MACD.Signal(ind)); }
```

and finally:

```mql5
bool CSignalKM::OpenLongParams(double &price,double &sl,double &tp,datetime &expiration)
  {
   CExpertSignal *general=(m_general!=-1) ? m_filters.At(m_general) : NULL;
//---
   if(general==NULL)
     {
      m_MACD.Refresh(-1);
      //--- if a base price is not specified explicitly, take the current market price
      double base_price=(m_base_price==0.0) ? m_symbol.Ask() : m_base_price;
      //--- price overload that sets entry price to be based on MACD
      price =base_price;
      double _range=m_MACD.Main(StartIndex())+((m_symbol.StopsLevel()+m_symbol.FreezeLevel())*m_symbol.Point());
      //
```

But what's the point?


New article "MQL5 Wizard techniques you should know (Part 02): Kohonen Maps" has been published:

Today's trader is a philomath who is almost always (consciously or not) looking up new ideas, trying them out, and choosing to modify or discard them; an exploratory process that demands a fair amount of diligence. This places a premium on the trader's time and on avoiding mistakes. This series of articles will propose that the MQL5 Wizard should be a mainstay for traders. Why? Because by assembling new ideas with the MQL5 Wizard, the trader not only saves time and greatly reduces mistakes from duplicate coding, but is ultimately set up to channel his energy into the few critical areas of his trading philosophy.

A common misconception about these maps is that the functor data must be an image, or at least two-dimensional. Images such as the one below are often shared as being representative of what Kohonen maps are.

While that is not wrong, I want to highlight that the functor can, and for traders perhaps should, have a single dimension. So rather than reducing our high-dimensional data to a 2D map, we will map it onto a single line. Kohonen maps are by definition meant to reduce dimensionality, so in this article I want to take this a step further. The Kohonen map differs from regular neural networks both in the number of layers and in the underlying algorithm.
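Mapping onto a single line can be sketched as follows, in Python rather than MQL5 for brevity. It assumes an already trained functor held as a list of weight vectors; all names and values are hypothetical. Each feed vector, whatever its dimension, is reduced to one integer: the index of the neuron on the line whose weights are nearest in Euclidean distance.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def position_on_line(functor, feed):
    """Index of the best-matching neuron: the 1D coordinate assigned to a feed vector."""
    return min(range(len(functor)), key=lambda k: euclidean(functor[k], feed))

# Hypothetical trained 1D functor: 4 neurons, each holding a 3-dimensional weight vector.
functor = [
    [0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0],
    [2.0, 2.0, 2.0],
    [3.0, 3.0, 3.0],
]

# Each 3D feed vector is reduced to a single integer position on the line.
for feed in ([0.1, -0.2, 0.0], [1.9, 2.2, 2.1], [2.9, 3.5, 3.0]):
    print(feed, "->", position_on_line(functor, feed))
```

Because neighbouring neurons on a trained line hold similar weights, nearby positions correspond to similar feed vectors, which is what makes the single integer usable as a compressed feature.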

It is a single layer of neurons (usually a 2D grid, as mentioned above) rather than multiple layers. All the neurons in this layer, which we refer to as the functor, connect to the feed but not to each other, meaning the neurons are not influenced by each other's weights directly and update only with respect to the feed data. The functor layer is often a "map" that organizes itself at each training iteration according to the feed data. As a result, after training, each neuron in the functor layer holds adjusted weights of the same dimension as the feed, which allows one to calculate the Euclidean distance between any two such neurons.
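A minimal sketch of one training iteration on a 1D functor, again in Python. It assumes the common textbook update, a Gaussian neighbourhood around the best-matching neuron measured along the line, which may differ from the article's own implementation; names and values are hypothetical. Each neuron moves only toward the feed vector, never toward another neuron, and after the update the Euclidean distance between any two neurons can be read directly from their weights.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def train_step(functor, feed, learning_rate, sigma):
    """One Kohonen update on a 1D functor (list of weight vectors), in place.

    Every neuron moves toward the feed vector only; neurons farther along the
    line from the best-matching unit (BMU) move less, according to a Gaussian
    on their index distance. Returns the BMU index.
    """
    bmu = min(range(len(functor)), key=lambda k: euclidean(functor[k], feed))
    for k, weights in enumerate(functor):
        h = math.exp(-((k - bmu) ** 2) / (2.0 * sigma ** 2))  # neighbourhood weight
        for d in range(len(weights)):
            weights[d] += learning_rate * h * (feed[d] - weights[d])
    return bmu

# Hypothetical functor of 3 neurons with 2-dimensional weights.
functor = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
bmu = train_step(functor, [1.0, 0.0], learning_rate=0.5, sigma=1.0)
print("BMU:", bmu)
print("distance between neurons 0 and 2:", euclidean(functor[0], functor[2]))
```

In a full training loop, learning_rate and sigma would normally shrink over the iterations so the map settles; that schedule is also an assumption here.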

Author: Stephen Njuki