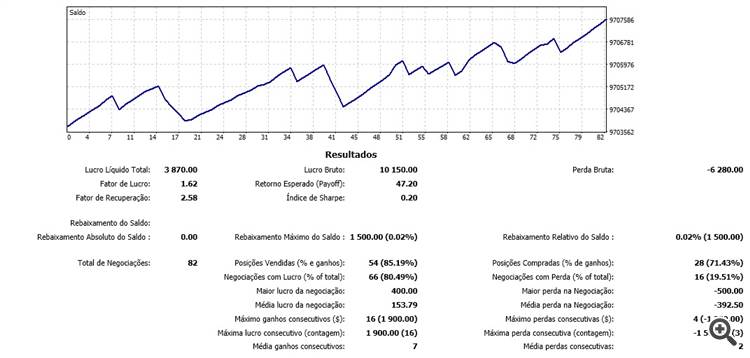

Thank you very much for your articles. Your algorithms are very good; for me they are the best neural network algorithms in MQL5, as they allow the network to be updated in real time, while the market is open, line by line. I am testing your algorithms on the Brazilian futures market and the results are encouraging: the forecast has reached an efficiency of over 80% over the last 100 bars, which has led to positive trades. I look forward to the next article.

New article Neural networks made easy (Part 11): A take on GPT has been published:

Author: Dmitriy Gizlyk

Interesting material, but the code is all in one huge spaghetti bowl and pretty hard to get to grips with. One class per file would have been easier.

It would be good to have a diagram showing how all the classes fit together.

Right now I'm at NeuronBase, which is derived from Object but uses NeuronProof, which is itself derived from NeuronBase, and also Layer, which uses practically every type of Neuron class defined.

Plenty of forward declarations are required in order to put everything in separate files and get to grips with it.

It is probably easier to start clean and use the concepts you have explained in the various chapters.

I tried running the spaghetti bowl; it made my GPU smoke and produced nothing very useful, unfortunately.

Anyhow thanks for the great materials.

:-)

Hi,

Check NN.chm. Maybe it will help you.

Hi,

Yes, it did, thank you.

The code isn't as bad now and I can see clearly how to change the net architecture if necessary.

I have everything in separate class files, plus a much smaller spaghetti bowl, which is still necessary because MT5 keeps both definitions and implementations in the same files.

I tried doing some training on GBPUSD using the code as is, but the results don't appear to be good: the error climbs from the natural 50% to 70% and stays there, with no improvement over subsequent epochs.

Any suggestions where to start tweaking?

BTW this line:

#define FileName Symb.Name()+"_"+EnumToString((ENUM_TIMEFRAMES)Period())+"_"+IntegerToString(HistoryBars,3)+StringSubstr(__FILE__,0,StringFind(__FILE__,".",0))

needs to be corrected to:

#define FileName Symb.Name()+"_"+EnumToString((ENUM_TIMEFRAMES)Period())+"_"+IntegerToString(HistoryBars)+StringSubstr(__FILE__,0,StringFind(__FILE__,".",0))
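The post does not say why the change is needed. One plausible reason, offered here as an assumption, is that `IntegerToString(HistoryBars,3)` pads the number to a width of three with the default fill symbol (a space), so a two-digit `HistoryBars` puts a space into the file name. The sketch below uses a hypothetical Python stand-in for `IntegerToString`, with illustrative symbol, timeframe and file-stem values, to show the difference:

```python
def integer_to_string(number, str_len=0, fill_symbol=" "):
    """Hypothetical Python stand-in for MQL5's IntegerToString:
    left-pads the decimal text of `number` to `str_len` characters."""
    return str(number).rjust(str_len, fill_symbol)


def file_name(symbol, timeframe, history_bars, source_stem, pad_width=0):
    """Mimics how the FileName macro concatenates its parts.
    All argument values used below are illustrative assumptions."""
    bars_text = integer_to_string(history_bars, pad_width)
    return symbol + "_" + timeframe + "_" + bars_text + source_stem


# Original macro: width 3, so 20 is padded with a leading space.
padded = file_name("GBPUSD", "PERIOD_H1", 20, "Fractal_OCL_AttentionMLMH", pad_width=3)

# Corrected macro: no width argument, so no padding is added.
unpadded = file_name("GBPUSD", "PERIOD_H1", 20, "Fractal_OCL_AttentionMLMH")

print(repr(padded))
print(repr(unpadded))
```

If this is indeed the cause, the corrected macro simply produces a name without the embedded space.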

At first I didn't really understand the article, because I wasn't getting the main idea.

When I asked ChatGPT itself about this text, it clarified things a lot and I understood most of what I was reading:

"The Transformer algorithm is a model used to process natural language, i.e. text. This model breaks text into a sequence of words (or "tokens"), and then performs a series of operations on each of these words to better understand their meaning.

One of the operations the model performs is self-attention, which consists of calculating the importance that each word has in relation to the other words in the sequence. To do this, the model uses three vectors: the query vector, the key vector and the value vector, which are calculated for each word.

The model then calculates the attention, which is the importance that each word has in relation to the other words in the sequence. It uses a mathematical function called softmax to normalise the attention coefficients.

Finally, the model combines all the self-attention operations to produce a final result, which is used to predict the meaning of the text.

In short, the Transformer algorithm uses complex mathematical operations to understand the meaning of the text."
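The self-attention step described in the quote can be sketched in a few lines. This is a minimal single-head illustration in plain Python, not the article's MQL5/OpenCL implementation. The function names, the toy input and the identity weight matrices are assumptions made for the example, and the division by the square root of the key size follows the usual Transformer formulation rather than anything stated in the quote.

```python
import math


def softmax(scores):
    """Normalise a list of scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def self_attention(tokens, w_query, w_key, w_value):
    """Single-head scaled dot-product self-attention.

    Returns the attended output vectors and the attention weights.
    """
    # Query, key and value vectors are computed for every token.
    queries = [matvec(w_query, t) for t in tokens]
    keys = [matvec(w_key, t) for t in tokens]
    values = [matvec(w_value, t) for t in tokens]
    scale = math.sqrt(len(keys[0]))  # assumption: standard 1/sqrt(d) scaling

    outputs, all_weights = [], []
    for q in queries:
        # Score this token's query against every key, then normalise.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # The output is the weighted sum of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: three 2-dimensional "tokens" and identity projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(tokens, identity, identity, identity)
```

Each row of `weights` sums to 1 and shows how much one token attends to every token in the sequence. For price data, a "token" would be the feature vector of one bar rather than a word.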

Hi again :) I found the problem. The OpenCL configuration in the include folder was failing: I tested my system, and the GPU doesn't support the code, only the CPU does. After a small change it works perfectly, but slowly :S

New article Neural networks made easy (Part 11): A take on GPT has been published:

Perhaps one of the most advanced models among currently existing language neural networks is GPT-3, the maximal variant of which contains 175 billion parameters. Of course, we are not going to create such a monster on our home PCs. However, we can view which architectural solutions can be used in our work and how we can benefit from them.

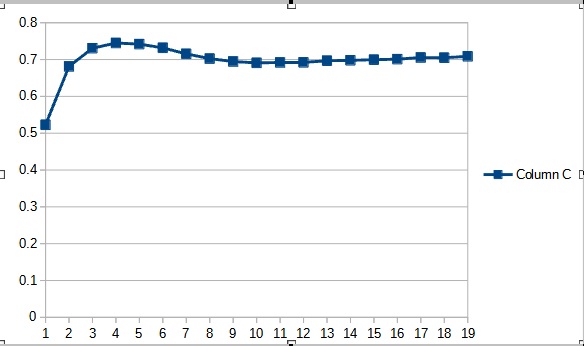

The new neural network class was tested on the same data set used in previous tests: EURUSD on the H1 timeframe, with historical data from the last 20 candlesticks fed into the neural network.

The test results have confirmed the assumption that more parameters require a longer training period. In the first training epochs, an Expert Advisor with fewer parameters shows more stable results. However, as the training period is extended, an Expert Advisor with a large number of parameters shows better values. In general, after 33 epochs the error of Fractal_OCL_AttentionMLMH_v2 decreased below the error level of the Fractal_OCL_AttentionMLMH EA, and it further remained low.
